- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

my software is very sensitive to low latency and i'm trying to find what cause the latency and how to resolve it.

this is pseudo code of my software

timer_func() {

do some calc

register new timer

}

main(){

while(1){

check if timer ready ?

if ready - timer_func

}

}

i'm measuring the time it takes the timer function to run. i'm doing 10000 iterations.

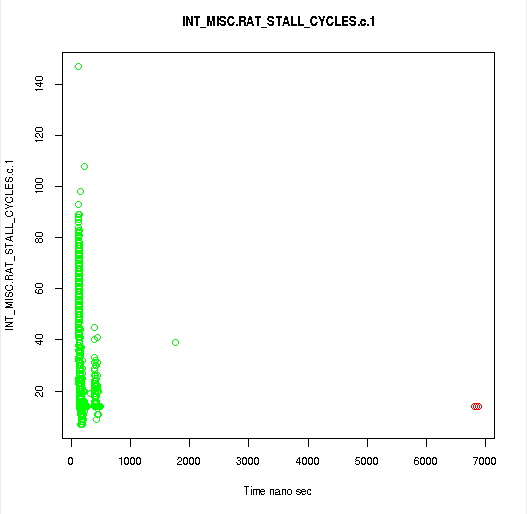

i found that some that almost all the time it takes less then 200 nano to run the timer code but some times around 10 times it takes 6.5 micro second !!!?

i used libpfm to measure cpu events and found out that when it takes 6.5 micro second i'm seeing RAT_STALLS:FLAGS

How can i solve this issue ?

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you be more precise and post RAT_STALLS events?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

>>>i used libpfm to measure cpu events and found out that when it takes 6.5 micro second i'm seeing RAT_STALLS:FLAGS>>>

Sorry in my previous answer I did not spot FLAGS event. That stall is related to some flags being set in EFLAGS register when some instruction depends on flag being set by previous instruction and that flag(s) were not set. I suppose that your code is probably performing integer comparison and jumping on result.

For example: sbc reg,reg ; jc cs:<some_location>

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

One thing i don't understand why it takes 6.5 microsecond ? (this is a lot of time to wait).

how can i found out what the cpu is doing during this time ?

how can i found where to instruction that wait for the FLAGS ? i can't use vtune because the timer function takes less then 1 percent of the process. if i call the timer function more frequent the slow iteration disappear.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I think that you should try to check timer function disassembly and search for the occurrence of instruction(s) which set EFLAGS register. I suppose that there should be conditional code execution which is triggered by EFLAGS settings.

I know that VTune will not be able to profile code which runs < 1ms. Regarding 6.5 microsecond execution time I suppose that maybe your code is interrupted by some more privileged thread/ISR , but I am not sure about that.

>>>how can i found out what the cpu is doing during this time ?>>>

Usually this information can be obtained by running CPU profiling/monitoring tool. On Windows you can use Windows Performance Recorder (formerly Xperf) which can show you CPU load breakdown by thread/function. I do not know if Linux has similar tool.

In your case I will try to double check the results of libpfm by running VTune.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

libpfm result are supported by vtune runs.

my run is single process without thread that in pin to specific affinity no context switch or system calls are made during the run. all the memory is pre-allocated.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The 6.5 microsecond latency strongly suggests that the processor core is being taken away from you to handle an interrupt or other kernel function.

It is possible to map IO interrupts to target other processor cores, but every core has to take timer interrupts.

It might be interesting to check the interval between the "slow" iterations. If these are commonly separated by a fixed interval (e.g., 1 millisecond), then the overhead you are seeing is probably due to the interrupt handler for the OS process scheduler.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

So I think that slower execution can be due to RAT_STALLS:FLAGS event.

Can you describe me how timer function measures the execution time? Does it use RDTSC machine code instruction or maybe HPET timer?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@John

Do you know if Linux scheduler dispatches ISR to be executed on core0 only? I suppose that there is a possibility of OP code being interrupted by more privileged thread/ISR.

thanks in advance.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

the server i'm using is configure for low latency, interrupts affinity is set to core 0 (the only interrupt is timer interrupts).

if i call the timer function more frequent less then 1 millisecond i don't see slow iteration but if it's slower then 1 millisecond i see the problem.

i checked for interrupts, context switch and system call using libpfm and during the test there where none.

i'm measure time with rdtsc.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What is the VTune output? Is it able to resolve IP of the code which caused RAT_STALL:FLAGS?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

On SMP systems the Linux kernel uses "local timer interrupts" on all cores to perform various housekeeping tasks -- for example it updates the kernel data structures that keep track of how long the process has been running. Look for "local timer interrupt" in general and the "smp_local_timer_interrupt" function in the Linux kernel source for more details.

The local timer interrupt is maskable, so if you are in the kernel you can disable it temporarily. For a piece of code that executes in a short amount of time, this is sometimes a reasonable way to determine whether the behavior is intrinsic to the hardware or due to an interrupt. The best way to do this is in a loadable kernel module that is not loaded by default, so that if you mess something up a simple reboot is all you need to recover.

On my systems it looks like the only non-maskable interrupts are the performance monitoring overflow interrupts. These will occur whenever code is running, so you might also need to temporarily disable the NMI watchdog timer to avoid these.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

i can't see the timer function in vtune because it takes less then 1 percent of the total run.

NMI interrupts are disable on my server.

i jest want to make sure that what you suggest is to create a kernel module that disable the local timer interrupt on the core that i'm running and to check if i still see the slow iterations. did i understood correctly ?

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

>>>i can't see the timer function in vtune because it takes less then 1 percent of the total run.>>>

I know that. You wrote that VTune confirmed RAT_STALL:FLAGS event as reported by libpfm so my intention was to ask you for VTune output screenshot.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

If you want to verify that the slow iterations are caused by interrupts, then you could build a loadable kernel module to test this -- but you probably don't need to.

Approach 1 -- no kernel stuff needed: The test you are doing is quite short -- 10,000 iterations * 200 ns/iteration = 2 milliseconds -- but it is still longer than the typical local timer interrupt period of 1 millisecond. If you decrease the number of iterations so that the expected execution time is less than 1 millisecond, then you should get some runs with no slow iterations. For example, if you run 1000 iterations, the expected execution time would be 0.2 milliseconds, and you would expect about 1 out of 5 runs to experience a local timer interrupt (assuming the standard 1 millisecond local timer interrupt interval). Decreasing the number of iterations would also reduce the overhead of storing the timer values -- assuming 8 Bytes per timer value, you can only hold ~4000 in the L1 data cache, then you would start slowing down (slightly) due to L1 to L2 writebacks. With 1000 iterations you should write the 1000 timer values immediately before the loop, then as the loop runs each store into that array should hit in the L1 data cache.

If this approach provides any indication of avoiding slow iterations, it is also possible to change the local timer interrupt interval as an independent degree of freedom. I am not sure if this can be done without a kernel rebuild...

Approach 2 -- loadable kernel module: The system will probably crash if you disable interrupts for very long, but the test you are doing is quite short so if you embed it in the loadable kernel module there should be no problem. If the 10,000 iterations take 200ns each, then the elapsed time should only be 2 milliseconds.

The loadable kernel module will need to:

- allocate storage for the timing data

- zero-fill the timing array

- save the current interrupt flags

- disable interrupts

- run the test code

- re-enable interrupts

- print the relevant data to the kernel log file

I recommend trying the first approach -- it is disturbingly easy to crash systems while debugging kernel modules.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

i'll run with vtune and share the result.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

i'm using sandybrige and ivybridge. i check HW_INTERRUPTS_Received event there where none.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hmmm...

Vol 3 of the SW developer's guide shows an event 0xC8 "HW_INT_RCV" that counts hardware interrupts on P6, Core Solo & Duo, Atom, Core, Westmere, Nehalem -- but not for Sandy Bridge or Ivy Bridge. The EventSelect value of 0xC8 on Haswell now points to a TSX-related event.

The 0xCB event is used for MEM_LOAD_RETIRED counts on Atom, Core2, Nehalem, Westmere, Core. It is defined to count RS-related stalls on Silvermont, and is not defined for Sandy Bridge/IvyBridge or Haswell.

Looking over the VTune database files, I see the 0xC8 event (HW_INT_RCV) defined only for Pentium M, Atom and Core 2 processors, with KNC having a hardware interrupt counter event with a different EventSelect code (0x27).

This history suggests that the event might have become unreliable beginning with Nehalem processors?

Looking at the libpfm4 source in the PAPI-5.3.0 distribution, I see that Event 0xCB is defined as "HW_INTERRUPTS", but I don't see any Intel documentation that supports this usage.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Here is attempt #3 at posting this message... stupid browsers...

Hello John, I don't know where the documentation for the event is. I've used it before with reliable results on ivybridge and haswell.

Hello Amir: Another thing you can try is tracing your program. On linux, using 'perf' or 'trace-cmd', you can monitor all context switches, interrupts and timers to see exactly how your program is interacting with the OS. I think you are using linux.... you can do the same on Windows with ETW. Let me know if you need a command line to do this.

Pat

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page