Hi,

I quantized my ONNX FP32 model to an ONNX INT8 model using Intel Neural Compressor.

When I try to load the quantized model to run inference, ONNX Runtime fails with an error saying the model is invalid.

Error:

File "testOnnxModel.py", line 178, in inference

session = ort.InferenceSession(onnx_file, sess_options=sess_options, providers=['CPUExecutionProvider'])

File "/root/anaconda3/envs/versa_benchmark/lib/python3.7/site-packages/onnxruntime/capi/onnxruntime_inference_collection.py", line 335, in __init__

self._create_inference_session(providers, provider_options, disabled_optimizers)

File "/root/anaconda3/envs/versa_benchmark/lib/python3.7/site-packages/onnxruntime/capi/onnxruntime_inference_collection.py", line 370, in _create_inference_session

sess = C.InferenceSession(session_options, self._model_path, True, self._read_config_from_model)

onnxruntime.capi.onnxruntime_pybind11_state.Fail: [ONNXRuntimeError] : 1 : FAIL : Load model from rappnet2_int8.onnx failed:This is an invalid model. Error: two nodes with same node name (model/rt_block/layer_normalization/moments/SquaredDifference:0_QuantizeLinear).

I am able to quantize and run the TensorFlow version of the same model without this problem.

Please help with this.

TIA,

Anand Viswanath A
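The error above says that two nodes in the graph share one name. A minimal sketch for confirming which names are repeated before handing the file to ONNX Runtime is shown here. It assumes the `onnx` Python package is installed; the helper names `find_duplicate_node_names` and `check_model` are hypothetical and not part of any library. It only inspects the top-level graph, not subgraphs, and it skips empty names on the assumption that unnamed nodes do not trigger this check.

```python
from collections import Counter


def find_duplicate_node_names(nodes):
    """Return {name: count} for every non-empty node name used more than once."""
    counts = Counter(node.name for node in nodes if node.name)
    return {name: n for name, n in counts.items() if n > 1}


def check_model(path):
    """Load an ONNX file and report repeated node names in its top-level graph."""
    # Imported lazily so the pure helper above can be used without onnx installed.
    import onnx

    model = onnx.load(path)
    return find_duplicate_node_names(model.graph.node)
```

Calling `check_model("rappnet2_int8.onnx")` on the quantized file should list the `..._QuantizeLinear` name from the error message if it really occurs more than once in the graph.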

Hi,

Thank you for posting in Intel Communities.

Could you please share the following details?

1. Sample reproducer code

2. Exact steps and commands used

3. OS details

Regards,

Diya

Hi,

Please find the details below.

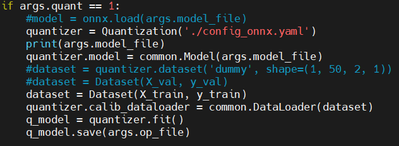

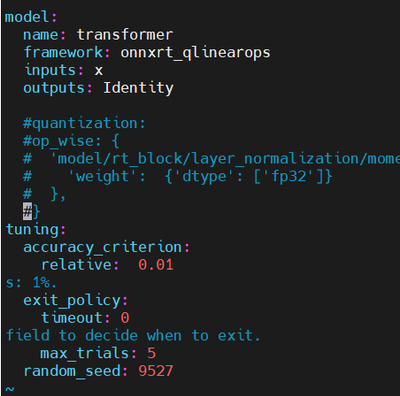

Code:

Config:

Steps:

Run the Python script containing the quantization code.

The script takes an ONNX FP32 model that was converted from a frozen TensorFlow FP32 model using tf2onnx.

Loading the resulting INT8 ONNX model for inference produces the error above.

OS:

Linux sdp 5.4.0-110-generic #124-Ubuntu

Regards,

Anand Viswanath A
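As a possible stopgap for the steps described above, the quantized file can be post-processed so that every node name is unique before it is loaded. This is only a sketch of a workaround, not a fix confirmed in this thread and not part of Intel Neural Compressor. The function names and the `_dup<N>` suffix are hypothetical. It relies on ONNX graphs being wired by tensor names rather than node names, so renaming nodes should leave connectivity unchanged. If the duplicated nodes also write to the same output tensor name, the model would remain invalid and this would not help.

```python
def make_node_names_unique(nodes):
    """Rename repeated non-empty node names in place.

    Returns a dict mapping each new name to the original name it replaced.
    The first node carrying a given name keeps it; later ones get a suffix.
    """
    used = {node.name for node in nodes if node.name}  # every name already taken
    seen = set()  # names encountered so far while walking the list
    renamed = {}
    for node in nodes:
        original = node.name
        if not original:
            continue  # unnamed nodes are left alone
        if original not in seen:
            seen.add(original)
            continue
        # Pick the first suffix that does not collide with any existing name.
        suffix = 1
        candidate = f"{original}_dup{suffix}"
        while candidate in used:
            suffix += 1
            candidate = f"{original}_dup{suffix}"
        node.name = candidate
        used.add(candidate)
        seen.add(candidate)
        renamed[candidate] = original
    return renamed


def fix_model(src_path, dst_path):
    """Load an ONNX file, de-duplicate node names, and save the result."""
    # Imported lazily so make_node_names_unique works without onnx installed.
    import onnx

    model = onnx.load(src_path)
    renamed = make_node_names_unique(model.graph.node)
    onnx.save(model, dst_path)
    return renamed
```

For example, `fix_model("rappnet2_int8.onnx", "rappnet2_int8_fixed.onnx")` would write a renamed copy (the output file name is only an illustration) that can then be passed to `ort.InferenceSession`. Even if the copy loads, it would be worth comparing its outputs against the FP32 model, since duplicate names may indicate that the quantizer inserted redundant nodes.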

Hi,

As discussed privately, please share the sample reproducer code used for inference, along with the exact steps followed.

Regards,

Diya

Hi,

We have not heard back from you.

Could you please share the sample reproducer code used for inference, along with the exact steps followed, so that we can try to resolve the issue on our end?

Regards,

Diya

Hi,

We have not heard back from you. This thread will no longer be monitored by Intel. If you need further assistance, please post a new question.

Thanks and Regards,

Diya
