I'm trying to parallelyzing my application using OpenMP. OpenCV (built using IPP for best efficiency) is used as external library.

These are the Google Drive links to my VTune results:

c755823 basic KMP_BLOCKTIME=0 30 runs : basic hotspot with environment variable KMP_BLOCKTIME set to 0 on 30 runs of the same input

c755823 basic 30 runs : same as above, but with default KMP_BLOCKTIME=200

c755823 advanced KMP_BLOCKTIME=0 30 runs : same as first, but advanced hotspot

On my Intel i7-4700MQ the actual wall-clock time of the application on average on 10 runs is around 0.73 seconds. I compile the code with icpc 2017 update 3 with the following compiler flags:

INTEL_OPT=-O3 -ipo -simd -xCORE-AVX2 -parallel -qopenmp -fargument-noalias -ansi-alias -no-prec-div -fp-model fast=2 -fma -align -finline-functions

INTEL_PROFILE=-g -qopt-report=5 -Bdynamic -shared-intel -debug inline-debug-info -qopenmp-link dynamic -parallel-source-info=2 -ldlIn addition I set KMP_BLOCKTIME=0 because the default value (200) was generating an huge overhead.

We can divide the code in 3 parallel regions (wrapped in only one #pragma parallel for efficiency) and a previous serial one, which is around 25% of the algorithm (and it can't be parallelized).

I'll try to describe them (or you can skip to the code structure directly):

- We create a

parallelregion in order to avoid the overhead to create a new parallel region. The final result is to populate the rows of a matrix obejct,cv::Mat descriptor. We have 3 sharedstd::vectorobjects: (a)blurswhich is a chain of blurs (not parallelizable) usingGuassianBlurby OpenCV (which uses the IPP implementation of guassian blurs) (b)hessResps(size known, say 32) (c)findAffineShapeArgs(unkown size, but in order of thousands of elements, say 2.3k) (d)cv::Mat descriptors(unkown size, final result). In the serial part, we populate `blurs, which is a read only vector. - In the first parallel region,

hessRespsis populated usingblurswithout any synchronization mechanism. - In the second parallel region

findLevelKeypointsis populated usinghessRespsas read only. SincefindAffineShapeArgssize is unkown, we need a local vectorlocalfindAffineShapeArgswhich will be appended tofindAffineShapeArgsin the next step - Since

findAffineShapeArgsis shared and its size is unkown, we need acriticalsection where eachlocalfindAffineShapeArgsis appended to it. - In the third parallel region, each

findAffineShapeArgsis used to generate the rows of the finalcv::Mat descriptor. Again, sincedescriptorsis shared, we need a local versioncv::Mat localDescriptors. - A final

criticalsectionpush_backeachlocalDescriptorstodescriptors. Notice that this is extremely fast sincecv::Matis "kinda" of a smart pointer, so wepush_backpointers.

This is the code structure:

cv::Mat descriptors;

std::vector<Mat> blurs(blursSize);

std::vector<Mat> hessResps(32);

std::vector<FindAffineShapeArgs> findAffineShapeArgs;//we don't know its tsize in advance

#pragma omp parallel

{

//compute all the hessianResponses

#pragma omp for collapse(2) schedule(dynamic)

for(int i=0; i<levels; i++)

for (int j = 1; j <= scaleCycles; j++)

{

hessResps[/**/] = hessianResponse(/*...*/);

}

std::vector<FindAffineShapeArgs> localfindAffineShapeArgs;

#pragma omp for collapse(2) schedule(dynamic) nowait

for(int i=0; i<levels; i++)

for (int j = 2; j < scaleCycles; j++){

findLevelKeypoints(localfindAffineShapeArgs, hessResps[/*...*], /*...*/); //populate localfindAffineShapeArgs with push_back

}

#pragma omp critical{

findAffineShapeArgs.insert(findAffineShapeArgs.end(), localfindAffineShapeArgs.begin(), localfindAffineShapeArgs.end());

}

#pragma omp barrier

#pragma omp for schedule(dynamic) nowait

for(int i=0; i<findAffineShapeArgs.size(); i++){

{

findAffineShape(findAffineShapeArgs[i]);

}

#pragma omp critical{

for(size_t i=0; i<localRes.size(); i++)

descriptors.push_back(localRes[i].descriptor);

}

}At the end of the question, you can find FindAffineShapeArgs.

I'm using Intel Amplifier to see hotspots and evaluate my application.

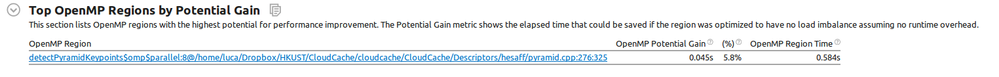

The OpenMP Potential Gain analsysis says that the Potential Gain if there would be perfect load balancing would be 5.8%, so we can say that the workload is balanced between different CPUs.

This i the CPU usage histogram for the OpenMP region (remember that this is the result of 10 consecutive runs):

So as you can see, the Average CPU Usage is 7 cores, which is good.

This OpenMP Region Duration Histogram shows that in these 10 runs the parallel region is executed always with the same time (with a spread around 4 milliseconds):

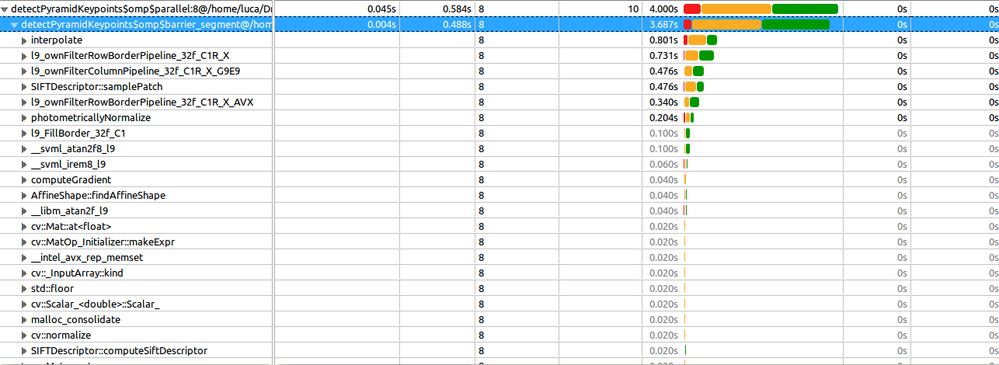

This is the Caller/Calee tab:

For you knowledge:

interpolateis called in the last parallel regionl9_ownFilter*functions are all called in the last parallel regionsamplePatchis called in the last parallel region.hessianResponseis called in the second parallel region

Now, my first question is: how should I interpret the data above? As you can see, in many of the functions half of the time the "Effective Time by Utilization` is "ok", which would probably become "Poor" with more cores (for example on a KNL machine, where I'll test the application next).

Finally, this is the Wait and Lock analysis result:

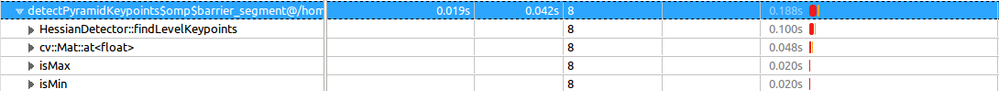

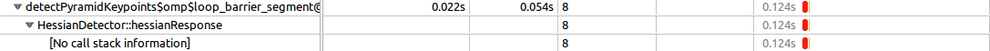

Now, this is the first weird thing: line 276 Join Barrier (which corresponds to the most expensive wait object) is#pragma omp parallel`, so the beginning of the parallel region. So it seems that someone spawned threads before. Am I wrong? In addition, the wait time is longer than the program itself (0.827s vs 1.253s of the Join Barrier that I'm talking about)! But maybe that refers to the waiting of all threads (and not wall-clock time, which is clearly impossible since it's longer than the program itself).

Then, the Explicit Barrier at line 312 is #pragma omp barrier of the code above, and its duration is 0.183s.

Looking at the Caller/Callee tab:

As you can see, most of wait time is poor, so it refers to one thread. But I'm sure that I'm understanding this. My second question is: can we interpret this as "all the threads are waiting just for one thread who is staying behind?".

FindAffineShapeArgs definition:

struct FindAffineShapeArgs

{

FindAffineShapeArgs(float x, float y, float s, float pixelDistance, float type, float response, const Wrapper &wrapper) :

x(x), y(y), s(s), pixelDistance(pixelDistance), type(type), response(response), wrapper(std::cref(wrapper)) {}

float x, y, s;

float pixelDistance, type, response;

std::reference_wrapper<Wrapper const> wrapper;

};