Hi Folks,

I'm currently involved in a project investigating the NUMA awareness and CPU affinity of a new file system. My method is to choose a baseline file system that is well known to be NUMA-friendly, and then compare its QPI traffic with that of the new file system when writing the same amount of data.

I have a question about QPI traffic and the count of last-level cache (LLC) misses fulfilled by remote DRAM and remote cache. I assumed the two must be strongly correlated, since QPI traffic should be caused by remote memory accesses, until I saw the following stats from VTune:

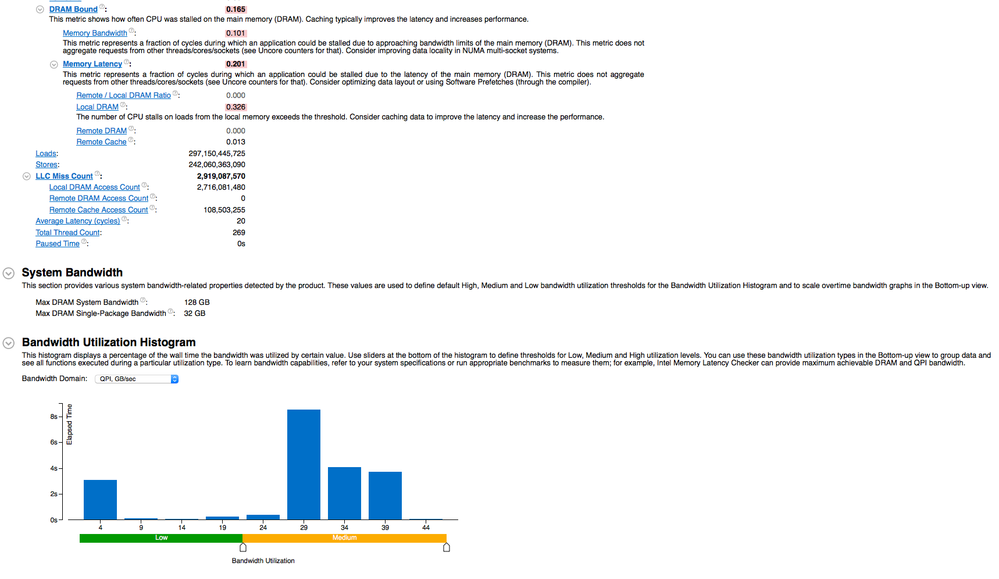

These are the stats for the baseline file system:

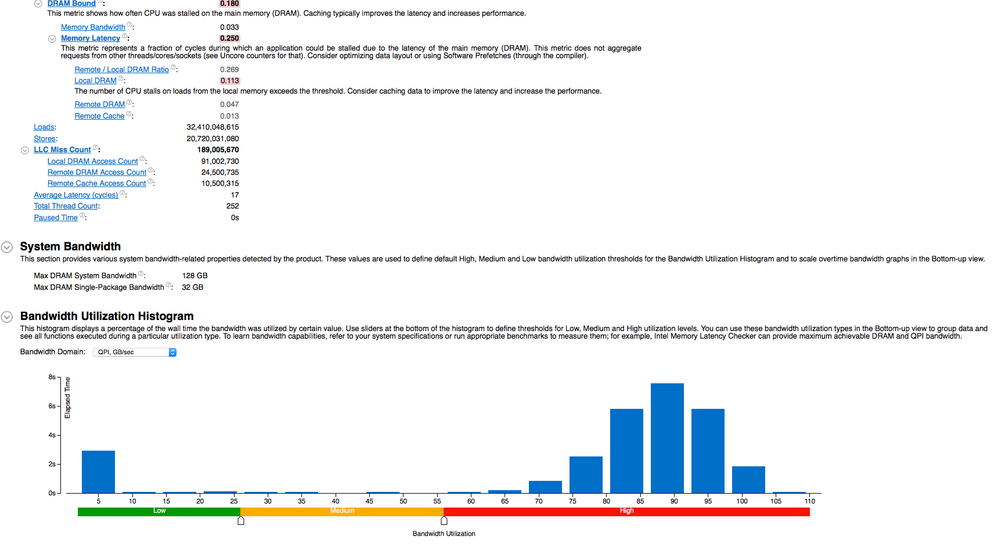

These are the stats for the new file system:

As the graphs above show, the new file system produced far more QPI traffic than the baseline (the integral of QPI bandwidth utilization should be the total traffic), but its count of LLC misses fulfilled by remote memory and cache is far lower: 108M for the baseline file system versus 35M for the new one.

This can also be verified with the summary stats from the CLI. The average QPI bandwidth of the baseline file system:

Average Bandwidth

| Package | Total, GB/sec:Self |
| --- | --- |
| package_0 | 12.181 |
| package_1 | 12.103 |
| package_2 | 13.169 |
| package_3 | 12.696 |

And the corresponding number for the new file system:

Average Bandwidth

| Package | Total, GB/sec:Self |
| --- | --- |
| package_0 | 15.720 |
| package_1 | 14.895 |
| package_2 | 18.983 |
| package_3 | 18.589 |
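For a quick comparison of the two CLI summaries, here is a minimal Python sketch that computes the relative change per package and for all packages combined. The dictionary and function names are illustrative only; the numbers are copied from the two summaries above.

```python
# Average QPI bandwidth per package (GB/sec), copied from the two CLI summaries.
baseline = {"package_0": 12.181, "package_1": 12.103,
            "package_2": 13.169, "package_3": 12.696}
new_fs = {"package_0": 15.720, "package_1": 14.895,
          "package_2": 18.983, "package_3": 18.589}


def relative_increase(old, new):
    """Fractional increase of the summed bandwidth, new vs. old."""
    return sum(new.values()) / sum(old.values()) - 1.0


# Per-package change, then the change over all four packages together.
for pkg in baseline:
    print(f"{pkg}: {new_fs[pkg] / baseline[pkg] - 1.0:+.1%}")
print(f"all packages: {relative_increase(baseline, new_fs):+.1%}")
```

Summed over the four packages, the new file system's average bandwidth comes out about 36% higher than the baseline's. These are rates, so they say nothing yet about total traffic unless the elapsed times are also taken into account.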

I have tried both enabling and disabling the hardware prefetcher, but the results are about the same.

Please share your thoughts; any help is appreciated.


You should specify the hardware of the system under test. Xeon E5 and Xeon E7 are quite different, and each generation (v1,v2,v3,v4) has a different set of performance counter bugs. Starting with Xeon E5 v2, the QPI snooping mode also has a significant impact on QPI traffic counts (and types of transactions).

It is not clear that there is enough information provided here to compute all of the necessary metrics for comparison, but the QPI values for the "new" filesystem are certainly suspicious. The three values that I see don't appear consistent:

- The number of LLC misses satisfied by remote memory plus remote cache with the "new" filesystem is only about 1/3 of the corresponding number with the "old" filesystem.

- The bandwidth histograms show an increase of the most common utilization from 29% with the "old" filesystem to 90% with the "new" filesystem.

- The CLI results show an increase of roughly 36% in the average QPI bandwidth for the "new" filesystem compared to the "old".

Some of these metrics may be overall QPI traffic and some might be QPI data traffic, but it is still hard to understand how these could all be correct.

It would help to know how much data you requested to be moved to the filesystem and how long the two tests took (though this is often tricky with filesystem tests).

As a general suggestion on methodology, I recommend comparing cases based on raw counts rather than rates whenever possible. You can always divide by the corresponding elapsed times to get rates, but if you start by comparing rates, you can easily get confused about what portion of the change is due to a change in the raw counts and what portion is due to the change in elapsed time.
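To illustrate the point about counts versus rates, here is a minimal Python sketch. It relies only on the identity count = rate × elapsed time; the function name and the input numbers are hypothetical and are not measurements from this thread.

```python
def split_change(rate_old, secs_old, rate_new, secs_new):
    """Split the change in a raw count into a rate factor and a time factor.

    Since count = rate * elapsed time,
    count_new / count_old = (rate_new / rate_old) * (secs_new / secs_old).
    """
    rate_factor = rate_new / rate_old
    time_factor = secs_new / secs_old
    return rate_factor, time_factor, rate_factor * time_factor


# Hypothetical numbers, for illustration only.
rate_factor, time_factor, count_factor = split_change(10.0, 20.0, 12.0, 30.0)
print(f"rate x{rate_factor:.2f}, time x{time_factor:.2f}, "
      f"raw count x{count_factor:.2f}")
```

In this made-up case the rate rises by only 20%, yet the raw count rises by 80% because the run also takes 50% longer. Looking at the rate alone would hide most of the change.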


@John, thanks for the reply.

I ran the test on a node with 'E7-4890 v2' (Ivy Bridge) processors. The node has 4 CPUs installed, and I picked 8 cores from each CPU (NUMA node) to perform this test. The hardware prefetcher is disabled by running the command 'wrmsr 0x1a5 0xf'. The operating system is CentOS 7, and I used a RAM disk as the storage. The 'old' file system is a variation of ext4 and the 'new' file system is ZFS. ZFS checksumming is disabled to make the comparison fair. Writing the same amount of data took 20 s with ext4 and 28 s with ZFS.

Indeed, I should have posted the raw data here. From what I have seen, the raw data from the QPI counters match the bandwidth I posted here. These are the raw data for ext4:

UNC_Q_TxL_FLITS_G0.DATA[UNIT0] 1071065448

UNC_Q_TxL_FLITS_G0.DATA[UNIT1] 1077260432

UNC_Q_TxL_FLITS_G0.DATA[UNIT2] 1091149208

UNC_Q_TxL_FLITS_G0.NON_DATA[UNIT0] 19773969221

UNC_Q_TxL_FLITS_G0.NON_DATA[UNIT1] 19776281198

UNC_Q_TxL_FLITS_G0.NON_DATA[UNIT2] 19775481404

UNC_Q_RxL_FLITS_G1.DRS_DATA[UNIT0] 1077104896

UNC_Q_RxL_FLITS_G1.DRS_DATA[UNIT1] 1070909728

UNC_Q_RxL_FLITS_G1.DRS_DATA[UNIT2] 1091002800

UNC_Q_RxL_FLITS_G2.NCB_DATA[UNIT0] 155080

UNC_Q_RxL_FLITS_G2.NCB_DATA[UNIT1] 155384

UNC_Q_RxL_FLITS_G2.NCB_DATA[UNIT2] 145832

The raw data for ZFS:

UNC_Q_TxL_FLITS_G0.DATA[UNIT0] 23075740240

UNC_Q_TxL_FLITS_G0.DATA[UNIT1] 22566842928

UNC_Q_TxL_FLITS_G0.DATA[UNIT2] 22444417296

UNC_Q_TxL_FLITS_G0.NON_DATA[UNIT0] 43433140164

UNC_Q_TxL_FLITS_G0.NON_DATA[UNIT1] 43427824763

UNC_Q_TxL_FLITS_G0.NON_DATA[UNIT2] 43416876640

UNC_Q_RxL_FLITS_G1.DRS_DATA[UNIT0] 22566156256

UNC_Q_RxL_FLITS_G1.DRS_DATA[UNIT1] 23075830560

UNC_Q_RxL_FLITS_G1.DRS_DATA[UNIT2] 22443872160

UNC_Q_RxL_FLITS_G2.NCB_DATA[UNIT0] 557176

UNC_Q_RxL_FLITS_G2.NCB_DATA[UNIT1] 559568

UNC_Q_RxL_FLITS_G2.NCB_DATA[UNIT2] 559752

From the raw data above, ZFS produced about 20 times as many QPI data flits as ext4 when writing the same amount of data. Part of the reason is that nothing has been done in ZFS for NUMA awareness; another part is the write amplification problem in ZFS.

What I don't understand is that I thought the LLC miss count should be correlated with the QPI traffic. As I mentioned earlier, I used a RAM disk for testing, so each actual IO to storage will usually cause a cache miss. Is there any optimization inside the CPU that lets it bypass the cache for large data transfers?
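The ratios can be checked directly from the raw counts. The following is a minimal Python sketch; the dictionary layout and function name are illustrative, and the values are copied from the two listings above (UNIT0 to UNIT2 in order).

```python
# Raw flit counts per QPI unit, copied from the listings above.
ext4 = {
    "UNC_Q_TxL_FLITS_G0.DATA": [1071065448, 1077260432, 1091149208],
    "UNC_Q_TxL_FLITS_G0.NON_DATA": [19773969221, 19776281198, 19775481404],
    "UNC_Q_RxL_FLITS_G1.DRS_DATA": [1077104896, 1070909728, 1091002800],
    "UNC_Q_RxL_FLITS_G2.NCB_DATA": [155080, 155384, 145832],
}
zfs = {
    "UNC_Q_TxL_FLITS_G0.DATA": [23075740240, 22566842928, 22444417296],
    "UNC_Q_TxL_FLITS_G0.NON_DATA": [43433140164, 43427824763, 43416876640],
    "UNC_Q_RxL_FLITS_G1.DRS_DATA": [22566156256, 23075830560, 22443872160],
    "UNC_Q_RxL_FLITS_G2.NCB_DATA": [557176, 559568, 559752],
}


def flit_ratios(old, new):
    """Ratio new/old of each event's count, summed over all units."""
    return {event: sum(new[event]) / sum(old[event]) for event in old}


ratios = flit_ratios(ext4, zfs)
for event, ratio in ratios.items():
    print(f"{event:30s} ZFS/ext4 = {ratio:6.2f}x")
```

With these counts, the data flit events (TxL DATA and RxL DRS_DATA) come out about 21 times higher for ZFS, while the NON_DATA flits are only about 2.2 times higher. The "20 times" figure therefore applies to data flits, not to all QPI flits.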

@John, please let me know if you want more data.


I don't know which counters VTune is using in the reports above, but I would guess that the LLC misses reported are only those due to accesses from the cores. IO DMA traffic will cause QPI traffic without causing core-based LLC misses. A non-NUMA-aware filesystem is likely to perform DMA writes to buffers on the "wrong" socket more often than a NUMA-aware filesystem.
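As a rough plausibility check of this explanation, the sketch below estimates what fraction of the received QPI data bytes the reported core LLC misses could account for. It rests on several assumptions: a 64-byte cache line, 8 bytes of payload per QPI data flit (as described in Intel's uncore performance monitoring documentation), and that the VTune miss counts (108M and 35M) and the uncore flit counts cover the same interval and the same set of sockets, which the thread does not confirm. The names used are illustrative.

```python
CACHE_LINE_BYTES = 64     # cache line size on these Xeon processors
FLIT_PAYLOAD_BYTES = 8    # assumption: 64 payload bits per QPI data flit

# LLC misses satisfied by remote memory plus remote cache, as reported
# (approximately) earlier in the thread.
remote_llc_misses = {"ext4": 108e6, "zfs": 35e6}

# Received data response flits (UNC_Q_RxL_FLITS_G1.DRS_DATA), UNIT0..UNIT2.
drs_data_flits = {
    "ext4": [1077104896, 1070909728, 1091002800],
    "zfs": [22566156256, 23075830560, 22443872160],
}


def core_miss_share(fs):
    """Fraction of received QPI data bytes that core LLC misses could explain."""
    miss_bytes = remote_llc_misses[fs] * CACHE_LINE_BYTES
    qpi_bytes = sum(drs_data_flits[fs]) * FLIT_PAYLOAD_BYTES
    return miss_bytes / qpi_bytes


for fs in remote_llc_misses:
    print(f"{fs}: core LLC misses cover about {core_miss_share(fs):.1%} "
          f"of DRS data bytes")
```

Under these assumptions the core-side misses cover roughly a quarter of the received data bytes for ext4 and well under 1% for ZFS. That would be consistent with most of the ZFS QPI data traffic coming from something other than core LLC misses, though it does not identify the source.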

The Uncore counters have the ability to count many different types of CBo accesses -- including those associated with different types of IO traffic -- but all of this is very minimally documented and I don't have the infrastructure required to test any of these traffic types.
