Hi, I am using the VTune command line to analyze an MPI program, but I have run into some problems.

1) Unable to analyze (single node)

Command:

vtune -collect hpc-performance -k collect-affinity=true -trace-mpi -result-dir /home/hpcadmin/hys/hys_vtune/vtune_projects_dir/test_mpi_dir/mpi_heartdemo_hpc-performance_ppn8_omp3_1node_170 -- mpiexec -genv I_MPI_DEBUG=4 -n 16 -ppn 8 -hosts c1 /home/hpcadmin/hys/hys_vtune/vtune_test_code/Cardiac_demo/build/heart_demo -m /home/hpcadmin/hys/hys_vtune/vtune_test_code/Cardiac_demo/mesh_mid -s /home/hpcadmin/hys/hys_vtune/vtune_test_code/Cardiac_demo/setup_mid.txt -t 100

problems:

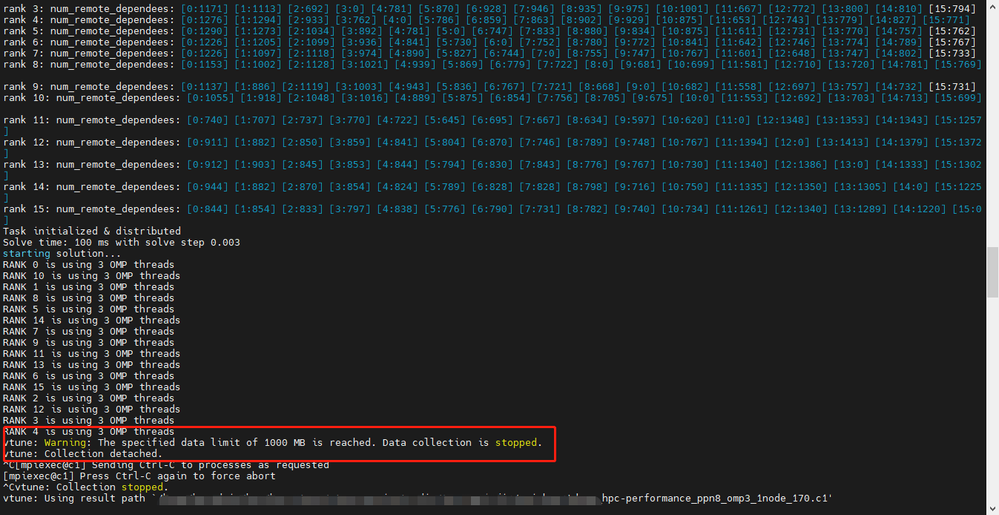

but it stops here (as shown in the red box). You need to press Ctrl + C to continue collecting,Can you let VTune start analyzing automatically.

2) Profiling an MPI run on multiple nodes: the processes on one of the nodes are killed

Command:

vtune -collect hpc-performance -k collect-affinity=true -trace-mpi -result-dir /home/hpcadmin/hys/hys_vtune/vtune_projects_dir/test_mpi_dir/mpi_heartdemo_hpc-performance_ppn8_omp3_2node_172 -- mpiexec -genv I_MPI_DEBUG=4 -n 16 -ppn 8 -hosts c1,c2 /home/hpcadmin/hys/hys_vtune/vtune_test_code/Cardiac_demo/build/heart_demo -m /home/hpcadmin/hys/hys_vtune/vtune_test_code/Cardiac_demo/mesh_mid -s /home/hpcadmin/hys/hys_vtune/vtune_test_code/Cardiac_demo/setup_mid.txt -t 100

Output:

vtune: Analyzing data in the node-wide mode. The hostname (c2) will be added to the result path/name.

vtune: Peak bandwidth measurement started.

vtune: Peak bandwidth measurement finished.

vtune: Warning: To enable hardware event-based sampling, VTune Profiler has disabled the NMI watchdog timer. The watchdog timer will be re-enabled after collection completes.

vtune: Collection started. To stop the collection, either press CTRL-C or enter from another console window: vtune -r /home/hpcadmin/hys/hys_vtune/vtune_projects_dir/test_mpi_dir/mpi_heartdemo_hpc-performance_ppn8_omp3_2node_172.c2 -command stop.

[0] MPI startup(): Intel(R) MPI Library, Version 2021.2 Build 20210302 (id: f4f7c92cd)

[0] MPI startup(): Copyright (C) 2003-2021 Intel Corporation. All rights reserved.

[0] MPI startup(): library kind: release

[0] MPI startup(): libfabric version: 1.11.0-impi

[0] MPI startup(): libfabric provider: verbs;ofi_rxm

[0] MPI startup(): File "/opt/intel/oneapi/mpi/2021.2.0/etc/tuning_icx_shm-ofi_verbs-ofi-rxm.dat" not found

[0] MPI startup(): Load tuning file: "/opt/intel/oneapi/mpi/2021.2.0/etc/tuning_icx_shm-ofi.dat"

[0] MPI startup(): File "/opt/intel/oneapi/mpi/2021.2.0/etc/tuning_icx_shm-ofi.dat" not found

[0] MPI startup(): Load tuning file: "/opt/intel/oneapi/mpi/2021.2.0/etc/tuning_clx-ap_shm-ofi.dat"

MPI rank 1 has started on c1

MPI rank 3 has started on c1

MPI rank 7 has started on c1

MPI rank 5 has started on c1

MPI rank 6 has started on c1

MPI rank 4 has started on c1

MPI rank 2 has started on c1

MPI rank 9 has started on c2

MPI rank 13 has started on c2

MPI rank 14 has started on c2

MPI rank 15 has started on c2

MPI rank 11 has started on c2

MPI rank 12 has started on c2

MPI rank 10 has started on c2

MPI rank 8 has started on c2

[0] MPI startup(): Rank Pid Node name Pin cpu

[0] MPI startup(): 0 1202863 c1 {0,1,2,3,4,5,6,56,57,58,59,60,61,62}

[0] MPI startup(): 1 1202864 c1 {7,8,9,10,11,12,13,63,64,65,66,67,68,69}

[0] MPI startup(): 2 1202865 c1 {14,15,16,17,18,19,20,70,71,72,73,74,75,76}

[0] MPI startup(): 3 1202866 c1 {21,22,23,24,25,26,27,77,78,79,80,81,82,83}

[0] MPI startup(): 4 1202867 c1 {28,29,30,31,32,33,34,84,85,86,87,88,89,90}

[0] MPI startup(): 5 1202868 c1 {35,36,37,38,39,40,41,91,92,93,94,95,96,97}

[0] MPI startup(): 6 1202869 c1 {42,43,44,45,46,47,48,98,99,100,101,102,103,104}

[0] MPI startup(): 7 1202870 c1 {49,50,51,52,53,54,55,105,106,107,108,109,110,111}

[0] MPI startup(): 8 2475730 c2 {0,1,2,3,4,5,6,56,57,58,59,60,61,62}

[0] MPI startup(): 9 2475732 c2 {7,8,9,10,11,12,13,63,64,65,66,67,68,69}

[0] MPI startup(): 10 2475734 c2 {14,15,16,17,18,19,20,70,71,72,73,74,75,76}

[0] MPI startup(): 11 2475737 c2 {21,22,23,24,25,26,27,77,78,79,80,81,82,83}

[0] MPI startup(): 12 2475740 c2 {28,29,30,31,32,33,34,84,85,86,87,88,89,90}

[0] MPI startup(): 13 2475743 c2 {35,36,37,38,39,40,41,91,92,93,94,95,96,97}

[0] MPI startup(): 14 2475746 c2 {42,43,44,45,46,47,48,98,99,100,101,102,103,104}

[0] MPI startup(): 15 2475749 c2 {49,50,51,52,53,54,55,105,106,107,108,109,110,111}

MPI rank 0 has started on c1

Number of Nodes: 28187

Number of Cells: 112790

Root init took 0.180209 secs

rank 0: r_start=0 r_end=1761 r_len=1762

rank 1: r_start=1762 r_end=3523 r_len=1762

rank 2: r_start=3524 r_end=5285 r_len=1762

rank 3: r_start=5286 r_end=7047 r_len=1762

rank 4: r_start=7048 r_end=8809 r_len=1762

rank 5: r_start=8810 r_end=10571 r_len=1762

rank 6: r_start=10572 r_end=12333 r_len=1762

rank 7: r_start=12334 r_end=14095 r_len=1762

rank 8: r_start=14096 r_end=15857 r_len=1762

rank 9: r_start=15858 r_end=17619 r_len=1762

rank 10: r_start=17620 r_end=19381 r_len=1762

rank 11: r_start=19382 r_end=21142 r_len=1761

rank 12: r_start=21143 r_end=22903 r_len=1761

rank 13: r_start=22904 r_end=24664 r_len=1761

rank 14: r_start=24665 r_end=26425 r_len=1761

rank 15: r_start=26426 r_end=28186 r_len=1761

rank 0: non_local_ids.size(): 14787

rank 1: non_local_ids.size(): 13859

rank 2: non_local_ids.size(): 13381

rank 3: non_local_ids.size(): 13508

rank 4: non_local_ids.size(): 13314

rank 5: non_local_ids.size(): 12779

rank 6: non_local_ids.size(): 12766

rank 7: non_local_ids.size(): 12449

rank 8: non_local_ids.size(): 12310

rank 9: non_local_ids.size(): 11962

rank 10: non_local_ids.size(): 12027

rank 11: non_local_ids.size(): 12098

rank 12: non_local_ids.size(): 13454

rank 13: non_local_ids.size(): 13766

rank 14: non_local_ids.size(): 13952

rank 15: non_local_ids.size(): 13578

rank 0: non_loc_dependecies: (1:337) (2:565) (3:1171) (4:1276) (5:1290) (6:1226) (7:1226) (8:1153) (9:1137) (10:1055) (11:740) (12:911) (13:912) (14:944) (15:844)

rank 1: non_loc_dependecies: (0:324) (2:519) (3:1113) (4:1294) (5:1273) (6:1205) (7:1097) (8:1002) (9:886) (10:918) (11:707) (12:882) (13:903) (14:882) (15:854)

rank 2: non_loc_dependecies: (0:538) (1:537) (3:692) (4:933) (5:1034) (6:1099) (7:1118) (8:1128) (9:1119) (10:1048) (11:737) (12:850) (13:845) (14:870) (15:833)

rank 3: non_loc_dependecies: (0:1045) (1:1051) (2:675) (4:762) (5:892) (6:936) (7:974) (8:1021) (9:1003) (10:1016) (11:770) (12:859) (13:853) (14:854) (15:797)

rank 4: non_loc_dependecies: (0:1047) (1:1209) (2:925) (3:781) (5:781) (6:841) (7:890) (8:939) (9:943) (10:889) (11:722) (12:841) (13:844) (14:824) (15:838)

rank 5: non_loc_dependecies: (0:1023) (1:1157) (2:998) (3:870) (4:786) (6:730) (7:827) (8:869) (9:836) (10:875) (11:645) (12:804) (13:794) (14:789) (15:776)

rank 6: non_loc_dependecies: (0:1003) (1:1048) (2:1024) (3:928) (4:859) (5:747) (7:744) (8:779) (9:767) (10:854) (11:695) (12:870) (13:830) (14:828) (15:790)

rank 7: non_loc_dependecies: (0:1009) (1:980) (2:1052) (3:946) (4:863) (5:833) (6:752) (8:722) (9:721) (10:756) (11:667) (12:746) (13:843) (14:828) (15:731)

rank 8: non_loc_dependecies: (0:930) (1:930) (2:1046) (3:935) (4:902) (5:880) (6:780) (7:755) (9:668) (10:705) (11:634) (12:789) (13:776) (14:798) (15:782)

rank 9: non_loc_dependecies: (0:918) (1:844) (2:1019) (3:975) (4:929) (5:834) (6:772) (7:747) (8:681) (10:675) (11:597) (12:748) (13:767) (14:716) (15:740)

rank 10: non_loc_dependecies: (0:891) (1:857) (2:938) (3:1001) (4:875) (5:875) (6:841) (7:767) (8:699) (9:682) (11:620) (12:767) (13:730) (14:750) (15:734)

rank 11: non_loc_dependecies: (0:637) (1:611) (2:654) (3:667) (4:653) (5:611) (6:642) (7:601) (8:581) (9:558) (10:553) (12:1394) (13:1340) (14:1335) (15:1261)

rank 12: non_loc_dependecies: (0:789) (1:773) (2:729) (3:772) (4:743) (5:731) (6:746) (7:648) (8:710) (9:697) (10:692) (11:1348) (13:1386) (14:1350) (15:1340)

rank 13: non_loc_dependecies: (0:795) (1:808) (2:753) (3:800) (4:779) (5:770) (6:774) (7:747) (8:720) (9:757) (10:703) (11:1353) (12:1413) (14:1305) (15:1289)

rank 14: non_loc_dependecies: (0:848) (1:818) (2:800) (3:810) (4:827) (5:757) (6:789) (7:802) (8:781) (9:732) (10:713) (11:1343) (12:1379) (13:1333) (15:1220)

rank 15: non_loc_dependecies: (0:785) (1:805) (2:806) (3:794) (4:771) (5:762) (6:767) (7:733) (8:769) (9:731) (10:699) (11:1257) (12:1372) (13:1302) (14:1225)

rank 0: num_remote_dependees: [0:0] [1:324] [2:538] [3:1045] [4:1047] [5:1023] [6:1003] [7:1009] [8:930] [9:918] [10:891] [11:637] [12:789] [13:795] [14:848] [15:785]

rank 1: num_remote_dependees: [0:337] [1:0] [2:537] [3:1051] [4:1209] [5:1157] [6:1048] [7:980] [8:930] [9:844] [10:857] [11:611] [12:773] [13:808] [14:818] [15:805]

rank 2: num_remote_dependees: [0:565] [1:519] [2:0] [3:675] [4:925] [5:998] [6:1024] [7:1052] [8:1046] [9:1019] [10:938] [11:654] [12:729] [13:753] [14:800] [15:806]

rank 3: num_remote_dependees: [0:1171] [1:1113] [2:692] [3:0] [4:781] [5:870] [6:928] [7:946] [8:935] [9:975] [10:1001] [11:667] [12:772] [13:800] [14:810] [15:794]

rank 4: num_remote_dependees: [0:1276] [1:1294] [2:933] [3:762] [4:0] [5:786] [6:859] [7:863] [8:902] [9:929] [10:875] [11:653] [12:743] [13:779] [14:827] [15:771]

rank 5: num_remote_dependees: [0:1290] [1:1273] [2:1034] [3:892] [4:781] [5:0] [6:747] [7:833] [8:880] [9:834] [10:875] [11:611] [12:731] [13:770] [14:757] [15:762]

rank 6: num_remote_dependees: [0:1226] [1:1205] [2:1099] [3:936] [4:841] [5:730] [6:0] [7:752] [8:780] [9:772] [10:841] [11:642] [12:746] [13:774] [14:789] [15:767]

rank 7: num_remote_dependees: [0:1226] [1:1097] [2:1118] [3:974] [4:890] [5:827] [6:744] [7:0] [8:755] [9:747] [10:767] [11:601] [12:648] [13:747] [14:802] [15:733]

rank 8: num_remote_dependees: [0:1153] [1:1002] [2:1128] [3:1021] [4:939] [5:869] [6:779] [7:722] [8:0] [9:681] [10:699] [11:581] [12:710] [13:720] [14:781] [15:769]

rank 9: num_remote_dependees: [0:1137] [1:886] [2:1119] [3:1003] [4:943] [5:836] [6:767] [7:721] [8:668] [9:0] [10:682] [11:558] [12:697] [13:757] [14:732] [15:731]

rank 10: num_remote_dependees: [0:1055] [1:918] [2:1048] [3:1016] [4:889] [5:875] [6:854] [7:756] [8:705] [9:675] [10:0] [11:553] [12:692] [13:703] [14:713] [15:699]

rank 11: num_remote_dependees: [0:740] [1:707] [2:737] [3:770] [4:722] [5:645] [6:695] [7:667] [8:634] [9:597] [10:620] [11:0] [12:1348] [13:1353] [14:1343] [15:1257]

rank 12: num_remote_dependees: [0:911] [1:882] [2:850] [3:859] [4:841] [5:804] [6:870] [7:746] [8:789] [9:748] [10:767] [11:1394] [12:0] [13:1413] [14:1379] [15:1372]

rank 13: num_remote_dependees: [0:912] [1:903] [2:845] [3:853] [4:844] [5:794] [6:830] [7:843] [8:776] [9:767] [10:730] [11:1340] [12:1386] [13:0] [14:1333] [15:1302]

rank 14: num_remote_dependees: [0:944] [1:882] [2:870] [3:854] [4:824] [5:789] [6:828] [7:828] [8:798] [9:716] [10:750] [11:1335] [12:1350] [13:1305] [14:0] [15:1225]

rank 15: num_remote_dependees: [0:844] [1:854] [2:833] [3:797] [4:838] [5:776] [6:790] [7:731] [8:782] [9:740] [10:734] [11:1261] [12:1340] [13:1289] [14:1220] [15:0]

Task initialized & distributed

Solve time: 100 ms with solve step 0.003

starting solution...

RANK 1 is using 3 OMP threads

RANK 0 is using 3 OMP threads

RANK 2 is using 3 OMP threads

RANK 3 is using 3 OMP threads

RANK 4 is using 3 OMP threads

RANK 5 is using 3 OMP threads

RANK 6 is using 3 OMP threads

RANK 7 is using 3 OMP threads

RANK 12 is using 3 OMP threads

RANK 11 is using 3 OMP threads

RANK 10 is using 3 OMP threads

RANK 14 is using 3 OMP threads

RANK 15 is using 3 OMP threads

RANK 8 is using 3 OMP threads

RANK 13 is using 3 OMP threads

RANK 9 is using 3 OMP threads

vcs/collectunits1/tmu/src/tmu.c:939 alloc_record: Assertion '(next)->head <= (next)->write_ptr && (next)->write_ptr <= ((next)->head + (next)->size)' failed.

vcs/collectunits1/tmu/src/tmu.c:939 alloc_record: Assertion '(next)->head <= (next)->write_ptr && (next)->write_ptr <= ((next)->head + (next)->size)' failed.

vcs/collectunits1/tmu/src/tmu.c:939 alloc_record: Assertion '(next)->head <= (next)->write_ptr && (next)->write_ptr <= ((next)->head + (next)->size)' failed.

vcs/collectunits1/tmu/src/tmu.c:939 alloc_record: Assertion '(next)->head <= (next)->write_ptr && (next)->write_ptr <= ((next)->head + (next)->size)' failed.

vcs/collectunits1/tmu/src/tmu.c:939 alloc_record: Assertion '(next)->head <= (next)->write_ptr && (next)->write_ptr <= ((next)->head + (next)->size)' failed.

vcs/collectunits1/tmu/src/tmu.c:939 alloc_record: Assertion '(next)->head <= (next)->write_ptr && (next)->write_ptr <= ((next)->head + (next)->size)' failed.

vcs/collectunits1/tmu/src/tmu.c:939 alloc_record: Assertion '(next)->head <= (next)->write_ptr && (next)->write_ptr <= ((next)->head + (next)->size)' failed.

vcs/collectunits1/tmu/src/tmu.c:939 alloc_record: Assertion '(next)->head <= (next)->write_ptr && (next)->write_ptr <= ((next)->head + (next)->size)' failed.

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 0 PID 1202863 RUNNING AT c1

= KILLED BY SIGNAL: 6 (Aborted)

===================================================================================

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 1 PID 1202864 RUNNING AT c1

= KILLED BY SIGNAL: 9 (Killed)

===================================================================================

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 2 PID 1202865 RUNNING AT c1

= KILLED BY SIGNAL: 9 (Killed)

===================================================================================

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 3 PID 1202866 RUNNING AT c1

= KILLED BY SIGNAL: 9 (Killed)

===================================================================================

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 4 PID 1202867 RUNNING AT c1

= KILLED BY SIGNAL: 9 (Killed)

===================================================================================

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 5 PID 1202868 RUNNING AT c1

= KILLED BY SIGNAL: 9 (Killed)

===================================================================================

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 6 PID 1202869 RUNNING AT c1

= KILLED BY SIGNAL: 9 (Killed)

===================================================================================

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 7 PID 1202870 RUNNING AT c1

= KILLED BY SIGNAL: 6 (Aborted)

===================================================================================

vtune: Collection stopped.

vtune: Using result path `/home/hpcadmin/hys/hys_vtune/vtune_projects_dir/test_mpi_dir/mpi_heartdemo_hpc-performance_ppn8_omp3_2node_172.c2'

vtune: Executing actions 19 % Resolving information for `libshm-fi.so'

vtune: Warning: Cannot locate debugging information for file `/opt/intel/oneapi/mpi/2021.2.0/libfabric/lib/prov/libshm-fi.so'.

vtune: Warning: Cannot locate debugging information for file `/usr/lib64/ld-2.28.so'.

vtune: Warning: Cannot locate file `ib_core.ko'.

vtune: Executing actions 19 % Resolving information for `ib_core'

vtune: Warning: Cannot locate file `nfs.ko'.

vtune: Executing actions 19 % Resolving information for `nfs'

vtune: Warning: Cannot locate file `xfs.ko'.

vtune: Warning: Cannot locate file `sep5.ko'.

vtune: Executing actions 19 % Resolving information for `sep5'

vtune: Warning: Cannot locate file `mlx5_ib.ko'.

vtune: Executing actions 19 % Resolving information for `mlx5_ib'

vtune: Warning: Cannot locate file `bnxt_en.ko'.

vtune: Executing actions 19 % Resolving information for `bnxt_en'

vtune: Warning: Cannot locate debugging information for file `/opt/intel/oneapi/vtune/2021.5.0/lib64/runtime/libittnotify_collector.so'.

vtune: Executing actions 20 % Resolving information for `libpthread-2.28.so'

vtune: Warning: Cannot locate debugging information for file `/usr/lib64/libpthread-2.28.so'.

vtune: Executing actions 20 % Resolving information for `libmpi.so.12.0.0'

vtune: Warning: Cannot locate debugging information for file `/usr/lib64/libc-2.28.so'.

vtune: Warning: Cannot locate file `rdma_ucm.ko'.

vtune: Executing actions 20 % Resolving information for `librdmacm.so.1.2.29.0'

vtune: Warning: Cannot locate debugging information for file `/usr/lib64/librdmacm.so.1.2.29.0'.

vtune: Executing actions 20 % Resolving information for `ssh'

vtune: Warning: Cannot locate file `mlx5_core.ko'.

vtune: Executing actions 20 % Resolving information for `mlx5_core'

vtune: Warning: Cannot locate debugging information for file `/usr/bin/ssh'.

vtune: Executing actions 20 % Resolving information for `libcrypto.so.1.1.1g'

vtune: Warning: Cannot locate debugging information for file `/opt/intel/oneapi/mpi/2021.2.0/libfabric/lib/prov/librxm-fi.so'.

vtune: Executing actions 20 % Resolving information for `libnl-3.so.200.26.0'

vtune: Warning: Cannot locate debugging information for file `/usr/lib64/libcrypto.so.1.1.1g'.

vtune: Warning: Cannot locate debugging information for file `/usr/lib64/libnl-3.so.200.26.0'.

vtune: Executing actions 21 % Resolving information for `libbnxt_re-rdmav25.so'

vtune: Warning: Cannot locate debugging information for file `/usr/lib64/libibverbs/libbnxt_re-rdmav25.so'.

vtune: Executing actions 21 % Resolving information for `libibverbs.so.1.8.29.0

vtune: Warning: Cannot locate debugging information for file `/usr/lib64/libibverbs.so.1.8.29.0'.

vtune: Executing actions 21 % Resolving information for `libverbs-fi.so'

vtune: Warning: Cannot locate debugging information for file `/opt/intel/oneapi/mpi/2021.2.0/libfabric/lib/prov/libverbs-fi.so'.

vtune: Warning: Cannot locate file `bnxt_re.ko'.

vtune: Executing actions 21 % Resolving information for `libfabric.so.1'

vtune: Warning: Cannot locate debugging information for file `/opt/intel/oneapi/mpi/2021.2.0/libfabric/lib/libfabric.so.1'.

vtune: Executing actions 21 % Resolving information for `libnss_files-2.28.so'

vtune: Warning: Cannot locate debugging information for file `/usr/lib64/libnss_files-2.28.so'.

vtune: Executing actions 21 % Resolving information for `libiomp5.so'

vtune: Warning: Cannot locate debugging information for the Linux kernel. Source-level analysis will not be possible. Function-level analysis will be limited to kernel symbol tables. See the Enabling Linux Kernel Analysis topic in the product online help for instructions.

vtune: Warning: Cannot locate file `ib_uverbs.ko'.

vtune: Executing actions 22 % Resolving information for `libmlx5.so.1.13.29.0'

vtune: Warning: Cannot locate debugging information for file `/usr/lib64/libmlx5.so.1.13.29.0'.

vtune: Executing actions 75 % Generating a report Elapsed Time: 59.306s

SP GFLOPS: 0.000

DP GFLOPS: 0.267

x87 GFLOPS: 0.000

CPI Rate: 1.297

| The CPI may be too high. This could be caused by issues such as memory

| stalls, instruction starvation, branch misprediction or long latency

| instructions. Explore the other hardware-related metrics to identify what

| is causing high CPI.

|

Average CPU Frequency: 3.261 GHz

Total Thread Count: 37

Effective Physical Core Utilization: 13.1% (7.334 out of 56)

| The metric value is low, which may signal a poor physical CPU cores

| utilization caused by:

| - load imbalance

| - threading runtime overhead

| - contended synchronization

| - thread/process underutilization

| - incorrect affinity that utilizes logical cores instead of physical

| cores

| Explore sub-metrics to estimate the efficiency of MPI and OpenMP parallelism

| or run the Locks and Waits analysis to identify parallel bottlenecks for

| other parallel runtimes.

|

Effective Logical Core Utilization: 6.6% (7.403 out of 112)

| The metric value is low, which may signal a poor logical CPU cores

| utilization. Consider improving physical core utilization as the first

| step and then look at opportunities to utilize logical cores, which in

| some cases can improve processor throughput and overall performance of

| multi-threaded applications.

|

Memory Bound: 31.5% of Pipeline Slots

| The metric value is high. This can indicate that the significant fraction of

| execution pipeline slots could be stalled due to demand memory load and

| stores. Use Memory Access analysis to have the metric breakdown by memory

| hierarchy, memory bandwidth information, correlation by memory objects.

|

Cache Bound: 25.6% of Clockticks

| A significant proportion of cycles are being spent on data fetches from

| caches. Check Memory Access analysis to see if accesses to L2 or L3

| caches are problematic and consider applying the same performance tuning

| as you would for a cache-missing workload. This may include reducing the

| data working set size, improving data access locality, blocking or

| partitioning the working set to fit in the lower cache levels, or

| exploiting hardware prefetchers. Consider using software prefetchers, but

| note that they can interfere with normal loads, increase latency, and

| increase pressure on the memory system. This metric includes coherence

| penalties for shared data. Check Microarchitecture Exploration analysis

| to see if contested accesses or data sharing are indicated as likely

| issues.

|

DRAM Bound: 8.4% of Clockticks

DRAM Bandwidth Bound: 0.0% of Elapsed Time

NUMA: % of Remote Accesses: 0.0%

Bandwidth Utilization

Bandwidth Domain Platform Maximum Observed Maximum Average % of Elapsed Time with High BW Utilization(%)

--------------------------- ---------------- ---------------- ------- ---------------------------------------------

DRAM, GB/sec 150 15.600 2.860 0.0%

DRAM Single-Package, GB/sec 75 8.600 1.440 0.0%

Vectorization: 0.0% of Packed FP Operations

Instruction Mix

SP FLOPs: 0.0% of uOps

Packed: 0.0% from SP FP

128-bit: 0.0% from SP FP

256-bit: 0.0% from SP FP

512-bit: 0.0% from SP FP

Scalar: 0.0% from SP FP

DP FLOPs: 0.9% of uOps

Packed: 0.0% from DP FP

128-bit: 0.0% from DP FP

256-bit: 0.0% from DP FP

512-bit: 0.0% from DP FP

Scalar: 100.0% from DP FP

x87 FLOPs: 0.0% of uOps

Non-FP: 99.1% of uOps

FP Arith/Mem Rd Instr. Ratio: 0.034

FP Arith/Mem Wr Instr. Ratio: 0.089

Collection and Platform Info

Application Command Line: mpiexec "-genv" "I_MPI_DEBUG=4" "-n" "16" "-ppn" "8" "-hosts" "c1,c2" "/home/hpcadmin/hys/hys_vtune/vtune_test_code/Cardiac_demo/build/heart_demo" "-m" "/home/hpcadmin/hys/hys_vtune/vtune_test_code/Cardiac_demo/mesh_mid" "-s" "/home/hpcadmin/hys/hys_vtune/vtune_test_code/Cardiac_demo/setup_mid.txt" "-t" "100"

User Name: root

Operating System: 4.18.0-240.el8.x86_64 \S Kernel \r on an \m

Computer Name: c2

Result Size: 421.9 MB

Collection start time: 02:00:53 05/07/2021 UTC

Collection stop time: 02:01:53 05/07/2021 UTC

Collector Type: Event-based sampling driver,User-mode sampling and tracing

CPU

Name: Intel(R) Xeon(R) Processor code named Icelake

Frequency: 2.195 GHz

Logical CPU Count: 112

Max DRAM Single-Package Bandwidth: 75.000 GB/s

If you want to skip descriptions of detected performance issues in the report,

enter: vtune -report summary -report-knob show-issues=false -r <my_result_dir>.

Alternatively, you may view the report in the csv format: vtune -report

<report_name> -format=csv.

vtune: Executing actions 100 % done

I would like to know what causes these problems, how they can be solved, and whether VTune can collect data from all nodes of a multi-node MPI program. Thanks for your help.

Hello,

Could you please run the VTune collection with the following syntax:

mpiexec -genv I_MPI_DEBUG=4 -n 16 -ppn 8 -hosts c1,c2 vtune -collect hpc-performance -k collect-affinity=true -trace-mpi -result-dir /home/hpcadmin/hys/hys_vtune/vtune_projects_dir/test_mpi_dir/mpi_heartdemo_hpc-performance_ppn8_omp3_2node_172 -- /home/hpcadmin/hys/hys_vtune/vtune_test_code/Cardiac_demo/build/heart_demo -m /home/hpcadmin/hys/hys_vtune/vtune_test_code/Cardiac_demo/mesh_mid -s /home/hpcadmin/hys/hys_vtune/vtune_test_code/Cardiac_demo/setup_mid.txt -t 100

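That is, run the VTune command line under the MPI launcher (mpiexec ... vtune ... -- application) instead of running mpiexec under VTune. You can also switch off the data limit, so that data is collected for the whole run, by adding "-data-limit=0" to the VTune command line.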
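To make the ordering easier to see, the same command can be assembled in three parts (launcher, collector, application) in a small bash script. This is only a sketch: the paths are copied from the question, while the `DRY_RUN` switch and the array names are illustrative additions, not VTune or Intel MPI options. By default it just prints the command; run it with `DRY_RUN=0` to actually launch the job.

```bash
#!/usr/bin/env bash
# Sketch: run VTune *under* mpiexec instead of mpiexec under VTune.
# Paths come from the thread; DRY_RUN and the array names are illustrative only.
set -euo pipefail

DEMO=/home/hpcadmin/hys/hys_vtune/vtune_test_code/Cardiac_demo
RESULT_DIR=/home/hpcadmin/hys/hys_vtune/vtune_projects_dir/test_mpi_dir/mpi_heartdemo_hpc-performance_ppn8_omp3_2node_172

# 1) The MPI launcher and its options come first.
mpi_part=(mpiexec -genv I_MPI_DEBUG=4 -n 16 -ppn 8 -hosts c1,c2)

# 2) Then the collector, which mpiexec starts once for every rank.
#    -data-limit=0 is optional: it removes the size cap so the whole run is collected.
vtune_part=(vtune -collect hpc-performance -k collect-affinity=true -trace-mpi
            -data-limit=0 -result-dir "$RESULT_DIR")

# 3) Finally, after "--", the application and its own arguments.
app_part=("$DEMO/build/heart_demo" -m "$DEMO/mesh_mid" -s "$DEMO/setup_mid.txt" -t 100)

cmd=("${mpi_part[@]}" "${vtune_part[@]}" -- "${app_part[@]}")

if [[ "${DRY_RUN:-1}" == 1 ]]; then
  printf '%s\n' "${cmd[*]}"   # default: only print the assembled command line
else
  "${cmd[@]}"                 # DRY_RUN=0: actually launch the profiled MPI job
fi
```

Keeping the launcher options, the VTune options and the application arguments in separate arrays makes it hard to accidentally put vtune in front of mpiexec again.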

BTW, it may be worth reading the VTune Cookbook recipe on profiling MPI applications: https://software.intel.com/content/www/us/en/develop/documentation/vtune-cookbook/top/configuration-recipes/profiling-mpi-applications.html.
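On the question of collecting from several nodes: with VTune launched under mpiexec as suggested above, the collection runs on every host, so a two-node run should leave one result per node, with the host name appended to the -result-dir path (as VTune did for the `.c2` result in the log above). The sketch below assumes exactly that `<result-dir>.<hostname>` naming and short host names without dots; the function name `summarize_results` is hypothetical. It prints the summary report for each per-node result using the `vtune -report summary` command suggested at the end of the VTune output.

```bash
#!/usr/bin/env bash
# Sketch: print a summary for every per-host result of a multi-node run.
# Assumes results are named "<result-dir>.<hostname>"; summarize_results is a hypothetical helper.
set -euo pipefail

summarize_results() {
  local base=$1 found=0 r
  for r in "$base".*; do
    [[ -d "$r" ]] || continue          # skip the literal pattern when nothing matches
    found=1
    echo "=== ${r##*.} ==="             # host name = text after the last dot
    vtune -report summary -report-knob show-issues=false -r "$r"
  done
  (( found )) || { echo "no results matching $base.*" >&2; return 1; }
}

RESULT_DIR=/home/hpcadmin/hys/hys_vtune/vtune_projects_dir/test_mpi_dir/mpi_heartdemo_hpc-performance_ppn8_omp3_2node_172
# Only run when VTune is installed and at least one per-host result exists.
if command -v vtune >/dev/null 2>&1 && compgen -G "$RESULT_DIR.*" >/dev/null; then
  summarize_results "$RESULT_DIR"
fi
```

Each result can also be opened separately in the VTune GUI; the loop is just a quick way to compare the nodes from the command line.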

Thanks & Regards, Dmitry

Hi,

Thanks for posting in the Intel forums. We are looking into your case and will get back to you.

Thanks

Thank you for your reply; it solved my problem.

I can now analyze multi-node MPI programs with VTune.

Thanks.

Hi,

Thanks for the confirmation. This thread will no longer be monitored. If you have any other query you can post a new question.

Thanks

Rahul
