Intel has spun off the Omni-Path business to Cornelis Networks, an independent Intel Capital portfolio company. Cornelis Networks will continue to serve and sell to existing and new customers by delivering leading purpose-built, high-performance network products for high-performance computing and artificial intelligence. Intel believes Cornelis Networks will expand the ecosystem of high-performance fabric solutions, offering options to customers building clusters for HPC and AI based on Intel® Xeon® processors. Additional details on the divestiture and transition of Omni-Path products can be found at www.cornelisnetworks.com.

Introduction

Adding a second 100Gb Intel® Omni-Path Architecture Host Fabric Interface (HFI) to an HPC server/node is an easy way to give the server 200Gb of network capacity. In this article we demonstrate the MPI performance of a dual-rail Intel® Omni-Path Architecture (Intel® OPA) configuration on Intel® Xeon® Gold 6252 processors.

Ease of Installing Dual Rail with Intel® Omni-Path Architecture (Intel® OPA)

We enabled 200Gb to the node by installing a total of two 100Gb HFIs, one per CPU socket on the node. Each HFI is plugged into the same 100Gb Intel OPA 100-series edge switch. Both HFIs automatically join the fabric and no additional configuration is necessary. While this is typical for a compute node, additional configuration may be necessary for other types of nodes such as storage targets. Consult the Intel OPA documentation for more detail (referenced below). Our complete configuration is provided at the end of the article.
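To confirm that the two HFIs really sit on different sockets, one can read each fabric device's NUMA node from sysfs. The following is a minimal sketch, not a tool from the Intel OPA software stack. It assumes the usual Linux layout `/sys/class/infiniband/<device>/device/numa_node` and that the HFIs show up with names beginning with `hfi1`; both are assumptions about the host rather than details from this article, and the function name `hfi_numa_map` is hypothetical.

```python
from pathlib import Path


def hfi_numa_map(sysfs_root="/sys/class/infiniband", prefix="hfi1"):
    """Return {device_name: numa_node} for fabric devices under sysfs_root.

    Assumes each device directory exposes device/numa_node, as PCI devices
    normally do on Linux. Devices without a readable value are skipped.
    """
    mapping = {}
    root = Path(sysfs_root)
    if not root.is_dir():
        return mapping
    for dev in sorted(root.iterdir()):
        if not dev.name.startswith(prefix):
            continue  # ignore other fabric devices
        try:
            text = (dev / "device" / "numa_node").read_text()
            mapping[dev.name] = int(text.strip())
        except (OSError, ValueError):
            continue
    return mapping


if __name__ == "__main__":
    for name, node in hfi_numa_map().items():
        print(f"{name}: NUMA node {node}")
```

On a dual-rail node installed as described above, one would expect two entries with different NUMA node numbers.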

How to Use Dual Rail?

While no special MPI or PSM flags are required to use both HFIs, there are two main points to understand:

- The default behavior is for each MPI rank to use only a single, NUMA-local HFI. If there is no local HFI, the rank uses the nearest one it can find on the server. To make sure an MPI application uses both HFIs, place at least one MPI rank on each NUMA node that has an HFI installed.

- Users can force each MPI rank to “stripe” its messages across all available HFIs by setting the PSM2_MULTIRAIL=1 environment variable. This means that even with a single rank per node, throughput to the server can approach 200Gb per second by using both HFIs automatically, regardless of which NUMA nodes they are installed on. Striping is typically recommended only when a single MPI rank runs per node, or when no MPI rank shares a NUMA node with an HFI.
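The two modes above differ only in the number of ranks per node and in whether `PSM2_MULTIRAIL=1` is set. The sketch below builds, but does not run, an Intel MPI `mpirun` command line for either mode. `PSM2_MULTIRAIL` and `I_MPI_FABRICS=shm:ofi` come from this article; the helper name `build_launch`, the host names, and the choice to pass variables with `-genv` are illustrative assumptions. The sketch does not control rank placement, so which NUMA node each rank lands on still depends on the MPI library's pinning policy and should be checked separately.

```python
import shlex


def build_launch(hosts, ranks_per_node, benchmark="Uniband", stripe=False):
    """Return an mpirun argv list for an IMB run; nothing is executed.

    stripe=True adds PSM2_MULTIRAIL=1 so each rank stripes across all HFIs.
    stripe=False relies on the default NUMA-local HFI selection.
    """
    argv = ["mpirun", "-hosts", ",".join(hosts),
            "-ppn", str(ranks_per_node),
            "-n", str(ranks_per_node * len(hosts)),
            "-genv", "I_MPI_FABRICS", "shm:ofi"]
    if stripe:
        argv += ["-genv", "PSM2_MULTIRAIL", "1"]
    argv += ["IMB-MPI1", benchmark]
    return argv


if __name__ == "__main__":
    nodes = ["node1", "node2"]  # hypothetical host names
    # One rank per node, striped across both HFIs.
    print(shlex.join(build_launch(nodes, 1, stripe=True)))
    # Two ranks per node, each using its NUMA-local HFI by default.
    print(shlex.join(build_launch(nodes, 2, benchmark="Biband")))
```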

Performance Results

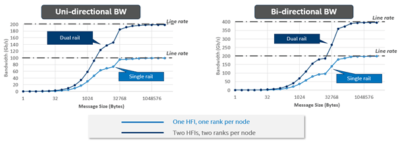

Uni- and bi-directional bandwidth results were measured with the Intel® MPI Benchmarks Uniband and Biband benchmarks. Throughput in gigabits per second is plotted on the y-axis and message size in bytes on the x-axis. With one MPI rank per node (light blue line), only one HFI is used, which is the default behavior described earlier. The 100Gb line rate is reached for message sizes of 32KB and greater in the uni-directional benchmark and 128KB and greater in the bi-directional benchmark.
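When comparing raw benchmark output against these line rates, a unit conversion is usually needed, since the benchmarks typically print bandwidth in Mbytes/sec rather than gigabits per second. The sketch below shows the arithmetic, assuming decimal megabytes (10^6 bytes) and a nominal 100Gb per rail. The function names are hypothetical, and the input value is the theoretical single-rail equivalent, not a measured result.

```python
def mbytes_to_gbits(mbytes_per_sec):
    """Convert Mbytes/sec (assumed 10^6 bytes) to gigabits per second."""
    return mbytes_per_sec * 8 / 1000.0


def fraction_of_line_rate(mbytes_per_sec, rails=1, rail_gbits=100.0):
    """Fraction of the nominal aggregate line rate for the given rail count."""
    return mbytes_to_gbits(mbytes_per_sec) / (rails * rail_gbits)


if __name__ == "__main__":
    single_rail = 12500.0  # Mbytes/sec equal to a nominal 100Gb, not a measurement
    print(mbytes_to_gbits(single_rail))           # 100.0
    print(fraction_of_line_rate(single_rail, 2))  # 0.5 of a dual-rail 200Gb
```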

Now consider using two MPI ranks per node (multiple MPI ranks per node are often used in HPC applications). On each node, one rank runs on NUMA 0 and the other on NUMA 1. In this configuration, the 200Gbps line rate is reached in both the uni- and bi-directional benchmarks. Note that no PSM2_MULTIRAIL flags are required in this scenario because we have at least one MPI rank per NUMA-local HFI.
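The reasoning behind these two cases can be captured in a toy model of the default selection rule: a rank uses its NUMA-local HFI, otherwise the nearest one. This is an illustration only and not the PSM2 implementation. "Nearest" is simplified here to the numerically closest NUMA node, and the device names and function names are assumptions.

```python
def select_hfi(rank_numa, hfi_by_numa):
    """Pick the NUMA-local HFI, else the HFI on the closest NUMA node number."""
    if rank_numa in hfi_by_numa:
        return hfi_by_numa[rank_numa]
    nearest = min(hfi_by_numa, key=lambda n: (abs(n - rank_numa), n))
    return hfi_by_numa[nearest]


def rails_in_use(rank_numas, hfi_by_numa):
    """Set of HFIs used when ranks run on the given NUMA nodes."""
    return {select_hfi(n, hfi_by_numa) for n in rank_numas}


if __name__ == "__main__":
    hfis = {0: "hfi1_0", 1: "hfi1_1"}  # one HFI per socket; names assumed
    print(sorted(rails_in_use([0], hfis)))     # ['hfi1_0']
    print(sorted(rails_in_use([0, 1], hfis)))  # ['hfi1_0', 'hfi1_1']
```

With one rank per node, the model uses a single rail. With one rank on each NUMA node, it uses both, which is why no striping flag is needed in the two-rank case.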

Summary and Where to Get More Information

It is very easy to enable 200Gbps with Intel OPA by installing two HFIs into HPC servers. Typically, no additional system administration or user-level adjustments are required to immediately benefit from dual rail Intel OPA. Applications that are written to demand high network bandwidth will automatically take advantage of the additional bandwidth. Look for future articles describing the benefits of 200Gb and Intel OPA!

For more information, refer to the Intel OPA technical publications (http://www.intel.com/omnipath/FabricSoftwarePublications). Specifically, the Host Software User Guide, the PSM2 Programmer's Guide, and the Performance Tuning Guide discuss multi-rail Intel OPA in detail.

Configuration and Disclaimers

Tests performed on dual socket Intel® Xeon® Gold 6252 processors (0x500002c). 24 cores per socket. 192 GB DDR4, 2933 MHz RDIMM ECC memory. Red Hat Enterprise Linux Server 7.7 (Maipo). 3.10.0-1062.12.1.el7.x86_64 kernel. Intel® Turbo Boost Technology and Intel® Hyper-Threading Technology enabled. Intel MPI Benchmarks 2018 Update 1, Uniband and Biband using I_MPI_FABRICS=shm:ofi. Intel® MPI Library 2019 Update 5. Intel Fabric Suite (IFS) 10.10.1.0.36. HFI driver parameters: rcvhdrcnt=8192 cap_mask=0x4c09a01cbba. PSM2_MQ_EAGER_SDMA_SZ=16384 run time tuning. 2M copper cables with one 100 Series Intel® OPA 48-port switch hop. itlb_multihit:Processor vulnerable. l1tf:Not affected. mds:Not affected. meltdown:Not affected. spec_store_bypass:Mitigation: Speculative Store Bypass disabled via prctl and seccomp. spectre_v1:Mitigation: Load fences, usercopy/swapgs barriers and __user pointer sanitization. spectre_v2:Mitigation: Enhanced IBRS, IBPB. tsx_async_abort:Mitigation: Clear CPU buffers; SMT vulnerable. Intel S2600WFD with BIOS version SE5C620.86B.02.01.0010.010620200716.

Performance results are based on testing as of March 27, 2020 and may not reflect all publicly available security updates. See configuration disclosure for details. No product can be absolutely secure.

Software and workloads used in performance tests may have been optimized for performance only on Intel microprocessors.

Performance tests, such as SYSmark and MobileMark, are measured using specific computer systems, components, software, operations and functions. Any change to any of those factors may cause the results to vary. You should consult other information and performance tests to assist you in fully evaluating your contemplated purchases, including the performance of that product when combined with other products. For more complete information visit www.intel.com/benchmarks.

Intel technologies' features and benefits depend on system configuration and may require enabled hardware, software or service activation. Performance varies depending on system configuration. No computer system can be absolutely secure. Check with your system manufacturer or retailer or learn more at intel.com.

All information provided here is subject to change without notice. Contact your Intel representative to obtain the latest Intel product specifications and roadmaps.

The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request.

Intel provides these materials as-is, with no express or implied warranties.

Intel, the Intel logo, Intel Core, Intel Optane, Intel Inside, Pentium, Xeon and others are trademarks of Intel Corporation or its subsidiaries in the U.S. and/or other countries.

*Other names and brands may be claimed as the property of others.

©Intel Corporation
