Authors: Nikhil Deshpande, Senior Director of Security and Chief Business Strategist for Project Amber in the Office of the CTO, Intel, and Inderpreet Singh, Senior Director of Business Strategy, Accenture

Federated learning, enabled by and feeding AI, can process high volumes of data in decentralized systems to deliver deep analytical insights. Confidential Computing provides the more secure execution this ML technique requires by running workloads in a Trusted Execution Environment (TEE) in public/private clouds and at the edge. Doing so protects against unauthorized viewing of, and tampering with, code and data at rest, in flight, and in use. This emerging approach also helps secure the platform, providing trust, resilience and visibility control for application hosting and trusted third-party compute.

Organizations seeking to run federated learning and other confidential workloads across multiple cloud service providers (CSPs), however, quickly encounter a challenge: Today’s TEEs are self-attested by individual CSPs. That means the vendor provides both the infrastructure and the attestation of its security, a crucial function in confidential computing. Attestation verifies the identity and integrity of software and hardware to a remote server. Having providers at the center of the process reduces security confidence for the tenant. More fundamentally, it creates a solution that cannot support a multi-cloud environment.

Neutral, cloud-agnostic attestation service

The solution is straightforward: de-link attestation verification from the cloud or edge infrastructure provider hosting the confidential computing workload. Introducing a neutral, operationally independent third party between the CSP and the end user greatly increases the assurance for sensitive workloads and opens the door to confidential computing in multi-cloud, multi-vendor environments. That’s the approach taken by Project Amber, a planned Intel software offering.

Project Amber 1.0 is a service implementation of a trust authority. It remotely verifies and asserts the trustworthiness of compute assets (TEEs, devices, policies, Roots of Trust, etc.) based on attestation, policy, and reputation/risk data. Project Amber hits the trifecta in meeting end user needs for workloads. It delivers:

- Security via independent, neutral attestation

- Scalability: Multi-cloud, unified, consistent attestation

- Turnkey service and cost savings

These capabilities represent great progress in the Confidential Computing space and good reasons for large organizations to advance innovative initiatives, like the ones related to Federated Learning and cross-organization data collaboration.
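To make the trust-authority idea concrete, here is a minimal Python sketch of independent attestation verification. It is an illustration only, not Project Amber's API: the names `Evidence`, `sign_evidence`, and `verify` are hypothetical, and an HMAC with a shared demo key stands in for the hardware-rooted asymmetric signature a real TEE would produce. The point it shows is that the verifier, not the infrastructure provider, decides whether a measured workload matches the tenant's policy.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Assumption: a demo key standing in for a hardware root of trust.
# Real TEEs sign evidence with asymmetric keys rooted in the silicon.
HARDWARE_KEY = b"demo-root-of-trust-key"


@dataclass(frozen=True)
class Evidence:
    measurement: str  # hash of the code loaded into the enclave
    signature: str    # signature over the measurement


def measure(code: bytes) -> str:
    """Return a SHA-256 measurement of the enclave code."""
    return hashlib.sha256(code).hexdigest()


def sign_evidence(code: bytes, key: bytes = HARDWARE_KEY) -> Evidence:
    """Simulate the TEE producing signed evidence about what it runs."""
    m = measure(code)
    sig = hmac.new(key, m.encode(), hashlib.sha256).hexdigest()
    return Evidence(measurement=m, signature=sig)


def verify(evidence: Evidence, allowed_measurements: set,
           key: bytes = HARDWARE_KEY) -> bool:
    """Independent verifier: check the signature, then the tenant's policy."""
    expected = hmac.new(key, evidence.measurement.encode(),
                        hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, evidence.signature):
        return False  # evidence was forged or altered
    return evidence.measurement in allowed_measurements


# The tenant's policy lists the workload builds it is willing to trust.
policy = {measure(b"aggregator-v1")}
trusted = verify(sign_evidence(b"aggregator-v1"), policy)
untrusted = verify(sign_evidence(b"tampered-build"), policy)
```

In this sketch `trusted` is `True` and `untrusted` is `False`. Because the same `verify` function runs regardless of which cloud produced the evidence, it also hints at how a single verifier can give consistent results across providers.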

Driving collaboration, preserving privacy

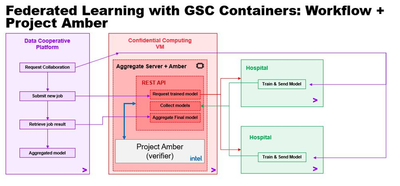

Accenture is integrating Project Amber into a new AI-based framework for privacy-protecting data cooperatives. Well-designed cooperatives let companies share data and collaborate and help reduce concerns around trust, compliance, privacy and data control or ownership. Advancing its previous work in this space, the Accenture proof of concept (POC) allows healthcare institutions to expand their knowledge by privately and more securely sharing data and an AI model for disease detection and prevention trained from multiple sources.

In an earlier phase, Accenture worked with Intel to demonstrate federated data learning for a practical use case, detecting sepsis. Sepsis is a life-threatening, infection-related condition for patients and a serious challenge for hospitals. After evaluating different techniques, Accenture chose Intel® Software Guard Extensions (SGX) secure enclave technology to aggregate AI models. The POC is a prototyped extension of Accenture Applied Intelligence’s AIP+ service, a collection of modular, pre-integrated AI services and capabilities designed to simplify the adoption of AI solutions.

It’s an ideal use case. Federated learning takes place among mutually distrusting parties. So how do you know that all three parties can be trusted? How do they trust that the infrastructure will protect their IP and policies? That’s where Project Amber comes in. The current phase of the project introduces Project Amber as an independent, third-party attestation authority that can work across the siloed data of the participating hospitals.

Here’s how it works: Individual models are trained at the participating hospitals. The resulting models are then aggregated inside a more secure enclave. This creates a single, global and “more knowledgeable” AI model without any hospital directly sharing its data with any other hospital, nor with the cooperative itself. Project Amber provides independent attestation to verify the integrity of the enclave inside the aggregator.
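The flow above can be sketched in a few lines of Python. This is a hedged illustration, not the Accenture implementation: the source does not say which aggregation rule the POC uses, so the sketch assumes FedAvg-style weighted averaging, a common choice. The function names and the `enclave_attested` flag (standing in for the verdict of an independent attestation service) are hypothetical.

```python
from typing import List, Sequence


def federated_average(updates: Sequence[Sequence[float]],
                      sample_counts: Sequence[int]) -> List[float]:
    """Weighted average of per-hospital model weights (FedAvg-style)."""
    if not updates or len(updates) != len(sample_counts):
        raise ValueError("need one sample count per model update")
    total = sum(sample_counts)
    if total <= 0:
        raise ValueError("sample counts must sum to a positive number")
    dim = len(updates[0])
    if any(len(u) != dim for u in updates):
        raise ValueError("all model updates must have the same length")
    # Each hospital's weights count in proportion to its local sample size.
    return [
        sum(u[i] * n for u, n in zip(updates, sample_counts)) / total
        for i in range(dim)
    ]


def aggregate_in_enclave(updates: Sequence[Sequence[float]],
                         sample_counts: Sequence[int],
                         enclave_attested: bool) -> List[float]:
    """Aggregate only if the aggregator enclave passed attestation."""
    if not enclave_attested:
        raise PermissionError("aggregator enclave failed attestation")
    return federated_average(updates, sample_counts)


# Two hospitals share model weights, never patient records.
hospital_updates = [[1.0, 2.0], [3.0, 4.0]]
samples = [1, 3]
global_model = aggregate_in_enclave(hospital_updates, samples,
                                    enclave_attested=True)
print(global_model)  # [2.5, 3.5]
```

Only the weight vectors cross hospital boundaries, and the aggregation step refuses to run when attestation fails, which mirrors the role the independent attestation authority plays in the POC.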

Ultimately, each hospital can benefit from a globally trained model for local “predictions” on their private datasets. They can apply the model to near real-time data from their current patients to better detect and treat sepsis cases. And the cooperative framework can be reused for other use cases, with different data sets.

Project Amber launch and business model

With the evolution of privacy-preserving and confidential computing techniques, companies can reap the benefits of sharing and get richer, deeper intelligence without sharing the data itself. Accenture’s work is exciting; stay tuned for more!

And there will indeed be more.

Pilots of Project Amber begin in Q4 2022. General availability is planned for the first half of 2023 and will include support for cloud SaaS and edge SaaS licensing.

We plan to offer customers two plans: a limited free service, ideal for developers who want to test-drive confidential computing with test applications, and a premium subscription service for production workloads. The premium plan includes a large number of attestations (or quota), support for multiple custom policies, transaction records and immutability for reporting and auditing, and SLA support.

We look forward to sharing more updates soon! In the meantime, if you want to join the waitlist for Project Amber pilots, email projectamber@intel.com.

Notices and Disclaimers:

Intel technologies may require enabled hardware, software or service activation.

No product or component can be absolutely secure.

Code names are used by Intel to identify products, technologies, or services that are in development and not publicly available. These are not "commercial" names and not intended to function as trademarks.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

Nikhil M. Deshpande is currently the Senior Director of Security and Chief Business Strategist for Project Amber in the Office of the CTO at Intel. In prior roles, he led silicon security strategic planning in the Data Center Group and managed research on numerous security technologies in Intel Labs, including privacy-preserving multi-party analytics. Nikhil has spoken at numerous conferences and holds 20+ patents. He holds an M.S. and a Ph.D. in Electrical & Computer Engineering from Portland State University. He also has an M.S. in Technology Management from Oregon Health & Science University.
