The purpose of a virtual platform is to run software, and to do that the software must be loaded into the virtual platform. This can take a lot of different forms depending on just how the software is built and the target system boots. We recently added a feature to the Intel® Simics® Quick-Start Platform that allows it to load a Linux* kernel binary directly into the platform. Previously, iterating on kernel variants required installing the images into a disk image before booting the virtual platform, which was a bit inconvenient in some cases.

To understand just how this works and why it helps, it is useful to go through the various ways that a virtual platform system can be set up to boot into Linux. The more sophisticated and realistic a virtual platform becomes, the harder it tends to be to “cheat” to offer user convenience beyond real hardware. The new Quick-Start Platform setup is interesting in that it manages to implement convenience without requiring any special changes to the virtual platform or the platform BIOS/UEFI (Basic Input-Output System/Unified Extensible Firmware Interface).

What’s in a “Linux”?

To run a “Linux system,” there are three critical pieces that need to be in place:

- The Linux kernel, i.e., the operating system core plus any modules compiled into it.

- The root file system, i.e., the file system that is used by the Linux kernel. Linux requires there to be some kind of file system in place in order to function.

- The kernel command line parameters. The command line provides a way to configure the kernel when it starts and override any defaults that have been compiled into the kernel itself. In particular, the command line typically tells the kernel which hardware device holds the root file system.
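To make the role of the command line concrete, here is a minimal sketch that splits a kernel command line into parameters and picks out the root device. The helper name `parse_kernel_cmdline` is made up for this illustration, and using `shlex` is only an approximation of how the kernel itself tokenizes the string. Repeated parameters (such as several `console=` entries) would overwrite each other in this simplified version.

```python
import shlex


def parse_kernel_cmdline(cmdline: str) -> dict:
    """Split a kernel command line into a {name: value} dict.

    Parameters without '=' (such as 'ro') map to True.
    """
    params = {}
    for token in shlex.split(cmdline):
        name, sep, value = token.partition("=")
        params[name] = value if sep else True
    return params


cmdline = "console=ttyS0,115200n8 earlyprintk root=/dev/vda ro"
params = parse_kernel_cmdline(cmdline)
print(params["root"])  # the device that holds the root file system
```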

When a real hardware system starts, the first code that runs is always a bootloader that initializes the hardware and then starts the kernel (including providing it with its command-line parameters). Virtual platforms also follow this basic flow, but there are many variations on exactly how this works. In this blog post, we will go through five different ways to start Linux on a virtual platform, from the simple to the sophisticated.

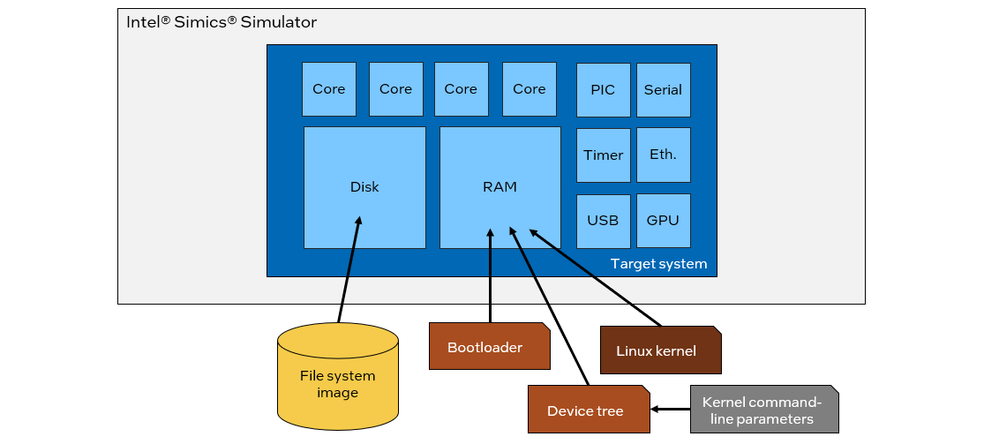

Simple Platform Direct Linux Kernel Boot

Simple virtual platforms that do not correspond to any particular real platform are quite common. They are used to run code requiring a certain instruction set architecture without either buying exotic hardware or modeling a real hardware platform that happens to contain the architecture. The Intel Simics Simulator RISC-V simple virtual platform is an example – it can be configured with a wide variety of processor core variants while providing the basic hardware features needed to run a Linux operating system.

The simple RISC-V platform boots from a set of binary files corresponding to the output from software builders like Buildroot. The files needed are the bootloader, the Linux kernel, the root file system image, and the binary device tree blob. Typically, the bootloader and device tree will be built once and reused, while the Linux kernel and file system will vary as software is being developed. Indeed, it is common for the bootloader and device tree to be distributed with the platform while the user adds the Linux kernel and root file system.

The simulator startup script loads the bootloader, Linux kernel, and device tree into the target system RAM (Random-Access Memory). The precise load locations are determined by the parameters of the startup scripts. Certain changes to the software might require updating the addresses used.
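One reason the addresses might need updating is that a binary has grown into the region used by the next one. The following sketch checks a set of load regions for overlap. All names, addresses, and sizes are hypothetical and chosen only for illustration; they are not taken from any actual startup script.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoadRegion:
    name: str
    base: int  # load address in target RAM
    size: int  # size of the binary in bytes

    @property
    def end(self) -> int:
        return self.base + self.size  # first address past the region


def find_overlaps(regions):
    """Return pairs of region names whose address ranges overlap."""
    ordered = sorted(regions, key=lambda r: r.base)
    overlaps = []
    for i, a in enumerate(ordered):
        for b in ordered[i + 1:]:
            if b.base >= a.end:
                break  # sorted by base, so later regions cannot overlap a
            overlaps.append((a.name, b.name))
    return overlaps


# Hypothetical layout: the kernel has grown to 20 MiB and now reaches
# past the address where the device tree is loaded.
layout = [
    LoadRegion("bootloader", 0x8000_0000, 0x4_0000),
    LoadRegion("kernel", 0x8020_0000, 20 * 1024 * 1024),
    LoadRegion("device-tree", 0x8100_0000, 0x1_0000),
]
overlaps = find_overlaps(layout)
print(overlaps)
```

A check like this could run before the simulation starts, so that a layout problem shows up as a clear error rather than as a mysterious early boot failure.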

In the RISC-V case, the bootloader is told about the location of the device tree through a register value set from the startup scripts, and it finds the address of the kernel in the device tree. The kernel command line parameters are also located inside the device tree. Thus, if a developer wants to change the parameters (in order to change the kernel behavior or move the root file system to a different disk), the device tree must be changed.
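As an illustration of what "changing the device tree" involves, the sketch below rewrites the kernel command line in device tree source text. It assumes the usual Linux convention that the command line is stored in the `bootargs` property of the `/chosen` node. The sample source and the helper name `set_bootargs` are made up for this example.

```python
import re


def set_bootargs(dts_source: str, bootargs: str) -> str:
    """Replace the value of the 'bootargs' property in device tree source."""
    pattern = re.compile(r'(bootargs\s*=\s*")[^"]*(";)')
    new_source, count = pattern.subn(
        lambda m: m.group(1) + bootargs + m.group(2), dts_source
    )
    if count != 1:
        raise ValueError(f"expected exactly one bootargs property, found {count}")
    return new_source


# Hypothetical device tree source fragment.
dts = """/ {
    chosen {
        bootargs = "console=ttyS0 root=/dev/vda ro";
    };
};
"""
updated = set_bootargs(dts, "console=ttyS0 root=/dev/vdb rw")
print(updated)
```

The edited source still has to be compiled into a binary blob before the platform can use it, for example with the device tree compiler (`dtc -I dts -O dtb`).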

The file system image is used as the image for the primary disk in the system. Using a disk supports arbitrarily large file systems and makes it easy to save changes to the file system for the next system start.

Loading binaries directly into target memory is a simulator “cheat”, but once the bootloader is running, the handover from bootloader to the kernel works the same way as it would on real hardware.

There are variations on this flow. In particular, if the target system is using the U-Boot bootloader, the bootloader interactive command-line interface can be scripted and used to provide the device tree address to the kernel.

A common drawback of this type of boot flow is that rebooting from inside the Linux system does not work. The virtual platform startup flow does not follow the hardware startup flow, and thus, it does not provide what is needed for a proper system reset. The virtual platform might not even have a reset function implemented.

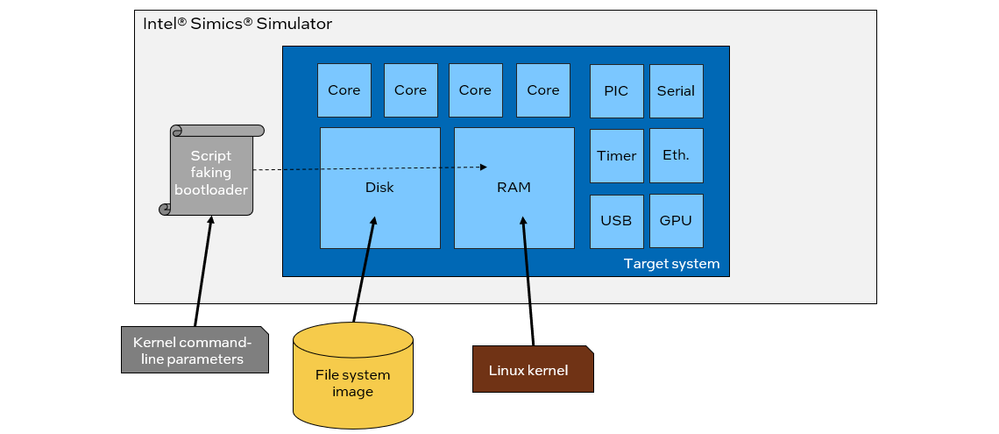

Direct Linux Kernel Boot

A further simplification to the above system startup is to skip the bootloader entirely. This means having the simulator setup script load the kernel into memory and jump to its entry point. The setup script would have to fake the bootloader-to-Linux interface. This means setting the processor state (stack pointer, memory management units, etc.) to what the operating system expects, as well as providing all required descriptors in RAM.
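The sketch below shows what such a faked handover could look like, using the RISC-V Linux boot convention as an example: register `a0` holds the hart ID, `a1` holds the address of the device tree, and `satp` is zero so that address translation is off. `FakeTarget` is a stand-in invented for this illustration and is not a Simics API; the addresses are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class FakeTarget:
    """Stand-in for a simulated machine: memory plus a register file."""
    memory: dict = field(default_factory=dict)     # address -> bytes
    registers: dict = field(default_factory=dict)  # name -> value


def direct_boot(target, kernel, kernel_addr, dtb, dtb_addr, hart_id=0):
    # Place the binaries in RAM, as a bootloader would have done.
    target.memory[kernel_addr] = kernel
    target.memory[dtb_addr] = dtb
    # Fake the bootloader-to-kernel handover (RISC-V convention).
    target.registers["a0"] = hart_id      # ID of the booting hart
    target.registers["a1"] = dtb_addr     # where the kernel finds the device tree
    target.registers["satp"] = 0          # MMU off at kernel entry
    target.registers["pc"] = kernel_addr  # jump to the kernel entry point


target = FakeTarget()
direct_boot(target, b"<kernel image>", 0x8020_0000, b"<dtb>", 0x8220_0000)
print({name: hex(value) for name, value in target.registers.items()})
```

Even in this small example, the script encodes knowledge that normally lives in the bootloader, which is why it has to track any change in the kernel interface.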

This method avoids the need to build and load a bootloader, but it also requires a script that essentially implements the bootloader inside the simulator. If the interface towards the kernel changes, the script must be updated. The script is likely complex for a “wide” bootloader-OS interface like ACPI. The approach is also not very useful for cases where system operation depends on code in the bootloader (such as System Management Mode, SMM).

How to pass the kernel command-line parameters depends very much on the circumstances, but they can often be provided through the faked bootloader.

Based on the Intel Simics simulator experience, it is typically easier to use a real bootloader.

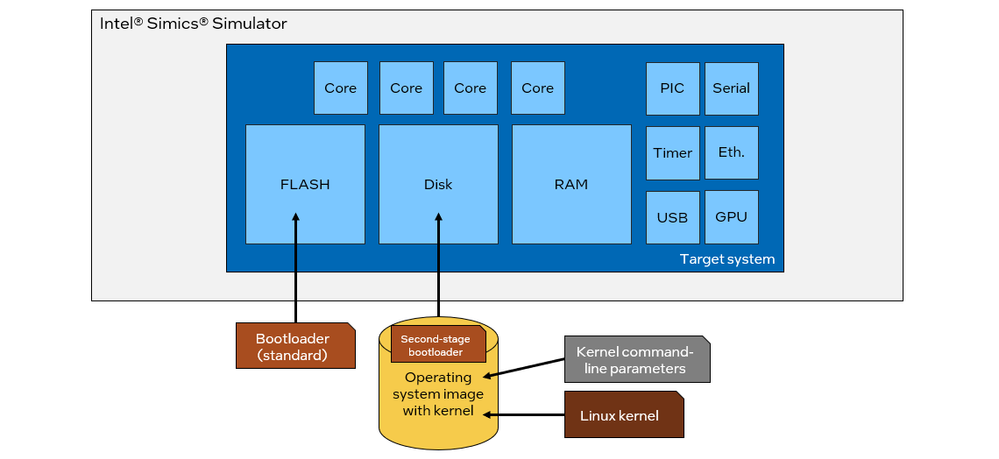

Booting from Disk

When using models of actual hardware designs, the virtual platform should boot in the same way as the real platform. This makes it easy to use real software stacks as-is since they are already built and packaged for this scenario. Having to build software in a special way for use on a virtual platform is not a good model.

Hardware setups typically store the bootloader in FLASH or EEPROM. When the system is reset, the bootloader is automatically run since it provides code located at the system “reset vector.” The bootloader starts an operating system stored on some kind of “disk” (which can be attached over NVMe, SATA, USB, SD card, or other interfaces). When working with Intel Architecture systems, the most common flow is to start from Unified Extensible Firmware Interface (UEFI) firmware. Alternatives include using the Slim Bootloader and Coreboot – the choice of bootloader is ultimately under the control of the system designer.

The handover between the bootloader and the operating system might involve additional bootloader steps, such as GNU GRUB (Grand Unified Bootloader, which is commonly used with Linux) or the Windows Boot Manager. These steps are part of the standard software flow and do not affect the simulator setup or require any scripting.

As illustrated above, this boot flow requires two files: a bootloader binary and a disk image. The bootloader would be in the same format as used on real hardware (and not an executable binary).

The Linux kernel and the kernel command-line parameters are included in the disk image. Just like on real hardware, changing the command-line parameters is achieved by starting the system, making changes to the saved configuration, and saving the updated disk image for use on the next boot.

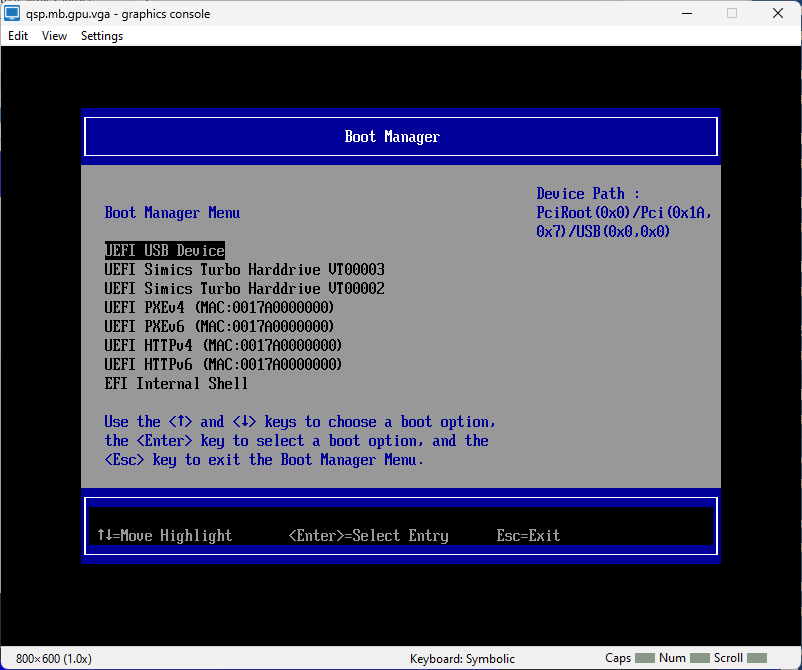

This way of booting should not require the virtual platform setup script to mimic user input to control the target system. The bootloader will be started as part of the modeled platform reset flow, and it will select a disk or device to boot from just like it does on hardware. The boot device selection depends on the target system and the bootloader. If there is only one bootable local device, you expect it to be found automatically and used as the boot device just like it works on a regular physical personal computer (PC).

Scripting might be needed in case a user wants to repeatedly boot from a device other than the default – for a single boot, just interacting directly with the virtual target as if it were a real machine is the simplest solution.

The virtual platform runs the complete real software stack from the real platform, which is exactly what you want for use cases like pre-silicon software development and long-term software maintenance. “Cheating” in the virtual platform, such as described above, would reduce the proof value of the platform tests.

However, if a developer is making frequent changes to the Linux kernel, this flow is a bit inconvenient. Each newly compiled kernel binary must be integrated into a disk image before it can be tested, which obviously takes additional steps and time. This is why the new Linux kernel boot flow described below was invented – to allow the use of a real virtual platform with a real software stack but still let the Linux kernel be easily replaced.

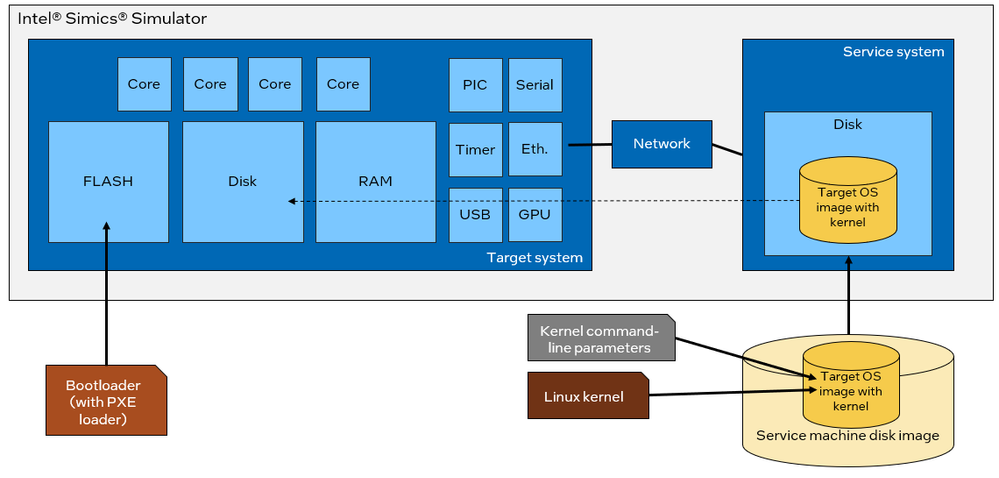

Network Boot

Not all real systems boot from disks. A common alternative, especially in data centers and rack-based embedded systems, is to boot over the network. This simplifies deployments as there is no need to individually update each and every system when upgrading or patching the software. Instead, bootable disk images are stored on a central server and handed out to systems when they start.

The standard method for network-based booting is the Intel-invented PXE (Preboot Execution Environment). In PXE, the bootloader reaches out to a network server to retrieve the disk image to boot instead of booting from a local disk. The network boot can be a multiple-stage process that pulls in successively more capable binaries and disk images.

The firmware image that does the initial boot of the target system is loaded into the virtual platform FLASH like in a standard disk-based boot scenario.

The key problem is establishing a network counterpart to the virtual platform. This can be accomplished by connecting the virtual platform to the local lab network of the machine that runs the virtual platform. Unfortunately, this basically requires that the virtual platform is connected directly to the lab network using a TAP solution or similar, which is not typically possible in IT-managed networks without special permissions. The problem is that the first phase of PXE booting relies on Dynamic Host Configuration Protocol (DHCP), which is not very good at traversing NAT routers.

A more robust and technically simpler solution is to add one or more additional machines to the simulated network that provide the services needed, as illustrated above. The boot image or images would be part of the file system of the service system, and the service system would be running all the required servers. The file system served this way is essentially the same as what is used in a standard disk boot, just provided in a different way.

Note that a network-based boot requires that the target system is mature enough that it has a working network adapter and support for the network adapter in the bootloader.

The New Linux Kernel Boot Flow

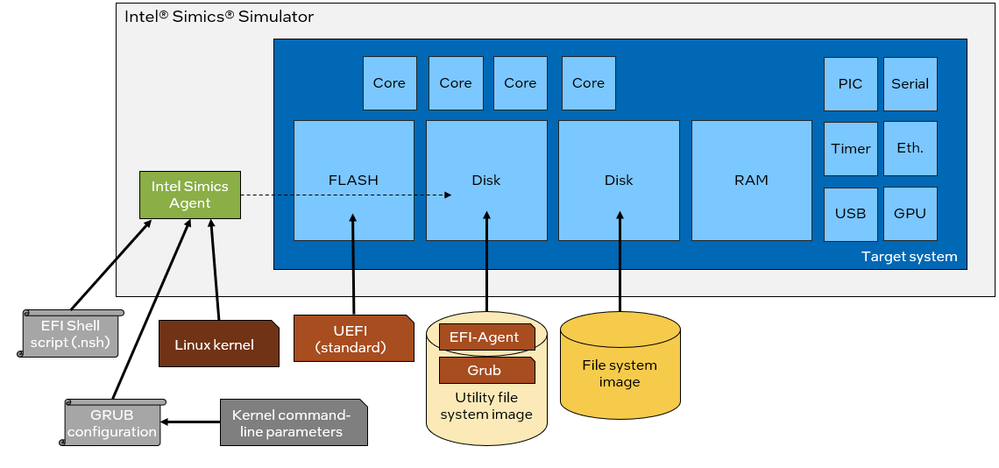

With that background, we are ready to describe the new Linux Kernel boot flow for UEFI-based targets running in the Intel Simics simulator. This flow starts from the same point as the direct kernel boots described above, with a Linux kernel binary, a kernel command-line parameter set, and a root filesystem image. The target system is assumed to have a UEFI bootloader already in place as part of the standard target setup, using a disk-based boot.

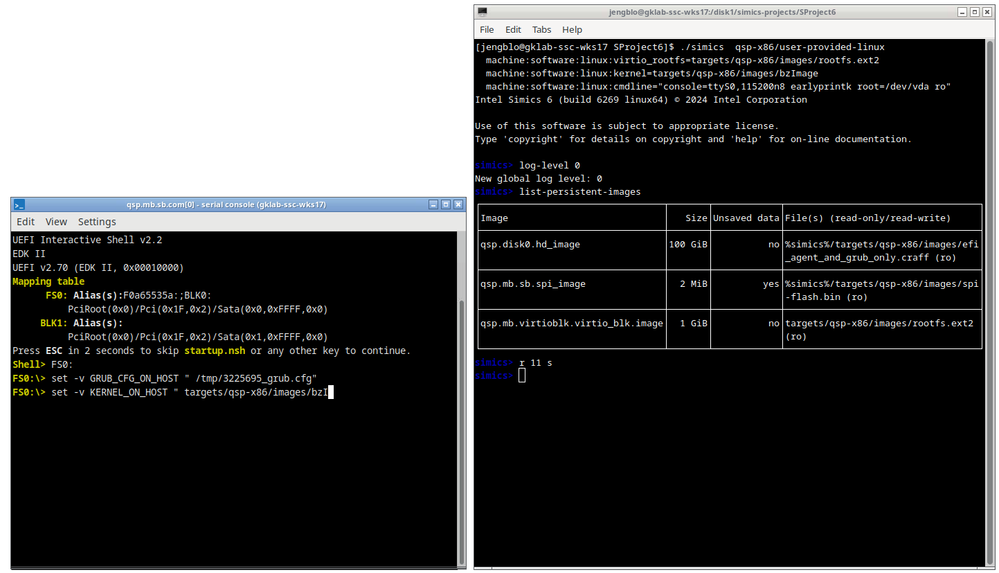

There are two disks in the target system. The first disk, the utility disk, contains a prebuilt file system with two critical elements for the flow: an Intel Simics Agent target binary and a GRUB binary. The second disk is used to hold the root file system, set up in the same way as the standard disk boot discussed above. The key “trick” employed is to put the Linux kernel on the utility disk, allowing a standard disk-based boot from a disk image that is configured on the fly. This avoids the complexities of placing a kernel into RAM.

The Intel Simics Agent system is used to enable this flow. The agent system provides a fast and robust way to move files from the host into the target system software stack, driven from either the simulator side or from the target software side. The agent uses “magic instructions” to communicate directly between the target software and the simulator (and thus the host). The file transfers could, in theory, be accomplished using networking, but that would be much more brittle and slower.

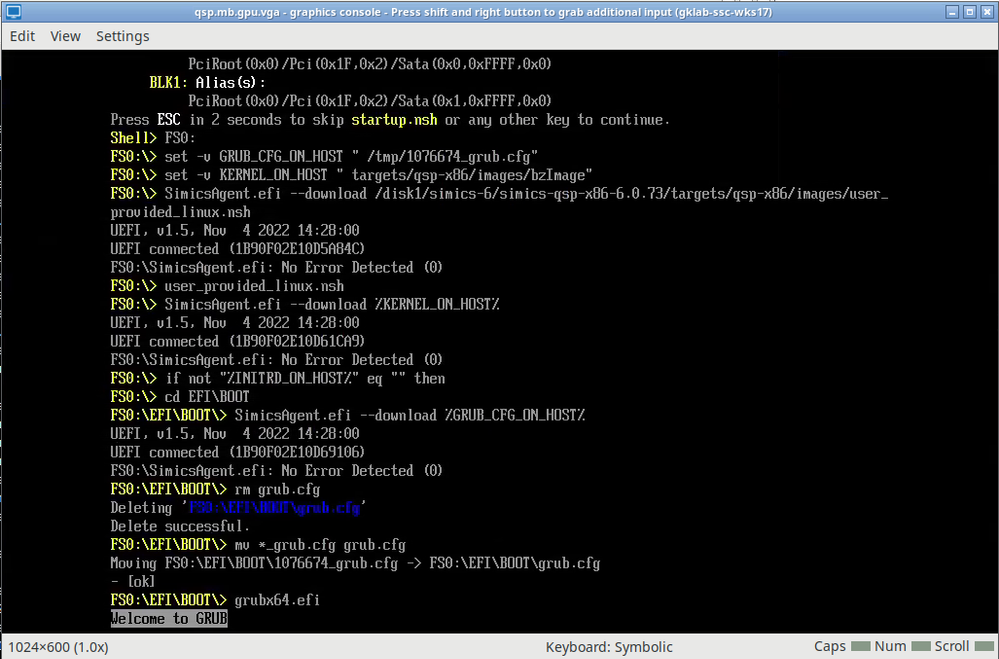

The system boot is automated from an Intel Simics simulator startup script. The script creates a temporary GRUB configuration file based on the kernel command-line parameters and the name of the kernel binary. It scripts the UEFI on the target to boot into the EFI shell and then fetches an EFI shell script from the host (using the agent). This script takes over, driving the boot operation from the EFI shell.

The kernel image and generated GRUB configuration file are copied from the host into the utility disk using the agent system (invoked from the EFI shell script). The final step is to invoke GRUB to boot into the Linux kernel stored on the utility disk.
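The generated GRUB configuration can be very small. The sketch below shows one way to produce such a file from a kernel file name and a command line. It is an illustration only: the actual configuration produced by the startup script is not shown in this post, and the function name, menu title, and kernel path are assumptions.

```python
def make_grub_config(kernel_path: str, cmdline: str,
                     title: str = "Custom Linux kernel") -> str:
    """Build a single-entry GRUB configuration that boots immediately."""
    return "\n".join([
        "set timeout=0",   # do not wait for user input
        "set default=0",   # boot the first (and only) menu entry
        f'menuentry "{title}" {{',
        f"    linux {kernel_path} {cmdline}",
        "}",
        "",
    ])


config = make_grub_config(
    "/bzImage", "console=ttyS0,115200n8 earlyprintk root=/dev/vda ro"
)
print(config)
```

Because the command line is simply text inside this file, changing kernel parameters only requires regenerating the file before the next boot.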

This virtual platform setup flow is intended to support quick Linux kernel development and testing. A recompiled kernel can be applied directly to a basically unmodified Intel Simics virtual platform model (adding a disk does not change the core platform model since hot-pluggable interfaces are used). There is no need to package the kernel inside a disk image. Changing kernel command-line parameters is equally easy.

There is flexibility in just how the root file system is inserted into the platform. The default is to put a disk image on a PCIe-attached virtio block device, but it could also be put on a model of a hardware disk like NVMe or SATA. In addition, the root file system can be supplied using a virtiofs PCIe paravirtual device. In this case, the file system content is provided from a directory on the host instead of being packaged into a file-system image. This requires running a virtiofs daemon on the host with privileges, which can be an issue in some environments. The kernel command-line parameters tell the kernel where to find the root file system.
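The choice of device shows up in the `root=` part of the command line. The sketch below maps each option to the device name Linux typically assigns to the first device of that kind. These names are assumptions: they presume an unpartitioned image on the first device of each type, and the virtiofs tag `rootfs` is hypothetical. The actual values depend on the platform configuration and the kernel.

```python
# Typical Linux names for the first device of each kind (assumptions).
ROOT_ARGS = {
    "virtio-blk": "root=/dev/vda",
    "nvme": "root=/dev/nvme0n1",
    "sata": "root=/dev/sda",
    "virtiofs": "rootfstype=virtiofs root={tag}",
}


def build_cmdline(backend, console="ttyS0,115200n8", read_only=True,
                  tag="rootfs"):
    """Assemble a kernel command line for the chosen root file system backend."""
    try:
        root = ROOT_ARGS[backend].format(tag=tag)
    except KeyError:
        raise ValueError(f"unknown root file system backend: {backend}") from None
    mode = "ro" if read_only else "rw"
    return f"console={console} {root} {mode}"


for backend in ROOT_ARGS:
    print(f"{backend:10} -> {build_cmdline(backend)}")
```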

simics> load-target "qsp-x86/user-provided-linux" machine:software:linux:virtio_rootfs = targets/qsp-x86/images/rootfs.ext2 machine:software:linux:kernel = targets/qsp-x86/images/bzImage machine:software:linux:cmdline = "console=ttyS0,115200n8 earlyprintk root=/dev/vda ro"
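When iterating over many kernel builds, it can be convenient to generate this command line from a build script. The sketch below simply mirrors the format of the command shown above. The helper name is made up, and the quoting rule (quote only values that contain whitespace) is an assumption based on that one example rather than on the simulator documentation.

```python
def build_load_target(target: str, params: dict) -> str:
    """Format a load-target command in the style of the example above."""
    parts = [f'load-target "{target}"']
    for name, value in params.items():
        text = str(value)
        if any(ch.isspace() for ch in text):
            text = f'"{text}"'  # quote values that contain spaces
        parts.append(f"{name} = {text}")
    return " ".join(parts)


prefix = "machine:software:linux"
command = build_load_target(
    "qsp-x86/user-provided-linux",
    {
        f"{prefix}:virtio_rootfs": "targets/qsp-x86/images/rootfs.ext2",
        f"{prefix}:kernel": "targets/qsp-x86/images/bzImage",
        f"{prefix}:cmdline": "console=ttyS0,115200n8 earlyprintk root=/dev/vda ro",
    },
)
print(command)
```

Swapping in a freshly built kernel then comes down to changing the value of the `kernel` parameter.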

Since all the files required by the UEFI boot are stored on a disk image, rebooting the platform just works. The UEFI will find a bootable device in the form of the utility disk, containing GRUB, its configuration, and the custom Linux kernel.

It should also be noted that all target-operating-system dependencies are encapsulated in the EFI shell script. It could be rewritten to support booting other operating system kernels, provided they have the same kind of “separable” kernel that can live outside their variant of the root file system.

This Was Just the Beginning

Booting a virtual platform might seem like an easy problem, but there are many possible solutions, some rather intricate. As always, the key is to build a model that simulates the hardware enough to allow testing interesting scenarios, while also being as convenient as possible for the software developers. What that means obviously depends on the user and their use cases.

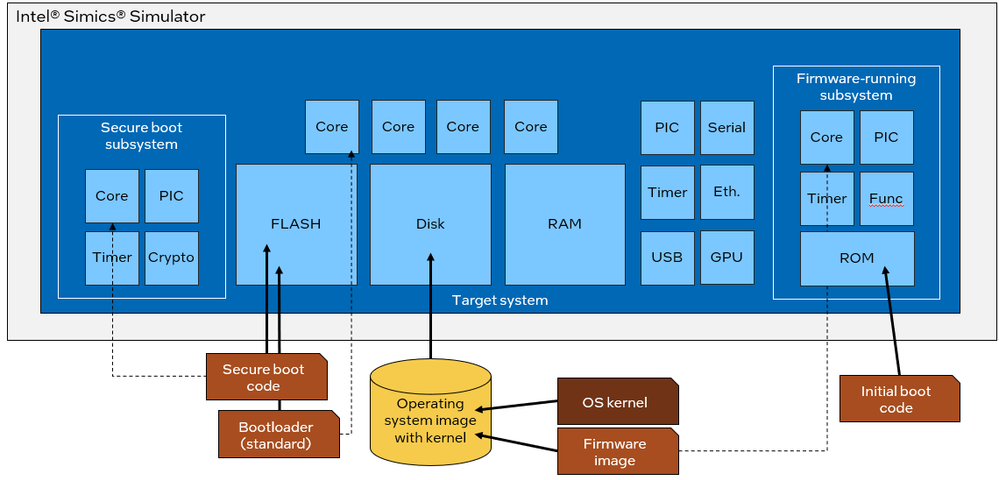

The models and boot flows can get a lot more intricate than what is described in this blog post. A real system usually does not start with the bootloader running on the main processor cores. Instead, various hidden subsystems are used for the earliest initialization and only hand over to the visible bootloader after some basic tasks have been completed.

For example, as illustrated in the picture above, the very early system start might be executed by a security subsystem with its own processor. That processor might find its boot code in the same FLASH as the general bootloader, or it might have its own local memory.

As the main operating system image is booting, it might also be starting programmable subsystems and loading firmware into them. The subsystem firmware is typically stored on the operating-system disk image. The subsystems usually also feature a small ROM (Read-Only Memory) providing the initial boot code for the subsystem – to get it to a state where the operating system driver can load the production firmware.

Jakob Engblom works at Intel in Stockholm, Sweden, as director of simulation technology ecosystem. He has been working with virtual platforms and full-system simulation in support for software and system acceleration, development, debug, and test since 2002. At Intel, he helps users and customers succeed with the Intel Simics Simulator and related simulation technologies - with everything from packaged training to hands-on coding and solution architecture. He participates in the ecosystem for virtual platforms including in Accellera workgroups and the DVCon Europe conference.
