Authors: Rahul Unnikrishnan Nair, Ralph de Wargny, Ryan Metz, Eugenie Wirz

In 2023, we've witnessed not just growth in the AI and deep-tech sectors, but an explosion of innovation and disruption reminiscent of the internet's wild days in the ’90s. This report cuts through the noise to give you a clear view of what's on the horizon for 2024. Intel Liftoff startups have been significant contributors to the industry's progress over the past year, and their insights on the most notable trends and developments of 2023 in areas such as AI, Machine Learning, and Data Analytics serve as barometers for the industry's direction and pace in the coming year.

AI: THE BIG PICTURE

The AI market is on a steep upward trajectory, projected to reach $407 billion by 2027, up from $86.9 billion in 2022. Businesses expect AI to have a significant impact on productivity, with 64% anticipating that it will enhance their operations. At the same time, AI is predicted to create 97 million new jobs, easing some concerns about automation-driven job displacement.
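As a rough back-of-the-envelope check on how steep that trajectory is, the two market-size figures imply a compound annual growth rate (CAGR) over the five years from 2022 to 2027 of roughly 36%. This is simply derived from the two projections quoted here, not an additional forecast:

```latex
\text{CAGR} = \left(\frac{V_{2027}}{V_{2022}}\right)^{1/(2027-2022)} - 1
            = \left(\frac{407}{86.9}\right)^{1/5} - 1
            \approx 4.68^{0.2} - 1
            \approx 0.362
```

In other words, the projection assumes the market grows by about 36% per year, compounded.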

Intel Liftoff and its member startups have been integral to these developments. In 2023, foundational work in GenAI set the stage for broader application in 2024, when real-world challenges are expected to be addressed more robustly. The report discusses how Intel Liftoff is scaling to meet the opportunities of the evolving AI ecosystem, highlighting trends such as the adoption of multi-modal Generative AI, the emergence of GPT-4-grade open-source models, and the rapid commoditization of AI capabilities via APIs. It also touches on the challenges ahead, including the proliferation of deep-fakes and the need for specialized, industry-specific AI solutions.

The report provides an overview of the next wave of innovation and growth in the AI sector, focusing on the practical and strategic implications for businesses and the wider industry.

Intel Liftoff: 2024 Insights and Trends from the AI Ecosystem

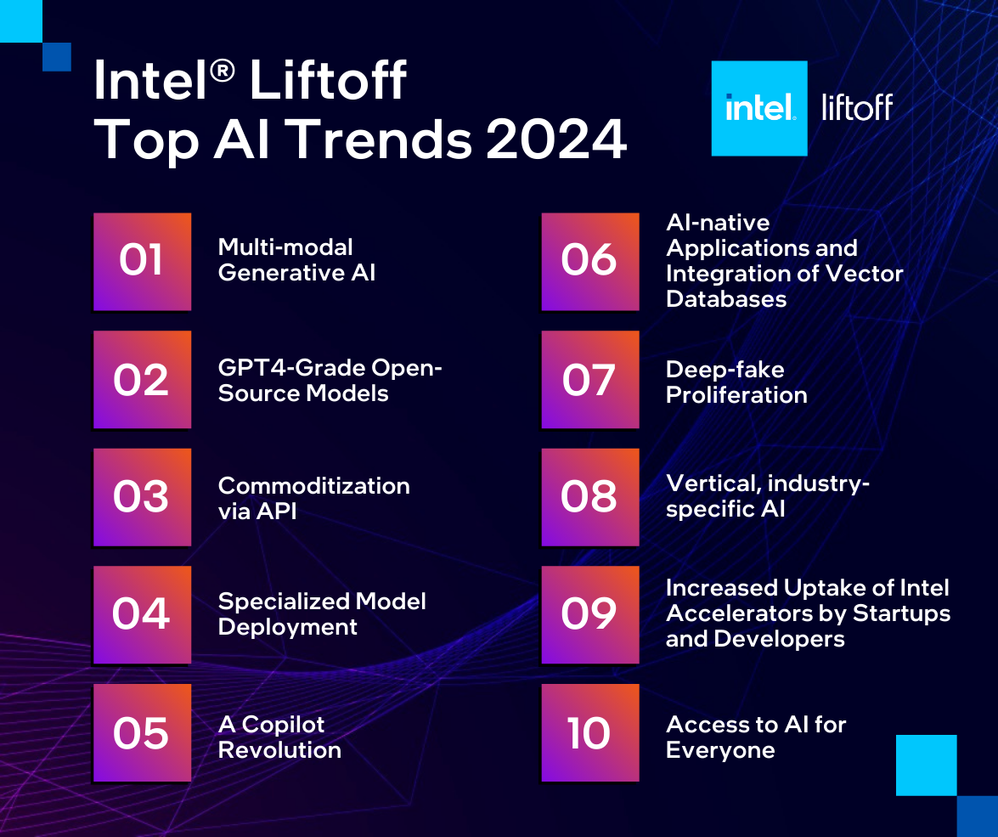

2023 was the year GenAI broke into the mainstream. In 2024, innovators will apply it to real-world problems and bring new solutions to market, and Intel Liftoff will scale with the opportunity. The trends the Intel Liftoff team and program members are observing in the AI ecosystem include:

- Multi-modal Generative AI: Models combining text, speech, images, or video will increasingly be the focus of startup innovation. Many startups will push the boundaries and create real-world applications using multi-modal and Mixture of Experts (MoE) generative AI models, such as Mistral AI’s recently released MoE model, Mixtral.

- GPT-4-Grade Open-Source Models: The moment open-source models beat proprietary ones for specific use cases, or in terms of demand, will mark a significant turning point for the startup ecosystem. The choice between API-based and self-hosted models will no longer hinge purely on model quality; it will also weigh privacy, control, convenience, and cost.

- Commoditization via API: APIs let founders bootstrap SaaS startups quickly, making it easier for developers to build complex applications. APIs from the likes of Hume AI, ElevenLabs, OpenAI, together.ai, and Prediction Guard are widely used, and the time it takes to build an AI-powered SaaS service has dropped dramatically, from months to days.

- Specialized Model Deployment: Increased deployment of smaller, specialized models rather than large, general-purpose ones, especially at the edge on client devices.

- Copilot Revolution: Anyone will be able to become a developer with development copilots. This will create a broad range of opportunities for founders to develop applications and services (TurinTech). Also, the productivity of knowledge workers will be boosted with specialized copilots (The Mango Jelly).

- Development of AI-native applications and integration of vector databases like Weaviate, agent frameworks like LangChain and LlamaIndex, LLMs as compilers, and Retrieval Augmented Generation (RAG) will lead to new kinds of products built on composable AI.

- With advancements in multi-modal models, we expect to see increased deep-fake proliferation. The realism achieved using the latest models can be a substantial challenge—one that startups must meet.

- Vertical, industry-specific AI solutions will allow startups to leapfrog the competition in healthtech (e.g., drug discovery startup Scala Bio), manufacturing, fintech, edtech, and agritech.

- Increased use of Intel accelerators by startup developers, who are attracted by the equitable availability of a cost-efficient accelerator line-up for inference, fine-tuning, and foundation model development, which makes AI development more sustainable for growing companies.

- AI Everywhere must mean access to AI for everyone, irrespective of geographical location or demographic. This will require it to be both ubiquitous and cost-effective. Intel is well-positioned to realize this vision, contrasting with solutions on the market, which currently offer more exclusive access.

Top 2024 Trends from Our Intel Liftoff Members: Startups Forecasting the Future in Their Own Words

To highlight the growing trends impacting the AI and deep-tech industries, we interviewed a few Intel Liftoff members.

Argilla

David Berenstein, Founder

Argilla is an open-source NLP (Natural Language Processing) company that focuses on simplifying processes for users and fostering collaboration within the open-source community.

What was the most significant development or trend in your specific specialization during 2023?

In the landscape of Natural Language Processing (NLP) and Machine Learning (ML), 2023 witnessed a pivotal shift with the ascendancy of ChatGPT and other closed-source Large Language Model (LLM) providers. Access to these powerful models via an easy-to-use API underscored the transformative potential of advanced language models across a wide array of applications for both technical and non-technical end-users, leading to a surge in NLP adoption among enterprises.

For technical end-users specifically, this shift was characterized by a strong emphasis on three aspects:

- the development of larger models to achieve superior quality,

- the intense competition between closed-source models and their open-source counterparts,

- the imperative for data curation to enhance model quality and mitigate unwanted side-effects such as toxicity and dissemination of false information.

What do you expect will be the major driving factors or trends in Enterprise AI in 2024?

Looking ahead to 2024, the industry is set for further evolution, marked by an emphasis on companies honing their own Large Language Models (LLMs) to diminish reliance on the few external LLM providers. This shift signifies a move away from a universal solution approach, as organizations recognize the necessity of customizing models to meet their unique needs and goals. Expectations are high for an upswing in the adoption of smaller, more specialized models, reflecting a strategic shift towards privacy, efficiency, and precision.

Notably, leading open-source LLMs such as Llama 2, Mistral, and Zephyr are expected to play a crucial role as the foundation for generating data and distilling information from models. This collaborative approach suggests a trend where industry participants leverage established models to augment their capabilities, fostering a symbiotic relationship between innovation and practical application.

ASKUI

Jonas Menesklou, Founder

ASKUI is an automated UI testing tool that doesn’t go under the hood of the web app. Instead, it sits in the same position as a user and uses artificial intelligence to identify and test different UI elements.

What was the most significant development or trend in your specific specialization during 2023?

We have witnessed widespread adoption of Large Language Models (LLMs) in existing products, and enterprises have begun to explore use cases with them. The most significant aspect is how LLMs comprehend unstructured data.

What do you expect will be the major driving factors or trends in your industry in 2024?

Workflow automation and test automation will be further propelled by the integration of multiple AI models. In particular, the combination of vision and language will play a crucial role in how we create and execute workflows.

Prediction Guard

Daniel Whitenack, Founder and CEO

Prediction Guard helps you deal with unruly output from Large Language Models (LLMs). Get typed, structured and compliant output from the latest models with Prediction Guard and scale up your production AI integrations.
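To make "typed, structured and compliant output" concrete, here is a minimal, hypothetical sketch of the kind of post-processing check such tooling performs: parse the model's raw text as JSON, confirm the expected fields and types, and enforce a simple compliance rule. The schema (`sentiment`, `confidence`), the allowed labels, and the function and exception names are illustrative assumptions; this is not Prediction Guard's API or implementation.

```python
import json
from typing import Any

# Hypothetical schema for illustration: field name -> expected Python type.
EXPECTED_FIELDS: dict[str, type] = {"sentiment": str, "confidence": float}
# Hypothetical compliance rule: only these labels are acceptable.
ALLOWED_SENTIMENTS = {"positive", "negative", "neutral"}


class OutputValidationError(ValueError):
    """Raised when raw model output does not match the expected structure."""


def validate_llm_output(raw: str) -> dict[str, Any]:
    """Parse raw LLM text as JSON and check it against EXPECTED_FIELDS."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise OutputValidationError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise OutputValidationError("expected a JSON object")
    # Every expected field must be present and have the expected type.
    for name, expected_type in EXPECTED_FIELDS.items():
        if name not in data:
            raise OutputValidationError(f"missing field: {name}")
        if not isinstance(data[name], expected_type):
            raise OutputValidationError(
                f"field {name!r} should be {expected_type.__name__}"
            )
    # Compliance checks on the values themselves.
    if data["sentiment"] not in ALLOWED_SENTIMENTS:
        raise OutputValidationError(f"unexpected sentiment: {data['sentiment']!r}")
    if not 0.0 <= data["confidence"] <= 1.0:
        raise OutputValidationError("confidence must be between 0 and 1")
    return data
```

A call such as `validate_llm_output('{"sentiment": "positive", "confidence": 0.92}')` returns the parsed dictionary, while malformed JSON, a missing field, or an out-of-range value raises `OutputValidationError`, so an application can retry or reject the response instead of passing it downstream.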

What was the most significant development or trend in your specific specialization during 2023?

Companies caught a glimpse of the possibilities of LLM applications in 2023. Many created compelling prototypes integrating their own data into question answering, chat, content generation, and information extraction applications. At the same time, the deployment and scaling of these prototypes turned out to be more complicated than people initially thought both in terms of: (1) dealing with risk issues (like privacy, security, hallucinations, toxicity, etc.); and (2) maintaining reliable LLM integrations. Many enterprises are looking for a path forward from generative AI prototype to usage at scale, and they are turning to open models hosted in secure platforms like Prediction Guard to achieve their AI ambitions.

What do you expect will be the major driving factors or trends in your industry in 2024?

Now that the value of LLM-driven applications has been established, many more enterprises will achieve actual adoption of the technology in 2024. Open-access models will drive much of this adoption as more and more models achieve GPT-4-level performance (with many already surpassing GPT-3.5 on many tasks). Companies will seek to deploy their own AI stacks, with increasingly good infrastructure support and tooling around the models (for validating outputs, preventing data leaks, and dealing with security risks).

Selecton Technologies Inc.

Yevgen Lopatin, Founder

Selecton Technologies is a company dedicated to exploring and researching AI technologies to achieve a breakthrough in the human buying experience.

What was the most significant development or trend in your specific specialization during 2023?

Our specialization lies in AI and ML, with a focus on NLP models. The trend of 2023 was marked by an emerging variety of large-scale generative AI models, encompassing both NLP and computer vision. These models have achieved a level of understanding and generalization previously unseen.

Foundational LLMs are universal, offering capabilities to maintain dialogue, extract knowledge, solve tasks, and more. They are sufficiently flexible to be customized or fine-tuned for specific applications. Importantly, in 2023, many cutting-edge generative models were open-sourced, facilitating extensive independent research across various application domains.

We also observed the emergence of frameworks and tools like LangChain for creating complex solutions that integrate several generative and predictive ML models, tailored for specific use cases.

Another notable trend is the increased application of advanced training and fine-tuning methods to language models, such as Reinforcement Learning from Human Feedback (RLHF), instruction tuning, and LoRA. These techniques enable the design and construction of domain-specific generative models with unique properties, such as enhanced logical reasoning, task-solving, and expertise.

What do you expect will be the major driving factors or trends in your industry in 2024?

For 2024, we can expect further advancements in Generative AI, with models and solutions inching closer to Artificial General Intelligence. One notable trend will be a shift towards multi-modality, involving the creation of generative models capable of handling text, image/video, and sound data simultaneously. These models will also be highly customizable, able to solve tasks involving numerical data, databases, or programming code, and may include modalities like 3D models, game characters or levels, 3D environments, bioinformatics data, and more.

Another evolution in AI will be the development of generative agents that operate in various environments. These include engaging in dialogues with humans, completing cooperative tasks, exploring data and extracting knowledge, and applications in game worlds and robotics.

A significant driving factor will be the optimization of large ML models. They will achieve comparable performance and results with significantly fewer weights and reduced computational resource consumption.

Finally, we anticipate the emergence of new paradigms of data collection involving crowdsourcing from real users. This data will be gleaned from actual interactions of users with large generative AI models when fulfilling real-world requests.

SiteMana

Peter Ma, Founder

SiteMana makes AI predictions about anonymous website visitors. Its machine learning uncloaks your anonymous traffic's individual identity, likelihood to purchase, and how to act on it, helping you cheat the conversion game.

What was the most significant development or trend in your specific specialization during 2023?

In 2023, SiteMana made groundbreaking strides in the realm of generative AI, particularly in enhancing Large Language Models (LLMs) for crafting sales copy and opinion pieces. Leveraging the power of Intel Developer Cloud, we fine-tuned our LLMs not only to generate compelling content but also to realize significant site conversion lifts. This innovation has positioned us at the forefront of AI-driven content creation, offering unparalleled accuracy and effectiveness in our specialized field.

What do you expect will be the major driving factors or trends in your industry in 2024?

For 2024, we foresee a trend towards more specialized Gen AI applications. The evolving needs of our customers continually push the boundaries of generalized AI capabilities. At SiteMana, we're geared to lead this shift, particularly in the B2B sector, by honing our expertise in online sales copywriting and opinion content writing. We believe this focused approach will drive the industry to meet specific, nuanced business needs, marking a new era of AI specialization.

StarWit Technologies

Markus Zarbock, Founder

StarWit Technologies wants to make cities smarter and more livable through computer vision. For example, that means improving public transport by feeding computer vision data into an intelligent traffic simulation system, and improving traffic planning.

What was the most significant development or trend in your specific specialization during 2023?

We launched our company in April 2023 and have since been developing software in the field of computer vision and AI. The most interesting technical insight we've discovered is that inferencing on video material can be performed quickly enough on regular CPUs for a number of use cases. We are conducting two pilot projects for smart city infrastructure: one in Carmel, Indiana, in the US, and one here in Wolfsburg, Germany. The upcoming months are shaping up to be very exciting!

What do you expect will be the major driving factors or trends in your industry in 2024?

We are striving to track moving objects in video streams using a purely CPU-based approach. Historically, these algorithms have only run on GPUs with reasonable performance, but we anticipate this changing in 2024. Our goal is to democratize AI by enabling everything to run on mainstream CPUs.

Titan ML (TyTN)

Meryem Arik, Co-founder and CEO

TyTN is building an enterprise training and deployment platform that compresses and optimises NLP models for deployment, allowing businesses to deploy significantly cheaper, faster models in a fraction of the time.

What do you expect will be the major driving factors or trends in Enterprise AI in 2024?

In 2024, the landscape of Enterprise AI is expected to be influenced by several key trends and driving factors, according to Meryem Arik, Co-founder and CEO of Titan ML. A major development will be the availability of GPT-4-quality open-source models, democratizing high-quality AI tools. Mixture of Experts models are anticipated to lead open-source leaderboards, signaling a shift in model preference. The application of AI will become increasingly multi-modal, moving beyond chat applications to dominate the enterprise sphere with a variety of non-chat functionalities. There will also be a heightened focus on improving throughput and scalability to accommodate growing demands. Additionally, the integration of AMD and Intel accelerators will become more prevalent, enhancing computing power and efficiency.

Lastly, there will be a significant emphasis on building enterprise applications with interoperability to ensure seamless integration and functionality across diverse systems and platforms.

TurinTech AI

Mike Basios, CTO

Powered by proprietary ML-based code optimization techniques and LLMs, TurinTech optimizes code performance for machine learning and other data-heavy applications, helping businesses become more efficient and sustainable by accelerating time-to-production and reducing development and compute costs.

What was the most significant development or trend in your specific specialization during 2023?

In 2023, the landscape for developer tools was revolutionized by GPT-4 and other Large Language Models (LLMs) tailored for programming, such as Meta's Code Llama. Fueled by these foundational models, generative AI code generation tools like GitHub Copilot have seen increased adoption in enterprises, with new contenders also entering the market, indicating robust demand for smarter developer tools. At TurinTech AI, we've stayed ahead of the curve with our proprietary LLMs, specifically trained to enhance code performance.

What do you expect will be the major driving factors or trends in your industry in 2024?

As we look towards 2024, the landscape is primed for an expansion of LLM-powered tools that will redefine the entire Software Development Lifecycle. The pace of GenAI innovation is crucial for staying ahead, yet the development and deployment of GenAI applications bear significant costs. There's a growing commitment to AI technologies that reduce expenses and increase operational efficiency. Intel's latest AI hardware and software portfolio leads this charge, making AI more accessible and cost-effective. Partnering with leaders like Intel, we're poised to empower more businesses to accelerate AI innovation and adoption, making it smarter and greener without breaking the bank.

Weaviate

Bob van Luijt, Founder and CEO

Weaviate is an open-source vector search engine that stores both objects and vectors, allowing for combining vector search with structured filtering with the fault-tolerance and scalability of a cloud-native database, all accessible through GraphQL, REST, and various language clients.
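The idea of combining vector search with structured filtering can be illustrated with a toy, in-memory sketch: filter stored objects on their properties first, then rank the remaining ones by cosine similarity to a query vector. The class and function names here are hypothetical, the brute-force scan is for clarity only, and this is not Weaviate's API or internal design; Weaviate exposes this capability through GraphQL, REST, and its language clients.

```python
import math
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class StoredObject:
    """An object stored alongside its embedding vector (hypothetical layout)."""
    id: str
    vector: list[float]
    properties: dict[str, Any] = field(default_factory=dict)


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def filtered_vector_search(
    objects: list[StoredObject],
    query: list[float],
    where: Callable[[dict[str, Any]], bool],
    limit: int = 3,
) -> list[tuple[str, float]]:
    """Apply a structured filter, then rank survivors by similarity to the query.

    Brute-force scan for illustration; production engines use indexes instead.
    """
    # Structured filtering: keep only objects whose properties match.
    candidates = [obj for obj in objects if where(obj.properties)]
    # Vector search: score each remaining object against the query vector.
    scored = [(obj.id, cosine_similarity(obj.vector, query)) for obj in candidates]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:limit]
```

For example, with a few stored objects tagged by a `lang` property, `filtered_vector_search(docs, [1.0, 0.0], where=lambda p: p["lang"] == "en")` considers only the English objects and returns their IDs ordered by similarity. Filtering before ranking keeps the sketch simple; real engines may combine the two steps differently for speed.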

What was the most significant development or trend in your specific specialization during 2023?

In 2023, developers worldwide were empowered to build AI-native applications with capabilities and performance surpassing those of the past. One example is Stack Overflow, which is creating a more intuitive search experience using large language models that respond to human language and sentiment, moving beyond rigid keyword searches. As part of this evolution towards AI-native applications, vector databases such as Weaviate have become a fundamental component of the technology stack.

What do you expect will be the major driving factors or trends in your industry in 2024?

While 2023 was a year of learning and prototyping with generative AI, in 2024, companies—both large and small—will begin to standardize their AI-native tech stacks and move applications into production. Organizations will start to realize the business value of their investments in AI, as use cases continue to mature and evolve.

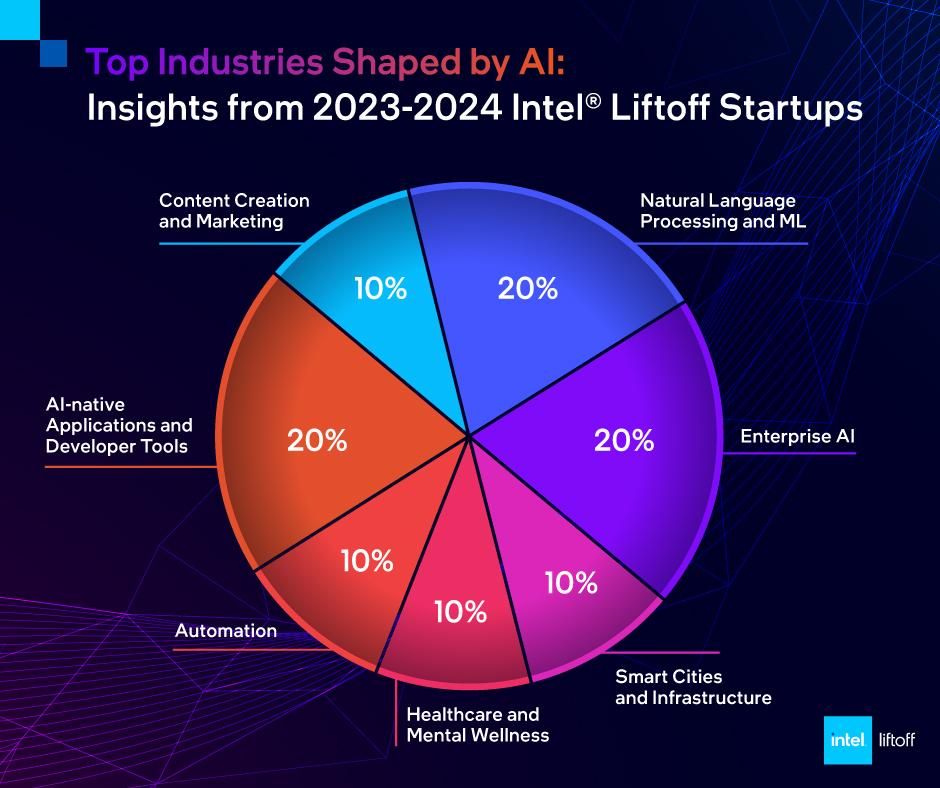

A visual representation of the sectors most influenced by current and upcoming technological trends.

Here Are Three Key Messages We Want To Leave With The AI Community:

Embrace Open Innovation: The rise of open-source models and tools is democratizing AI development. Engage with these resources to drive innovation and inclusivity in the field.

Prepare for Ethical Challenges: With the proliferation of deep-fakes and the increasing sophistication of AI, ethical considerations will become more pressing. This includes transparency, fairness, privacy, and security. AI developers and companies should commit to creating and deploying AI responsibly.

Focus on Specialization and Accessibility: The future of AI lies in specialized applications and making technology accessible to all. Strive for excellence in your niche while ensuring your innovations contribute to making AI ubiquitous and cost-effective.

Launchpad to Innovation: Join Intel® Liftoff for Startups

To capitalize on the trends outlined in this report and drive innovation in the deep-tech and AI space, consider participating in Intel® Liftoff for Startups.

Our virtual accelerator program offers early-stage tech startups access to Intel's technological leadership and resources. Apply now to align your startup with the industry's leading edge and transform insights into action.
