Published May 10th, 2022

Image credit: REDPIXEL via Adobe Stock.

Gadi Singer is Vice President and Director of Emergent AI Research at Intel Labs, leading the development of the third wave of AI capabilities.

Advancing Machine Intelligence: Why Context Is Everything

Most of us have heard the phrase, “Image is everything.” But when it comes to taking AI to the next level, it’s context that is everything.

Contextual awareness embodies all the subtle nuances of human learning. It is the ‘who’, ‘what’, ‘when’, and ‘why’ that inform human decisions and behavior. Without context, current foundation models are destined to spin their wheels and, ultimately, to derail the expected trajectory of AI improving our lives.

This blog will discuss the significance of context in ML, and how late binding context could raise the bar on machine enlightenment.

Why Context Matters

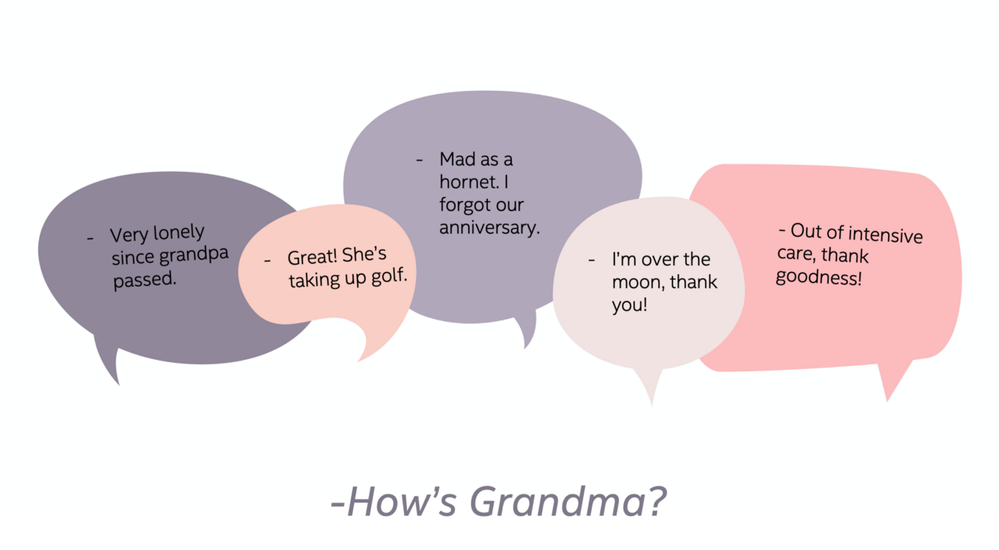

Context is so deeply embedded in human learning that it is easy to overlook the critical role it plays in how we respond to a given situation. To illustrate this point, consider a conversation between two people that begins with a simple question: How is Grandma?

In a real-world conversation, this simple query could elicit any number of potential responses depending on contextual factors, including time, circumstance, relationship, etc.:

Fig 1. A proper answer to “How’s Grandma?” is highly context-dependent. Image credit: Intel Labs.

The question illustrates how the human mind can track and take into account a vast amount of contextual information, even subtle humor, to return a relevant response. This ability to fluidly adapt to a variety of often subtle contexts is well beyond the reach of modern AI systems.

To grasp the significance of this deficit in machine learning, consider the development of reinforcement learning (RL)-based autonomous agents and robots. Despite the hype and success that RL-based architectures have had in simulated game environments like Dota 2 and StarCraft II, even purely gaming environments like NetHack pose a formidable obstacle to current RL systems, due to the highly conditional nature and complexity of the policies required to win the game. Similarly, as noted in many recent works, autonomous robots have miles to go before they can interact with previously unseen physical environments without a serious engineering effort, either to simulate the correct type of environment prior to deployment or to harden the learned policy.

Current ML and Handling of Contextual Queries

With some notable exceptions, most ML models incorporate very limited context for a specific query, relying primarily on the generic context provided by the dataset the model is trained or fine-tuned on. Such models also raise significant concerns about bias, which makes them less suited for many business, healthcare, and other critical applications. Even state-of-the-art models like D3ST, used in voice assistant AI applications, require manually written descriptions of schemata or ontologies listing the possible intents and actions the model needs in order to identify context. While this involves a relatively minimal level of handcrafting, it still means that explicit human input is required every time the context of the task is updated.

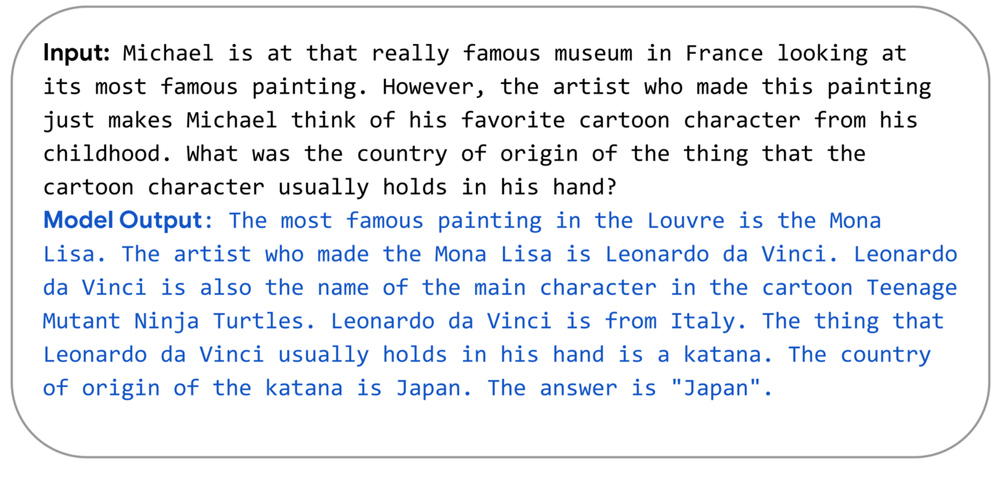

That’s not to say there haven’t been significant developments in context awareness for machine learning models. GPT-3, a famous large language model from the OpenAI team, has been used to generate full articles that rival human composition — a task that requires keeping track of at least local context. The Pathways Language Model (PaLM), introduced by Google in April 2022, demonstrates even greater capability, including the ability to understand conceptual combinations in appropriate contexts to answer complex queries.

Fig 2. PaLM is able to successfully handle queries that require jumping between different contexts for the same concept. Image credit: Google Research [13] under CC BY 4.0 license.

Many of the recent advancements have focused on retrieval-based query augmentation, in which the input into the model (query) is supplemented by automatic retrieval of relevant data from an auxiliary database. This has enabled significant advances in applications like question answering and reasoning over knowledge graphs.
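To make the idea concrete, here is a minimal sketch of retrieval-based query augmentation. Systems such as RAG and RETRO (see the references) rely on learned retrievers over very large corpora; this sketch substitutes simple token overlap as the scoring function, and the toy corpus and the `[doc i]`/`[query]` input format are hypothetical choices made only for illustration.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, doc: str) -> int:
    """Number of tokens shared by query and document (multiset intersection)."""
    shared = Counter(tokenize(query)) & Counter(tokenize(doc))
    return sum(shared.values())


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Return the k documents that share the most tokens with the query."""
    ranked = sorted(corpus, key=lambda doc: overlap_score(query, doc), reverse=True)
    return ranked[:k]


def augment_query(query: str, corpus: list[str], k: int = 2) -> str:
    """Prepend the retrieved passages to the query to form the model input."""
    passages = retrieve(query, corpus, k)
    context = "\n".join(f"[doc {i}] {p}" for i, p in enumerate(passages, start=1))
    return f"{context}\n[query] {query}"


# Hypothetical toy corpus standing in for an auxiliary database.
corpus = [
    "PaLM was introduced by Google in April 2022.",
    "GPT-3 is a large language model from OpenAI.",
    "NetHack is a roguelike game used as an RL benchmark.",
]
print(augment_query("Who introduced PaLM?", corpus, k=1))
```

In practice the overlap score would typically be replaced by a learned similarity, but the overall shape (retrieve, then prepend to the query) stays the same.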

With such a flurry of significant improvements in the quality of achievable outputs, even within some contextual constraints, it may be tempting to assume that modern AI systems have acquired a more general context awareness. However, these models still do not provide the kind of context handling needed for more complex applications, such as those in manufacturing or medical practice. Such applications often require a certain fluidity with regard to context, as discussed in the adaptability section of one of the previous blogs. For example, the relevant context might have to be conditioned on temporal information such as the urgency of the user’s request, or on the objectives and sensitivities of an interaction. This adaptability would allow the appropriate context for a given query to evolve as the communication with the human progresses. In simple human terms, the model would have to avoid jumping to conclusions until it has all the relevant contextual information. This carefully timed suspension of the final response to the original query is what can be called late binding context.
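The control flow of late binding context can be illustrated with a toy responder that suspends its final answer until the context it needs has been gathered. The class `LateBindingResponder` and the slot names `urgency` and `objective` are hypothetical; this is a sketch under those assumptions, not a description of how any existing model implements late binding.

```python
from dataclasses import dataclass, field


@dataclass
class LateBindingResponder:
    """Withholds its final answer until every required context slot is filled."""

    required: tuple[str, ...]  # hypothetical slot names, e.g. ("urgency", "objective")
    context: dict[str, str] = field(default_factory=dict)

    def observe(self, key: str, value: str) -> None:
        """Record a piece of context gathered as the conversation progresses."""
        self.context[key] = value

    def missing(self) -> list[str]:
        """Required slots that have not been observed yet, in declaration order."""
        return [k for k in self.required if k not in self.context]

    def respond(self, query: str) -> str:
        gaps = self.missing()
        if gaps:
            # Suspend the final response and ask for the next missing piece instead.
            return f"Before I answer '{query}', could you tell me the {gaps[0]}?"
        # Context is bound only now, at response time, not when the query arrived.
        bound = ", ".join(f"{k}={self.context[k]}" for k in self.required)
        return f"Answer to '{query}' given {bound}"


bot = LateBindingResponder(required=("urgency", "objective"))
print(bot.respond("Which report should I read?"))  # asks for the urgency
bot.observe("urgency", "high")
bot.observe("objective", "triage")
print(bot.respond("Which report should I read?"))
```

A learned system would have to infer which context is still missing rather than read it from a fixed list; the fixed `required` tuple is the main simplifying assumption here.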

It bears mentioning that recent neural network models do have the capability to achieve some late binding context. For example, if a model is augmented with an auxiliary knowledge source such as Wikipedia or Wikidata, it can condition its response on the latest version of that source, and thus take some degree of time-relevant context into account before responding to a particular query.
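The Wikipedia/Wikidata example amounts to binding the knowledge source at query time rather than at training time. The sketch below assumes a hypothetical append-only `VersionedStore` and a hypothetical fact key; `answer` reads the latest version by default, so an update changes the response without any retraining, while passing an explicit version reproduces frozen, early-bound behavior.

```python
from typing import Optional


class VersionedStore:
    """Append-only fact store: every update appends a new version."""

    def __init__(self) -> None:
        self._versions: list[dict[str, str]] = [{}]  # version 0 is empty

    def update(self, key: str, value: str) -> int:
        """Copy the latest version, apply one change, and return the new version id."""
        new = dict(self._versions[-1])
        new[key] = value
        self._versions.append(new)
        return len(self._versions) - 1

    def at(self, version: int) -> dict[str, str]:
        return self._versions[version]

    def latest(self) -> dict[str, str]:
        return self._versions[-1]


def answer(store: VersionedStore, key: str, version: Optional[int] = None) -> str:
    """Late binding by default: read whatever version is current at query time.

    Passing an explicit version mimics early binding to a frozen snapshot.
    """
    facts = store.latest() if version is None else store.at(version)
    return facts.get(key, "unknown")


store = VersionedStore()
v1 = store.update("ceo:ExampleCorp", "Alice")  # hypothetical fact
store.update("ceo:ExampleCorp", "Bob")         # the fact changes later
print(answer(store, "ceo:ExampleCorp"))        # reads the latest version
print(answer(store, "ceo:ExampleCorp", v1))    # reads the frozen snapshot
```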

One of the domains that puts a high premium on context is conversational AI, in particular multi-turn dialogue modeling. However, key challenges are widely acknowledged in providing topic awareness and in accounting for implied time, prior knowledge, and intentionality.

The issue with most currently deployed AI technologies is that, even if they can condition on context in a particular case, conditioning over time remains a challenge for many applications. It requires both an understanding of the task at hand and a memory of the sequence of events that happened before, which acts as a conditioning prior. For a more light-hearted, metaphorical example, recall the Canadian detective show “Murdoch Mysteries”, famous for its refrain “What have you, George?”. Detective Murdoch uses this phrase again and again to ask Constable Crabtree for the latest developments, and the answer is always different and highly dependent on the events that have previously transpired in the story.
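The Murdoch example can be reduced to a toy: the query never changes, yet the answer depends on the sequence of events recorded so far. `CaseMemory` and the events below are hypothetical, and the memory here is an explicit list rather than anything a model would learn.

```python
from dataclasses import dataclass, field


@dataclass
class CaseMemory:
    """Ordered record of story events; it acts as the conditioning prior."""

    events: list[str] = field(default_factory=list)
    reported: int = 0  # index of the first event not yet reported

    def record(self, event: str) -> None:
        self.events.append(event)

    def what_have_you(self) -> str:
        """Same query every time; the answer depends on what happened since last asked."""
        new = self.events[self.reported:]
        self.reported = len(self.events)
        if not new:
            return "Nothing new, sir."
        return "Since we last spoke: " + "; ".join(new)


memory = CaseMemory()
memory.record("a witness came forward")
print(memory.what_have_you())
print(memory.what_have_you())  # nothing has happened in between
memory.record("the alibi fell apart")
memory.record("a new fingerprint was found")
print(memory.what_have_you())
```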

Building Context Into Machine Learning

So how could it be possible to incorporate and leverage late binding context in machine learning, at scale?

One way would be to create “selection masks” or “overlays” of meta-knowledge that provide overlapping layers of relevant contextual information, effectively narrowing down the search parameters for a particular use case. In the case of a medical search for the correct prescription, for example, a doctor would consider the patient’s diagnosed condition, other comorbidities, age, history of previous adverse reactions and allergies, etc., to constrain the search space to a specific drug. To address the late-binding aspects of context, such overlays must be dynamic, capturing recent information, refining scope based on case-specific knowledge, comprehending the objectives of the interaction in flight, and more.
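Selection masks or overlays can be sketched as predicates that each remove candidates from the search space, so that stacking them narrows the choice. Everything below (drug names, classes, age thresholds, the extra lab-result overlay) is hypothetical and meant only to show the filtering structure, not medical logic; the overlay added at the end stands in for information that arrives during the interaction.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Drug:
    name: str
    treats: frozenset[str]
    contraindicated_with: frozenset[str]  # comorbidities that rule the drug out
    min_age: int
    drug_class: str


@dataclass(frozen=True)
class Patient:
    condition: str
    comorbidities: frozenset[str]
    age: int
    allergies: frozenset[str]  # drug classes with prior adverse reactions


# An overlay is a predicate; a candidate survives only if every overlay accepts it.
Overlay = Callable[[Drug, Patient], bool]

BASE_OVERLAYS: list[Overlay] = [
    lambda d, p: p.condition in d.treats,                         # diagnosed condition
    lambda d, p: not (d.contraindicated_with & p.comorbidities),  # comorbidities
    lambda d, p: p.age >= d.min_age,                              # age
    lambda d, p: d.drug_class not in p.allergies,                 # allergies/reactions
]


def select(candidates: list[Drug], patient: Patient, overlays: list[Overlay]) -> list[str]:
    """Return the names of candidates that pass every overlay."""
    return [d.name for d in candidates if all(o(d, patient) for o in overlays)]


def recent_lab(d: Drug, p: Patient) -> bool:
    """Hypothetical overlay added mid-interaction, e.g. after a new lab result."""
    return d.drug_class != "class_3"


# Hypothetical drugs and patient; not medical guidance.
candidates = [
    Drug("drug_a", frozenset({"condition_x"}), frozenset({"kidney_disease"}), 18, "class_1"),
    Drug("drug_b", frozenset({"condition_x"}), frozenset(), 12, "class_2"),
    Drug("drug_c", frozenset({"condition_x"}), frozenset(), 18, "class_3"),
    Drug("drug_d", frozenset({"condition_y"}), frozenset(), 0, "class_3"),
    Drug("drug_e", frozenset({"condition_x"}), frozenset(), 0, "class_4"),
]
patient = Patient("condition_x", frozenset({"kidney_disease"}), 40, frozenset({"class_2"}))
print(select(candidates, patient, BASE_OVERLAYS))
print(select(candidates, patient, BASE_OVERLAYS + [recent_lab]))
```

Keeping each overlay as an independent predicate means a new one can be appended at query time without touching the others, which is the dynamic property the paragraph above asks for.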

Fig 3. Correct medical treatment decisions require a lot of patient-specific timely contextual considerations. Image credit: Intel Labs.

Source attribution is another key meta-knowledge dimension that can serve as a selection mask to enable late binding context. It is how a model would give more credence to one source over another, say the New England Journal of Medicine versus an anonymous Reddit post. Another application of source attribution is selecting the correct set of decision rules and constraints for a given situation, for example, the laws of the local jurisdiction or the traffic rules of a specific state. Source attribution is also key for reducing bias, by considering information within the context of the source that created it rather than assuming correctness from how often it occurs.
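A minimal sketch of source attribution acting as a selection mask: the same set of claims is resolved once by frequency alone and once by weighting each claim by its source. The source types, the credibility weights, and the claims are hypothetical assumptions; deciding such weights is itself a hard problem that the sketch does not address.

```python
from collections import Counter, defaultdict

# Hypothetical credibility weights per source type; a real system would have to
# curate or learn these, and they would likely depend on the topic.
SOURCE_WEIGHT = {
    "peer_reviewed_journal": 1.0,
    "news_outlet": 0.5,
    "anonymous_forum_post": 0.1,
}


def majority_vote(claims: list[tuple[str, str]]) -> str:
    """Pick the most frequent answer, ignoring where it came from."""
    return Counter(answer for answer, _ in claims).most_common(1)[0][0]


def source_weighted_vote(claims: list[tuple[str, str]]) -> str:
    """Pick the answer with the highest total credibility; unknown sources count 0."""
    scores: defaultdict[str, float] = defaultdict(float)
    for answer, source in claims:
        scores[answer] += SOURCE_WEIGHT.get(source, 0.0)
    return max(scores, key=scores.__getitem__)


claims = [
    ("dose B", "anonymous_forum_post"),
    ("dose B", "anonymous_forum_post"),
    ("dose B", "anonymous_forum_post"),
    ("dose A", "peer_reviewed_journal"),
]
print(majority_vote(claims))         # frequency alone favors the repeated claim
print(source_weighted_vote(claims))  # attribution favors the journal
```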

This blog has not touched on a very important aspect: how can a human or a future AI system select the relevant pieces of information to consider as the context of a particular query? What data structure must one search over to find the contextually relevant pieces of data, and how is that structure learned? More on these questions in future posts.

Avoid taking intelligence out of context

The field of AI is making strides in incorporating conditioning, compositionality, and context. However, the next level of machine intelligence will require significant advances in the ability to dynamically comprehend and apply the multiple facets of late-binding context. When considered within the scope of highly aware, in-the-moment interactive AI, context is everything.

References

- Bommasani, R., Hudson, D. A., Adeli, E., Altman, R., Arora, S., von Arx, S., … & Liang, P. (2021). On the opportunities and risks of foundation models. arXiv preprint arXiv:2108.07258.

- Berner, C., Brockman, G., Chan, B., Cheung, V., Dębiak, P., Dennison, C., … & Zhang, S. (2019). Dota 2 with large scale deep reinforcement learning. arXiv preprint arXiv:1912.06680.

- Vinyals, O., Babuschkin, I., Czarnecki, W. M., Mathieu, M., Dudzik, A., Chung, J., … & Silver, D. (2019). Grandmaster level in StarCraft II using multi-agent reinforcement learning. Nature, 575(7782), 350–354.

- Küttler, H., Nardelli, N., Miller, A., Raileanu, R., Selvatici, M., Grefenstette, E., & Rocktäschel, T. (2020). The NetHack learning environment. Advances in Neural Information Processing Systems, 33, 7671–7684.

- Kostrikov, I., Nair, A., & Levine, S. (2021). Offline reinforcement learning with implicit Q-learning. arXiv preprint arXiv:2110.06169.

- Ibarz, J., Tan, J., Finn, C., Kalakrishnan, M., Pastor, P., & Levine, S. (2021). How to train your robot with deep reinforcement learning: lessons we have learned. The International Journal of Robotics Research, 40(4–5), 698–721.

- Loquercio, A., Kaufmann, E., Ranftl, R., Müller, M., Koltun, V., & Scaramuzza, D. (2021). Learning high-speed flight in the wild. Science Robotics, 6(59), eabg5810.

- Yasunaga, M., Ren, H., Bosselut, A., Liang, P., & Leskovec, J. (2021). QA-GNN: Reasoning with language models and knowledge graphs for question answering. arXiv preprint arXiv:2104.06378.

- Mehrabi, N., Morstatter, F., Saxena, N., Lerman, K., & Galstyan, A. (2021). A survey on bias and fairness in machine learning. ACM Computing Surveys (CSUR), 54(6), 1–35.

- Zhao, J., Gupta, R., Cao, Y., Yu, D., Wang, M., Lee, H., … & Wu, Y. (2022). Description-Driven Task-Oriented Dialog Modeling. arXiv preprint arXiv:2201.08904.

- Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J. D., Dhariwal, P., … & Amodei, D. (2020). Language models are few-shot learners. Advances in Neural Information Processing Systems, 33, 1877–1901.

- Reporter, G. S. (2020, September 11). A robot wrote this entire article. Are you scared yet, human? The Guardian.

- Chowdhery, A., Narang, S., Devlin, J., Bosma, M., Mishra, G., Roberts, A., … & Fiedel, N. (2022). PaLM: Scaling language modeling with Pathways. arXiv preprint arXiv:2204.02311.

- Lewis, P., Perez, E., Piktus, A., Petroni, F., Karpukhin, V., Goyal, N., … & Kiela, D. (2020). Retrieval-augmented generation for knowledge-intensive NLP tasks. Advances in Neural Information Processing Systems, 33, 9459–9474.

- Borgeaud, S., Mensch, A., Hoffmann, J., Cai, T., Rutherford, E., Millican, K., … & Sifre, L. (2021). Improving language models by retrieving from trillions of tokens. arXiv preprint arXiv:2112.04426.

- Singer, G. (2022, January 15). LM!=KM: The Five Reasons Why Language Models Fall Short of Supporting Knowledge Model Requirements of Next-Gen AI. Medium.

- Moore, A. W. (2022, April 14). Conversational AI’s Moment Is Now. Forbes.

- Xu, Y., Zhao, H., & Zhang, Z. (2021, May). Topic-aware multi-turn dialogue modeling. In The Thirty-Fifth AAAI Conference on Artificial Intelligence (AAAI-21).

- Li, Y., Li, W., & Nie, L. (2022). Dynamic Graph Reasoning for Conversational Open-Domain Question Answering. ACM Transactions on Information Systems (TOIS), 40(4), 1–24.

- Gao, C., Lei, W., He, X., de Rijke, M., & Chua, T. S. (2021). Advances and challenges in conversational recommender systems: A survey. AI Open, 2, 100–126.

- Singer, G. (2022b, May 9). Understanding of and by Deep Knowledge — Towards Data Science. Medium.

Gadi joined Intel in 1983 and has since held a variety of senior technical leadership and management positions in chip design, software engineering, CAD development, and research. Gadi played a key leadership role in the product line introduction of several new micro-architectures, including the very first Pentium, the first Xeon processors, the first Atom products, and more. He was vice president and engineering manager of groups including the Enterprise Processors Division, the Software Enabling Group, and Intel’s corporate EDA group. Since 2014, Gadi has participated in driving cross-company AI capabilities in HW, SW, and algorithms. Prior to joining Intel Labs, Gadi was Vice President and General Manager of Intel’s Artificial Intelligence Platforms Group (AIPG).

Gadi received his bachelor’s degree in computer engineering from the Technion University, Israel, where he also pursued graduate studies with an emphasis on AI.