Authors: Zhuo Wu and Wanglei Shen

Automatic Device Plugin (AUTO) in the 2022.1 release of OpenVINO™ enables automatic selection of the most suitable target device for AI inference, configures it to prioritize either latency or throughput for the target application, and can accelerate first-inference latency. A detailed introduction to this feature can be found in our blog “Improve AI Application Performance and Portability with Automatic Device Plugin”.

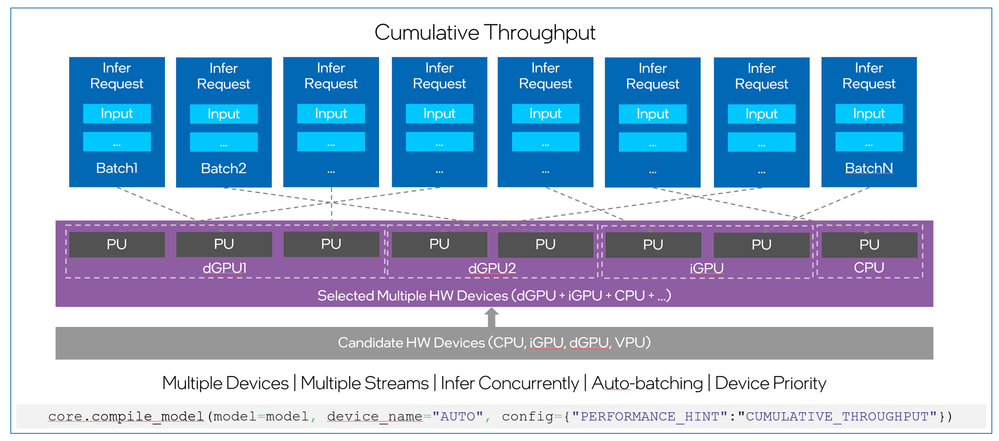

However, if you have integrated and discrete GPUs, a CPU, a VPU, and so on available for AI inference, how do you get full-speed inference out of all of them at once? The answer is “CUMULATIVE_THROUGHPUT”, a new feature of AUTO in the OpenVINO™ 2022.2 release.

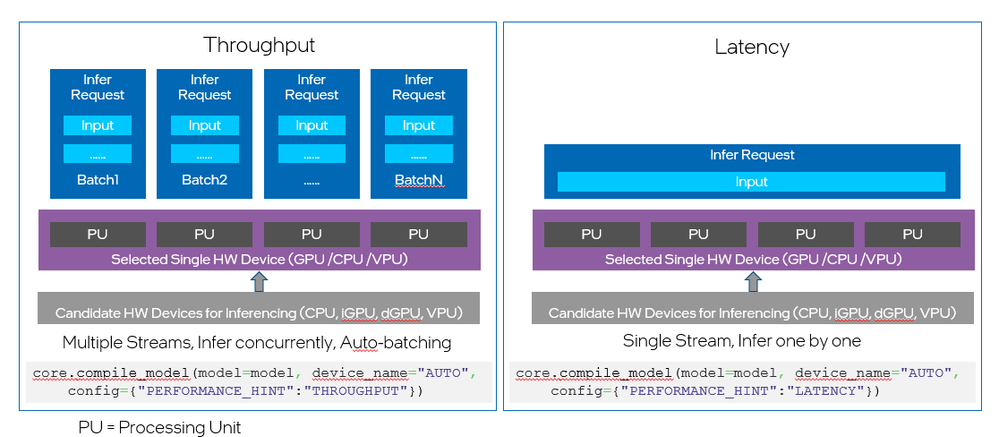

The two performance hints available in AUTO since OpenVINO™ 2022.1, LATENCY and THROUGHPUT, select a single target device according to your preferred performance option (as shown in Fig.1). The CUMULATIVE_THROUGHPUT option, by contrast, runs inference on multiple devices for higher throughput. With CUMULATIVE_THROUGHPUT, AUTO loads the network model onto all available devices in the candidate list and then runs inference on them according to the default or specified device priority, as shown in Fig.2.

Fig.1. Hardware selection and inference requests in “Throughput” and “Latency”

How to Configure CUMULATIVE_THROUGHPUT

The following code examples show how to configure CUMULATIVE_THROUGHPUT in OpenVINO using C++ and Python, respectively.

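C++ example:

```cpp
#include <openvino/openvino.hpp>

int main() {
    ov::Core core;
    auto model = core.read_model("model.xml");  // placeholder path to your IR model
    // Compile the model on AUTO with the "CUMULATIVE_THROUGHPUT" performance hint
    ov::CompiledModel compiled_model = core.compile_model(model, "AUTO",
        ov::hint::performance_mode(ov::hint::PerformanceMode::CUMULATIVE_THROUGHPUT));
    return 0;
}
```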

Python example:

```python
from openvino.runtime import Core

core = Core()
model = core.read_model("model.xml")  # placeholder path to your IR model
# Compile the model on AUTO with the "CUMULATIVE_THROUGHPUT" performance hint
compiled_model = core.compile_model(model=model, device_name="AUTO", config={"PERFORMANCE_HINT": "CUMULATIVE_THROUGHPUT"})
```

You can also specify which devices to run inference on by listing them after "AUTO:":

```python
# Restrict AUTO's candidate devices to GPU and CPU
compiled_model = core.compile_model(model=model, device_name="AUTO:GPU,CPU", config={"PERFORMANCE_HINT": "CUMULATIVE_THROUGHPUT"})
```
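If the device list comes from configuration rather than being hard-coded, it can help to build the AUTO device string programmatically. The helper below, `auto_device_name`, is hypothetical and not part of OpenVINO; it is a minimal sketch that joins device names in descending priority order after the `AUTO:` prefix, following the syntax used in the examples above.

```python
def auto_device_name(devices):
    """Return an AUTO device string listing candidate devices in priority order.

    Hypothetical helper (not an OpenVINO API): OpenVINO only sees the resulting
    string, e.g. "AUTO:GPU,CPU". An empty list yields plain "AUTO", which lets
    AUTO consider all available devices.
    """
    if not devices:
        return "AUTO"
    return "AUTO:" + ",".join(devices)


print(auto_device_name(["GPU", "CPU"]))  # AUTO:GPU,CPU
print(auto_device_name([]))              # AUTO
```

The returned string would then be passed as `device_name` to `core.compile_model`, just as in the previous example.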

Advanced Control and Configuration with CUMULATIVE_THROUGHPUT

Sometimes you have further requirements on device selection when using AUTO. For example, if you want to reserve the CPU for your application's business logic, you can remove CPU from the candidate devices for CUMULATIVE_THROUGHPUT:

```cpp
ov::CompiledModel compiled_model_1 = core.compile_model(model, "AUTO:-CPU",
    ov::hint::performance_mode(ov::hint::PerformanceMode::CUMULATIVE_THROUGHPUT));
```

Similarly, if your application needs to exclude the CPU when using the THROUGHPUT performance hint, you can use the following command:

```cpp
ov::CompiledModel compiled_model_2 = core.compile_model(model, "AUTO:-CPU",
    ov::hint::performance_mode(ov::hint::PerformanceMode::THROUGHPUT));
```
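To reason about which devices actually remain candidates, the following is a simplified model of how an AUTO device string narrows the device list. `candidate_devices` is a hypothetical helper, not OpenVINO's own parser; it assumes that a `-` prefix removes a device and that excluding a base name such as `GPU` also removes indexed devices like `GPU.0`. In a real application, the `available` list could come from `Core().available_devices`.

```python
def candidate_devices(available, device_name):
    """Simplified model of how an AUTO device string selects candidates.

    available:   device names reported by the runtime, e.g. ["CPU", "GPU.0", "GPU.1"]
    device_name: "AUTO", "AUTO:GPU.1,CPU" (explicit list) or "AUTO:-CPU" (exclusion)
    Illustrative sketch only, not OpenVINO's actual parsing logic.
    """
    _, _, spec = device_name.partition(":")
    if not spec:
        return list(available)  # plain "AUTO": every available device is a candidate
    entries = [e.strip() for e in spec.split(",") if e.strip()]
    excluded = {e[1:] for e in entries if e.startswith("-")}
    explicit = [e for e in entries if not e.startswith("-")]
    pool = explicit if explicit else list(available)
    # Assumption: excluding a base name such as "GPU" also drops "GPU.0", "GPU.1", ...
    return [d for d in pool if d not in excluded and d.split(".")[0] not in excluded]


available = ["CPU", "GPU.0", "GPU.1"]
print(candidate_devices(available, "AUTO:-CPU"))  # ['GPU.0', 'GPU.1']
```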

How Does CUMULATIVE_THROUGHPUT Choose Devices?

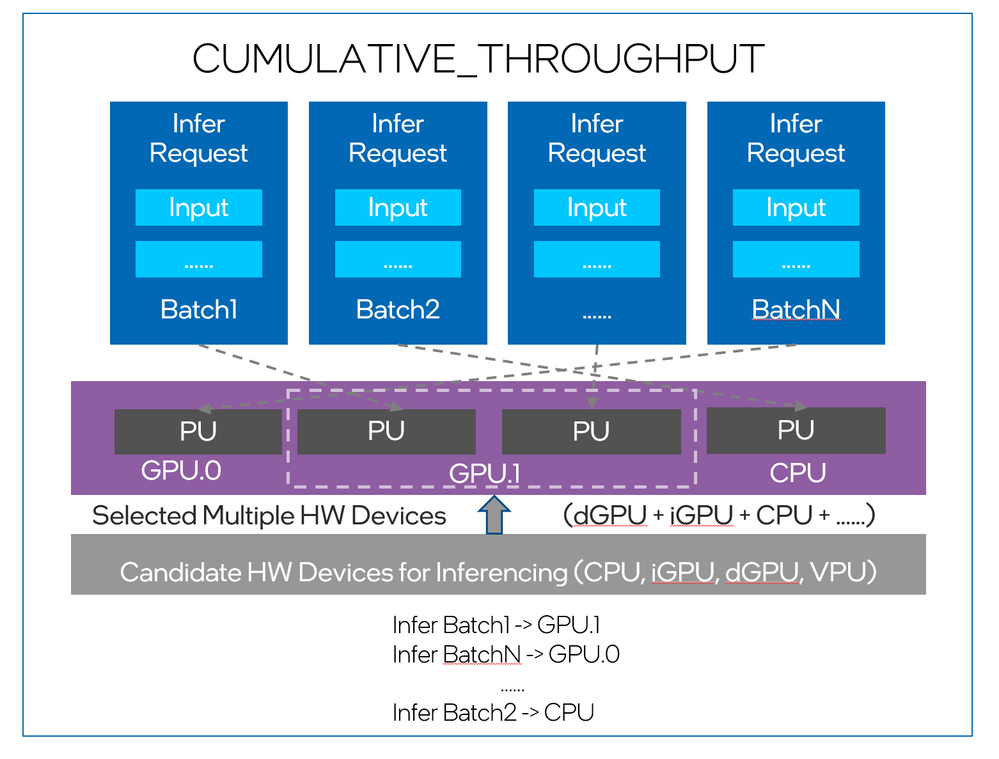

When you use CUMULATIVE_THROUGHPUT in the 2022.2 release of OpenVINO, the internal scheduler and the ie_core blob queue, which is set up according to the number of inference requests, can offload any inference request to any of the selected devices.

The AUTO scheduler offloads inference requests to the higher-priority devices first. For example, suppose you have specified the device priority for inference with the following command:

```python
# Device priority: GPU.1 first, then GPU.0, then CPU
compiled_model = core.compile_model(model=model, device_name="AUTO:GPU.1,GPU.0,CPU", config={"PERFORMANCE_HINT": "CUMULATIVE_THROUGHPUT"})
```

This means GPU.1 has the highest priority for running inference, so the AUTO scheduler offloads inference requests to GPU.1 first. Once all the processing units in GPU.1 are fully loaded, the scheduler offloads further inference requests to GPU.0. Note that the 2022.2 release of OpenVINO performs an additional memory copy between inference requests on the CPU and the GPU; this copy is expected to be removed in a future release.

Fig.3 shows how the AUTO scheduler offloads inference batches, consisting of inference requests, to different devices.
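The priority rule described above can be illustrated with a toy model. `dispatch` is a hypothetical function, not the actual AUTO scheduler: each device is given an assumed number of concurrent request slots, and every request goes to the highest-priority device that still has a free slot. The sketch ignores request completion and the CPU/GPU memory copy mentioned above.

```python
def dispatch(requests, devices):
    """Toy model of priority-based offloading under CUMULATIVE_THROUGHPUT.

    devices: list of (name, slots) pairs in descending priority order; `slots` is a
             hypothetical number of requests the device can run at once.
    Returns a dict mapping request id -> device name (None if every device is busy).
    """
    in_use = {name: 0 for name, _ in devices}
    placement = {}
    for req in requests:
        placement[req] = None
        # Walk devices from highest to lowest priority, take the first free slot
        for name, slots in devices:
            if in_use[name] < slots:
                in_use[name] += 1
                placement[req] = name
                break
    return placement


print(dispatch(range(6), [("GPU.1", 2), ("GPU.0", 2), ("CPU", 1)]))
# {0: 'GPU.1', 1: 'GPU.1', 2: 'GPU.0', 3: 'GPU.0', 4: 'CPU', 5: None}
```

In a real deployment, slots free up as requests complete, so lower-priority devices only receive work while the higher-priority ones are saturated.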

Summary

To summarize, “CUMULATIVE_THROUGHPUT” is a new performance hint introduced in the OpenVINO™ 2022.2 release. With “CUMULATIVE_THROUGHPUT”, developers can gain a significant throughput improvement by running inference on multiple devices simultaneously.

For more information, see the AUTO documentation.

To learn more about how OpenVINO can boost your AI efforts, check out the Edge AI Certification Program.

Notices & Disclaimers

Intel technologies may require enabled hardware, software or service activation.

No product or component can be absolutely secure.

Your costs and results may vary.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.
