By uniting Kubernetes*-based distributed architectures with Intel® AI software tools and Intel-optimized hardware, developers can unlock hardware-level acceleration, at scale, with just a few lines of code. Examples of these scalable, accelerated workloads are housed in the Intel® Cloud Optimization Modules (ICOMs). These are open-source codebases that codify Intel AI optimizations and include instructions for selecting appropriate Intel hardware. They are meant to run cloud-natively on one of three Cloud Service Providers (CSPs): Microsoft Azure*, Google Cloud Platform* (GCP), and Amazon Web Services* (AWS).

Among the many Intel® Cloud Optimization Modules, one of the most recent releases, the Intel® Cloud Optimization Modules for Kubernetes, uses XGBoost within a distributed Kubernetes application. In this example, the application's infrastructure, not the training itself, is distributed across the compute cluster. The application exposes three API endpoints that together handle the end-to-end pipeline, from data processing through training and inference. A second recent release, the Intel® Cloud Optimization Modules for Amazon SageMaker, shows developers how to use Intel® oneAPI Base Toolkit libraries inside Amazon SageMaker. While these two ICOMs focus on AWS, upcoming ICOMs will take advantage of GCP and Azure cloud services.

Kubernetes* Overview

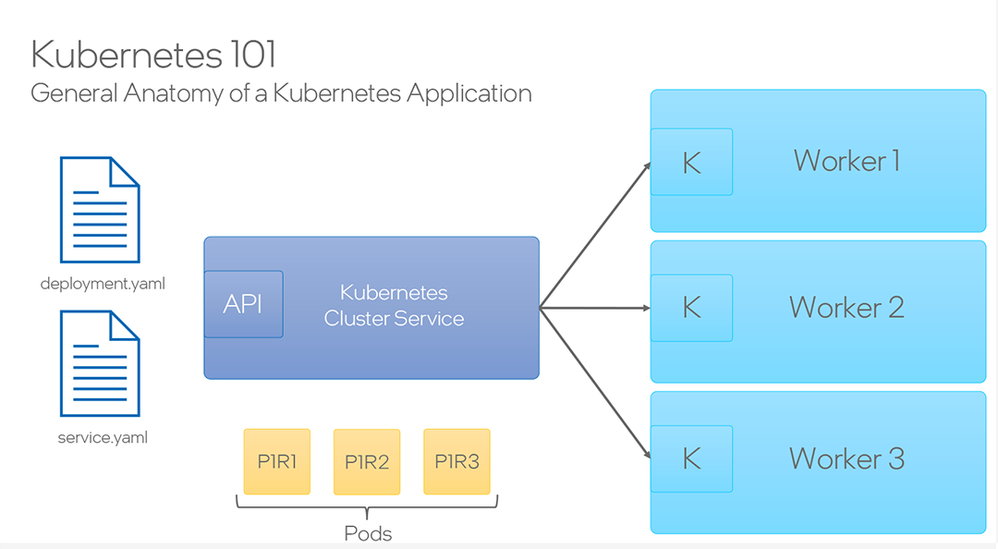

Kubernetes* is an open-source container orchestration system designed to automate the deployment, scaling, and management of containerized applications. The general anatomy of a Kubernetes application is shown in the diagram above. The Kubernetes Cluster Service manages the cluster, which can be understood as a set of “workers,” or nodes, that run the containerized applications. Pods are essentially individual instances of an application. When a Docker container is deployed into pods, Kubernetes schedules those pods onto the worker machines, allowing the application to run in a distributed fashion across the cluster of workers.

At minimum, the application needs a deployment.yaml file and a service.yaml file. These configuration files define conditions such as per-container CPU limits and the resource requests that Kubernetes uses when placing pods onto workers, to name just a few. The service.yaml file, specifically, configures services that sit in front of the pods, such as a load balancer that spreads varying loads across the available compute. By distributing an application over multiple workers with Kubernetes, we increase its capacity to handle a growing number of external requests, splitting the “effort” across multiple “workers” and giving the application scalability and stability.
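To make the two configuration files more concrete, here is a minimal sketch that builds a Deployment and a LoadBalancer Service as Python dictionaries and prints them as JSON, which `kubectl apply -f` accepts as well as YAML. The app name, image reference, port numbers, and CPU values are hypothetical placeholders, not values taken from the module.

```python
import json


def build_deployment(name: str, image: str, replicas: int = 3,
                     cpu_request: str = "500m", cpu_limit: str = "1") -> dict:
    """Return a minimal apps/v1 Deployment manifest (hypothetical values)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # number of pod copies spread across workers
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": 8080}],
                        # requests guide scheduling; limits cap usage per container
                        "resources": {
                            "requests": {"cpu": cpu_request},
                            "limits": {"cpu": cpu_limit},
                        },
                    }]
                },
            },
        },
    }


def build_service(name: str, port: int = 80, target_port: int = 8080) -> dict:
    """Return a minimal v1 Service of type LoadBalancer that fronts the pods."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",  # on EKS this provisions an AWS load balancer
            "selector": {"app": name},  # routes traffic to pods with this label
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    # Hypothetical app name and image reference, for illustration only.
    deployment = build_deployment("xgboost-app", "<your-registry>/xgboost-app:latest")
    service = build_service("xgboost-app")
    print(json.dumps(deployment, indent=2))
    print(json.dumps(service, indent=2))
```

The Service's `selector` must match the pod template's labels; that shared label is what lets the load balancer find the pods, wherever Kubernetes has placed them.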

Intel® Cloud Optimization Modules for Kubernetes

Looking more specifically at the Intel-optimized solutions, we first consider the Intel® Cloud Optimization Modules for Kubernetes and how they can be used to build and deploy AI applications on the AWS cloud. The architecture uses Docker* for application containerization and Amazon Elastic Container Registry (ECR) for image storage. The application image is then deployed on a cluster of Amazon Elastic Compute Cloud* (EC2) instances managed by Amazon Elastic Kubernetes Service (EKS). Amazon Simple Storage Service (Amazon S3) stores the data and model objects, which are retrieved during various steps of the ML pipeline. The client interacts with this infrastructure through an Elastic Load Balancer, which is provisioned by the Kubernetes service. The goal of this module is to highlight the use of 4th Gen Intel® Xeon® Scalable processors and Intel® AI Analytics Toolkit (AI Kit) components to improve performance while enabling scale with Kubernetes on a high-availability solution architecture.
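The image that EKS pulls for the deployment is identified by an ECR URI. A minimal helper that assembles one, assuming a standard AWS commercial region (AWS China regions use a different domain suffix); the account ID, region, repository, and tag in the usage line are placeholders:

```python
def ecr_image_uri(account_id: str, region: str, repository: str,
                  tag: str = "latest") -> str:
    """Build <account>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>."""
    # AWS account IDs are 12-digit strings.
    if not (account_id.isdigit() and len(account_id) == 12):
        raise ValueError("AWS account IDs are 12 digits")
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(ecr_image_uri("123456789012", "us-east-1", "xgboost-app", "v1"))
```

The resulting string is what goes into the `image` field of the deployment manifest after the image has been pushed to that repository.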

Intel® Cloud Optimization Modules for Amazon SageMaker

The second module is the Intel® Cloud Optimization Modules for Amazon SageMaker. Amazon SageMaker is a fully managed machine learning service on the AWS cloud. The motivation behind this platform is to simplify the process of building robust machine learning pipelines on top of managed AWS cloud services. Unfortunately, the abstractions that make it simple also make it difficult to customize. This module addresses that concern by showing developers how to inject custom training and inference code into a prebuilt SageMaker pipeline. The main goal is to enable software accelerated by the Intel AI Analytics Toolkit in SageMaker pipelines.

The solution architecture involves building and registering images in Amazon Elastic Container Registry (ECR) for the xgboost-daal4py application and the Lambda inference handler. (daal4py is the Python API for the Intel® oneAPI Data Analytics Library, oneDAL.) The xgboost-daal4py ECR URI is provided to the SageMaker pipeline script so that, when the pipeline is executed, it processes the data, trains and validates the model, and deploys a working model to the inference endpoint. The Lambda image is then used to build an AWS Lambda function that pre-processes raw data in real time and sends the processed data to the SageMaker inference endpoint. Access to the inference endpoint is managed by a REST API service, Amazon API Gateway. The goal of this module is to show how developers can build end-to-end machine learning pipelines in SageMaker by leveraging Intel hardware-optimized machine learning libraries like daal4py.
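The Lambda step can be sketched roughly as follows, assuming an API Gateway proxy integration that passes a JSON request body and an endpoint that accepts CSV input. The feature names, the zero-fill for missing values, and the `ENDPOINT_NAME` environment variable are hypothetical, and the module's actual handler may differ; `invoke_endpoint` on boto3's `sagemaker-runtime` client is the real SageMaker call.

```python
import json
import os

# Hypothetical feature order the model was trained on; replace with your schema.
FEATURE_ORDER = ["age", "income", "num_accounts"]


def preprocess(record: dict) -> str:
    """Turn one raw JSON record into a CSV row in training-column order.

    Missing features are filled with 0.0 purely for illustration.
    """
    values = [float(record.get(name, 0.0)) for name in FEATURE_ORDER]
    return ",".join(str(v) for v in values)


def lambda_handler(event, context):
    """Sketch of an AWS Lambda handler behind an API Gateway proxy integration."""
    import boto3  # bundled with the AWS Lambda Python runtime

    record = json.loads(event["body"])
    payload = preprocess(record)

    runtime = boto3.client("sagemaker-runtime")
    response = runtime.invoke_endpoint(
        EndpointName=os.environ["ENDPOINT_NAME"],  # hypothetical env var
        ContentType="text/csv",
        Body=payload,
    )
    prediction = response["Body"].read().decode("utf-8")
    return {"statusCode": 200, "body": json.dumps({"prediction": prediction})}
```

Keeping `preprocess` as a pure function separates the feature logic from the AWS call, so it can be unit-tested locally without credentials or a deployed endpoint.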

A Compute-Aware AI Developer

As an AI developer, recognizing that critical performance decisions are made throughout the AI software stack, and making conscious decisions about the software you run and the hardware you run it on, is key to improving your craft. Understanding the latest innovations and trends in this space is vital: hardware-optimized Python* packages like Intel® Extension for PyTorch* and Intel® Extension for TensorFlow* that deliver updated optimizations for Intel's latest hardware; ISA extensions dedicated to machine learning, such as Intel® AVX-512 Vector Neural Network Instructions (VNNI) and Intel® Advanced Matrix Extensions (AMX); and quantization and accuracy-aware model compression. Being a compute-aware AI developer helps you understand the stack you are using and the best ways to optimize your workflow, such as validating core counts, memory, and networking speeds; selecting optimized packages for an application; including the correct lines of code to activate these optimizations; and monitoring core utilization and benchmarking to ensure proper resource allocation.
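As one small example of validating hardware before choosing packages, this sketch reads `/proc/cpuinfo` and reports whether the VNNI and AMX flags named above are present. It assumes a Linux host; `avx512_vnni`, `amx_tile`, `amx_bf16`, and `amx_int8` are the Linux kernel's flag labels, and other operating systems expose CPU features differently.

```python
import os
from pathlib import Path

# Linux /proc/cpuinfo flag names for the ML-oriented ISA extensions.
ML_FLAGS = {
    "avx512_vnni": "AVX-512 Vector Neural Network Instructions",
    "amx_tile": "Advanced Matrix Extensions (tile architecture)",
    "amx_bf16": "AMX BF16 support",
    "amx_int8": "AMX INT8 support",
}


def parse_cpu_flags(cpuinfo_text: str) -> set:
    """Collect the flags on the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            return set(line.split(":", 1)[1].split())
    return set()  # no flags line (e.g. non-x86 or non-Linux host)


def report(flags: set) -> dict:
    """Map each ML-related flag name to whether it is present."""
    return {flag: flag in flags for flag in ML_FLAGS}


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    text = cpuinfo.read_text() if cpuinfo.exists() else ""
    print(f"logical CPUs: {os.cpu_count()}")
    for flag, present in report(parse_cpu_flags(text)).items():
        print(f"{ML_FLAGS[flag]:<50} {'yes' if present else 'no'}")
```

A check like this only confirms that the instructions exist; whether a framework actually uses them still depends on installing the optimized packages and enabling them in code.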

The goal of presenting the Intel® Cloud Optimization Modules is to help developers enhance the performance and scalability of their applications with Intel software and hardware. With the increasing demand for high-performance cloud applications, it is crucial for developers to stay informed and be able to utilize the latest technologies and tools available. We also encourage you to check out Intel’s other AI Tools and Framework optimizations and learn about the unified, open, standards-based oneAPI programming model that forms the foundation of Intel’s AI Software Portfolio.

See the video: Kubernetes* Module: Deploy Cloud-Native, AI Workloads on AWS*

About our experts

Eduardo Alvarez

Senior AI Solutions Engineer

Intel

Eduardo Alvarez is a Senior AI Solutions Engineer at Intel, specializing in applied deep learning and AI solution design. With a degree in Geophysics from Texas A&M University, Eduardo previously worked at various energy tech startups, building software tools that democratized data-driven solutions for cross-disciplinary professionals in the energy sector. His primary research and engineering interests include Time-series Analysis/Forecasting, Reinforcement Learning, Computer Vision, Cloud Solutions Architecture, MLOps, and DevOps. At Quantico, he led the team that delivered the energy industry’s first low-code ML cloud-native web application for subsurface analytics.

Kelli Belcher

AI Solutions Engineer

Intel

Kelli is an AI Solutions Engineer at Intel with over 5 years of experience across the financial services, healthcare, and tech industries. In her current role, Kelli helps build Machine Learning solutions using Intel’s portfolio of open AI software tools and is a Developer Evangelist for the oneAPI AI Analytics Toolkit. Kelli has experience with Python, R, SQL, and Tableau and holds a Master of Science in Data Analytics from the University of Texas.

David Shaw

Communications Manager, Storyteller

Intel

David has been an artist since childhood and is on a mission to help others see things 'differently' through the power of creativity, play, and storytelling. He loves to observe people and the world around him to connect others to ideas and data that guide them down their path. He wants to inspire others to act, change, and develop in new ways. As a multi-skilled generalist, or multipotentialite, he looks at the world with a global, holistic view. His curiosity allows him to view diverse perspectives. David prefers to leverage his understanding of human nature to solve problems through unique and unexpected approaches. In addition, he’s a translator of languages and ideas, able to simplify the complex so that others can understand difficult subjects. Over his career, he has worked across multiple business functions including R&D, Product, IT, Operations, and HR.

AI Software Marketing Engineer creating insightful content surrounding the cutting edge AI and ML technologies and software tools coming out of Intel