Babu Pillai is a Senior Research Engineer at Intel Labs and has a strong interest in Systems Research, with a particular focus on distributed, interactive systems.

Highlights:

- Intel Labs, in a joint effort with Brown University and MIT through a DSAIL research collaboration, has been crowned as the new record holder for the JouleSort “Indy” Category, completing the task using just 63 kJ.

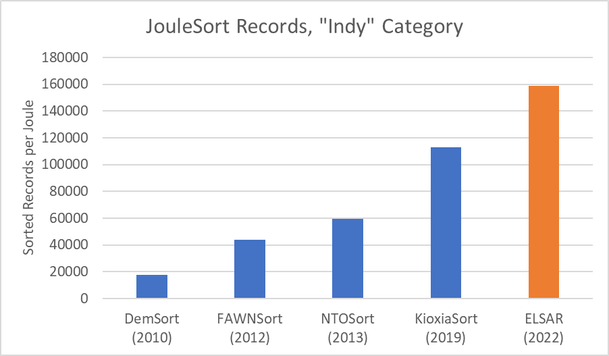

- The innovative system, External LearnedSort for ASCII Record (ELSAR), uses a novel sorting technique that leverages a form of machine learning to achieve 159,000 sorted records per Joule, a 40% improvement over the prior record.

- Intel Labs excitedly looks forward to continued innovation and technological progress on core systems and big data problems using novel machine learning, learning-enhanced techniques, and modern hardware designs.

Promoting Technical Innovation

Nascent industries are very exciting to follow. These are characterized by large levels of risk-taking, with startups shooting for the moon on an idea and a prayer, resulting in some spectacular successes and many, many failures. Thus, the overall level of innovation in fast-moving new industries is high. On the other hand, in more mature industries, innovation may drop off. For example, the basic design of automobiles matured many decades ago; cars generally became good enough in most meaningful metrics – they were fast, cheap, safe, and reliable enough for most day-to-day uses. In such mature industries, dominated by just a few large firms, what can spur further innovation?

In the car industry, one key driver of innovation is the sport of car racing. Almost every major automaker participates in some form of racing. Beyond the publicity value of winning a race, participating in racing forces the development and refinement of many technologies. Racing designs have pushed the limits on engine power, efficiency, materials for lower weight, improved handling, better traction, computer-controlled shifting and fuel injection, and aerodynamics. All of these innovations are driven by the very artificial conditions and constraints of a race environment, and a monomaniacal focus on winning at almost any monetary cost. Thus, many new technologies are inspired by racing that would never have been developed just for the commodity world of good-enough-for-real-world-use vehicles. Fortunately, many racing-inspired technologies do (eventually) trickle down to mass-produced vehicles, bringing the benefits of these innovations to the masses.

The computing industry is generally considered to be a vibrant, highly innovative space. However, even here, not all computing disciplines are equal. In particular, fields looking at fundamental, classical problems, and systems research looking into infrastructure, or the plumbing of computing systems generally do not get the same attention or love as, for example, the latest AI-based chat technologies. These fields may be perceived as largely “solved,” resulting in less attention, fewer research dollars, and a risk of reduced innovation. For these fields, some form of competition, analogous to car racing, could serve as motivation and a driver of innovation.

The late great computer science luminary and database pioneer, Jim Gray, created a set of standardized benchmarks in the mid-1980s in order to objectively compare commercial systems built by different companies. One benchmark focused on transaction throughput (eventually evolving into the widely used TPC family of benchmarks), and another focused on the sorting of records stored on disk. These benchmarks became a competition, as system developers vied annually for the crown of fastest system, spurring continued and relentless innovation in databases and general systems domain.

Why Sorting?

At first glance, a sorting problem may seem a strange thing on which to base a competition. It sounds too simple – after all, librarians and archivists have been sorting things for hundreds of years, long before computers. In any case, complexity analysis and implementation of optimal sorting algorithms are covered in any computer science textbook – what more innovation can one expect? The conceptual simplicity of sorting is actually its strength. People understand the idea of sorting, so there is no question of what needs to be done, or of determining whether software is working correctly. For a public benchmark, conceptual simplicity is a great feature, as it is more accessible to a broad audience with less of a learning curve than with more esoteric tasks.

Furthermore, the classic sorting algorithms studied in school (e.g., Quicksort) are mostly in-memory algorithms, where the unsorted input data already exist in memory, and the sorted output will be constructed in memory. In contrast, the sort benchmark, as envisioned by Jim Gray, is an external sort problem. Here, the data starts on disk (or another persistent storage medium), but is too large to fit in memory, and the sorted output should be written to disk. This requires a much more complex multi-phase procedure. Typically, in the first phase, the input is logically divided into chunks that are small enough to fit in memory. One at a time, each chunk is read into memory, sorted using a classical in-memory algorithm, and written back to disk. In the second phase, these sorted chunks are then merged; this involves reading all of the chunks, a few records at a time, and then writing the records according to the desired sort order to a single unified output file. External sort, therefore, involves multiple input and output steps and stresses multiple components of a computer, including the processor, memory, I/O busses, storage devices, operating system, and file system. For very large sort tasks run on clusters of machines, it also stresses the communications layers. External sort also presents many opportunities for optimization and innovation, e.g., overlapping I/O with compute, good use of multiple processor cores, tuning of chunk and I/O granularity, scheduling of I/O to maximize disk throughput, etc. Thus, external sort, as a competitive benchmark, is a good driver of innovation across many aspects of computer hardware and software design.

Current Generation of Sorting Benchmarks

The legacy of Jim Gray’s original sort benchmark lives on at the Sort Benchmark Homepage, which hosts the specification of the benchmarks and the official set of sorting records. A dedicated team oversees the site, organizes the annual sorting competition, and carefully checks each submission to ensure all requirements are met and that measurements are of sufficient quality to declare any new record-setting systems. The committee also updates the parameters of the sort benchmarks as needed, updates the set of rules and requirements, and occasionally introduces a new benchmark variant. For example, the CloudSort benchmark was added in 2014, with which the goal is to minimize the monetary cost of sorting a large dataset using rented cloud infrastructure. Some benchmarks are also removed as they become obsolete. For example, Jim Gray’s original sort benchmark (called Datamation Sort) used to require about an hour to complete for the top systems when introduced in 1985. By 2000, the top systems could complete this in less than a second. As a result, it was deprecated and replaced with a much larger version (called Gray Sort), which is still in use today. Top contenders in Gray Sort are large cluster computers consisting of hundreds of networked computing nodes with custom software to perform distributed sorting.

In 2007, a very different type of sort benchmark called JouleSort was introduced. Unlike most of the benchmarks that focus on speed, JouleSort seeks to minimize the total energy used to perform the sort. To do well on JouleSort, a system needs to be fast but energy efficient. Instead of the largest, fastest computer clusters, winning JouleSort systems tend to be more modest single computers that balance power and performance. Past winners include entries based on small servers, desktop-class machines, and laptops. These are very similar to the kind of computers that most people use on a day-to-day basis, so any innovations may be more broadly relevant than those affecting computer clusters.

Interestingly, no system based on low-power smartphones or microcontrollers has held the JouleSort record; likely, these devices are still too limited to handle the large storage and processing demands of the sort task. The JouleSort task is to sort a 1 TB file, consisting of 10 billion 100-byte records. Each record includes a 10-byte ASCII string that serves as the sorting key. The data starts on disk (or other persistent storage device, like flash memory), and the output must be written out as a single large file to disk. The metric for the competition is to minimize the total energy consumed to perform this sorting task. For mains-powered systems, this requires the use of a special device to measure and log the electrical power draw at the wall outlet. For battery-powered systems, a means of accurately logging the current draw from the battery should be used. Systems are compared in terms of total Joules consumed or, equivalently, in terms of the higher-is-better metric, sorted records per Joule.

Our Record-Setting System for JouleSort

The Sort Benchmark committee recently announced the new records set in 2022. We are proud that our submission, a joint effort between Brown University, MIT, and Intel Labs, has been crowned as the new record holder for the JouleSort “Indy” Category, completing the task using just 63 kJ. Thus, we achieved 159,000 sorted records per Joule, a 40% improvement over the prior record.

Figure 1. Records for energy-efficient sorting. Our system (ELSAR) is the new record holder, achieving 40% higher sorting performance per Joule of energy used over the prior record holder.

Last year, Intel Labs announced a new phase of our Data Systems and Artificial Intelligence Lab (DSAIL) university research program at the Massachusetts Institute of Technology (MIT). One of the major thrusts of DSAIL’s continued research agenda is to build instance-optimized systems. These systems self-adjust to handle a workload with near-optimal performance under a given set of operating conditions as if built from scratch for that specific use case.

As part of the DSAIL program, we created the External LearnedSort for ASCII Records (ELSAR) system, which uses a novel sorting technique that leverages a form of machine learning. The algorithm first samples the unsorted records to learn the distribution of keys; more precisely, it builds a model of the Cumulative Distribution Function of the key distribution. Using the model, it runs through the input data in parallel, and redistributes the records into 1,000 equal sized, non-overlapping partitions of the key space. Each of these partitions can easily fit in memory. In the second phase, parallel threads read and sort the partitions using LearnedSort 2.0, an in-memory sort algorithm that also uses a learned model of the CDF of record keys. Using the CDF model, LearnedSort 2.0 can accurately predict where in the output each record will appear, letting it scan through the input records and placing them into the (nearly) correct final locations in one pass. A very simple, fast second pass corrects any local placement errors. Each sorted partition is written back to disk, and all are concatenated to form the final sorted file.

On the hardware side, we built a system that carefully balances performance and power. Our system is built around Intel’s 12th generation series of CPUS, known for high performance and low power. We use a mid-tier part, the Intel® Core™ i5-12600K, with six performance cores and four efficiency cores. This processor hits the sweet spot in the power-performance tradeoff: faster processors tend to use too much energy, while lower power parts tend to be quite a bit slower. An advantage of the 12th generation is that it lets us use DDR5 memory, which uses less power than similar-performance DDR4 memory. Since the benchmark is very I/O intensive, we use four fast NVMe drives, each capable of up to 5GB/s of write throughput. These were configured using software RAID in pairs – we read from one pair and write to the other in each phase of the sort. This influenced our choice of motherboard, as most only have two NVMe connectors. An often-overlooked part of the system is the power supply. We carefully chose one that achieves 90% efficiency at low 100-200W power levels; most power supplies are efficient only at much higher power levels.

This description leaves out the months of testing, tuning, and tweaking we had to do to get the system running as fast as possible at the lowest average power. This included adjustments to the software to fully utilize all ten cores, tweaks to avoid file locking that can serialize parallel threads, and elimination of all sources of parasitic power draw (e.g., extra fans, unused built-in devices, network, video, etc.). We may have missed a few opportunities for improvement as well. For example, our current software does not leverage the difference between power and efficiency cores. In any case, in the end, we were able to decisively demonstrate a new record for energy-efficient sorting.

Our collaborators, Ani Kristo (then a graduate student at Brown University) and his advisor from MIT, Tim Kraska, were instrumental to this achievement. The development of the LearnedSort algorithm and the ELSAR system ultimately became a part of Ani’s PhD thesis. Programs such as DSAIL make long-term, mutually-beneficial research engagements possible between Intel Labs and leading academic institutions. We expect to see continued innovations and productive research results from the DSAIL program.

Looking forward, it would be personally quite gratifying to see our new record stand for some time. However, from a big-picture perspective, it would be even better if our record is promptly beaten next year, and new records established every year going forward. This would be a great indicator of rapid innovation and technological progress in systems research. Systems research may not be the most visible or flashiest areas of computer science, but it provides the foundational technologies on which other areas depend. Thus, a vibrant systems research field is valuable to the entire computing industry.

Padmanabhan (Babu) Pillai is a senior research scientist at

Intel's Parallel Computing Lab (PCL). His core research interests

are in distributed systems, edge computing, and low-power systems.

His recent work includes the use of edge computing to perform

real-time understanding of the world, and development of

distributed, parallel array libraries for Python. Babu received

his M.S. and Ph.D. in computer science from University of

Michigan, and holds a B.S. in electrical and computer engineering

from Carnegie Mellon University.

Padmanabhan (Babu) Pillai is a senior research scientist at

Intel's Parallel Computing Lab (PCL). His core research interests

are in distributed systems, edge computing, and low-power systems.

His recent work includes the use of edge computing to perform

real-time understanding of the world, and development of

distributed, parallel array libraries for Python. Babu received

his M.S. and Ph.D. in computer science from University of

Michigan, and holds a B.S. in electrical and computer engineering

from Carnegie Mellon University.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.