Author list:

Ramesh N. Chukka, Yamada Koichi, Ed Groden, Ro Shah, Thomas Atta-fosu, Badhri Narayanan Suresh, Keith Achorn, Haihao Shen, Roger Feng, Ciyong Chen, Wang Jingyu, Xu Wenhao, Cao Zhong Z., Deng Daisy, Shi Yuankun, Zhang Jianyu, Chang Hanwen, Dong Bo1, Chen Jian Ping, Nilesh Jain, Pablo Muñoz, Chaunté Lacewell W., Chua Vui Seng

Key Takeaways

- Intel remains in an exclusive club as the only data center CPU vendor to have MLPerf inference results on a broad set of models. We not only submitted our own results but also collaborated closely with Dell, HPE, and Quanta to deliver 4th Gen Xeon processor submissions, further showcasing AI performance scalability and portability on servers powered by Intel® Xeon® processors.

- 4th Gen Xeon processors deliver remarkable gains of up to 5x across MLPerf workloads compared to 3rd Gen Intel® Xeon® Scalable processors – based on v1.1 to v2.x results from last August – due in large part to specialized AI hardware accelerators, including Intel® Advanced Matrix Extensions (Intel® AMX).

- But there’s more! Compared to our 4th Gen Xeon preview results last August, we improved performance by 1.2x and 1.4x for the Offline and Server scenarios, respectively, underscoring how we are continuously optimizing the AI software stack to fully utilize the unique capabilities of our Xeon CPUs. Furthermore, our open submission showcases an additional performance improvement of up to 9x (Offline) and 16x (Server) through hardware-algorithm co-design.

The introduction and unprecedented adoption of ChatGPT is indicative of the speed at which artificial intelligence and deep learning are moving, and of how they will soon be built into nearly every enterprise application. Given diverse use case requirements and the rapidly evolving nature of AI, it’s even more critical to understand the performance capabilities of the Xeon CPUs that are already running your business.

4th Gen Intel® Xeon® Scalable processors feature an all-new built-in AI accelerator for deep learning, along with a comprehensive set of data preprocessing, modeling, and deployment tools and optimizations for the most popular AI frameworks and libraries. This powerful combination of hardware and software is designed to address the needs of any AI professional, from data scientists who want to customize models for large-scale data center deployments to application engineers who want to quickly integrate models into applications and deploy at the edge.

Our goal for the MLPerf Inference v3.0 submissions remains the same as always: demonstrate how 4th Gen Xeon processors can run any AI workload – including vision, language processing, recommendation, speech and audio translation – and deliver remarkable gains of up to 5x across these tasks compared to 3rd Gen Intel® Xeon® Scalable processors – based on v1.1 to v2.x results from last year – due in large part to specialized AI hardware accelerators, including Intel® Advanced Matrix Extensions (Intel® AMX).

To simplify the use of these new accelerator engines and extract the best performance, we remain laser-focused on software. Our customers tend to use the mainstream distributions of the most popular AI frameworks and tools. Intel’s AI experts have been working for years with the AI community to co-develop and optimize a broad range of open and free-to-use tools, optimized libraries, and industry frameworks to deliver the best out-of-the-box performance regardless of the Xeon generation.

And that’s where the additional performance kicker comes in. Compared to our 4th Gen Xeon preview results last August, we were able to improve performance by 1.2x and 1.4x for Offline and Server scenarios respectively, which underscores how we are continuously optimizing the AI software stack to realize full utilization of the unique capabilities of our Xeon CPUs. We’ve also closely collaborated with our OEM partners – namely HPE, Dell, and Quanta – to deliver their own submissions, further showcasing AI performance scalability and portability on servers powered by Intel® Xeon processors.

Furthermore, Intel is developing tools and capabilities (e.g., OpenVINO, AI Kit) to further co-optimize algorithms and hardware, delivering additional performance while improving the developer experience by making these optimizations easy to apply. To showcase these capabilities, Intel has made open submissions for the BERT and RetinaNet models that demonstrate the trade-off between model performance and accuracy while automating the end-to-end process of model optimization. The submissions demonstrate impressive gains of up to 9x in the Offline scenario and 16x in the Server scenario.

Bottom line: Xeon CPUs can run any AI workload, enabling you to deploy a single hardware and software platform from the data center to the factory floor. If you need acceleration beyond that to meet your service level agreements (SLAs), we can help there as well, but that’s a story for another blog.

Intel Closed Submission Results for MLPerf v3.0 Inference

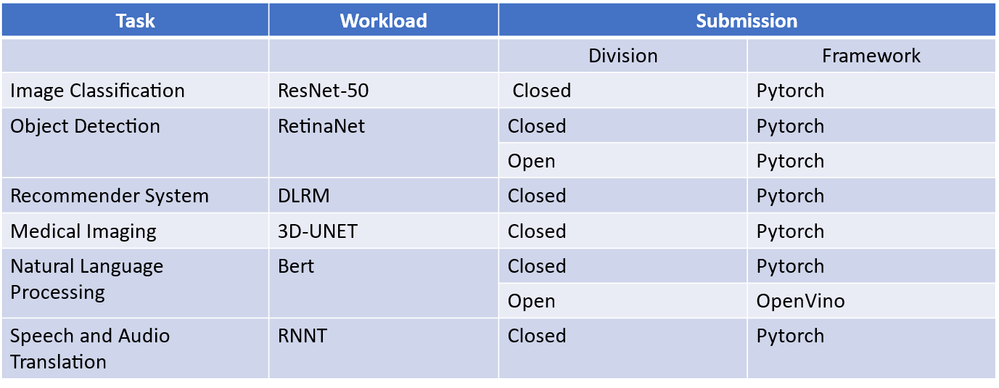

Intel’s submission covers all six data center benchmarks and demonstrates leading CPU performance and software efficiency across the data center benchmark suite. See the task and framework coverage in Table 1 below. Summary results of the data center submissions can be found on the MLCommons results page.

Table 1 MLPerf v3.0 Inference submission coverage

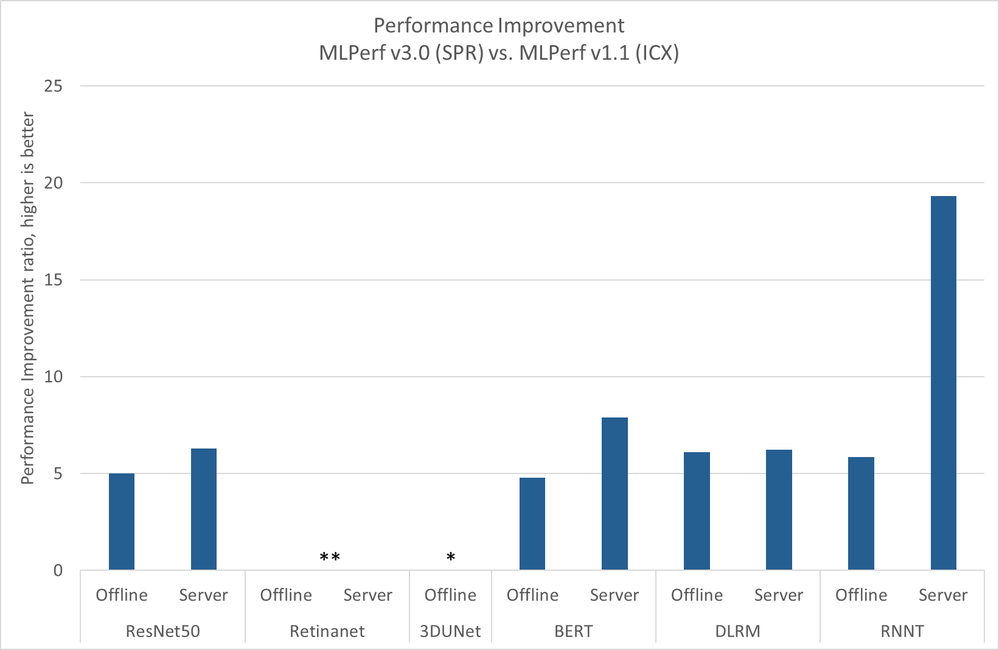

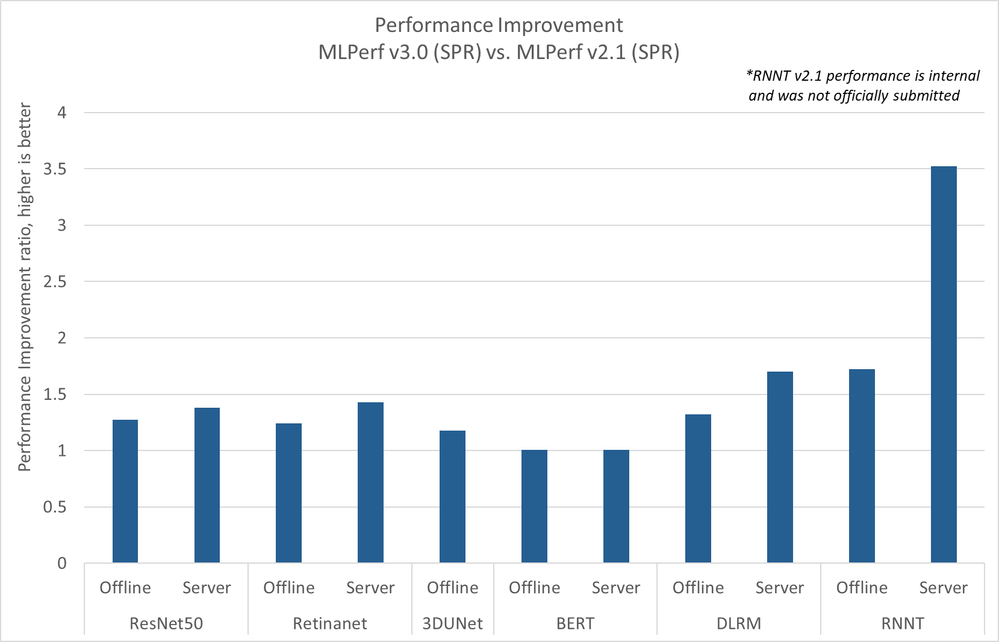

In Figure 1, we summarize the Gen-to-Gen performance ratios between 3rd and 4th Gen Intel® Xeon® Scalable processors (codenamed Ice Lake and Sapphire Rapids, respectively). In Figure 2, we show the performance improvements over our preview submission in v2.1, which were achieved through several software optimizations, including improvements in the Intel® oneAPI Deep Neural Network Library (oneDNN) and workload-specific optimizations.

The v3.0 submission features an average improvement of 1.2x for Offline and 1.4x for Server over the v2.1 “Preview” submission on Sapphire Rapids. Compared to the v1.1 submission on Ice Lake, this submission on Sapphire Rapids represents an improvement of over 5x in Offline performance and 10x in Server performance.

Figure 1 Comparison between MLPerf v1.1 submission on Ice Lake and v3.0 submission on Sapphire Rapids. *3D-UNET model changed from v1.1 to v3.0. **RetinaNet is a new benchmark in v3.0

Figure 2 Comparison between the preview submission (v2.1) on Sapphire Rapids and the current submission (v3.0). The observed performance gains are due to software optimizations (see the section on workload optimizations for details). *RNNT v2.1 performance is internal and was not submitted to MLPerf v2.1 Inference

Now let’s dive deeper into the software engineering behind the scenes for how we made it happen. The optimization techniques that we describe benefit the model classes in general, beyond the specific models listed. You can find the implementation details in our latest MLPerf inference v3.0 submission.

Software Optimization Highlights in MLPerf v3.0 Submission Results

We use Intel® Deep Learning Boost (Intel® DL Boost) technology, including Vector Neural Network Instructions (VNNI), and Intel® Advanced Matrix Extensions (Intel® AMX) in our INT8-based submissions on ResNet50-v1.5, RetinaNet, 3D-UNet, DLRM, and BERT. We use INT8 and bfloat16 (mixed precision) in our Recurrent Neural Network Transducer (RNN-T) submissions.

Apart from low-precision inference, the following optimization techniques are used in the submissions. This is not an exhaustive list, since software optimization for inference is a broad area with much exciting research and innovation in industry and academia.

3D-UNet

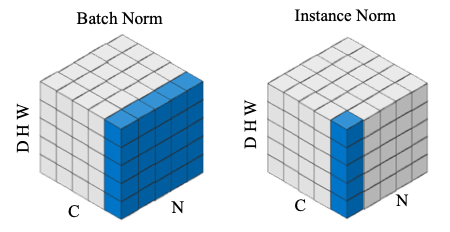

Optimizations performed with 3D-UNet closed division implementation

InstanceNorm is one of the normalization methods. Unlike BatchNorm, InstanceNorm normalizes each instance in the batch independently. Since a tensor can be stored in one of two memory formats, channel first or channel last, we need to consider the optimization methods for these two cases separately.

Figure 3 Picture shows the difference between BatchNorm and InstanceNorm. Blue blocks are the regions used to calculate mean and variance

Channel First (NCDHW)

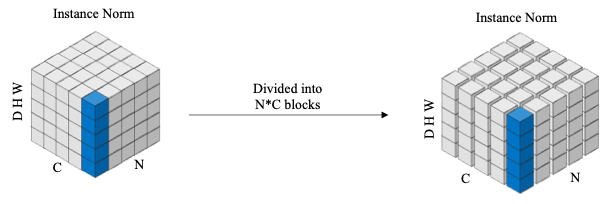

We refer to the DHW direction as the reduce direction. For a channel-first tensor, memory is contiguous in the reduce direction, so we can load the data directly into registers along the reduce direction to calculate the mean. During this calculation, the instances do not interfere with one another, so the tensor can be divided into N×C blocks for parallel calculation. The specific calculation method is shown in Figure 4.

Figure 4 Tensor is divided into N×C blocks for parallel calculation.
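As a rough illustration of the channel-first case, the sketch below treats an NCDHW tensor as a flat list and normalizes each of the N×C contiguous runs independently. It is a minimal sketch in plain Python rather than the submission's kernel: the function names and the `eps` value are assumptions made for the example, and the thread pool only illustrates that the blocks are independent.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def normalize_block(block, eps=1e-5):
    """Normalize one contiguous run of D*H*W values belonging to a single (n, c) pair."""
    mean = sum(block) / len(block)
    var = sum((v - mean) ** 2 for v in block) / len(block)
    inv_std = 1.0 / math.sqrt(var + eps)
    return [(v - mean) * inv_std for v in block]


def instance_norm_ncdhw(flat, n, c, dhw, eps=1e-5):
    """Instance-normalize an NCDHW tensor stored as one flat list of n*c*dhw values."""
    # In channel-first layout, block i (one (n, c) pair) is a contiguous slice,
    # so every block can be reduced without touching any other block.
    blocks = [flat[i * dhw:(i + 1) * dhw] for i in range(n * c)]
    with ThreadPoolExecutor() as pool:
        normalized = list(pool.map(lambda b: normalize_block(b, eps), blocks))
    return [v for block in normalized for v in block]


# Hypothetical example: N=1, C=2, D*H*W=4.
out = instance_norm_ncdhw([1.0, 2.0, 3.0, 4.0, 10.0, 20.0, 30.0, 40.0], n=1, c=2, dhw=4)
```

Because the second channel is just the first scaled by 10, both channels normalize to nearly the same values, which is a quick sanity check that each block uses its own statistics.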

Channel Last (NDHWC)

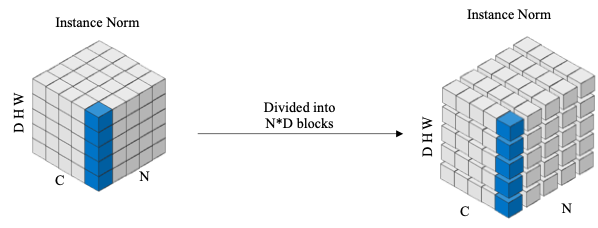

For a channel-last tensor, memory is contiguous in the channel direction, so we can only load data along the channel direction.

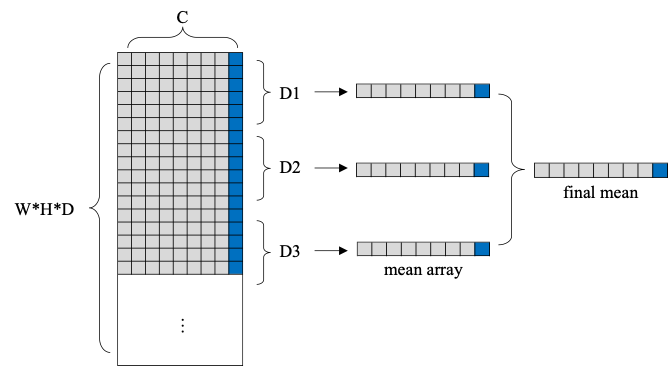

To improve efficiency, we divide the reduce direction into blocks, for example D blocks, and calculate the mean and variance of each block in parallel, which yields N×D means and variances. The specific steps are shown in Figures 5 and 6.

Figure 5 Tensor is divided into N×D blocks for parallel calculation.

Figure 6 Calculate each block's mean and variance, and then calculate the final mean and variance.

BERT

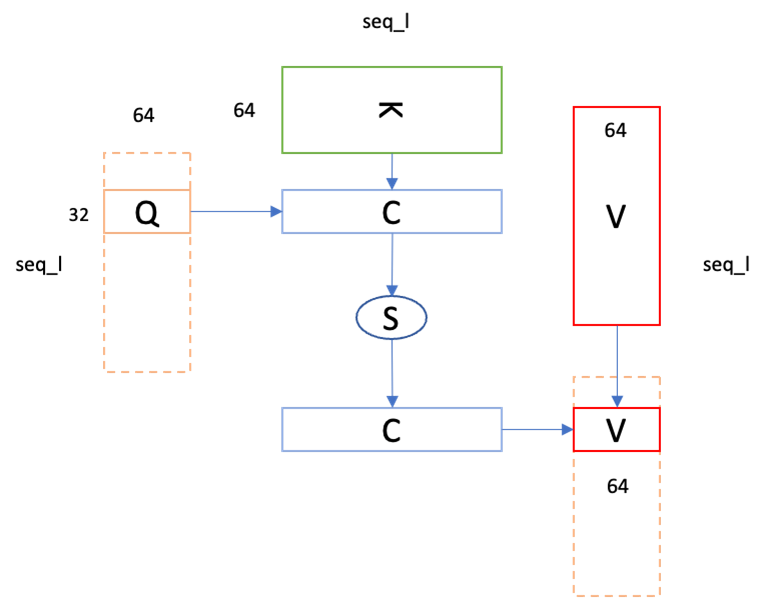

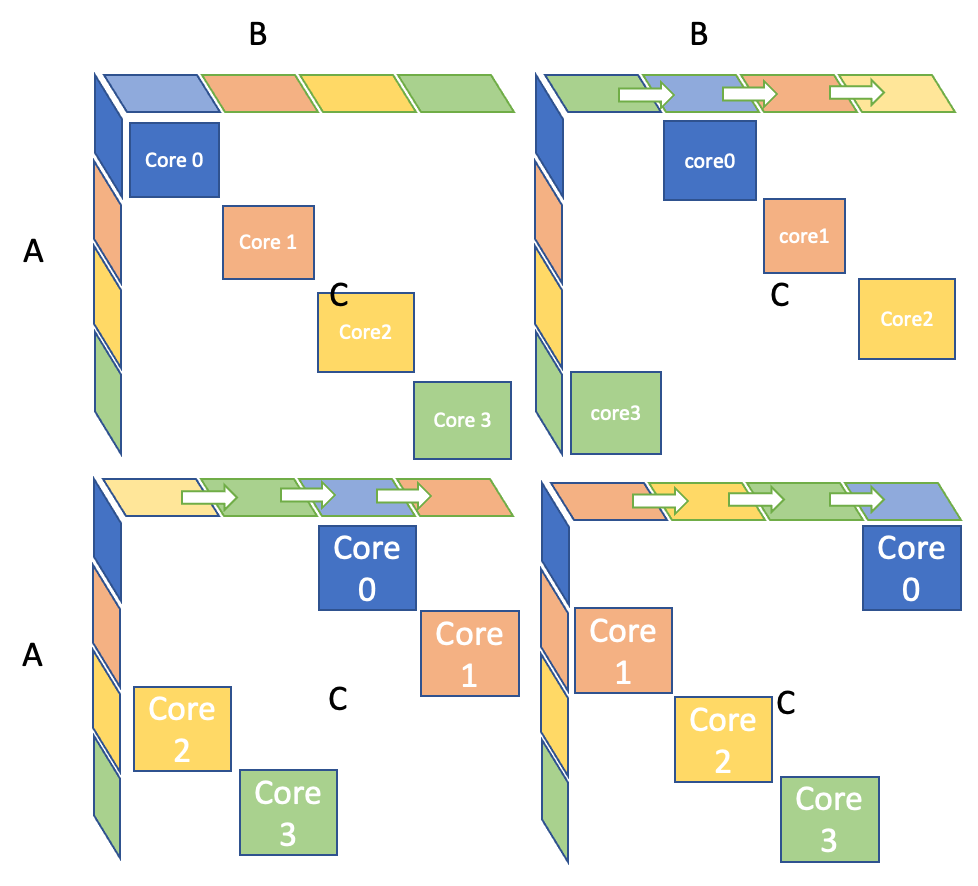

Optimizations performed with BERT closed division implementation

We implemented fused multi-head attention (MHA) with AMX GEMM. Each core computes the complex depicted in Figure 7, maximizing both AMX efficiency and parallelism.

Figure 7 Fused MHA compute complex for each parallel unit.

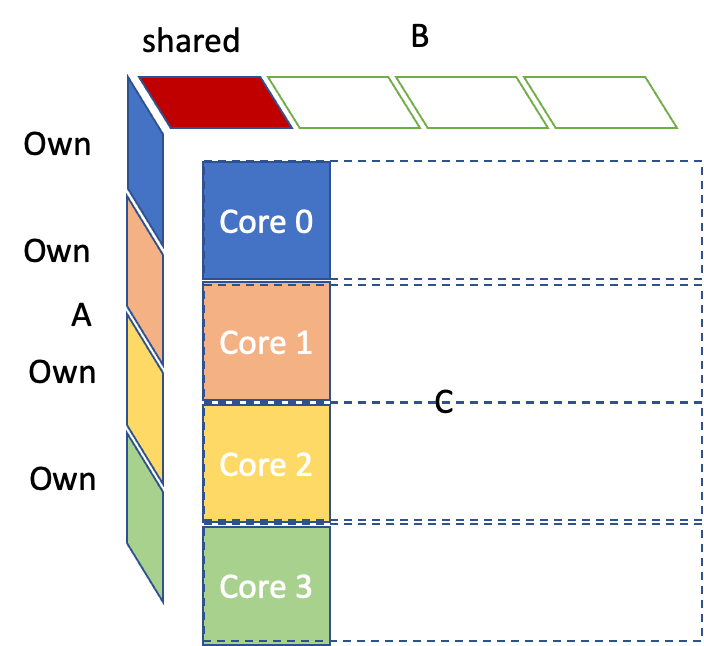

Memory and Cache Management

The AMX Linear optimization aims to keep most of the data inside the L2 cache. We maximize weight reuse by minimizing shared states in L2. Specifically, we shard each weight in L2 as depicted in Figure 8, in contrast to the suboptimal situation (Figure 9).

Figure 8 Weight sharding inside L2, distributing loads among the address spans of the A/B matrices

Figure 9 Unoptimized B matrix access causes L2 data to enter shared states, shrinking the effective L2 size.

DLRM

In the DLRM closed submission, we leverage Intel AMX instructions to speed up the MLP and interaction operators with oneDNN and customized kernels. Each MLP operator is fused with the elementwise operator that follows it, such as relu or sigmoid. oneDNN also supports fusion across mixed data types. For example, the top MLP layer mlp5 is fused with sigmoid: the weight and input of this fused operator are s8, while the output is fp32. The other MLP operators are fused with relu, and their output data type is s8. Row-wise quantization is also supported in oneDNN to improve accuracy. The interaction operator is fused with the embedding operator in a customized kernel to speed up this memory-bound operation. We optimized this kernel to reduce the number of instructions. The prefetch function provided by Intel x86 is also leveraged to overlap compute and communication so that bandwidth utilization can reach its peak.

Figure 10 Layout of Interaction
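To see why row-wise quantization helps accuracy, consider the minimal sketch below. It compares symmetric INT8 quantization with one scale for the whole weight matrix against one scale per row. It is plain Python, not the oneDNN API: the function names, the symmetric scheme with a 127 clamp, and the sample weights are all assumptions made for the example.

```python
def quantize(row, scale):
    """Symmetric INT8 quantization of one row with a given scale."""
    return [max(-127, min(127, round(v / scale))) for v in row]


def per_tensor_quantize(weight):
    """One scale shared by every row of the matrix."""
    scale = max(abs(v) for row in weight for v in row) / 127.0 or 1.0
    return [quantize(row, scale) for row in weight], [scale] * len(weight)


def rowwise_quantize(weight):
    """One scale per row, so small-valued rows keep their resolution."""
    scales = [max(abs(v) for v in row) / 127.0 or 1.0 for row in weight]
    return [quantize(row, s) for row, s in zip(weight, scales)], scales


def row_errors(weight, q, scales):
    """Largest absolute dequantization error in each row."""
    return [
        max(abs(v - qv * s) for v, qv in zip(row, qrow))
        for row, qrow, s in zip(weight, q, scales)
    ]


# Hypothetical weights: the first row is much smaller in magnitude than the second.
weight = [[0.01, -0.02, 0.015], [5.0, -3.0, 1.0]]
pt_err = row_errors(weight, *per_tensor_quantize(weight))
rw_err = row_errors(weight, *rowwise_quantize(weight))
```

With a single per-tensor scale, the large second row dictates the step size and the small first row collapses to almost nothing; with per-row scales, `rw_err[0]` is far smaller than `pt_err[0]` while the second row is unaffected.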

Other major optimizations we have done so far are:

- Adjust cache locality for the whole model to fit Sapphire Rapids’ cache.

- Fuse pre-processing and post-processing OPs.

- Leverage the intrinsic functions from the Intel SVML library for speedup.

ResNet50

We utilized graph compiler technology to generate a performance-oriented kernel with various optimizations aimed at improving compute efficiency and reducing memory overhead, as outlined below:

Optimizations from Computational Graph Level

We have enabled the use of large partitions that include multiple stages in the same outer loop along the batch axis. The layers within stage 1 and stage 2 are combined into the same outer loop and run with batch size 1, and the layers within stage 3 are combined into another outer loop and run with batch size 2. The layers within stage 4 run separately, because their weights become too large for multiple layers to be held in L2 cache.

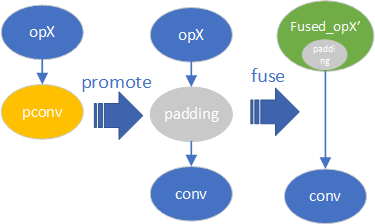

We have improved the handling of padding attributes by promoting them into convolution’s preceding op. This reduces the extra runtime overhead of memory copy during computational graph transformation, as shown in Figure 11.

Figure 11 Padding promotion transformation

We have improved the residual-add operation for all bottleneck blocks so that it operates directly on INT8, avoiding the overhead of extra conversions between the FP32 and INT8 data types.

RetinaNet

Optimizations performed with RetinaNet closed division implementation

RetinaNet optimizations range from weight-invariant model architecture modifications to customized post-processing subroutines.

Avoiding Memory Expensive Concatenation

For each of the regression and classification heads, we eliminate the concatenation of the 5 tensors generated from the output of the feature pyramid network, saving significant memory operations. Instead, we keep the tensors in a tuple and adapt the post-processing to work on a tuple rather than on one big tensor for each of the regression and classification outputs.

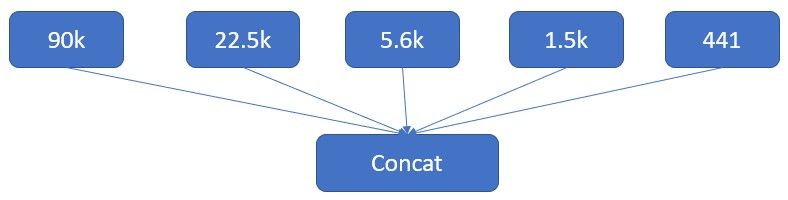

Figure 12 The original model architecture concatenates all 5 outputs of the regression head into one 120k x 4 tensor. Similarly, the classification head outputs are concatenated into one 120k x num_class tensor (num_class=264)
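The idea of working on a tuple instead of one concatenated tensor can be sketched in a few lines. The example below is a simplified, hypothetical illustration using plain Python lists of scores (one list per pyramid level); the function names are assumptions made for the example. A running offset keeps the returned IDs identical to the ones the concatenated version would produce, so downstream code does not need to change.

```python
def candidates_from_concat(levels, threshold):
    """Baseline: build one big list first (the copy we want to avoid), then filter."""
    flat = [score for level in levels for score in level]
    return [i for i, score in enumerate(flat) if score > threshold]


def candidates_from_tuple(levels, threshold):
    """Filter each level in place, tracking a running offset instead of concatenating."""
    ids, offset = [], 0
    for level in levels:
        ids.extend(offset + i for i, score in enumerate(level) if score > threshold)
        offset += len(level)
    return ids


# Hypothetical scores for three pyramid levels of different sizes.
levels = ([0.1, 0.9, 0.3], [0.7, 0.2], [0.05, 0.6, 0.8, 0.0])
assert candidates_from_tuple(levels, 0.5) == candidates_from_concat(levels, 0.5)
```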

Skipping Sigmoid Computation

Selection of candidate bounding boxes for IOU calculation relies on the logits from the classification scores passing a set threshold (usually set to c=0.05). We postpone this sigmoid compute, utilizing monotonicity of the sigmoid, and applying a modified threshold directly on input classification scores.
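The reasoning behind this step is that the sigmoid is strictly increasing, so sigmoid(x) > c holds exactly when x > ln(c / (1 - c)). The threshold can therefore be converted once into logit space and compared against the raw scores. The sketch below checks this equivalence in plain Python; the function names and sample logits are assumptions made for the example.

```python
import math


def logit(p):
    """Inverse of the sigmoid: the raw score whose sigmoid equals p."""
    return math.log(p / (1.0 - p))


def select_with_sigmoid(logits, c=0.05):
    """Reference path: apply the sigmoid to every score, then threshold."""
    return [i for i, x in enumerate(logits) if 1.0 / (1.0 + math.exp(-x)) > c]


def select_without_sigmoid(logits, c=0.05):
    """Optimized path: convert the threshold once and compare raw scores."""
    threshold = logit(c)
    return [i for i, x in enumerate(logits) if x > threshold]


# Hypothetical classification logits.
scores = [-5.0, -3.0, -2.9, 0.0, 4.0]
assert select_without_sigmoid(scores) == select_with_sigmoid(scores)
```

For c = 0.05 the converted threshold is ln(0.05 / 0.95), roughly -2.944, and the sigmoid only needs to be evaluated afterwards for the few scores that pass.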

Detection Boxes Post-processing

We utilized custom OpenMP parallelization to threshold and collect the IDs of the detection boxes that meet the above threshold. This replaces the default post-processing implementation, which relies on the expensive complementary masking routines of torch’s greater and where methods.

Other optimizations include passing quantized int8 inputs directly to the graph, reducing framework overhead. We also use a histogram observation method to collect quantization statistics, helping to preserve accuracy while keeping activations in signed int8 format.

RNN-T

Optimization for LSTM

We shard inputs and split weights between the cores to maximize the reuse of L2 cache. We also adjust the order of computation by fusing the first Linear of the LSTM cells along the layer axis to reuse the shared weight inside the LSTM layer. In addition, we leverage AVX512-FP16 instructions to speed up the post-ops in LSTM, using Sigmoid and Tanh implementations based on rational polynomial approximations.
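To give a feel for what a rational polynomial approximation looks like, the sketch below uses a well-known Padé-style approximant of tanh, x(27 + x²) / (27 + 9x²), clamped to ±1 outside [-3, 3], and derives the sigmoid from it through the identity sigmoid(x) = (1 + tanh(x / 2)) / 2. This particular formula is an assumption chosen for illustration; the source does not state which polynomials or coefficients the submission uses, and this sketch runs in ordinary double precision rather than FP16.

```python
import math


def tanh_rational(x):
    """Pade-style rational approximation of tanh, clamped outside [-3, 3]."""
    if x >= 3.0:
        return 1.0
    if x <= -3.0:
        return -1.0
    x2 = x * x
    # One division and a handful of multiply-adds, with no exponential.
    return x * (27.0 + x2) / (27.0 + 9.0 * x2)


def sigmoid_rational(x):
    """Sigmoid expressed through the tanh approximation."""
    return 0.5 * (1.0 + tanh_rational(0.5 * x))


# Compare against the exact functions on a grid of sample points.
grid = [i / 100.0 for i in range(-600, 601)]
max_tanh_error = max(abs(tanh_rational(x) - math.tanh(x)) for x in grid)
max_sigmoid_error = max(
    abs(sigmoid_rational(x) - 1.0 / (1.0 + math.exp(-x))) for x in grid
)
```

On this grid the approximation stays within about 0.03 of `math.tanh`. A production kernel would typically use a higher-order fit to tighten that error, while keeping the same structure of polynomial evaluation plus a single division.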

Optimization for Other Linear Layers

For the other Linear layers, we split the first Linear of the Joint Network into two separate Linear operators and an elementwise accumulation, and then fuse the two Linear operators, the elementwise Add, and the ReLU to reduce the memory footprint.
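The split relies on a simple identity: if a Linear layer consumes the concatenation of two inputs, then W·[f; g] + b equals W_f·f + W_g·g + b, where W_f and W_g are the column slices of W that line up with each input. The sketch below checks this in plain Python. It assumes the original Linear takes a concatenation of two vectors (here called `f` and `g`), which is an assumption about the joint network made for this example; all names are hypothetical.

```python
def linear(weight, bias, x):
    """Dense layer: one dot product per output row, plus bias."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


def joint_concat(weight, bias, f, g):
    """Baseline: materialize the concatenated input, then Linear + ReLU."""
    return [max(0.0, y) for y in linear(weight, bias, f + g)]


def joint_split(weight, bias, f, g):
    """Split form: two smaller Linears, an elementwise Add, and a ReLU."""
    k = len(f)
    w_f = [row[:k] for row in weight]  # columns that multiply f
    w_g = [row[k:] for row in weight]  # columns that multiply g
    y_f = linear(w_f, bias, f)         # bias is applied once, on this branch
    y_g = linear(w_g, [0.0] * len(bias), g)
    return [max(0.0, a + b) for a, b in zip(y_f, y_g)]


# Hypothetical small example: f has 1 feature, g has 2.
weight = [[1.0, -2.0, 0.5], [0.0, 3.0, -1.0]]
bias = [0.5, -1.0]
assert joint_split(weight, bias, [2.0], [1.0, 4.0]) == joint_concat(weight, bias, [2.0], [1.0, 4.0])
```

In the split form the concatenated buffer is never written, and the Add and ReLU can be applied while the second product is still in registers, which is where the memory footprint saving comes from.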

Intel Open Submission Result for MLPerf v3.0 Inference

Intel submitted models to the Open category with additional algorithmic optimizations, in addition to the submissions to the Closed division mentioned above. The Open division models include BERT and RetinaNet models. Hardware-algorithm co-optimization techniques help further improve the performance of these benchmark models on 4th Gen Intel® Xeon® processors.

BERT

Pruning and Compression with Intel Neural Compressor

Neural network pruning (often referred to simply as pruning or sparsity) is one of the most promising model compression techniques. It removes the least important parameters in the network and achieves compact architectures with minimal accuracy drop and maximal inference acceleration. As current state-of-the-art models have more and more parameters, pruning plays a crucial role in enabling them to run on devices with limited memory and computing resources.

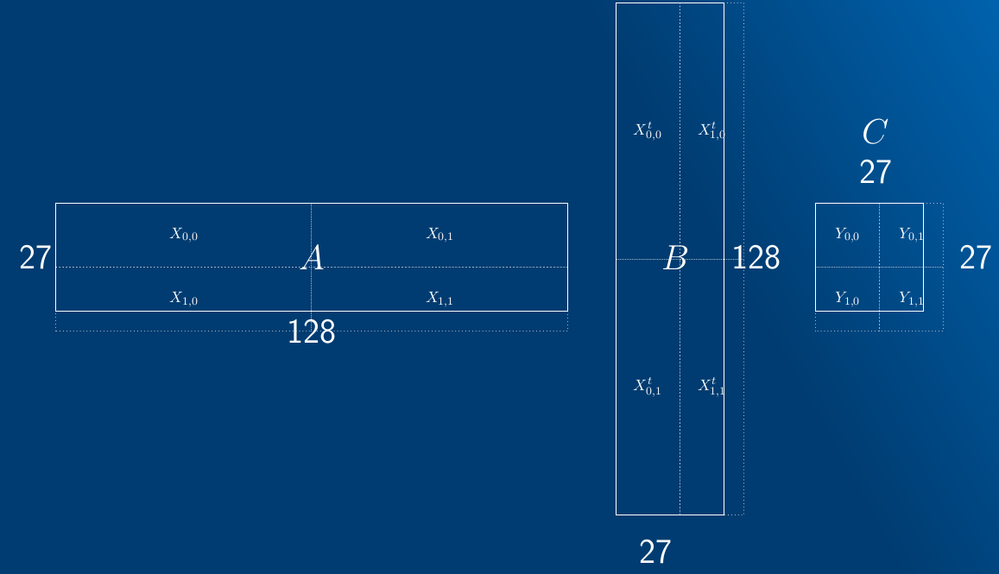

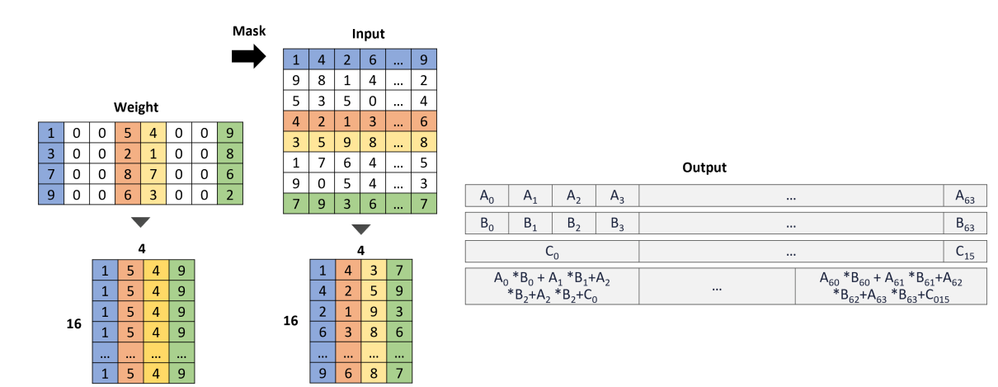

Figure 13 Sparsity construction
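As a simple illustration of block-wise sparsity, the sketch below zeroes out the lowest-scoring fixed-size blocks of a weight matrix. It uses the sum of absolute values as the importance score, which is one common criterion and an assumption here; the source does not state which criterion, block shape, or schedule was used in the submission. The function name and parameters are hypothetical.

```python
def prune_blocks(weight, block, sparsity):
    """Zero out the lowest-magnitude 1 x block blocks until `sparsity` of blocks are removed."""
    scored = []
    for r, row in enumerate(weight):
        for start in range(0, len(row), block):
            score = sum(abs(v) for v in row[start:start + block])
            scored.append((score, r, start))
    scored.sort()  # least important blocks first
    n_prune = int(len(scored) * sparsity)
    pruned = [list(row) for row in weight]
    for _, r, start in scored[:n_prune]:
        for j in range(start, min(start + block, len(pruned[r]))):
            pruned[r][j] = 0.0
    return pruned


# Hypothetical 2 x 4 weight with 1 x 2 blocks and a 50% block sparsity target.
weight = [[0.1, -0.1, 2.0, 3.0], [4.0, 1.0, 0.2, 0.0]]
pruned = prune_blocks(weight, block=2, sparsity=0.5)
```

Removing whole blocks rather than individual weights leaves a regular pattern that a kernel can skip efficiently, which is what makes the sparsity usable for acceleration.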

We implemented an INT8 sparse GEMM kernel to accelerate operations between dense inputs and sparse weights, leveraging AVX512-VNNI instructions. The accompanying figure illustrates how the kernel operates. Given a block-wise sparse weight, we broadcast the non-zero weight block to form a VNNI-format block A. Based on the mask in the sparse weight, we reorganize the corresponding input as another VNNI-format block B. The kernel then uses VNNI to produce the intermediate output from A and B, and adds bias C to produce the final output.

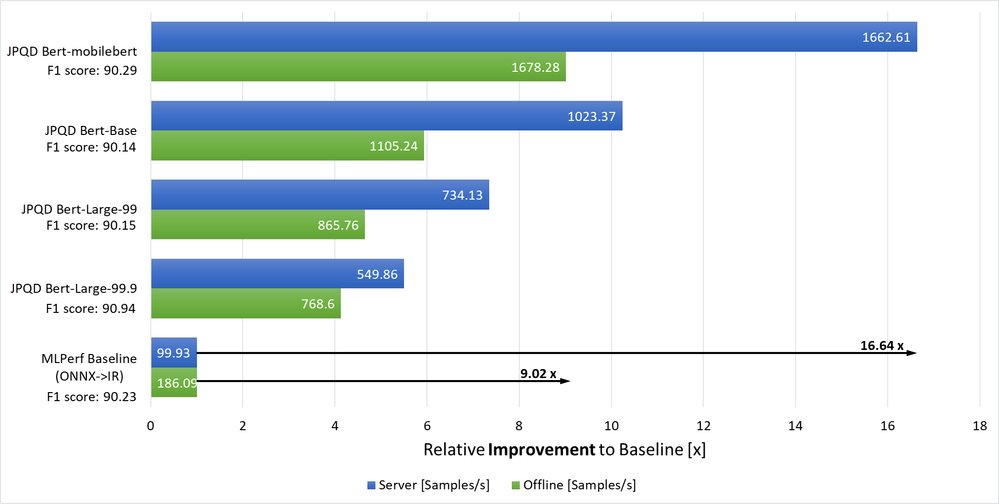
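The data flow of such a kernel can be mimicked in scalar Python: keep only the non-zero weight blocks, gather the matching slice of the input for each one, accumulate the integer products, and add the bias. This is a sketch of the general idea, not the submission's kernel; the block size of 4 (chosen because VNNI accumulates groups of four 8-bit products into a 32-bit lane), the storage format, and all names are assumptions made for the example.

```python
def to_blocks(weight, block=4):
    """Keep only non-zero blocks as (row, column start, values) triples."""
    blocks = []
    for r, row in enumerate(weight):
        for start in range(0, len(row), block):
            values = row[start:start + block]
            if any(values):
                blocks.append((r, start, values))
    return blocks


def sparse_linear_int8(blocks, bias, x):
    """Dense int8 input times block-sparse int8 weight, accumulated as integers, plus bias."""
    out = list(bias)
    for row, start, values in blocks:
        gathered = x[start:start + len(values)]  # only inputs under non-zero weights
        out[row] += sum(w * v for w, v in zip(values, gathered))
    return out


def dense_linear_int8(weight, bias, x):
    """Reference dense computation for comparison."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


# Hypothetical 2 x 8 int8 weight in which half of the 1 x 4 blocks are zero.
weight = [[1, -2, 3, 4, 0, 0, 0, 0], [0, 0, 0, 0, 5, 6, -7, 8]]
bias = [10, -10]
x = [1, 2, 3, 4, 5, 6, 7, 8]
blocks = to_blocks(weight)
assert sparse_linear_int8(blocks, bias, x) == dense_linear_int8(weight, bias, x)
```

Here only two of the four blocks are ever multiplied, so the work scales with the number of non-zero blocks rather than with the full weight size.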

Optimization with Joint Pruning Quantization and Distillation (JPQD)

Intel developed JPQD as a single joint-optimization pipeline that improves transformer inference performance by applying pruning, quantization, and distillation in parallel during transfer learning of a pretrained transformer. JPQD spares developers the complexity of applying different compression techniques sequentially. The output of JPQD is a structurally pruned, quantized model in OpenVINO IR, which is ready to deploy with OpenVINO runtimes optimized for a range of Intel devices. The JPQD feature is available in the OpenVINO suite of tools and can be invoked via Hugging Face Optimum-Intel.

In this submission, we applied JPQD to three pretrained BERT models (BERT-large, BERT-base, and MobileBERT), dialing structured sparsity to attain the target F1 accuracy for SQuADv1.1 as well as the MLPerf p99.9% and p99% target latency. We submitted four optimized models (JPQD-BERT-large-99.9, JPQD-BERT-large-99, JPQD-BERT-base-99, and JPQD-MobileBERT-99) to showcase the flexibility of JPQD technology in trading off accuracy against performance. The optimized models achieved up to 9X throughput improvement in the Offline scenario and up to 16.6X improvement in the Server scenario.

Figure 14 Open submission for BERT

See here for more information.

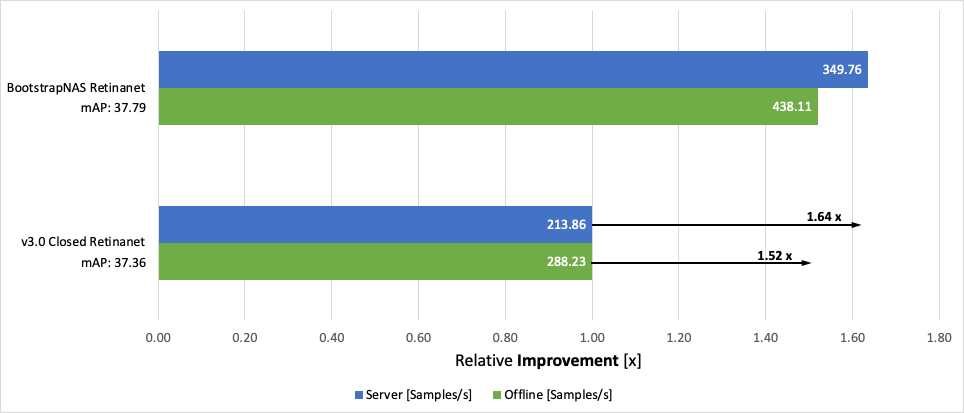

RetinaNet Optimization using BootstrapNAS Automated Neural Architecture Search

Intel developed BootstrapNAS, a Neural Architecture Search (NAS)-based model optimization solution available in the Neural Network Compression Framework (NNCF). It uses a pre-trained model as input to automatically generate a weight-sharing super-network, and once this super-network has been trained, it searches for high-performing subnetworks. We used BootstrapNAS to discover a 4th Gen Intel Xeon-optimized RetinaNet model. As illustrated in the figure below, we achieved an additional 1.64x improvement in MLPerf’s Server configuration and 1.52x in the Offline configuration. BootstrapNAS models are more efficient and improve the mean average precision (mAP) compared to the baseline models. More details about BootstrapNAS are available in our paper and submission results here.

Figure 15 Open submission for RetinaNet

Ease of use for Intel Submission of MLPerf Inference v3.0 Benchmarks

MLPerf is an industry-standard benchmark for measuring platform AI capabilities across different usage scenarios. To reduce the enabling effort for customers, we also provide containers for four PyTorch-based workloads, which allow users to run the MLPerf benchmarks with minimal effort. Please refer to the README.md of each workload at https://github.com/mlcommons/inference_results_v3.0/tree/master/closed/Intel/code to pull the MLPerf Intel containers and evaluate AI workload performance on Sapphire Rapids.

Looking Ahead

Intel is uniquely positioned to provide an ecosystem that caters to the demands of the ever-expanding frontiers of artificial intelligence. Our MLPerf v3.0 submissions underscore our commitment to providing our customers with recipes that unleash the full capabilities of Intel Xeon Scalable processors. We’ll continue to innovate and make it easy to build and deploy Intel AI solutions at scale, along with delivering significant generational performance gains on follow-on products like Emerald Rapids and Granite Rapids.

All workload optimizations presented herein have either been upstreamed to the respective frameworks or are in the process of being upstreamed.

Notices & Disclaimers

Performance varies by use, configuration and other factors. Learn more at our Performance Index page.

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure.

Your costs and results may vary.

Intel technologies may require enabled hardware, software or service activation.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

References

- MLPerf v1.1 Inference Data Center Closed Results https://github.com/mlcommons/inference_results_v1.1/tree/master/closed/Intel/results

- MLPerf v2.1 Inference Data Center Closed Results https://github.com/mlcommons/inference_results_v2.1/tree/master/closed/Intel/results

- MLPerf v3.0 Inference Data Center Closed Results https://github.com/mlcommons/inference_results_v3.0/tree/master/closed/Intel/results

- MLPerf v3.0 Inference Data Center Open Results https://github.com/mlcommons/inference_results_v3.0/tree/master/open/Intel/results
