Key Takeaways

- Find out how OpenVINO's 8-bit quantization alone delivers a 3.5x speedup of ResNet-50 image classification on several CPU types.

- Discover how OpenVINO, applied together with Deci’s Automated Neural Architecture Construction optimizer (AutoNAC), achieves up to 11x the throughput of vanilla ResNet-50, a significant advantage over various MLPerf submissions.

- Learn how a 1.4GHz Intel quad core i5, running a model optimized with OpenVINO and AutoNAC, competes with powerful datacenter CPUs in per-core inference throughput.

On September 18, Deci AI, in collaboration with Intel, submitted several models to the Open division of MLPerf v0.7. The models were optimized with Deci’s AutoNAC, then compiled and quantized to 8-bit precision with Intel’s OpenVINO. Compared with ResNet-50 v1.5, the submitted models reduced latency by up to 11.8x and increased throughput by up to 11x, all while preserving ResNet-50's accuracy (within 1%). Here’s the story.

MLPerf is a non-profit organization that provides fair and standardized benchmarks for measuring training and inference performance of machine learning hardware, software, and services. MLPerf began in February 2018 with a series of meetings between engineers and researchers from Baidu, Google, Harvard University, Stanford University, and UC Berkeley.

There are two independent benchmark suites in MLPerf: training and inference. Each inference benchmark is defined by a model, a dataset, a quality target, and a latency constraint. Every benchmark also has two divisions, Open and Closed. The Closed division is restricted to benchmarking a given neural architecture with specific hardware platforms and optimization techniques, whereas the Open division is intended to foster innovation and therefore allows the neural architecture to be modified.

The Deci-Intel submission to the Open division's ImageNet ResNet-50 track included results for the Single Stream and Offline scenarios, which measure latency and throughput, respectively. We ran our models on three hardware platforms: single-core and 8-core 2.8GHz Intel Cascade Lake CPUs (the n2-highcpu-2 and n2-highcpu-16 GCP instances, respectively), and a 1.4GHz Intel quad core i5-8257U in a 2019 MacBook Pro.
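To make the difference between the two scenarios concrete, here is a minimal timing sketch in Python. It is not MLPerf's LoadGen, which generates the queries and enforces the benchmark's timing rules itself; `toy_model` is a hypothetical CPU-bound function standing in for a compiled network, and we assume the Single Stream metric is a 90th-percentile latency. Single Stream issues one query at a time and waits for each result, so it reports a tail latency; Offline makes every sample available up front, so it can batch freely (batch size 64 here, mirroring the Offline setting reported in the results) and reports samples per second.

```python
import math
import time
from typing import Callable, List, Sequence

# Hypothetical stand-in for a compiled model: takes a batch, returns one output per input.
Model = Callable[[Sequence[float]], List[float]]


def percentile(values: Sequence[float], q: float) -> float:
    """Nearest-rank percentile of a non-empty sequence, with q in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def single_stream_latency_ms(model: Model, samples: Sequence[float], q: float = 90.0) -> float:
    """Single Stream sketch: one query at a time; report the q-th percentile latency in ms."""
    latencies = []
    for x in samples:
        start = time.perf_counter()
        model([x])  # batch of one: the next query is issued only after this one completes
        latencies.append((time.perf_counter() - start) * 1000.0)
    return percentile(latencies, q)


def offline_throughput(model: Model, samples: Sequence[float], batch_size: int = 64) -> float:
    """Offline sketch: all samples are available up front; report samples per second."""
    start = time.perf_counter()
    for i in range(0, len(samples), batch_size):
        model(samples[i:i + batch_size])
    return len(samples) / (time.perf_counter() - start)


def toy_model(batch: Sequence[float]) -> List[float]:
    """Hypothetical CPU-bound workload standing in for ResNet-50 inference."""
    return [sum(math.sin(x * k) for k in range(2000)) for x in batch]


if __name__ == "__main__":
    data = [i / 100 for i in range(256)]
    print(f"p90 latency: {single_stream_latency_ms(toy_model, data):.3f} ms")
    print(f"throughput: {offline_throughput(toy_model, data):.1f} samples/s")
```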

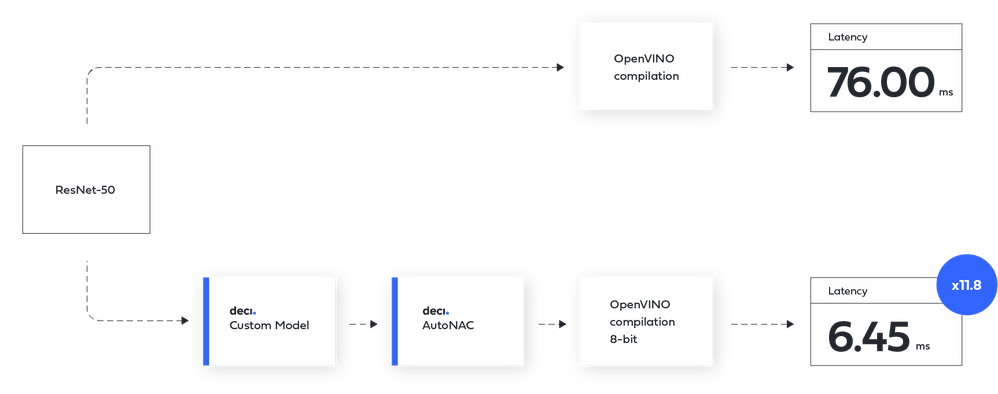

Figure 1: Submitted model. Without optimization, ResNet-50 has a latency of 76 ms on a single Intel Cascade Lake core; after Deci's optimization, the new model reaches 6.45 ms.

The starting point for the submission was ResNet-50 v1.5, with 76.45% top-1 accuracy. The objective was to minimize inference latency, or maximize throughput, while keeping accuracy above 75.7%. Deci’s proprietary technology can determine which operations compile more efficiently and, based on that, replace the architecture with one that compiles optimally. Our first step, therefore, was to replace ResNet-50 with a model that achieves similar accuracy but uses “compiler-aware” operations.

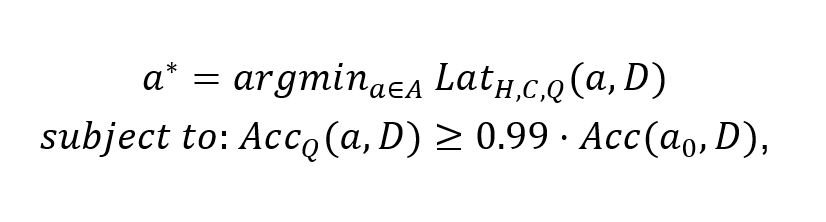

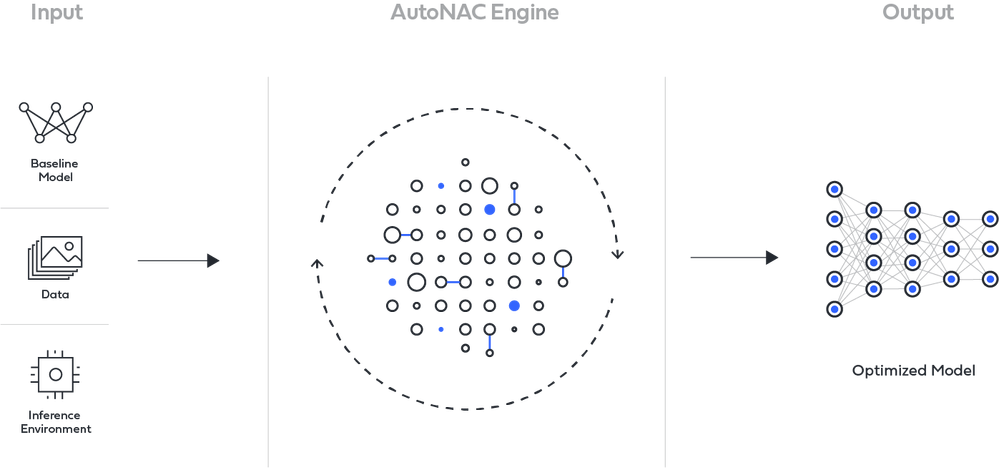

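The second step was to apply Deci’s Automated Neural Architecture Construction engine, AutoNAC, to reduce the model size. Starting from a baseline architecture (ResNet-50 in this case), AutoNAC applies an AI-based search over a large set of candidate revisions of the baseline. Its goal is to find new architectures that reduce latency while maintaining the accuracy of the baseline model. Writing $\mathrm{Acc}_Q(a, D)$ for the top-1 accuracy of network $a$ on data $D$ after quantization to level $Q$, and $\mathrm{Acc}(a_0, D)$ for the accuracy of the baseline, we are formally searching for an architecture that approximates the solution to the following constrained optimization problem:

$$a^{*} = \arg\min_{a \in A} \mathrm{Lat}_{H,C,Q}(a, D) \quad \text{subject to} \quad \mathrm{Acc}_Q(a, D) \geq 0.99 \cdot \mathrm{Acc}(a_0, D)$$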

where $A$ is the set of all possible architectures in the search space, $a_0$ is the baseline architecture (ResNet-50), $D$ is the given dataset (ImageNet in our case), and $\mathrm{Lat}_{H,C,Q}(a, D)$ is the latency of a given network $a$ on data $D$ on hardware $H$, with compiler $C$ and quantization level $Q$. In other words, every candidate network must, after the desired quantization, reach an accuracy within 1% of the accuracy ResNet-50 achieves on ImageNet.
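The constraint and the objective can be sketched in a few lines of Python. This is a minimal illustration, not AutoNAC: it assumes each candidate already comes with a measured latency and a post-quantization accuracy, reads "within 1%" as a relative tolerance (which matches the 75.7% target stated earlier, since 0.99 × 76.45 ≈ 75.7), and uses hypothetical candidate names and illustrative values rather than measurements.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

BASELINE_TOP1 = 76.45      # ResNet-50 v1.5 top-1 accuracy (%) quoted in the text
RELATIVE_TOLERANCE = 0.01  # "within 1%", read as a relative tolerance (assumption)


@dataclass(frozen=True)
class Candidate:
    name: str
    latency_ms: float      # Lat_{H,C,Q}(a, D), measured on the target hardware/compiler/quantization
    quantized_top1: float  # Acc_Q(a, D): top-1 accuracy (%) after quantization to level Q


def accuracy_threshold(baseline: float = BASELINE_TOP1,
                       tol: float = RELATIVE_TOLERANCE) -> float:
    """Lowest accuracy a candidate may have: (1 - tol) * baseline."""
    return (1.0 - tol) * baseline


def best_feasible(candidates: Iterable[Candidate]) -> Optional[Candidate]:
    """argmin of latency over the candidates that satisfy the accuracy constraint."""
    threshold = accuracy_threshold()
    feasible = [c for c in candidates if c.quantized_top1 >= threshold]
    return min(feasible, key=lambda c: c.latency_ms, default=None)


# Hypothetical candidates with illustrative values (not measurements).
pool = [
    Candidate("candidate-a", latency_ms=9.0, quantized_top1=76.1),
    Candidate("candidate-b", latency_ms=5.0, quantized_top1=75.2),  # violates the constraint
    Candidate("candidate-c", latency_ms=7.0, quantized_top1=75.8),
]
print(round(accuracy_threshold(), 1))  # 75.7
print(best_feasible(pool).name)        # candidate-c
```

The fastest candidate is rejected because it falls below the threshold, so the argmin is taken only over the feasible set.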

Our search space is discrete and starts from the ResNet-50 baseline architecture. At every step, we apply a set of neural architecture modification operators to the current architecture. These operators may replace a layer, change its size, remove a layer, or change hyperparameters such as strides or filter size. Applying these operators one after the other spans a search tree in which each node is an architecture with an associated accuracy and latency. This search space is intractable, so instead of enumerating it we explore it with a heuristic, AI-guided tree search algorithm. For each candidate architecture, we measure the latency and estimate the accuracy. Training every candidate to full accuracy would be prohibitively expensive, so we use specialized techniques to estimate an architecture’s accuracy without full training.
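To make the search concrete, here is a minimal sketch of a generic best-first tree search over architectures. It is not Deci's AutoNAC algorithm, which is proprietary: the architecture encoding (a tuple of layer widths), the two operators, the latency cost model, and the accuracy proxy are all hypothetical stand-ins for on-device latency measurement and for the accuracy-estimation techniques mentioned above. The pruning step assumes that shrinking a network never raises its estimated accuracy, which holds for this toy proxy.

```python
import heapq
from typing import Callable, Iterator, Optional, Tuple

# Hypothetical encoding: an architecture is a tuple of layer widths (channels).
Arch = Tuple[int, ...]

BASELINE: Arch = (64, 128, 256, 512, 512)  # toy stand-in for ResNet-50
BASELINE_TOP1 = 76.45                       # from the text
MIN_TOP1 = 0.99 * BASELINE_TOP1             # "within 1%" of the baseline


def neighbors(arch: Arch) -> Iterator[Arch]:
    """Toy modification operators: remove one layer, or halve one layer's width."""
    for i in range(len(arch)):
        if len(arch) > 1:
            yield arch[:i] + arch[i + 1:]
        if arch[i] >= 16:
            yield arch[:i] + (arch[i] // 2,) + arch[i + 1:]


def toy_latency(arch: Arch) -> float:
    """Hypothetical cost model: products of consecutive widths (3 input channels)."""
    widths = (3,) + arch
    return float(sum(a * b for a, b in zip(widths, widths[1:])))


def toy_accuracy(arch: Arch) -> float:
    """Hypothetical accuracy proxy that strictly drops as total width shrinks."""
    capacity = sum(arch) / sum(BASELINE)
    return BASELINE_TOP1 - 5.0 * (1.0 - capacity)


def tree_search(
    root: Arch,
    latency: Callable[[Arch], float],
    estimate_accuracy: Callable[[Arch], float],
    min_accuracy: float,
    budget: int = 500,
) -> Optional[Arch]:
    """Best-first search that always expands the lowest-latency node in the frontier."""
    best: Optional[Arch] = None
    best_latency = float("inf")
    frontier = [(latency(root), root)]
    seen = {root}
    expanded = 0
    while frontier and expanded < budget:
        lat, arch = heapq.heappop(frontier)
        expanded += 1
        if estimate_accuracy(arch) < min_accuracy:
            # Prune: the toy operators only shrink networks and the toy proxy
            # never rewards shrinking, so no descendant can become feasible.
            continue
        if lat < best_latency:
            best, best_latency = arch, lat
        for child in neighbors(arch):
            if child not in seen:
                seen.add(child)
                heapq.heappush(frontier, (latency(child), child))
    return best


found = tree_search(BASELINE, toy_latency, toy_accuracy, MIN_TOP1)
print(found, toy_latency(found), round(toy_accuracy(found), 2))
```

Ordering the frontier by latency steers the search toward fast networks, and the accuracy check keeps it inside the feasible region; the `budget` parameter caps how many nodes are expanded, which is what keeps an otherwise intractable tree affordable to explore.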

Figure 2: Illustration of the AutoNAC procedure. Data, target hardware, and target compiler are used as inputs. The algorithm produces a new model with the lowest latency (highest throughput) that meets the target accuracy.

Deci’s AutoNAC optimization for MLPerf took only 400 GPU hours (slightly more than three times the cost of training a single ResNet-50 on ImageNet), whereas known architecture optimization algorithms can take tens of thousands of GPU hours. In addition to being hardware-aware, AutoNAC is also compiler-aware, which lets it make the most of the optimizations available in Intel’s OpenVINO compiler. Figure 3 illustrates the combined process as the entire inference acceleration stack, from hardware devices at the bottom to the AutoNAC optimizer at the top level, which offers purely algorithmic acceleration via architecture search. AutoNAC is complementary to the other levels: it accelerates neural models while amplifying the acceleration achieved by hardware devices, graph compilers, and quantizers.

With the trained model from AutoNAC in hand, the third step of the optimization was to compile it with Intel’s OpenVINO. Specifically, we used the OpenVINO Post-Training Optimization Toolkit to perform 8-bit quantization on the model, converting the network’s trained weights to 8-bit precision.

Unlike 32-bit or 16-bit precision, 8-bit quantization generally comes at some cost in accuracy. Here, however, the selected model was robust to 8-bit quantization, and the accuracy degradation was negligible (around 0.3%). Moreover, quantization sped up run time by more than 2.5x relative to vanilla compilation.
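The idea behind 8-bit post-training quantization of weights can be illustrated with a simple symmetric, per-tensor scheme. This is a minimal sketch of the general technique, not the algorithm used by the OpenVINO Post-Training Optimization Toolkit (which also calibrates activations on sample data and has its own configuration); the weight values are arbitrary illustrative numbers. Each float weight is mapped to an integer in [-127, 127] through one shared scale, and the round-trip error shows where a small accuracy loss can come from.

```python
from typing import List, Sequence, Tuple


def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: w ≈ scale * q, with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Map 8-bit integers back to approximate float weights."""
    return [scale * v for v in q]


weights = [0.42, -1.27, 0.003, 0.91, -0.5]  # illustrative values only
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q)
print(f"scale={scale:.4f}, max abs error={max_error:.4f}")
```

Each weight moves by at most half a quantization step (`scale / 2`); whether that perturbation costs accuracy depends on how sensitive the network is, which is why robustness to quantization matters when selecting the architecture.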

Figure 3: Deci’s end-to-end inference acceleration stack, featuring its AutoNAC technology at the purely algorithmic level. AutoNAC is hardware-aware: it takes into account, and amplifies, all the other layers in the stack, such as quantization and the graph compiler.

Read more about AutoNAC in the technology white paper.

Results

We made submissions on three hardware platforms: single-core and 8-core 2.8GHz Intel Cascade Lake CPUs, and a 1.4GHz 8th-generation Intel quad core i5 in a 2019 MacBook Pro. For each machine, we submitted to both the latency and the throughput scenarios. In accordance with the MLPerf rules, our submissions stayed within 1% of ResNet-50's accuracy while improving inference time by factors ranging from 2.6x to 11.8x. The largest speedup was measured against ResNet-50 compiled with Intel’s OpenVINO.

Let’s dive into the results. The first scenario we submitted to was the MLPerf Single Stream scenario, which measures latency. As shown in Table 1, our improvement over vanilla ResNet-50 ranged from 5.16x to 11.8x. To isolate the improvement due to AutoNAC, we also compare our submitted model with ResNet-50 compiled to 8-bit precision; on the Cascade Lake machines, this comparison shows an improvement of 2.6x to 3.3x.

| Hardware | ResNet-50 OpenVINO 32-bit[1] | ResNet-50 OpenVINO 8-bit[1] | Deci[2] | Deci’s Boost |
| --- | --- | --- | --- | --- |
| 1.4GHz 8th-generation Intel quad core i5 MacBook Pro 2019 | 83 | 83 | 7 | 11.8x |
| 1 Intel Cascade Lake Core | 76 | 21 | 6.45 | 3.3 - 11.8x |
| 8 Intel Cascade Lake Cores | 11 | 5.5 | 2.13 | 2.6 - 5.16x |

Table 1: Single Stream scenario, latency (ms). Three hardware types were tested. The 32-bit column (vanilla ResNet-50) is ResNet-50 compiled with OpenVINO to 32-bit, the 8-bit column is the same model compiled to 8-bit, and the Deci column is the custom model after AutoNAC with 8-bit compilation. Deci’s Boost gives the Deci model's speedup relative to the 8-bit and the 32-bit ResNet-50, respectively (on the MacBook, the two baselines coincide).

Our second submission was to the Offline scenario, which measures throughput. Throughput was measured with batch size 64 in a single data stream. As shown in Table 2, the improvement over vanilla ResNet-50 ranged from 6.9x to 11x. When we isolate the net effect of AutoNAC by comparing against the 8-bit ResNet-50 on the Cascade Lake machines, the improvement factor is 2.7x to 3.1x.

| Hardware | ResNet-50 OpenVINO 32-bit[3] | ResNet-50 OpenVINO 8-bit[3] | Deci[4] | Deci’s Boost |
| --- | --- | --- | --- | --- |
| 1.4GHz 8th-generation Intel quad core i5 MacBook Pro 2019 | 30 | 30 | 207 | 6.9x |
| 1 Intel Cascade Lake Core | 14 | 50 | 154 | 3.1 - 11x |
| 8 Intel Cascade Lake Cores | 110 | 410 | 1092 | 2.7 - 9.9x |

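Table 2: Offline scenario, throughput (images per second). Three hardware types were tested. The 32-bit column (vanilla ResNet-50) is ResNet-50 compiled with OpenVINO to 32-bit, the 8-bit column is the same model compiled to 8-bit, and the Deci column is the custom model after AutoNAC with 8-bit compilation. Deci’s Boost gives the Deci model's improvement relative to the 8-bit and the 32-bit ResNet-50, respectively (on the MacBook, the two baselines coincide).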
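The Deci’s Boost columns in Tables 1 and 2 are plain ratios of the reported numbers: for latency, the baseline divided by Deci's value; for throughput, Deci's value divided by the baseline. The short script below recomputes them from the table values. Because the tables report rounded numbers, a recomputed ratio can differ from the published one in the last digit; for example, 83 / 7 gives about 11.9 for the MacBook, where Table 1 reports 11.8x, presumably because the 7 ms latency is itself rounded.

```python
# Values copied from Tables 1 and 2: (32-bit ResNet-50, 8-bit ResNet-50, Deci)
LATENCY_MS = {
    "MacBook Pro i5 (4 cores)": (83, 83, 7),
    "1 Cascade Lake core": (76, 21, 6.45),
    "8 Cascade Lake cores": (11, 5.5, 2.13),
}
THROUGHPUT_IPS = {
    "MacBook Pro i5 (4 cores)": (30, 30, 207),
    "1 Cascade Lake core": (14, 50, 154),
    "8 Cascade Lake cores": (110, 410, 1092),
}


def latency_boost(baseline_ms: float, deci_ms: float) -> float:
    """Lower latency is better, so the speedup is baseline / new."""
    return baseline_ms / deci_ms


def throughput_boost(baseline_ips: float, deci_ips: float) -> float:
    """Higher throughput is better, so the speedup is new / baseline."""
    return deci_ips / baseline_ips


for name, (fp32, int8, deci) in LATENCY_MS.items():
    print(f"latency    {name}: {latency_boost(int8, deci):.2f}x vs 8-bit, "
          f"{latency_boost(fp32, deci):.2f}x vs 32-bit")
for name, (fp32, int8, deci) in THROUGHPUT_IPS.items():
    print(f"throughput {name}: {throughput_boost(int8, deci):.2f}x vs 8-bit, "
          f"{throughput_boost(fp32, deci):.2f}x vs 32-bit")
```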

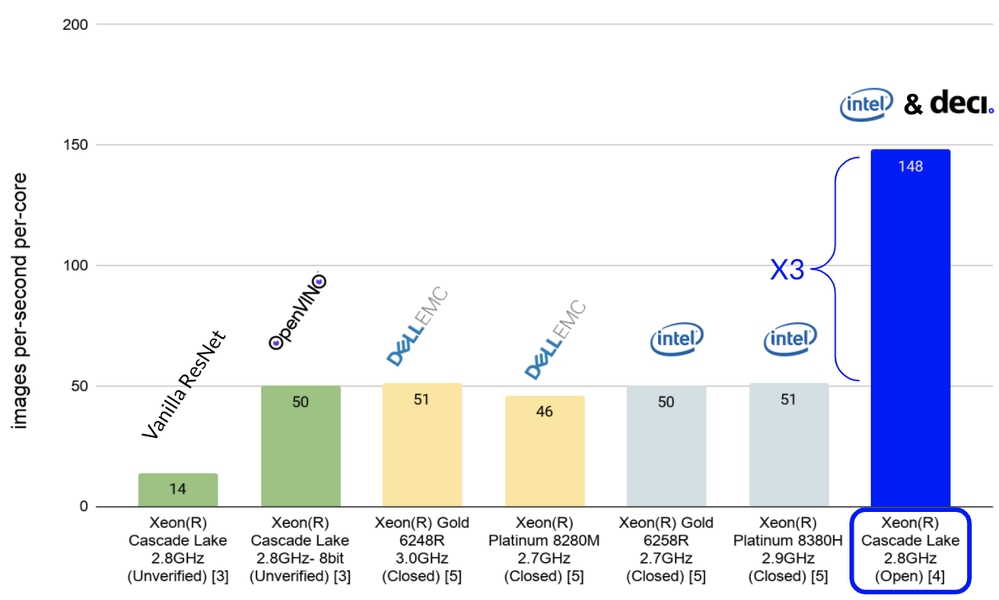

Shining bright compared to other submissions

We used a “throughput per core” measure to compare the speedups of the different submissions on an apples-to-apples basis; that is, we normalized each submission's throughput by its number of cores. Note, however, that the submissions use different hardware and were made to different MLPerf divisions. The comparison is therefore limited in this sense, but it still offers a good general picture of where we stand.
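The normalization itself is a single division. As a minimal sketch, the snippet below applies it to the Offline results of our own submissions from Table 2 (the other submissions' figures are not reproduced here). It assumes the core counts given in the hardware descriptions: four for the quad core MacBook i5, and one or eight for the Cascade Lake instances.

```python
# Offline throughput (images/s) of the submitted Deci models, from Table 2,
# paired with the number of cores of each machine (assumed from the hardware descriptions).
DECI_OFFLINE = {
    "MacBook Pro i5 (quad core)": (207, 4),
    "1 Cascade Lake core": (154, 1),
    "8 Cascade Lake cores": (1092, 8),
}


def throughput_per_core(images_per_second: float, cores: int) -> float:
    """Normalize throughput by core count (not MLPerf's primary metric)."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return images_per_second / cores


for name, (ips, cores) in DECI_OFFLINE.items():
    print(f"{name}: {throughput_per_core(ips, cores):.2f} images/s per core")
```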

As shown in Figure 4, we compared our submissions with six others: four submissions to the Closed division[5] and two unverified Open-division results[1][3]. Our submission on the Cascade Lake hardware achieves a 3x improvement in throughput per core relative to all other submissions[6].

Figure 4: Comparison of throughput per core[6] among submissions. The x-axis lists the submissions from other companies and our Intel Cascade Lake core submission; the y-axis shows each submission's throughput per core. The green bars are unverified results[3], the yellow and azure bars are Closed-division submissions[5], and the blue bar is our Open-division submission[4].

The future of CPU in deep learning inference

The acceleration of deep learning inference on CPU has been a longstanding challenge for the deep learning community. Many research groups around the globe are trying to reduce both the latency and memory footprint of neural networks to enable more extensive use of deep learning inference on CPU devices.

MLPerf enables companies and practitioners around the world to compare their models on a fair benchmark. The model we submitted improved ResNet-50's performance by up to 11x, thanks to the synergy between Intel’s OpenVINO compiler and Deci’s AutoNAC.

The Deci-Intel collaboration is a significant step toward enabling deep learning inference on CPUs. Accelerating inference latency by a factor of 11 has numerous direct implications. Communication time and the costs associated with cloud GPU machines are reduced, enabling new applications of deep learning inference on CPU edge devices. New deep learning tasks can be performed in real time on edge devices. And companies running large-scale inference can dramatically cut cloud costs simply by moving inference from GPUs to CPUs. We look forward to setting new records in the next MLPerf round.

[1] MLPerf v0.7 Inference Open ResNet-v1.5 Single-stream; result not verified by MLPerf. Source: Intel.

[2] MLPerf v0.7 Inference Open ResNet-v1.5 Single-stream, entry Inf-0.7-158-159,173; retrieved from www.mlperf.org on 21 October 2020.

Configuration details: 1.4GHz 8th-generation Intel quad core i5 MacBook Pro 2019, 1 Intel Cascade Lake core, and 8 Intel Cascade Lake cores.

[3] MLPerf v0.7 Inference Open ResNet-v1.5 Offline; result not verified by MLPerf. Source: Intel.

[4] MLPerf v0.7 Inference Open ResNet-v1.5 Offline, entry Inf-0.7-158-159,173; retrieved from www.mlperf.org on 21 October 2020.

Configuration details: 1.4GHz 8th-generation Intel quad core i5 MacBook Pro 2019, 1 Intel Cascade Lake core, and 8 Intel Cascade Lake cores.

[5] MLPerf v0.7 Inference Closed ResNet-v1.5 Offline, entries Inf-0.7-87,78,100,122; retrieved from www.mlperf.org on 21 October 2020.

[6] Throughput per core is not the primary metric of MLPerf. See www.mlperf.org for more information.

Notices & Disclaimers

Software and workloads used in performance tests may have been optimized for performance only on Intel microprocessors.

Performance tests, such as SYSmark and MobileMark, are measured using specific computer systems, components, software, operations and functions. Any change to any of those factors may cause the results to vary. You should consult other information and performance tests to assist you in fully evaluating your contemplated purchases, including the performance of that product when combined with other products. For more complete information visit www.intel.com/benchmarks.

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure.

Your costs and results may vary.

Intel technologies may require enabled hardware, software or service activation.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

Optimization Notice: Intel's compilers may or may not optimize to the same degree for non-Intel microprocessors for optimizations that are not unique to Intel microprocessors. These optimizations include SSE2, SSE3, and SSSE3 instruction sets and other optimizations. Intel does not guarantee the availability, functionality, or effectiveness of any optimization on microprocessors not manufactured by Intel. Microprocessor-dependent optimizations in this product are intended for use with Intel microprocessors. Certain optimizations not specific to Intel microarchitecture are reserved for Intel microprocessors. Please refer to the applicable product User and Reference Guides for more information regarding the specific instruction sets covered by this notice.

Intel does not control or audit third-party data. You should consult other sources to evaluate accuracy.

Mary is the Community Manager for this site. She likes to bike, and do college and career coaching for high school students in her spare time.
