Our goal is to empower developers with choice, allowing them to build the way they want and then deploy on the Intel hardware that works best.

GitHub: https://github.com/microsoft/onnxruntime/blob/master/docs/execution_providers/OpenVINO-ExecutionProvider.md

URL: https://software.intel.com/openvino-toolkit

Deep learning is one of the most dynamic areas of artificial intelligence (AI) today. Using deep learning, medical personnel are improving their ability to spot tumors in hard-to-read images. Retailers are delivering innovative shopping experiences. Cities are enhancing everything from air quality to traffic safety to security. Robots that “see” are bringing unprecedented efficiencies to factory floors and assembly lines.

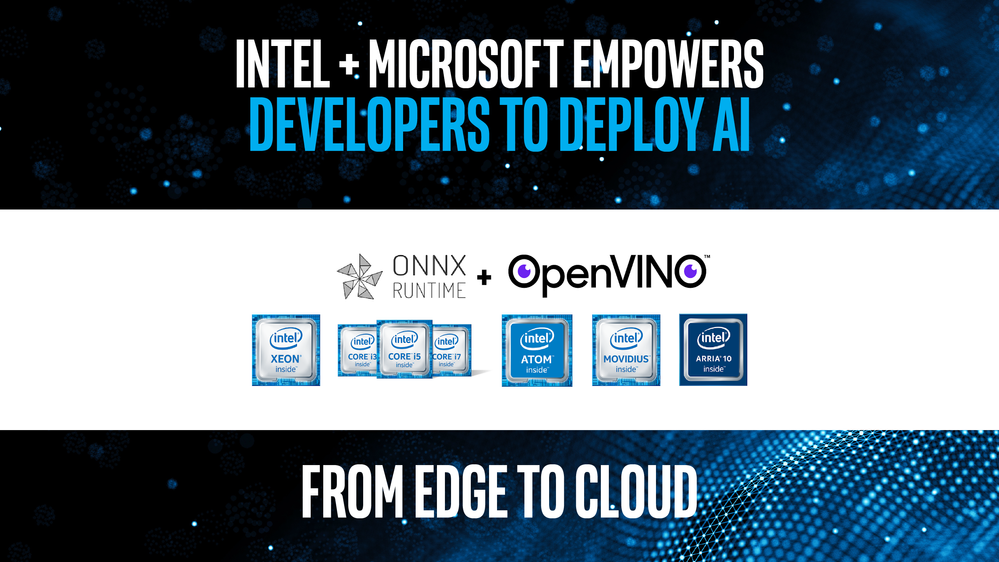

To give developers a write-once, deploy-anywhere path for deep learning applications, Intel introduced the Intel® Distribution of OpenVINO™ toolkit in 2018. It lets developers build deep learning applications the way they want and deploy them on a variety of Intel® hardware, including CPUs, integrated GPUs, Intel® Movidius™ VPUs, and FPGAs. Microsoft introduced ONNX Runtime (RT), the inference engine for Open Neural Network Exchange (ONNX) models, which gives developers the flexibility to choose their deep learning framework and run models efficiently anywhere.

In a blog I published in 2019, I discussed how Intel and Microsoft are advancing edge-to-cloud inference for AI. We publicly introduced how ONNX Runtime leverages the Intel® Distribution of OpenVINO™ toolkit via the execution provider (EP) plugin for high-performance deep learning on Intel® architecture. Paired together, they let developers build the way they want, with a choice of deep learning frameworks and deployment environments, bridging the gap between developing AI solutions in the cloud and deploying them to edge devices.

Our goal this year remains the same: to empower developers with choice, allowing them to build the way they want and then deploy on the Intel hardware that works best for their solution, no matter which framework or hardware type they use. The latest EP for OpenVINO™ toolkit expands operator coverage, introduces new graph capabilities, and serves as the single, unified execution provider for running inference workloads across all Intel® architecture-based devices from edge to cloud. What’s more, developers can take advantage of the latest improvements in an expanded set of validated developer kits, along with remote prototyping, development and testing environments.

Improvements to Enable End-to-End AI Deployments

Last year, Intel and Microsoft announced the general availability of the ONNX Runtime EP plugin for OpenVINO™ toolkit. The EP plugin enables developers to take advantage of optimizations powered by OpenVINO™ toolkit on diverse Intel® hardware types, including CPUs, integrated GPUs, FPGAs and VPUs.
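As a rough illustration of how an application might opt in to the EP, the sketch below prefers the OpenVINO™ execution provider when the installed ONNX Runtime build exposes it and otherwise falls back to the default CPU provider. The helper names `choose_providers` and `make_session` are hypothetical, and the sketch assumes a recent ONNX Runtime Python package in which `InferenceSession` accepts a `providers` list; older releases may need a different call.

```python
# Provider names as registered by ONNX Runtime.
OPENVINO_EP = "OpenVINOExecutionProvider"
CPU_EP = "CPUExecutionProvider"


def choose_providers(available):
    """Return providers in priority order: OpenVINO first if present, then CPU."""
    preferred = [OPENVINO_EP, CPU_EP]
    return [p for p in preferred if p in available]


def make_session(model_path):
    """Create an inference session, preferring the OpenVINO EP when available.

    Hypothetical helper: onnxruntime is imported lazily so that
    choose_providers() can be used and tested without it installed.
    """
    import onnxruntime as ort

    providers = choose_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, providers=providers)


# On a build without the OpenVINO EP, only the CPU provider is selected.
print(choose_providers(["CPUExecutionProvider"]))
```

Calling `make_session("model.onnx")` (a placeholder path) would then return a session that runs on the OpenVINO™ EP only if the ONNX Runtime build was compiled with it.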

The latest release, ONNX Runtime EP for OpenVINO™ toolkit 2020.2, uses the newest features of the Intel® Distribution of OpenVINO™ toolkit, such as the graph transformation engine and the reshape and graph construction APIs, powered by the recently integrated EP for nGraph. Capabilities previously available through the EP for nGraph have now been merged into this version of the EP for OpenVINO™ toolkit, and the EP for nGraph will soon be deprecated. With the merge, the Intel® Distribution of OpenVINO™ toolkit now delivers improved performance and portability for deep learning through graph-level optimizations.

Building Toward the Next Generation of Deep Learning

As deep learning continues to advance and device footprints grow ever smaller, ONNX Runtime EP for OpenVINO™ toolkit 2020.2 adds a variety of features that make it easier to create leading-edge deep learning applications. Newly added features such as dynamic input shape support and sub-graph partitioning provide additional flexibility and coverage, along with a device fallback option for partially supported models. With this release, developers will also see a reduction in memory footprint of almost 40% across most of the supported models. These and other enhancements help enable a richer set of deep learning use cases.
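To make the idea of sub-graph partitioning with device fallback concrete, here is a toy sketch. It is not the EP's actual algorithm: it assumes a simplified model represented as a linear list of operator names (real ONNX graphs are directed acyclic graphs), and the `SUPPORTED_OPS` set is a made-up example rather than the real operator coverage.

```python
from itertools import groupby

# Hypothetical set of operators the accelerator backend can run; the real
# list depends on the EP version and the target device.
SUPPORTED_OPS = {"Conv", "Relu", "MaxPool", "Add"}


def partition(ops, supported=SUPPORTED_OPS):
    """Split a linear op sequence into contiguous (device, ops) segments.

    Runs of supported ops are assigned to the accelerator; runs of
    unsupported ops fall back to the default CPU path.
    """
    segments = []
    for on_accel, run in groupby(ops, key=lambda op: op in supported):
        device = "accelerator" if on_accel else "cpu_fallback"
        segments.append((device, list(run)))
    return segments


model_ops = ["Conv", "Relu", "NonMaxSuppression", "Conv", "Add"]
for device, run in partition(model_ops):
    print(device, run)
```

In this toy model, one unsupported operator in the middle splits the work into three segments, so the supported parts can still run on the accelerator instead of the whole model being rejected.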

The Dockerfiles for ONNX Runtime and OpenVINO are available on the GitHub page. Developers can continue to use prebuilt Docker container base images to integrate their application code with custom or pretrained models from the ONNX Model Zoo and deploy them on the cloud or the edge for inferencing. This capability has been validated with new and existing developer kits.

Greatly Simplified Workflow with Validated Developer Kits

Developers can also take advantage of the Intel® DevCloud for the Edge, which makes it possible to actively prototype and experiment with deep learning on a full range of Intel® hardware powered by Intel® Distribution of OpenVINO™ toolkit prior to purchasing developer kits. Intel® DevCloud for the Edge lets you upload and try your deep learning models using the Intel® Distribution of OpenVINO™ toolkit. Users can access reference implementations and pretrained models to help explore real-world workloads and hardware solutions using both Intel® Distribution of OpenVINO™ toolkit and ONNX Runtime. For Azure developers, the Intel® DevCloud for the Edge is also listed through the Azure Marketplace.

I previously shared the developer kits we have been working on with select partners; these kits are validated for the latest ONNX Runtime and OpenVINO™ toolkit 2020.2. With the full range of CPU and accelerator options, you can choose the right combination and level of compute you need to deploy AI. With these offerings from our robust ecosystem, developers get a validated bundle of hardware, software and services that allows them to prototype, test and deploy an end-to-end solution.

· From iEi. The iEi TANK AIoT Developer Kit offers commercial production-ready development with deep learning, video analysis, computer vision and AI, including uses in security and factory automation. iEi’s FLEX-BX200 offers enormous computational power to perform accurate inference and prediction in near-real time, especially in harsh environments.

· From AAEON. The Boxer-6841M UP Squared and UP Core Plus offer turnkey development on the AAEON IoT platform, which is based on Azure services and enables developers and system integrators to quickly evaluate their solutions. AAEON’s UP Squared AI Vision X Developer Kit supports computer vision and deep learning development from prototype to production. Lastly, the UP Xtreme Edge Kit is a powerful edge platform that is well suited to use cases including safety and security in public spaces and retail stores.

To help developers get started, we have released an AI vision app in the Microsoft Azure Marketplace, which allows developers to quickly train or retrain their models with Azure Machine Learning and deploy on these validated developer kits.

Download the latest version of ONNX Runtime with OpenVINO™ toolkit now

The Intel® Distribution of OpenVINO™ toolkit and ONNX Runtime provide an integrated toolset to simplify and accelerate the work of developing and deploying cloud-to-edge AI—so developers have the flexibility to choose their preferred deep learning framework and deployment hardware. To learn more about how you can streamline taking AI from cloud to edge, download the unified native installation of our EP plug-in along with your choice of framework today.

Try it today and join the conversation in our forum!

Attend our workshops

As part of Microsoft Build, experts from Intel and Microsoft will provide an overview of the EP v2 plug-in in an on-demand session available May 19th to 21st.

In addition, on May 26th, we’ll take a technical deep dive into the capabilities of the new EP plug-in in our Intel® Distribution of OpenVINO™ toolkit webinar, titled “Microsoft Azure and ONNX Runtime for Intel® Distribution of OpenVINO™ toolkit.”

Additional resources

Check out these resources to help you speed your AI solution development:

· GitHub repository: ONNX Runtime with OpenVINO EP along with Azure IoT edge dependencies (Docker versions)

· Intel® Distribution of OpenVINO™ toolkit: See what high performance deep learning makes possible

· Intel tutorials and more: Kick start your development and learn more about Intel® Distribution of OpenVINO™ toolkit

· Intel® DevCloud for the Edge: Build, test and remotely deploy AI models powered by Intel® Distribution of OpenVINO™ toolkit across Intel® architecture

· Intel® AI: In Production: Find information on developer kits and partner offers for developers, including details on delivering solutions for AI at the edge

· Intel® IoT RFP Ready Kit Solutions Playbook: Explore information for system integrators about Intel’s currently available IoT solutions

· Microsoft tutorials and more: Get help starting with ONNX Runtime

Notices and Disclaimers

FTC Optimization Notice

Intel technologies may require enabled hardware, software or service activation.

No product or component can be absolutely secure.

Your costs and results may vary.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

Mary is the Community Manager for this site. She likes to bike, and do college and career coaching for high school students in her spare time.
