Key Takeaways

- Learn how a new training add-on that’s compatible with the Intel® Distribution of OpenVINO™ toolkit optimizes deep neural networks for inference.

Overview

Deep Neural Networks (DNNs) have made a tremendous breakthrough in many domains in the past few years, bringing the accuracy of algorithms to a new level. However, deploying such models in production is not a trivial task: it requires adapting them to the target hardware and its computational methods. As a result, a separate line of work has emerged that aims to optimize DNN models for inference. Many universities and companies show strong interest in this topic, doing research and development and providing tools for model optimization. Today, almost every DL framework has its own toolset implementing the best-known optimization methods, such as 8-bit quantization.

Despite some generalization that is present in the academic community, model optimization is most efficient when done with particular hardware in mind. Each AI accelerator is unique in the features it supports, and the best performance is only possible if the model is optimized for the given hardware. As an example, in the case of the widespread quantization technique, some AI accelerators can perform the more complicated asymmetric quantization with zero overhead, which generally results in a better quality/performance combination than simple symmetric quantization.

The portfolio of powerful AI hardware at Intel continues to grow (new VPU generations, AI-specific instruction sets within recent CPUs, etc.), and to unleash the full performance of this hardware, we have produced our own model optimization pipeline that we presented in one of the previous posts. One of the components of this pipeline is optimization with fine-tuning, which is used when all other techniques fail to reach the desired accuracy and performance of DL models. This component is the Neural Network Compression Framework (NNCF), which is aligned with the Intel® Distribution of OpenVINO™ toolkit in terms of the supported optimization techniques and models. NNCF is based on the popular PyTorch framework and is open source, so anybody can use it and contribute. In this post, we briefly present its capabilities for model optimization and discuss further plans. For more details, please refer to our paper.

Features

We called our software a framework because we position it as a platform for DL optimization algorithms research and development with a unified interface and high level of automation in terms of the ability to apply supported optimization to real tasks by the end-user. We pay a lot of attention to usability aspects and simplify the usage and configuration process as much as possible. The main features of NNCF are the following:

- Automatic model transformation by the optimization method, with no need for modification on the user's side.

- Unified API for optimization methods. All compression methods are based on a common set of abstractions introduced in the framework.

- Ability to combine algorithms into pipelines. This allows applying several algorithms at the same time and getting an optimized model after one stage of fine-tuning. As an example, it is possible to optimize for sparsity and lower precision by combining two separate algorithms in the pipeline.

- HW-accelerated optimization primitives for fast model fine-tuning.

- Distributed training support. The fine-tuning can be organized on the multi-node distributed cluster.

- Uniform configuration of the optimization methods through the JSON configuration file.

- Ability to export to ONNX format, which is an informal standard for representing neural networks. Such optimized models can be converted to the OpenVINO Intermediate Representation for further inference.

Optimization methods

In this section, we briefly describe the optimization methods implemented and supported in NNCF. It is worth noting that not all of the methods are generic, i.e. applicable to models targeting general-purpose HW such as CPUs or GPUs. Some of them, e.g. sparsity, require specific HW blocks before the optimization can be exploited for efficient inference.

Quantization-Aware Training

The first and most common compression method is quantization: reducing the bit width used to represent a number, which allows more efficient integer arithmetic in hardware.

Quantization is represented by an affine mapping of real numbers to integers: the value is divided by a scale factor and a zero-point is added. We train the scale factor and the zero-point jointly with the network weights using so-called "fake" quantization operations, which NNCF automatically inserts into the model graph.
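To make the affine mapping concrete, here is a minimal sketch of a "fake" quantization step in plain Python: it quantizes a value to an integer grid and immediately maps it back to a float. The function names and the way the scale and zero-point are derived from a value range are illustrative assumptions, not NNCF's actual implementation, and the gradient handling used during training is not shown.

```python
def asymmetric_params(x_min, x_max, num_bits=8):
    """Derive a scale and zero-point so [x_min, x_max] spans the integer grid.

    Hypothetical helper: NNCF learns its quantizer parameters during training.
    """
    levels = 2 ** num_bits - 1
    scale = (x_max - x_min) / levels
    zero_point = round(-x_min / scale)
    return scale, zero_point


def fake_quantize(x, scale, zero_point, num_bits=8):
    """Quantize x to an unsigned integer grid, then map it back to a float."""
    q_min, q_max = 0, 2 ** num_bits - 1
    # Affine mapping: divide by the scale, round, add the zero-point.
    q = round(x / scale) + zero_point
    # Clamp to the representable integer range.
    q = max(q_min, min(q_max, q))
    # Dequantize so the rest of the network still sees a float.
    return (q - zero_point) * scale


scale, zero_point = asymmetric_params(-1.0, 3.0)
print(fake_quantize(0.5, scale, zero_point))   # close to 0.5, snapped to the grid
print(fake_quantize(10.0, scale, zero_point))  # clamped to the top of the range
```

Values inside the range are reproduced with an error of at most half a quantization step, while values outside it saturate at the range boundary.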

NNCF supports symmetric and asymmetric schemes for activations and weights, as well as per-channel quantization of weights, which helps to quantize even lightweight models produced by NAS, such as EfficientNet-B0.
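The benefit of per-channel quantization can be seen on a toy example. The sketch below, in plain Python, compares one symmetric scale shared by the whole weight tensor against one scale per output channel. The helper names and the choice of deriving the scale from the maximum absolute value are assumptions made for illustration only.

```python
def symmetric_scale(values, num_bits=8):
    """Scale that maps the largest magnitude onto the top signed level."""
    level_high = 2 ** (num_bits - 1) - 1
    return max(abs(v) for v in values) / level_high


def quantize_symmetric(values, scale, num_bits=8):
    """Symmetric quantize/dequantize round trip with zero-point fixed at 0."""
    level_high = 2 ** (num_bits - 1) - 1
    out = []
    for v in values:
        q = max(-level_high, min(level_high, round(v / scale)))
        out.append(q * scale)
    return out


def max_error(values, scale):
    """Largest absolute round-trip error for the given scale."""
    quantized = quantize_symmetric(values, scale)
    return max(abs(a - b) for a, b in zip(values, quantized))


# Two output channels with very different weight magnitudes.
weights = [[0.9, -1.2, 0.4], [0.01, -0.02, 0.015]]

per_tensor_scale = symmetric_scale([w for channel in weights for w in channel])
per_channel_scales = [symmetric_scale(channel) for channel in weights]

# Error on the small-magnitude channel under each scheme.
print(max_error(weights[1], per_tensor_scale))
print(max_error(weights[1], per_channel_scales[1]))
```

With a shared scale, the channel with small weights is squeezed into a handful of integer levels; with its own scale it uses the full grid, so its rounding error drops sharply.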

Though NNCF supports quantization with an arbitrary number of bits to represent weight and activation values, choosing an ultra-low bitwidth can noticeably affect the model's accuracy. A good trade-off between accuracy and performance is achieved by assigning different precisions to different layers. NNCF utilizes the HAWQ-v2 method to automatically choose a mixed-precision configuration by taking into account the sensitivity of each layer, i.e. how much lower-bit quantization of that layer decreases the model's accuracy. The most sensitive layers are kept at higher precision. The sensitivity of the i-th layer is calculated by multiplying the average Hessian trace by the L2 norm of the quantization perturbation. The sum of the sensitivities over all layers forms a metric that is used to determine the specific bit precision configuration. The configuration is found by calculating this metric for all possible bitwidth settings and selecting the median one. To reduce the exponential search space, the following restriction is used: layers with a small value of the average Hessian trace are quantized to lower bits, and vice versa. The Hessian trace is estimated with the randomized Hutchinson algorithm without explicitly forming the Hessian operator, using two backpropagation passes: the first with respect to the loss, and the second with respect to the product of the gradients and a random vector.
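The Hutchinson estimator itself is simple to sketch. In the plain-Python example below, an explicit small symmetric matrix stands in for the Hessian, and `matvec` plays the role of the Hessian-vector product that a real implementation would obtain from the two backpropagation passes. The function names, the use of random ±1 (Rademacher) vectors, and the sample counts are assumptions for illustration.

```python
import random


def hutchinson_trace(matvec, dim, num_samples=100, seed=0):
    """Estimate trace(H) as the average of v^T (H v) over random +/-1 vectors."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_samples):
        v = [rng.choice((-1.0, 1.0)) for _ in range(dim)]
        hv = matvec(v)  # only a matrix-vector product is needed, never H itself
        total += sum(vi * hvi for vi, hvi in zip(v, hv))
    return total / num_samples


def make_matvec(matrix):
    """Wrap an explicit matrix as a matvec callable (stand-in for backprop)."""
    def matvec(v):
        return [sum(a * x for a, x in zip(row, v)) for row in matrix]
    return matvec


H = [[2.0, 0.5, 0.0],
     [0.5, 3.0, 0.1],
     [0.0, 0.1, 1.0]]

estimate = hutchinson_trace(make_matvec(H), dim=3, num_samples=2000)
print(estimate)  # approaches the true trace 2 + 3 + 1 as samples grow
```

The estimator only ever calls `matvec`, which is why the Hessian never has to be materialized for a real network.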

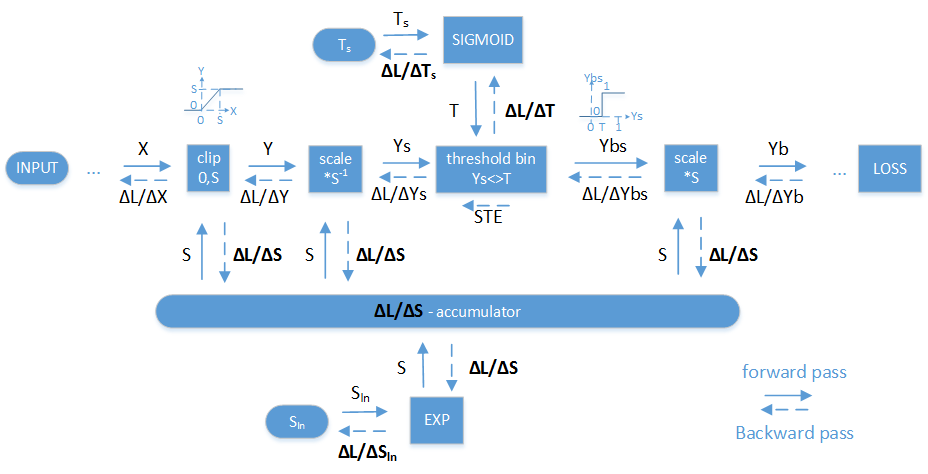

Binarization

The binarization method takes bit reduction to its limit, using one bit to represent weights and activations. This allows replacing the original 32-bit floating-point multiplication with a 1-bit logical XNOR operation and significantly speeds up neural networks. To binarize weights, the XNOR and DoReFa methods are used. To binarize activations, special binarization layers are inserted before each binarized convolution. The layer maps continuous floating-point activations X into binary activations Yb, which can take only two values, {0,S} (or {-S,S}), using a per-channel threshold vector T. The parameters T and S are tuned using a special gradient calculation (see Fig. 1).
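As a rough illustration of the forward pass of such a layer, the plain-Python sketch below maps each activation to 0 or S depending on its channel's threshold. The exact comparison rule (`value > T`) and the function name are assumptions, and the special gradient calculation used to tune T and S is not shown.

```python
def binarize_activations(x, thresholds, scale):
    """Map activations to {0, scale} using one threshold per channel.

    x is a list of channels, each a list of floating-point activations.
    The comparison rule is an assumption made for this sketch.
    """
    return [
        [scale if value > t else 0.0 for value in channel]
        for channel, t in zip(x, thresholds)
    ]


activations = [[0.2, 0.7, -0.1],   # channel 0
               [0.2, 0.7, -0.1]]   # channel 1, same values, different threshold
print(binarize_activations(activations, thresholds=[0.5, 0.0], scale=1.5))
```

The same input values binarize differently in the two channels, which is the effect of making the threshold per-channel.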

This activation binarization approach is based on the PACT method, which calculates gradients for the scale parameter S based on the clipping error. In addition, the scheme above includes three important additions:

- Gradients for S are also calculated for activations inside the [0,S] range, which takes into account not only the clipping error but also the binarization error when the activation value is inside the clipping range.

- Per-channel threshold T is used and tuned to minimize binarization error.

- S and T parameters are not optimized directly. Instead, hidden Ts and Sln parameters are used to automatically normalize optimization steps. (see picture above)

Our observations show that activation binarization causes a larger accuracy drop than weight binarization. At the same time, binary weights are harder to tune due to their highly discrete nature. So, after the activation binarization layers are inserted into the original model, the weights are first tuned in the original floating-point format. Once optimal activation binarization scales and thresholds are found and the original convolutional weights are tuned for the new binary activations, the convolutional weights are also binarized using the XNOR [1] or DoReFa [2] approach, and the fully binary (activations + weights) network is tuned further.
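For intuition about the weight binarization step, here is a minimal plain-Python sketch in the style of XNOR-Net: each filter is replaced by the sign of its weights multiplied by the filter's mean absolute value. The function name is hypothetical, mapping a zero weight to the positive sign is an assumption, and NNCF's own implementation may differ in details.

```python
def xnor_binarize(filters):
    """Replace each filter by sign(w) * mean(|w|), computed per filter."""
    binarized = []
    for weights in filters:
        # One scaling factor per filter: the mean absolute weight.
        alpha = sum(abs(w) for w in weights) / len(weights)
        # Assumption: a weight of exactly zero is treated as positive.
        binarized.append([alpha if w >= 0 else -alpha for w in weights])
    return binarized


filters = [[0.5, -1.5, 1.0],
           [-0.2, 0.2, 0.0]]
print(xnor_binarize(filters))
```

After this step every filter holds only two distinct values, so its weights can be stored as one bit each plus a single floating-point scale.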

Sparsity

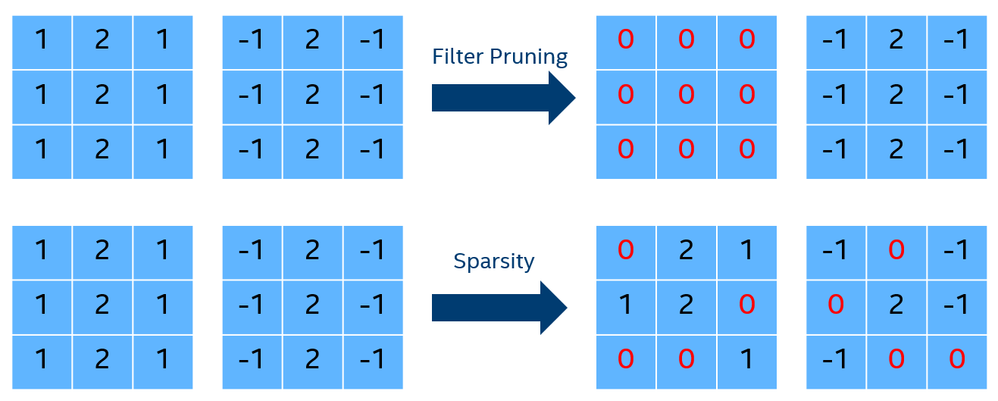

One more approach to compressing CNNs is sparsification. There are two types of sparsification methods:

- Structured sparsification (also known as pruning). As a result of structured sparsity, we get a new neural network that is smaller than the original network (fewer channels, filters, etc.).

- Unstructured sparsity. As a result of unstructured sparsity, we get a new network of the same size as the original one, but the weight tensors are now sparse. Using unstructured sparsification, we can remove more weights than via pruning. In this section, we will discuss only unstructured sparsity methods.

The main idea of all sparsification algorithms is based on the fact that many modern DNNs are over-parameterized, meaning that a DNN contains more weights than it needs to solve the problem (or more than we can effectively train). Thus, the goal of any sparsification algorithm is to find the subset of weights that contributes most to accuracy and remove all other weights. The benefits of sparsity algorithms are as follows:

- Smaller physical size of the weights, by using sparse data representation methods.

- Improved inference time, by using an implementation of sparse arithmetic (software or hardware).

Currently, there are two main approaches to make sparse networks:

- Magnitude-based. This approach assumes that the contribution of a weight is proportional to its absolute value. It means that we can remove weights with a small absolute value (based on some threshold) to make the network sparse. The main advantage of this method is its fast convergence because, at any moment, we can choose a threshold that provides the required sparsification level. However, this usually requires aggressive weight decay regularization, because the weights should be made smaller before they are removed, and that can hurt the final accuracy.

- Regularization-based. These methods add an extra term to the loss function that drives the network toward the required sparsity level. It means that the network converges to a sparse sub-network that has the desired sparsity rate and a minimal accuracy drop. In practice, this term does not affect the weights directly. Instead, we add an extra parameter for each weight of the network that represents the "importance" of the corresponding weight, and we optimize it. The main advantage of this type of method is potentially better accuracy, because we don't corrupt the weights. However, since we have to optimize extra parameters (which are usually trained in a Bayesian-like fashion), regularization-based methods need many iterations to converge.
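The magnitude-based approach above can be sketched in a few lines of plain Python: for a requested sparsity level, the smallest-magnitude weights are masked out. The function name and the flat list of weights are illustrative assumptions, not NNCF's API.

```python
def magnitude_mask(weights, sparsity_level):
    """Return a 0/1 mask that zeroes the smallest-magnitude weights.

    sparsity_level is the fraction of weights to remove, between 0 and 1.
    """
    num_to_remove = int(len(weights) * sparsity_level)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    mask = [1] * len(weights)
    for i in order[:num_to_remove]:
        mask[i] = 0
    return mask


weights = [0.5, -0.05, 0.3, 0.01, -0.8, 0.02]
mask = magnitude_mask(weights, sparsity_level=0.5)
sparse_weights = [w * m for w, m in zip(weights, mask)]
print(mask)
print(sparse_weights)
```

Because the threshold follows directly from the requested level, any sparsity level can be hit at any moment during training, which is the fast-convergence property described above.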

The NNCF framework includes the implementation of both approaches. As a general recommendation for use, you can apply the following heuristics:

- In the case of a small training dataset for fast experimentation, it is recommended to use the magnitude-based method.

- If the training dataset is large enough or the accuracy drop is critical, you can use a regularization-based approach.

- Regardless of the sparsity method, it is recommended to increase the sparsity ratio smoothly to get a better accuracy/compression trade-off.
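One way to increase the sparsity ratio smoothly is a ramp schedule that moves from an initial level to the target level over a number of epochs. The plain-Python sketch below uses a polynomial ramp; the function name, its parameters, and the shape of the curve are all hypothetical choices for illustration rather than the schedules shipped with NNCF.

```python
def sparsity_at_epoch(epoch, target, ramp_epochs, power=2.0, initial=0.0):
    """Sparsity level for a given epoch on a polynomial ramp.

    The level rises from `initial` to `target` over `ramp_epochs` epochs,
    quickly at first and more slowly as it approaches the target.
    """
    if epoch >= ramp_epochs:
        return target
    progress = epoch / ramp_epochs
    return target - (target - initial) * (1.0 - progress) ** power


for epoch in range(0, 12, 2):
    print(epoch, round(sparsity_at_epoch(epoch, target=0.9, ramp_epochs=10), 3))
```

The level returned for the current epoch can then be used as the fraction of weights to remove at that point in fine-tuning.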

Filter pruning

NNCF also supports the aforementioned structured sparsity methods such as filter pruning. The important advantage of this method is that it is generic and does not require special HW instructions. Currently, two filter pruning techniques are supported:

- Simple magnitude-based filter pruning. The method is close to the one described in the following paper and can also be used with a progressive pruning ratio that increases during the pruning process.

- Geometric median pruning. A more sophisticated but still simple method that performs slightly better than the former approach.

In general, filter pruning methods achieve lower compression ratios than unstructured sparsity methods at the same accuracy drop. It is also worth noting that long fine-tuning is required to restore the accuracy.
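A minimal sketch of magnitude-based filter pruning in plain Python: filters are ranked by a norm of their weights, and the lowest-ranked ones are selected for removal. Using the L1 norm here is an assumption made for illustration, as is the function name; the geometric median criterion is not shown.

```python
def filters_to_prune(filters, pruning_ratio):
    """Return sorted indices of the filters with the smallest L1 norms.

    filters is a list of flattened filters; pruning_ratio is the fraction
    of filters to remove, between 0 and 1.
    """
    norms = [sum(abs(w) for w in f) for f in filters]
    num_to_prune = int(len(filters) * pruning_ratio)
    # Indices ordered from the weakest filter to the strongest.
    order = sorted(range(len(filters)), key=lambda i: norms[i])
    return sorted(order[:num_to_prune])


filters = [[0.5, -0.5],
           [0.01, 0.02],
           [1.0, 2.0],
           [0.1, -0.1]]
print(filters_to_prune(filters, pruning_ratio=0.5))
```

Unlike the unstructured mask, removing whole filters shrinks the layer itself, which is why the result runs faster on general-purpose hardware.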

Usage examples

NNCF lets you apply the implemented optimization methods in two different ways: by means of the supported training samples or through integration into custom training code.

Using NNCF within your training code

Let us describe the steps required to modify an existing PyTorch training pipeline to integrate NNCF into it. The use case implies that the user already has a training pipeline that reproduces model training in the floating-point precision and a pre-trained model snapshot. The objective of NNCF is to prepare this model for accelerated inference by simulating the compression at train time.

Below are the steps needed to integrate NNCF into an existing PyTorch project:

Step 1: Create an NNCF configuration file.

A JSON configuration file is used for an easier setup of the compression parameters to be applied to your model. Reference configuration files can be found within example training scripts that are packaged with NNCF.
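As a rough idea of what such a file contains, the sketch below builds a small configuration as a Python dictionary and serializes it to JSON text. The key names and algorithm identifiers shown are assumptions based on typical NNCF configuration files and should be checked against the reference configurations packaged with your NNCF version.

```python
import json

# Assumed layout: an input shape description plus a list of compression
# algorithms to combine in one fine-tuning stage. Verify key names and
# algorithm identifiers against the reference configs shipped with NNCF.
nncf_config_dict = {
    "input_info": {"sample_size": [1, 3, 224, 224]},
    "compression": [
        {"algorithm": "magnitude_sparsity"},
        {"algorithm": "quantization"},
    ],
}

config_text = json.dumps(nncf_config_dict, indent=4)
print(config_text)  # save this text as the JSON file used in the next step
```

Listing two algorithms in one configuration corresponds to the pipeline feature described earlier, where sparsity and lower precision are applied in a single fine-tuning stage.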

Step 2: Modify the training pipeline code.

NNCF enables compression-aware training by being integrated into regular training pipelines. The framework is designed so that the modifications to the original training code are minor.

- The following imports are required for NNCF:

import nncf

from nncf import Config, create_compressed_model, load_state

- Load the NNCF JSON configuration file that you prepared during Step 1:

nncf_config = Config.from_json("/path/to/nncf_config.json")

- Right after the model instance is created in the training pipeline and the weights for compression-aware training to start from are loaded, wrap the model via the following call:

compression_ctrl, model = create_compressed_model(model, nncf_config)

where compression_ctrl is a "controller" object that can be used during compressed model training to adjust compression algorithm parameters or gather statistics.

- In the case of multi-GPU training, wrap your model using the DataParallel or DistributedDataParallel PyTorch classes as usual. In the case of the distributed setting, call the compression_ctrl.distributed() method after that as well.

- Call the compression_ctrl.initialize(data_loader) method before the start of your training loop to initialize model parameters related to its compression (e.g. parameters of FakeQuantize layers) by feeding it some data (via the data_loader for the training dataset).

- The following changes have to be applied to the training loop code: after model inference is done on the current training iteration, the compression loss should be added (using the + operator) to the common loss. For instance, for the cross-entropy loss in a classification task:

loss = cross_entropy_loss + compression_ctrl.loss()

- Call the compression algorithm scheduler step() after each training iteration: compression_ctrl.scheduler.step()

- Call the compression algorithm scheduler epoch_step() after each training epoch: compression_ctrl.scheduler.epoch_step()
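To show where these calls sit relative to each other, the sketch below runs the loop structure with stand-in objects in plain Python. `StubController` and `StubScheduler` are hypothetical placeholders that only mimic the method names mentioned above (`loss()`, `scheduler.step()`, `scheduler.epoch_step()`); in a real pipeline, the controller comes from `create_compressed_model` and the losses are PyTorch tensors.

```python
class StubScheduler:
    """Hypothetical stand-in that counts scheduler calls."""

    def __init__(self):
        self.steps = 0
        self.epochs = 0

    def step(self):
        self.steps += 1

    def epoch_step(self):
        self.epochs += 1


class StubController:
    """Hypothetical stand-in for the compression controller."""

    def __init__(self):
        self.scheduler = StubScheduler()

    def loss(self):
        return 0.1  # placeholder compression loss


def train(compression_ctrl, batches_per_epoch, num_epochs, task_loss_fn):
    losses = []
    for _ in range(num_epochs):
        for batch in range(batches_per_epoch):
            task_loss = task_loss_fn(batch)
            # Add the compression loss to the regular task loss.
            loss = task_loss + compression_ctrl.loss()
            losses.append(loss)
            # The backward pass and optimizer step would go here.
            compression_ctrl.scheduler.step()        # after each iteration
        compression_ctrl.scheduler.epoch_step()      # after each epoch
    return losses


ctrl = StubController()
history = train(ctrl, batches_per_epoch=3, num_epochs=2, task_loss_fn=lambda b: 1.0)
print(ctrl.scheduler.steps, ctrl.scheduler.epochs)
```

The only changes relative to an ordinary training loop are the extra loss term and the two scheduler calls.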

Step 3: Run the training pipeline.

At this point, NNCF is fully integrated into your training pipeline. You can run it as usual and monitor your original model's metrics and/or compression algorithm metrics and balance model metrics quality vs. the level of compression.

Training samples

Training samples provided with NNCF are separated into three general categories according to the computer vision task type considered within each sample (image classification, object detection, and semantic segmentation samples, respectively).

The image classification sample contains training pipelines on standard classification benchmark datasets (ImageNet, CIFAR-100 & CIFAR-10) using the zoo of classification nets from the torchvision package. Configuration files provided within the sample include examples of INT8 quantization, sparsification, combined quantization+sparsification and binarization for some common classification nets. The sample training pipeline supports multi-GPU training and allows exporting the compressed models into ONNX files that are supported by the OpenVINO toolkit.

The object detection sample contains an analogous training pipeline for the Pascal VOC benchmark dataset and SSD300 and SSD512 models (with VGG backbones).

The semantic segmentation sample contains the training pipelines for UNet and ICNet models on several benchmark datasets for segmentation tasks (CamVid, Cityscapes, Mapillary Vistas, Pascal VOC).

Integration into third party code

If you wish to use a more complex training pipeline than those present in the training samples, or to apply NNCF algorithms within larger training code or model collections, you can install NNCF, import it as a regular Python package into the corresponding code base, and use the full extent of neural network compression with only minor adjustments to the rest of the training pipeline.

NNCF ships with code patches to the well-known mmdetection and transformers GitHub repositories which, when applied, enable model compression during training without disrupting the regular training process in those repositories. These patches illustrate the general process of integrating NNCF into third-party model training code and also allow the user to instantly enable NNCF-based compression fine-tuning for the state-of-the-art object detection and NLP models found in mmdetection and transformers with <1% accuracy drop. The list of patches will be extended with other prominent repositories, but the patches mostly serve to showcase results and to guide the user, by example, on integrating NNCF into any PyTorch-based training pipeline.

Results

You can find most of the official results that we claim for NNCF under the corresponding links below:

- The NNCF repository, where more results are listed under the training example links.

- Our paper.

We also provide ready-to-use configuration files that can be used to reproduce the results.

Conclusion

We introduced NNCF, a new framework aimed at DL model optimization. One of its important advantages is automatic model transformation when applying optimization methods. It does not require expertise in model optimization and greatly simplifies the user flow. The framework is compatible with the Intel® Distribution of OpenVINO™ toolkit and helps to achieve a substantial inference speedup using the latest OpenVINO low-precision runtime. Along with the Post-training Optimization Toolkit, NNCF presents a holistic approach to DL model acceleration. We are constantly improving our framework and bringing new state-of-the-art features. Please follow our progress on GitHub.

If you have any ideas on how we can improve the product, we welcome contributions to the open-sourced OpenVINO™ toolkit. Finally, join the conversation to discuss all things Deep Learning and OpenVINO™ toolkit in our community forum.

Notices and Disclaimers

Intel technologies may require enabled hardware, software or service activation.

No product or component can be absolutely secure.

Your costs and results may vary.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

Mary is the Community Manager for this site. She likes to bike, and do college and career coaching for high school students in her spare time.

