Optimizing Dental Insurance and Value-Based Payments with Intel® OpenVINO™

Authors: Ravi Panchumarthy, Deb Bharadwaj, Prashant Shah

Guest Authors from Retrace.ai: Vasant Kearney, Hamid Hekmatian, Wenxiang Deng

Introduction

Not everyone hates the dentist! With the help of Retrace.ai and Intel’s OpenVINO™, the oral healthcare industry can use artificial intelligence to drive precision dentistry. The solution aims to make dentistry better, faster, and more cost-effective by fitting into existing clinical workflows and everyday dental practice. The Retrace AI dental ecosystem is trained with input from thousands of expert dentists to deliver concrete insights that take the guesswork and subjectivity out of dental decisions. It offers cutting-edge dental computer vision and electronic health record solutions, and it delivers dental analytics through a web-based interface that integrates with the customer's existing practice management system (https://retrace.ai/).

At present, insurance companies take a long time to process payouts and are not always transparent about their adjudication process. Because of this lack of transparency, patients are often hit with surprise bills, which hurts patient satisfaction and disrupts both providers and payers. Retrace uses AI to make dental claims processing more predictable: through automation, it can tell providers whether a claim is likely to be covered and whether any documentation is missing.

Checking claim packet completeness and clinical necessity requires a lot of computer vision processing, which can be costly and time-consuming. Insurance claims need to be packaged appropriately to meet the doctor’s needs, and the package is often assembled by non-medical staff who must analyze a large amount of data for review by the insurance company’s administrative professionals. Retrace builds deep learning solutions that help ensure claim packets are complete. Retrace uses Intel’s OpenVINO™ to optimize and deploy its deep learning inference, which in turn supports reduced fees and faster inference, so that Retrace can pass the lowest possible costs back to its partners. Ultimately, this low-cost solution benefits patients.

The Challenge

Retrace uses Amazon Web Services (AWS) infrastructure to develop and deploy its AI applications. Its architecture follows the container-first paradigm and uses the microservice-based design patterns that have become the standard for modern application development and deployment. This allows its developers and DevOps teams to improve the scalability, efficiency, and portability of their applications. However, compute costs, inference speed, and ML model deployment flexibility can all make it challenging to achieve a positive ROI.

GPU instances place an inherent limit on how well AI workloads can exploit the elastic nature of compute in the public cloud: the workloads cannot be scaled appropriately with demand. In the cloud, efficiency is determined by how well the shared infrastructure is utilized. Although Kubernetes helps maximize resource usage, by default it assigns each GPU to a single container, so multiple containers cannot share one GPU at the same time. As a result, serving several AI models from a single GPU instance is not practical, and because GPU memory cannot be shared among the models either, more instances must be deployed, which increases costs.

The Solution

Retrace uses Intel® Xeon® processors for AI inference, which lets it scale compute resources up or down seamlessly with demand. Combined with Intel’s OpenVINO™ toolkit, this allows Retrace.ai to scale its AI workloads with automatic load balancing while maintaining the same level of accuracy for its deep learning models. OpenVINO™ accelerates a variety of AI applications in production, such as computer vision, speech recognition, natural language processing, and recommendation systems. The latest Intel processors include built-in AI acceleration, which tools like OpenVINO™ can exploit to deliver the inference performance an application needs at lower cloud cost.

OpenVINO™ also allows Retrace to deploy multiple AI containers on a single Intel-based instance, so compute is shared across multiple AI models and sub-processes. This uses hardware far more efficiently than sequestering compute resources per model. For example, Retrace might run 10 AI models on a single c5.4xlarge instance instead of spreading them across 10 separate instances, which can have profound cost and performance implications.
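To make the consolidation arithmetic concrete, here is a minimal Python sketch that packs model containers onto shared instances with a first-fit-decreasing heuristic. It assumes each OpenVINO™ model container has a fixed CPU and memory request; the per-model numbers (1.5 vCPUs, 2.5 GiB) and the names `ModelContainer`, `Node`, and `pack` are hypothetical, and only the c5.4xlarge size (16 vCPUs, 32 GiB) comes from AWS. The source does not describe how Retrace places its containers; in a Kubernetes cluster this placement is done by the scheduler rather than by application code.

```python
from dataclasses import dataclass, field

# Capacity assumed for one c5.4xlarge instance (16 vCPUs, 32 GiB RAM).
# Real Kubernetes nodes reserve part of this for the OS and kubelet.
NODE_VCPU = 16.0
NODE_MEM_GIB = 32.0


@dataclass
class ModelContainer:
    name: str
    vcpu: float     # hypothetical CPU request for one model container
    mem_gib: float  # hypothetical memory request for one model container


@dataclass
class Node:
    used_vcpu: float = 0.0
    used_mem_gib: float = 0.0
    models: list = field(default_factory=list)

    def fits(self, c: ModelContainer) -> bool:
        # A container fits only if both CPU and memory stay within capacity.
        return (self.used_vcpu + c.vcpu <= NODE_VCPU
                and self.used_mem_gib + c.mem_gib <= NODE_MEM_GIB)

    def add(self, c: ModelContainer) -> None:
        self.used_vcpu += c.vcpu
        self.used_mem_gib += c.mem_gib
        self.models.append(c.name)


def pack(containers: list) -> list:
    """First-fit decreasing: place the largest containers first, each on the
    first node with room, opening a new node only when none fits."""
    nodes = []
    for c in sorted(containers, key=lambda x: (x.mem_gib, x.vcpu), reverse=True):
        target = next((n for n in nodes if n.fits(c)), None)
        if target is None:
            target = Node()
            nodes.append(target)
        target.add(c)
    return nodes


if __name__ == "__main__":
    models = [ModelContainer(f"model-{i}", vcpu=1.5, mem_gib=2.5) for i in range(10)]
    shared = pack(models)
    print(f"dedicated: {len(models)} instances, shared: {len(shared)} instance(s)")
```

With these assumed requests the script reports 10 dedicated instances versus 1 shared instance; doubling each model's footprint to 2 vCPUs and 4 GiB would need two shared instances. First-fit decreasing is a simple approximation for bin packing, not an optimal solver.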

Retrace also uses Kubernetes with OpenVINO™ to scale its infrastructure up and down dynamically. Running OpenVINO™ on Kubernetes in AWS made provisioning the right amount of Intel®-based compute for the AI workloads more cost-effective and easier to manage. Clinics tend to have high AI module utilization during normal business hours, with sporadic spikes in demand throughout the day. For example, at 1 p.m. on a Wednesday, Retrace might have 750 Intel®-based instances running 7,500 OpenVINO™ models, but it might scale down to only 3 instances in the evening, when no new patients are being seen.
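The source does not say which Kubernetes mechanism drives Retrace's scaling. Assuming a HorizontalPodAutoscaler on average CPU utilization, the controller's documented rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when that ratio is within a tolerance (0.1 by default). The Python sketch below reproduces that rule; the function name `desired_replicas`, its default bounds, and the sample utilization numbers are hypothetical. Note that this scales pods; adding or removing the underlying EC2 instances is typically handled separately by a cluster autoscaler.

```python
def desired_replicas(current_replicas: int, current_util: int, target_util: int,
                     min_replicas: int = 1, max_replicas: int = 1000,
                     tolerance: float = 0.1) -> int:
    """Replica count per the Kubernetes HPA scaling rule (simplified sketch).

    current_util and target_util are average CPU utilization percentages.
    """
    ratio = current_util / target_util
    # Inside the tolerance band, the controller leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # ceil(current_replicas * current_util / target_util) in exact integer math.
    desired = -(-current_replicas * current_util // target_util)
    # Clamp to the configured bounds (minReplicas / maxReplicas).
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Hypothetical daytime spike: load rises above target, so scale out.
    print(desired_replicas(10, 90, 60))                 # 15
    # Hypothetical evening lull: load collapses, floor at min_replicas.
    print(desired_replicas(25, 2, 60, min_replicas=3))  # 3
```

For instance, `desired_replicas(10, 63, 60)` keeps the count at 10 because 63/60 is within tolerance. The real controller also applies a scale-down stabilization window (five minutes by default) and handles not-ready pods, which this sketch omits.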

Combining OpenVINO™ with Kubernetes lets Retrace ramp up infrastructure to deliver highly responsive user experiences while keeping internal compute costs under control.

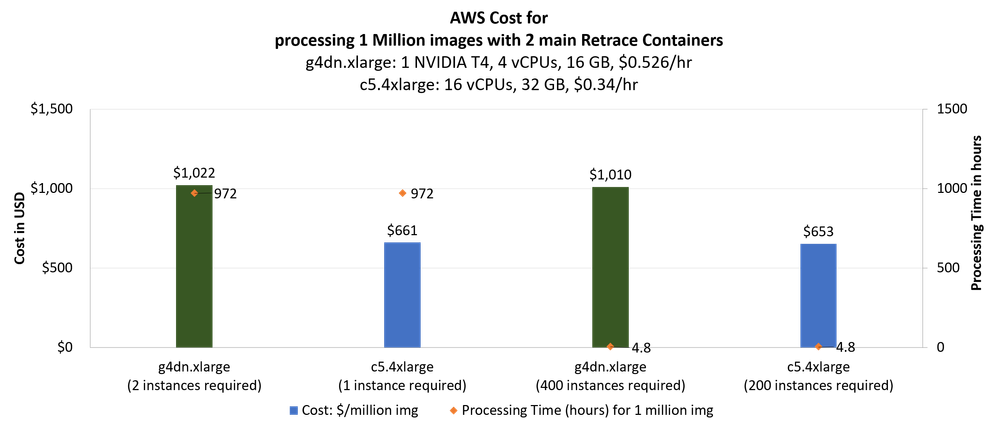

Retrace predicts thousands of anatomical landmarks per image and uses multi-task inference to reduce the number of models to about 20 unique deep learning sub-modules, depending on the task. Figure 1 compares the performance of a microservices API architecture on AWS GPU instances and on AWS CPU instances with OpenVINO™.
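Retrace does not publish its model architecture, but a common way to do multi-task inference is hard parameter sharing: one shared backbone computes features once, and several small task-specific heads read from those features, so one deployed model serves several tasks. The Python sketch below illustrates only that structure; the class name `MultiTaskModel`, the toy backbone, and the task names are hypothetical stand-ins for real networks.

```python
from typing import Callable, Dict, List

Features = List[float]


class MultiTaskModel:
    """Hard parameter sharing: one backbone, many task-specific heads."""

    def __init__(self, backbone: Callable[[List[float]], Features],
                 heads: Dict[str, Callable[[Features], float]]):
        self.backbone = backbone
        self.heads = heads
        self.backbone_calls = 0  # counts the expensive shared passes

    def predict(self, image: List[float]) -> Dict[str, float]:
        # Run the shared (expensive) feature extractor once per image...
        self.backbone_calls += 1
        features = self.backbone(image)
        # ...then every lightweight head reuses the same features.
        return {task: head(features) for task, head in self.heads.items()}


if __name__ == "__main__":
    # Toy stand-ins for real networks; task names are hypothetical.
    model = MultiTaskModel(
        backbone=lambda px: [sum(px), max(px)],
        heads={
            "task_a": lambda f: f[0],
            "task_b": lambda f: f[1],
            "task_c": lambda f: f[0] - f[1],
        },
    )
    print(model.predict([1.0, 2.0, 3.0]))  # {'task_a': 6.0, 'task_b': 3.0, 'task_c': 3.0}
    print(model.backbone_calls)            # 1
```

Because the backbone usually dominates inference cost, adding a head is typically much cheaper than deploying another full model, which is how grouping tasks can shrink the number of models that need to be served.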

Conclusion

The combination of AWS cloud infrastructure, Retrace’s cutting-edge dental AI, and Intel’s hardware and software is driving the spread of ever more intelligent and robust medical AI solutions.

About OpenVINO™

The Intel® Distribution of OpenVINO™ toolkit is an open-source toolkit for high-performance deep learning inference, delivering ultra-fast, accurate real-world results on Intel® hardware from edge to cloud. It simplifies the path to inference with a streamlined development workflow: developers can write an application or algorithm once and deploy it across Intel® architecture, including CPU, iGPU, Movidius VPU, and GNA.

Resources:

• Retrace: https://retrace.ai/media-and-news/

• Intel instances in AWS: https://aws.amazon.com/intel/

• Get started with OpenVINO™: https://docs.openvinotoolkit.org/latest/index.html

• Intel's AI and Deep Learning Solutions: https://www.intel.com/content/www/us/en/artificial-intelligence/overview.html

Notices & Disclaimers

Performance varies by use, configuration, and other factors. Learn more at www.Intel.com/PerformanceIndex

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure.

Intel does not control or audit third-party data. You should consult other sources to evaluate accuracy.

Your costs and results may vary.

Intel technologies may require enabled hardware, software or service activation.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.
