Published March 23rd, 2021

Gadi Singer is Vice President and Director of Emergent AI Research at Intel Labs leading the development of the third wave of AI capabilities.

Class 2 - Semi-Structured Information Repository

Second in a series on the choices for capturing information and using knowledge in AI systems.

Information-Centric Classification and Class 1 recap

The previous blog in this series introduced an information-centric classification of AI systems as a valuable view that is complementary to processing-based classifications such as Henry Kautz’s taxonomy for neural symbolic computing. It also previewed a classification that emphasizes the high-level architectural choice related to information in the AI system. The information-centric classification proposed here includes three key classes of AI systems:

- Class 1 - Fully Encapsulated Information

- Class 2 - Semi-Structured Adjacent Information (in retrieval-based systems)

- Class 3 - Deeply Structured Knowledge (in retrieval-based systems)

The first class in this classification, ‘Fully Encapsulated Information’, was detailed in the previous blog of this series. Systems in this class incorporate all information required for AI tasks in the weights and model parameters without leveraging any additional adjunct sources of information. Apart from the limited information contained in the input to the model (for example, a particular image or text query), all information is encoded in the neural network’s parametric memory. Petroni et al. lay out a formal analysis of systems with fully encapsulated information. One highly innovative use of a language model (LM) as an information source can be found in Dynamic Neuro-Symbolic Knowledge Graph Construction for Zero-shot Commonsense Question Answering. In this work, a transformer-based LM is used to dynamically generate knowledge graphs for commonsense reasoning. All relevant information is extracted from the LM at inference time based on the question posed to the model. In some contexts, language models are also referred to as Pretrained Language Models (PLMs), emphasizing that they are first pre-trained over a large text corpus and then fine-tuned for a downstream task. Transformer-based models like BERT, GPT, and T5 are great examples of PLMs.

Rise of Retrieval-based AI System Architectures

Despite the prevalence of Class 1 systems, the inherent scaling inefficiencies of this approach are giving rise to architectural paradigms based on retrieval. Simply put, if the architecture relies on including all relevant information in the model parameters, the model size will grow as additional information is added. Whether the growth is linear in the amount of information or follows a somewhat different relationship, it has been observed that a model which incorporates more information will be materially larger. The authors of GPT-3 show, for example, how the zero-shot accuracy of the model on the TriviaQA benchmark rose from 5% for a model with 100M parameters to 63% for a model with 175B parameters.

Systems dubbed “retrieval-based” are rapidly emerging as a key class of AI solutions that extract information from an external source at runtime while performing their tasks – for example, answering questions in QA systems. In retrieval-augmented (or retrieval-based) systems, a large portion of the information utilized by the system is stored outside the parametric model and is accessed during test time by the neural network. The term ‘injection’ has also been used in the literature to describe this mechanism: the taxonomy described in Combining pre-trained language models and structured knowledge uses the term ‘knowledge injection’ to capture what this information-based classification will refer to as information- or knowledge-retrieval.

While retrieval-augmented neural network systems have existed for some years, they have seen major growth in interest and popularity starting circa 2019. The current implementations of such systems primarily utilize very large information bases such as Wikipedia, as seen in leaderboards and recent competitions – for example, all winning solutions of Google’s EfficientQA challenge announced at NeurIPS 2020 were retrieval-augmented systems accessing a knowledge structure that is auxiliary to the parametric memory. The Knowledge Representation & Reasoning Meets Machine Learning (KR2ML) workshop (initiated at NeurIPS 2019 with a successful second installment at NeurIPS 2020) is another indication of the rising role of retrieval-based systems that extract information from an adjacent information source.

Class 2: Semi-structured Information Repository

Current (2021) retrieval-augmented systems are architected to extract needed information from a large corpus of text, tables, or shallow graphs. For applications relying on broad facts and an information base such as question answering tasks, the choice of information source is in many cases a corpus of plaintext documents such as Wikipedia articles. These AI systems have a language model as part of the neural network (for example, BERT or similar transformer-based LM) which encapsulates some of the information needed for inference, while a significant share of the information is retrieved at execution time.

A good illustration of such systems is Retrieval-Augmented Generation (RAG) for Knowledge-Intensive NLP Tasks. As is typical with retrieval-augmented systems, the RAG architecture utilizes two sets of information – a pre-trained parametric memory and an adjacent information source. The parametric memory incorporates a pre-trained seq2seq model with all the linguistic information embedded in its language model. The model also includes a non-parametric memory with a dense vector index of Wikipedia, accessed with a pre-trained neural retriever. When performing language generation tasks, RAG combines information embedded in parametric memory with retrieved information from the indexed text corpus (Wikipedia).

Class 2 systems with semi-structured information allow for major increases in scale and volume of information as well as more accurate manipulation of specific sequences of information. In particular, such retrieval systems can retrieve factual data, verbatim sentences or entire sections of the text source. This is evidenced by the fact that top entries in relevant leaderboards such as EfficientQA are retrieval-based. An indexed sentence or paragraph can be provided as a full answer or used for a more tailored output.

The term “semi-structured information” is used to describe data that has some explicit organizational pattern such as sentences in a plaintext document, entries in a table, or nodes and edges in a graph. While it provides structure for organization and access of information, it does not contain much metadata, models information or advanced knowledge constructs (such as causality or inheritance).
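One way to picture how a RAG-style system combines retrieved passages with its parametric generator is as a weighted sum: each retrieved passage gets a retrieval probability, the generator produces an answer distribution conditioned on that passage, and the results are marginalized over the passages. The sketch below is only an illustration under that assumption. The function names, the scores, and the per-passage answer probabilities are all hypothetical stand-ins for a real retriever and seq2seq model.

```python
import math


def softmax(scores):
    """Turn arbitrary real-valued scores into a probability distribution."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def marginalize_over_passages(retrieval_scores, answer_probs_per_passage):
    """Weight each passage's answer distribution by its retrieval probability.

    retrieval_scores: one relevance score per retrieved passage (toy values).
    answer_probs_per_passage: per passage, a dict answer -> p(answer | query, passage).
    """
    passage_probs = softmax(retrieval_scores)
    combined = {}
    for p_z, answer_probs in zip(passage_probs, answer_probs_per_passage):
        for answer, p_y in answer_probs.items():
            combined[answer] = combined.get(answer, 0.0) + p_z * p_y
    return combined


# Hypothetical numbers standing in for a retriever and a generator.
scores = [2.0, 1.0, 0.0]
per_passage = [
    {"Paris": 0.9, "Lyon": 0.1},
    {"Paris": 0.6, "Lyon": 0.4},
    {"Paris": 0.2, "Lyon": 0.8},
]
combined = marginalize_over_passages(scores, per_passage)
best_answer = max(combined, key=combined.get)
print(best_answer, combined)
```

Because the passage weights are explicit, the same numbers that produce the answer also indicate which retrieved passages contributed most to it.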

Explainability is another advantage of retrieval-augmented architectures such as RAG. By providing the user with the external knowledge that was used in determining a given result, these systems substantially improve the provenance and interpretability of the results over fully encapsulated information (Class 1) systems like GPT-3. Improved provenance and interpretability are demoed by Hugging Face, where a user can obtain an example-level contribution of the top-k Wikipedia passages retrieved to answer a given query.

Another excellent implementation of a Class 2 retrieval-based system is the kNN-LM model created by a joint team from Stanford University and Facebook AI Research. In this system, information can be drawn from any text collection, including the original LM training data. The interaction between the neural network and the information sources is mediated through an index - a datastore with representations for each entry in the training dataset and its encoding. When information is being sought, the system identifies the most likely candidates by finding the nearest neighbors of the query’s embedding in the datastore.
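The nearest-neighbor lookup can be sketched in a few lines. The kNN-LM paper describes turning the retrieved neighbors into a distribution over next tokens and interpolating it with the LM's own distribution. The sketch below follows that idea in simplified form, and everything concrete in it is an assumption for illustration: the two-dimensional hand-written vectors, the squared-distance weighting, and the mixing weight `lam`.

```python
import math
from collections import defaultdict


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def knn_next_token_probs(query, datastore, k=2):
    """Distribution over next tokens from the k nearest datastore entries.

    datastore: list of (key_vector, next_token) pairs; each key stands in for
    the encoding of a training context (hand-written toy vectors here).
    """
    neighbors = sorted(datastore, key=lambda entry: sq_dist(query, entry[0]))[:k]
    # Closer neighbors get more weight: normalized exp of negative distance.
    weights = [math.exp(-sq_dist(query, key)) for key, _ in neighbors]
    total = sum(weights)
    probs = defaultdict(float)
    for w, (_, token) in zip(weights, neighbors):
        probs[token] += w / total
    return dict(probs)


def interpolate(p_lm, p_knn, lam=0.25):
    """Mix the parametric LM distribution with the retrieved one."""
    vocab = set(p_lm) | set(p_knn)
    return {t: lam * p_knn.get(t, 0.0) + (1 - lam) * p_lm.get(t, 0.0) for t in vocab}


datastore = [
    ([1.0, 0.0], "Hawaii"),
    ([0.9, 0.1], "Hawaii"),
    ([0.0, 1.0], "Illinois"),
]
p_lm = {"Hawaii": 0.2, "Illinois": 0.8}  # hypothetical LM output
p_knn = knn_next_token_probs([1.0, 0.05], datastore, k=2)
p_final = interpolate(p_lm, p_knn, lam=0.5)
print(p_final)
```

In this toy case the LM alone prefers "Illinois", but both nearest neighbors in the datastore point to "Hawaii", so the interpolated distribution shifts toward the retrieved evidence.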

Through mediated access to information, this approach illustrates another major advantage of retrieval-based systems: the information being served by the system can be efficiently increased to any degree by scaling up to larger datasets, allowing for effective domain adaptation by simply varying the nearest-neighbor-indexed adjacent datastore without further training or fine-tuning. This is a fundamental advantage over Class 1 systems where any change in the scope of information requires expensive and complex computation to ingest the information into the neural network’s parametric memory. Such retrieval through mediated access from a large and flexible information source was nicely demonstrated by the kNN-LM paper, which showed that it may be better to index and retrieve from a text corpus than to do the traditional gradient descent-based training to update the weights of the DL model.

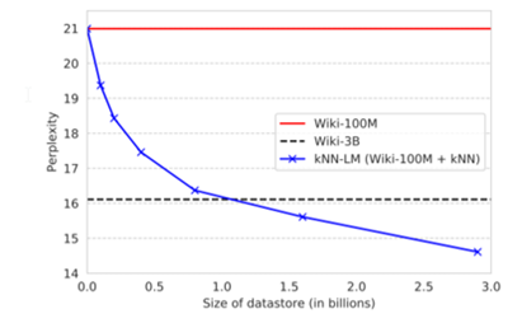

Figure 1 – kNN-LM: Effect of datastore size on perplexities

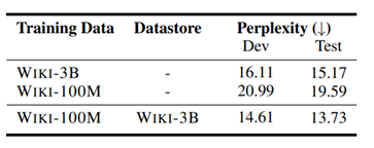

Figure 2 - kNN-LM: Experimental results on WIKI-3B. The model trained on 100M tokens is augmented with an adjacent datastore with 3B training examples and outperforms the plain vanilla LM trained on the entire WIKI-3B training set.

As can be seen in Figure 1, a model trained on 100M tokens (Wiki-100M) can achieve results that are materially better than a model trained on 3B tokens (Wiki-3B) by providing an effective retrieval mechanism (kNN-LM in this example) from a large and scalable datastore. The performance of the system in this case is driven by its mediated access to the information in the adjacent datastore rather than by the need to add more parameters to the neural network model or perform more gradient-based DL training on additional data.

As expected, the kNN-LM approach required substantially less computational cost to train the compact model, which could be accomplished with some CPUs instead of a large construct of dedicated GPUs. Due to the reduction in complexity of the DL inference model, run-time retrieval is performed on a CPU using a vector-similarity search library.

Key Elements of a Class 2 System

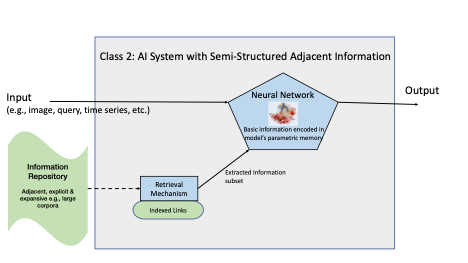

Figure 3 - Key elements of a Class 2 system

Figure 3 depicts the high-level architecture of a Class 2 system and its key components:

The Neural Network contains information such as a language model. However, unlike AI systems of Class 1, the information encoded within the parametric memory represents only a fraction of the overall information required to successfully complete the tasks of the system. The neural network system has the additional capability to accept retrieved information at execution time and integrate this extracted information into its model. This incorporation requires some mapping of the retrieved information into the latent embedding space of the NN.

In this architecture, the training of the neural network needs to be done together with the retrieval mechanism and some representation of the adjacent information repository.

The Semi-Structured Information Repository contains data required for the performance and correct output of the AI system. When used to improve scalability, this auxiliary source contains substantially more information than what is encapsulated within the NN parametric memory. When used to improve flexibility or extensibility, the adjacent information repository contains the specific information that needs to be modifiable or extensible without retraining the NN model.

In many cases, the information in the repository is represented as corpora of plaintext documents that sit outside the AI system. In more specialized cases, the information source is included within a self-contained system such as that required for the size-constrained solutions to EfficientQA. For example, the UCL-FAIR submission, which was one of the winners in the constrained categories of the competition, incorporates a QA Database composed of preselected pairs of questions and answers – 2.4M QA pairs in the 500MB track, and 140K QA pairs in the smallest-size track. The key architectural characteristic of Class 2 is clear in this system as the information resides in a separate explicit construct outside the NN and is accessed for retrieval during execution time.

This characteristic of Class 2 systems offers the opportunity to improve robustness to adversarial attacks that have been demonstrated on Class 1 systems, such as training data extraction. Since the source of knowledge resides outside the parametric memory of the model, access to information can be more easily controlled to prevent leakage of sensitive training data at inference time.

In addition to open-domain QA, retrieval-based systems have excelled in tasks like entity linking, slot filling, dialog, and fact-checking, as evidenced by the Knowledge Intensive Language Tasks leaderboard. Among others, the authors of KnowBERT show that a knowledge-enhanced version of BERT performs better on LM perplexity and on semantically challenging downstream tasks like Word-in-Context (WiC).

Finally, the Retrieval Mechanism with its associated Indexed Links is used to extract the relevant subset of information needed to resolve a particular inference and serve it to the NN for integration and resolution. It mediates between the embedded latent space of the NN and the adjacent – usually explicit and intelligible – information repository. The retrieval mechanism selects the most appropriate subset of information from what can be a very large-scale information source (such as selecting one particular passage from the 21M passages overall in Wikipedia). Methods can include associative approaches that operate directly in the embedded space such as with kNN. It should be noted that the retrieval mechanism could be implemented in code such as an associative library to extract from a database, or in a query language such as SPARQL to manipulate data in RDF format (for example, Question Answering over Knowledge Bases by Leveraging Semantic Parsing and Neuro-Symbolic Reasoning).

The Indexed Links can be a set of embeddings that represent the information source. Selecting a particular embedding (for example, with the shortest distance to a query embedding) will allow retrieval of the relevant information from the adjacent source. In several systems, the indexed links can be modified or extended without retraining the NN. The scope of the system deployed at inference time can be as large as the space covered by its indexed links and can be broader than what was used during training.
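To make the idea of extending the indexed links without retraining concrete, here is a minimal sketch. It assumes a frozen encoder, and the `embed` function below is a deliberately crude, hypothetical stand-in (a hashed bag-of-words vector) rather than a trained neural encoder. The `EmbeddingIndex` class and the example passages are likewise invented for illustration. The point is only that adding an entry changes what the system can retrieve while the encoder stays untouched.

```python
import math


def embed(text, dim=64):
    """Hypothetical frozen encoder: hashed bag-of-words, L2-normalized.

    Stands in for a trained NN encoder; nothing below ever modifies it.
    """
    vec = [0.0] * dim
    for word in text.lower().split():
        vec[sum(ord(c) for c in word) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class EmbeddingIndex:
    """Indexed links: one embedding per passage in the adjacent repository."""

    def __init__(self):
        self.entries = []  # list of (embedding, passage) pairs

    def add(self, passage):
        self.entries.append((embed(passage), passage))

    def search(self, query):
        """Return the passage whose embedding is most similar to the query."""
        q = embed(query)
        return max(
            self.entries,
            key=lambda entry: sum(a * b for a, b in zip(q, entry[0])),
        )[1]


index = EmbeddingIndex()
index.add("The Eiffel Tower is in Paris")
index.add("Mount Fuji is in Japan")

query = "Where is the Louvre museum"
before = index.search(query)  # best available match, but not about the Louvre

# Extend the repository at deployment time; the encoder is unchanged.
index.add("The Louvre museum is in Paris")
after = index.search(query)
print(before, "->", after)
```

A production system would typically use a learned encoder and an approximate nearest-neighbor library instead of the linear scan shown here.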

Going Deeper, Going Higher

The information-centric classification presented here is complementary to processing-centric taxonomies from researchers such as Kautz. The type that aligns closely with Class 2 information-based systems is Kautz’s Neuro[Symbolic] type, where symbolic reasoning is implemented inside a neural engine. In our Class 2, the retrieval mechanism accesses a symbolic information repository and can be symbolic (as in Reading Wikipedia to Answer Open-Domain Questions) or neural (as in Dense Passage Retrieval for Open-Domain Question Answering). Other Kautz types can utilize retrieval of information at execution-time to improve the scale and extensibility of the neural networks. Nevertheless, as is demonstrated in the above discussion of Class 2, the classification and characterization of systems based on the way they represent, maintain and access their information provides an additional valuable prism for architecting AI systems.
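For contrast with embedding-based retrieval, a symbolic retrieval mechanism can be as simple as matching query terms against passages and weighting rarer terms more heavily. The sketch below uses a smoothed TF-IDF score as a simplified stand-in for that family of methods. It is not the implementation from any of the cited papers, and the function name, the passages, and the smoothing formula are assumptions made for illustration.

```python
import math
from collections import Counter


def tfidf_rank(query, passages):
    """Rank passages by the summed TF-IDF weight of the query terms they contain."""
    docs = [Counter(p.lower().split()) for p in passages]
    n = len(docs)

    def idf(term):
        df = sum(1 for d in docs if term in d)
        return math.log((n + 1) / (df + 1)) + 1.0  # smoothed inverse document frequency

    terms = set(query.lower().split())
    # Counter returns 0 for absent terms, so unmatched terms add nothing.
    scores = [sum(doc[t] * idf(t) for t in terms) for doc in docs]
    order = sorted(range(n), key=lambda i: scores[i], reverse=True)
    return [(passages[i], scores[i]) for i in order]


passages = [
    "kNN-LM retrieves nearest neighbors from a datastore",
    "RAG retrieves Wikipedia passages with a dense index",
    "GPT-3 stores information in its parameters",
]
ranked = tfidf_rank("dense index over Wikipedia", passages)
top_passage, top_score = ranked[0]
print(top_passage, round(top_score, 3))
```

Here every score traces back to explicit term matches, which is what makes this style of retrieval easy to inspect. A neural retriever trades that transparency for the ability to match passages that share meaning but not vocabulary.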

In the next blog, I will discuss Class 3 systems, which have deeply structured knowledge. While they are less common today, I expect them to play an increasingly central role in future AI systems. Class 3 systems will enable higher degrees of contextualization, reasoning, and multi-modality, and will support the upcoming emergence of cognitive AI. The pace of evolution in AI is truly remarkable. Making the right information-centric architectural choices upfront will be crucial for enabling the next wave of material innovations.

Read more

- Seat of Knowledge - Information-centric Classification in AI

- Age of Knowledge Emerges

Gadi joined Intel in 1983 and has since held a variety of senior technical leadership and management positions in chip design, software engineering, CAD development and research. Gadi played a key leadership role in the product line introduction of several new micro-architectures including the very first Pentium, the first Xeon processors, the first Atom products and more. He was vice president and engineering manager of groups including the Enterprise Processors Division, Software Enabling Group and Intel’s corporate EDA group. Since 2014, Gadi has participated in driving cross-company AI capabilities in HW, SW and algorithms. Prior to joining Intel Labs, Gadi was Vice President and General Manager of Intel’s Artificial Intelligence Platforms Group (AIPG).

Gadi received his bachelor’s degree in computer engineering from the Technion University, Israel, where he also pursued graduate studies with an emphasis on AI.

