Published February 16th, 2021

Gadi Singer is Vice President and Director of Emergent AI Research at Intel Labs, leading the development of the third wave of AI capabilities.

Class 1 – Fully Encapsulated Information

First in a series on the choices for capturing information and using knowledge in AI systems.

There is much discussion about the processing needed to propel further advancement in artificial intelligence (AI). Some debate the value of integrating symbolic information and processing with neural networks and end-to-end deep learning systems. Among others exploring this space, Henry Kautz proposed a taxonomy for neural-symbolic computing that parses the integration of differentiable and symbolic information at the processing level and introduces six types of systems. In this series of blogs, I offer a different perspective: an information-centric classification that emphasizes the type, structure, and representation of knowledge and its implications for the attributes of the systems that deploy it.

Such a classification is needed to categorize and assess the structure and representation of the information that AI systems integrate as they learn and perform. In this blog, I argue that an information-centric characterization of solutions can bring clarity to the underlying choices made and to their material implications for the quality and efficiency of AI systems. This perspective benefits any non-trivial AI system and has special consequences for large, complex systems and for those targeting higher levels of machine intelligence. In my last blog on the role of deep knowledge, I explored how AI is approaching a major inflection point: a categorical shift in capabilities that includes a deeper comprehension of the world based on cognitive competencies such as abstraction, reasoning, logical decision-making, and the ability to adapt to new circumstances. While the structure and representation of information are integral to advanced cognitive AI, the corresponding system architecture choices are equally relevant and will add value to complex AI tasks at all levels of machine cognition.

Information-Centric Classification of AI System Architectures

Many modern deep learning (DL) systems achieve expert performance on tasks that do not require higher cognitive functions. Recognition tasks such as object recognition and syntactic part-of-speech labeling do not demand large volumes of semantic or ontological information. Feature identification and the mapping of patterns or tokens in a latent embedding space are examples of tasks based primarily on processing shallow, unstructured input data. Such processing excels at leveraging structural dependencies and statistical correlations. Natural language processing (NLP) tasks such as translation and next-word prediction rely on tracking all relevant tokens (for example, words in a vocabulary or word combinations) along with information about the relationships between them, such as their co-occurrence statistics.

The cognitive task of understanding requires deeper semantic information. Several key questions in AI systems cannot be answered by statistical correlation alone, such as those that require underlying commonsense knowledge or complex reasoning over a broad base of information. The design of AI systems needs to take into consideration the information being used: where the persistent information resides, how it is represented, and what mechanisms are used to access the relevant information. Here, persistent information means information either captured within the models or accessed by the models during inference to complete a task.

As the examples for each class will show, an information-centric classification of approaches to data or knowledge integration and structure can help explain the overall efficiency and effectiveness of an AI system and provide key clues for selecting the best approach for a given task. In this series, we will discuss three key classes and their characteristics: Class 1, AI systems with fully encapsulated information; Class 2, retrieval-augmented systems with semi-structured information; and Class 3, retrieval-based systems with deep structured knowledge.

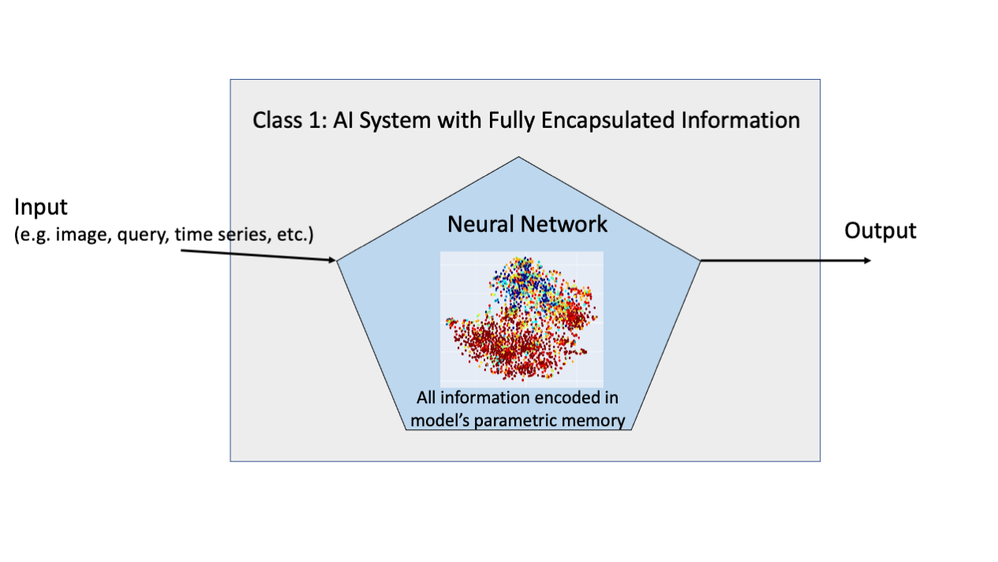

Class 1: Fully Encapsulated Information

The first category encompasses the prototypical DL system. It represents what can be referred to as “fully encapsulated information”: all persistent information is stored in the model’s parametric memory and fully memorized by the system. During inference, the model has no access to auxiliary information external to it. This has been the primary approach as neural networks (NNs) evolved, and the trend has continued with the recent development of Transformer models. These models have consistently gained performance, in part through the addition of more data and more parameters, generating outstanding progress in NLP and other tasks. In the prototypical neural network architecture, the inference flow includes only the input query to be processed and the NN model with its internal structure and parameters.
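
To make this inference flow concrete, here is a minimal Python sketch under simplifying assumptions: a single logistic unit stands in for a full NN, and the names `PARAMS` and `predict`, as well as the weight values, are hypothetical. The point it illustrates is that `predict` reads nothing but the input query and the model's own parameters.

```python
import math
from typing import Sequence

# Hypothetical "parametric memory": in this sketch, every piece of persistent
# information the model uses lives in these weights. Values are illustrative.
PARAMS = {
    "w": [0.8, -0.5, 0.3],  # one weight per input feature
    "b": 0.1,               # bias term
}

def predict(params: dict, query: Sequence[float]) -> float:
    """Fully encapsulated inference: the output depends only on the input
    query and the model's own parameters. No database, index, or other
    external source is consulted."""
    z = sum(w * x for w, x in zip(params["w"], query)) + params["b"]
    return 1.0 / (1.0 + math.exp(-z))  # logistic output in (0, 1)

if __name__ == "__main__":
    print(round(predict(PARAMS, [1.0, 2.0, 0.5]), 4))  # prints 0.5125
```

A real Transformer has many layers and billions of parameters, but the inference interface is the same shape: query in, parameters applied, answer out.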

There are many advantages to a DL solution with fully encapsulated information. For one, an end-to-end NN model is the most straightforward system to train with stochastic gradient descent: because all relevant information and function are represented in the model, the iterative learning process can use and update the complete parametric space.
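
As a rough illustration of end-to-end training, the sketch below fits a hypothetical two-parameter linear model with plain stochastic gradient descent. The function name `sgd_train`, the learning rate, and the toy data drawn from y = 2x + 1 are all assumptions for illustration. A real DL model has millions or billions of parameters, but the principle shown is the same: one loss, and every parameter in the model is updated from its gradient.

```python
import random

def sgd_train(data, lr=0.05, epochs=200, seed=0):
    """Fit y ~ w*x + b with plain stochastic gradient descent on squared error.
    Both parameters are updated from the gradient of the same loss, so the
    whole (tiny) parametric space is trained end to end."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    samples = list(data)
    for _ in range(epochs):
        rng.shuffle(samples)  # stochastic: visit examples in random order
        for x, y in samples:
            err = (w * x + b) - y  # prediction error for one example
            # Gradients of 0.5 * err**2 with respect to w and b.
            grad_w, grad_b = err * x, err
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

if __name__ == "__main__":
    # Hypothetical toy data generated from y = 2x + 1.
    data = [(x, 2 * x + 1) for x in [0.0, 0.5, 1.0, 1.5, 2.0]]
    w, b = sgd_train(data)
    print(f"w={w:.3f}, b={b:.3f}")  # converges close to w=2, b=1
```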

However, while elegant and very effective, this approach of incorporating all required information within the parametric memory of the NN model has a few drawbacks. Most significantly, scaling up capabilities requires unsustainable growth in model size and complexity. For example, the impressive GPT-2 language model by OpenAI was introduced in early 2019 with 1.5 billion parameters (a measure of model complexity and size). OpenAI introduced its follow-up GPT-3 model in mid-2020 with 175 billion parameters. That is more than 100x growth in less than a year and a half! Even this model size is dwarfed by the more recent Switch Transformer model from Google Brain, which surpasses one trillion parameters.
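
The growth figures above can be checked with simple arithmetic. This sketch uses only the parameter counts quoted in the text; treating one trillion as the Switch Transformer count is an assumption, since the text says only that the model surpasses that figure, so the second ratio is a lower bound. The helper `growth_factor` is hypothetical.

```python
# Parameter counts as quoted in the text. The Switch Transformer entry is a
# lower bound, because the text says only that it surpasses one trillion.
PARAM_COUNTS = {
    "GPT-2": 1.5e9,
    "GPT-3": 175e9,
    "Switch Transformer": 1e12,
}

def growth_factor(old: float, new: float) -> float:
    """How many times larger the newer model is than the older one."""
    return new / old

if __name__ == "__main__":
    g = growth_factor(PARAM_COUNTS["GPT-2"], PARAM_COUNTS["GPT-3"])
    print(f"GPT-3 vs. GPT-2: {g:.0f}x")  # prints "GPT-3 vs. GPT-2: 117x"
    s = growth_factor(PARAM_COUNTS["GPT-3"], PARAM_COUNTS["Switch Transformer"])
    print(f"Switch Transformer vs. GPT-3: at least {s:.1f}x")  # at least 5.7x
```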

The rapid growth in the number of parameters in DL models is driven largely by the volume of information being captured. This growth drives up processing and compute needs: very large DL models require large, mostly dedicated compute resources and significant training time. This makes them expensive, complex, and less accessible beyond large companies or institutions. For example, training GPT-3's neural network is estimated to cost millions of dollars and hundreds of GPU-years of compute. These large language models are also harder to move from the cloud and data centers to the edge and end devices, where they are sometimes needed for inference.
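
To see why such models are hard to move to the edge, a back-of-the-envelope estimate of the memory needed just to store the weights helps. This sketch assumes 4 bytes per parameter for 32-bit floats and 2 bytes for 16-bit floats, and deliberately ignores activations, optimizer state, and runtime overhead, so actual requirements are higher. The helper name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes).
    Ignores activations, optimizer state, and framework overhead, so this is
    a lower bound on what a device would actually need."""
    return num_params * bytes_per_param / 1e9

if __name__ == "__main__":
    for name, n in [("GPT-2", 1.5e9), ("GPT-3", 175e9)]:
        for fmt, nbytes in [("fp32", 4), ("fp16", 2)]:
            print(f"{name} weights in {fmt}: {weight_memory_gb(n, nbytes):,.0f} GB")
```

Under these assumptions, GPT-3's weights alone come to roughly 350 GB in 16-bit precision, far beyond the memory of typical edge and end devices.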

Furthermore, the only way to change the information in a model with fully encapsulated information is to retrain or fine-tune the DL model. This is expensive and requires significant data science expertise, limiting the extensibility and adaptation of the system to new information and domains. It also curtails access to this technology for small and medium businesses without sufficient resources and expertise to modify such hugely complex models and adapt them to their particular data and needs.
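
The sketch below illustrates why retraining or fine-tuning is the only update path in this class. A hypothetical one-weight model first learns that input 1.0 maps to 3.0; when that "fact" changes to 5.0, there is no stored record to edit, only more gradient steps on new data. The function `fine_tune`, its step size, and the fact values are assumptions for illustration.

```python
def fine_tune(w: float, examples, lr: float = 0.1, steps: int = 100) -> float:
    """Gradient descent on squared error for a hypothetical one-weight model
    y = w * x. Returns the updated weight."""
    for _ in range(steps):
        for x, y in examples:
            err = w * x - y
            w -= lr * err * x  # gradient of 0.5 * err**2 with respect to w
    return w

if __name__ == "__main__":
    # Initial training: the model "memorizes" that input 1.0 maps to 3.0.
    w = fine_tune(0.0, [(1.0, 3.0)])
    print(f"before update: {w * 1.0:.2f}")
    # The fact changes to 5.0. The knowledge exists only as a weight, so the
    # only way to change it is to run more training on new data.
    w = fine_tune(w, [(1.0, 5.0)])
    print(f"after fine-tuning: {w * 1.0:.2f}")
```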

Finally, there is a risk that personally identifiable information memorized from the training set leaks at query time. Adversarial attack techniques exist that can extract memorized training data from a model, which poses a challenge when private or protected information is used in training.

The Duality of Processing- and Information-Centric Classifications

As a complementary view to Kautz’s processing-centric taxonomy of neural-symbolic systems, the proposed information-centric classification is based on the representation and location of the data and knowledge being integrated. Mapping between the two taxonomies, the ‘fully encapsulated’ information class is equivalent to Kautz’s category 1, ‘symbolic Neuro symbolic’, in which all information used by the system (excluding the input query and the delivered output) is fully encapsulated within the parametric memory of the neural network.

The full value of understanding the various classes of information representation and storage becomes evident as we look at the rapidly evolving family of solutions that rely on retrieving information from sources outside the DL model. In upcoming blogs, I will discuss other approaches to information representation, including retrieval-augmented systems with semi-structured information (class 2) and retrieval-based systems with deep structured knowledge (class 3). These architectures hold exciting potential to alleviate the challenges of DL with fully encapsulated information, to address the ever-growing size and complexity of DL models, and to bring us closer to AI systems with higher machine intelligence.

Read more:

- Age of Knowledge Emerges:
  - Part 1: Next, Machines Get Wiser
  - Part 2: Efficiency, Extensibility and Cognition: Charting the Frontiers
  - Part 3: Deep Knowledge as the Key to Higher Machine Intelligence

Gadi joined Intel in 1983 and has since held a variety of senior technical leadership and management positions in chip design, software engineering, CAD development and research. Gadi played a key leadership role in the product line introduction of several new micro-architectures, including the very first Pentium, the first Xeon processors, the first Atom products and more. He was vice president and engineering manager of groups including the Enterprise Processors Division, Software Enabling Group and Intel’s corporate EDA group. Since 2014, Gadi has helped drive cross-company AI capabilities in HW, SW and algorithms. Prior to joining Intel Labs, Gadi was Vice President and General Manager of Intel’s Artificial Intelligence Platforms Group (AIPG).

Gadi received his bachelor’s degree in computer engineering from the Technion University, Israel, where he also pursued graduate studies with an emphasis on AI.