Published February 21st, 2022

Mariano Tepper is a researcher with the Brain-Inspired Computing Lab at Intel Labs. This post is a joint work with Sourabh Dongaonkar, Mark Hildebrand, Cecilia Aguerrebere, Jawad Khan, and Ted Willke.

Similarity search, also known as approximate nearest neighbor (ANN) search, constitutes the backbone of many AI applications in production and datacenter contexts that require search, recommendation, and ranking operations on web-scale databases. In these settings, accuracy, speed, scale, and cost are critical dimensions with stringent quality-of-service constraints. In this blog, we describe a solution that reaches new heights along all of these dimensions by leveraging the computational capabilities of Intel® Xeon® and the access characteristics of Optane™ Persistent Memory. To showcase our developments, we participated in the NeurIPS 2021 "Billion-Scale Approximate Nearest Neighbor Search Challenge," winning the custom hardware track. Our results offer an 8x~19x reduction in total cost of ownership (TCO) over the next-best solution and democratize similarity search in the modern large-scale, high-accuracy, and high-performance scenario.

What Is Similarity Search?

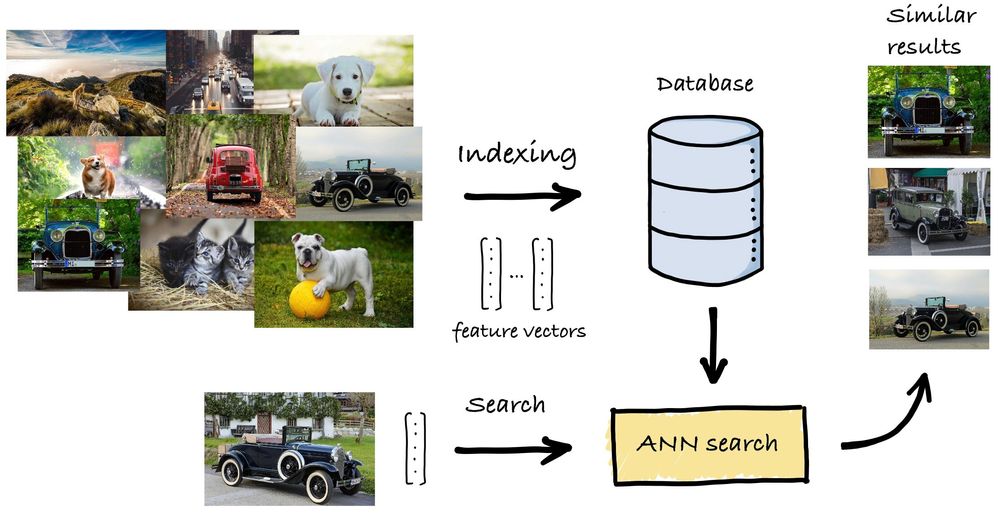

Given a database of high-dimensional feature vectors and a query vector of the same dimension, the objective of similarity search is to retrieve the database vectors that are most similar to the query, based on some similarity function. In modern applications, these vectors represent the content of data (images, sounds, text, etc.), extracted and summarized using deep learning systems such that similar vectors correspond to items that are semantically related.

To be useful in practice, a similarity search solution needs to provide value across different dimensions:

- Accuracy: The search results need to be of good quality to be actionable, that is, the retrieved items need to be similar to the query.

- Performance: The search needs to be fast, often meeting stringent quality-of-service constraints.

- Scaling: Databases are quickly becoming larger and larger, both in terms of the number of items they contain and in terms of the dimensionality of said items.

- Cost: Being deployed in production and datacenter scenarios, the solution needs to minimize the TCO, often measured as a combination of capital expenditures and operating expenses.

A natural solution is to linearly scan over each vector in the database, compare it with the query, rank the results in decreasing order of similarity, and return the most similar ones. However, the sheer volume and richness of data preclude this approach and make large-scale similarity search an extremely challenging problem that is both compute and memory-intensive. To achieve acceleration, dedicated solutions are needed, which commonly involve two phases:

- During indexing, each element in the database is converted into a high-dimensional vector. Then, an advanced data structure, called an index, is set up such that the search can be carried out as efficiently as possible by effectively accessing only a small fraction of the database.

- At search time, given a query vector, an algorithm sifts through the database using the index. Its results are used to take different informed actions, depending on the final application, based on these semantically relevant results.

The NeurIPS 2021 "Billion-Scale Approximate Nearest Neighbor Search Challenge"

In December 2021, the first billion-scale similarity search competition was run as part of the NeurIPS conference. The goal of the competition was to provide a comparative understanding of the state-of-the-art across a curated collection of datasets, and to promote the development of new solutions.

We participated in the competition's custom hardware track, where we could take full advantage of Intel®'s hardware offerings. In particular, we developed a solution that fully leverages the capabilities of Intel® Xeon® CPUs and Optane™ Persistent Memory, creating a one-two approach that led us to win the competition.

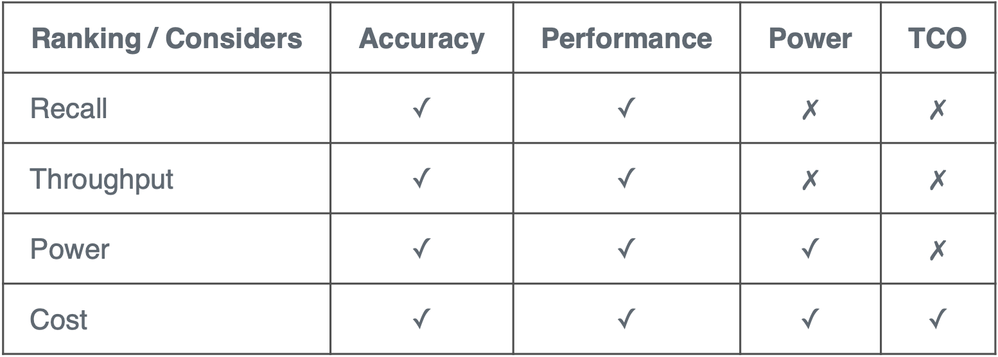

Before going to the actual results, we offer a high-level view of what goes into each ranking:

For example, the "Cost ranking" does not just measure cost, it measures cost (TCO) but only after controlling for all three other metrics. As such, it is the most comprehensive ranking.

We provide a snapshot of the competition's final leaderboard. Further details about the competition's custom hardware track are available here.

Our solution performed well in all categories, ranking first in two and second in the other two. No other solution achieves this balance. For example, diskann takes the first spot in recall but is fifth in throughput. Conversely, cuanns_multigpu leads in throughput but is fifth in recall.

Regarding the recall and throughput rankings:

- The recall ranking measures accuracy and is computed at a fixed performance (throughput). Although in second place, we are within 0.25% of the leader, with the third placement being more than 10% lower.

- The throughput ranking measures performance and is computed at a fix accuracy (recall). Here, we are again in second place, with a performance that is 3.47 times lower than the leader. However, as we will see next, our solution is much less expensive!

Perhaps more importantly, we dominate the comprehensive Cost ranking by a significant margin. Digging deeper, our solution is between 8.85 and 19.7 times better than the second-place solution, cuanns_ivfpq, that uses an Nvidia DGX™ A100 GPU system. In all datasets, except for the most challenging one (in terms of raw size), our solution offers a TCO of $15,000~$16,000, versus cuanns_ivfpq's TCO of $150,000~$300,000. For the most challenging dataset, our TCO is approximately $100,000, versus cuanns_ivfpq's TCO of $900,000. Our TCO savings democratize the access to similarity search systems in the modern large-scale, high-accuracy, and high-performance scenario.

The key behind our results is a characterization of the computational and memory access patterns behind similarity search. On the one hand, we identified that CPUs, and Xeon® in particular, are the optimal hosts for the computations. On the other hand, we realized how to strategically place different data structures in different memory tiers, thus lowering the cost while sustaining performance with the usage of Optane™. For the readers that want to know more, we offer a peek under the hood after the conclusions.

Conclusions

Our solution impugns common wisdom surrounding similarity search that says: if you need performance use GPUs, if you need accuracy use CPUs, and if you need both go home. By combining Xeon® and Optane™, we demonstrate that it is possible to simultaneously achieve high accuracy and high performance. Moreover, the solution comes with the added benefits of low power consumption and an affordable TCO!

If you are as thrilled about the future of similarity search as we are, stay tuned! We are planning to release our solution as an open-source library in 2022.

A Peek Under the Hood

One of the premier similarity search methods employs graphs as indices. Here, two main data structures are used: the feature vectors and the graph, with one node per feature vector and a strategically and parsimoniously selected edge set. We carefully studied this family of algorithms, characterizing its compute and memory access patterns. The key findings were:

- The search algorithm is not massively parallelizable. The main search procedure consists of a fast graph traversal, with a pointer-chasing memory access pattern. The sequential nature of the incumbent operations favors CPUs over massively-parallel architectures, like GPUs.

- Multiple searches can be performed in parallel. Although each search is not intrinsically parallelizable, Xeon®'s multi-threading and multi-core capabilities enable executing multiple searches in parallel without noticeably slowing each search individually.

- The similarity comparisons are amenable to single instruction, multiple data (SIMD) parallelism. The dominant similarity measures used for comparisons are cosine similarity, Euclidean distance, and inner product, all of which can be implemented with extreme efficiency using Intel® Xeon®'s AVX512 instruction set.

- While all memory accesses are random, the graph and the vectors have different access patterns. Throughout the search, the feature vectors are accessed much more frequently than the graph. The graph, even if sparse, occupies a significant amount of memory and can hardly be compressed. This situation is ideal for placing the graph in Optane™ Persistent Memory, which enables an affordable increase in the memory capacity per socket while retaining a low latency. We keep the feature vectors in DRAM memory as they are compressible, occupying less memory. (For the competition, we did not compress the feature vectors; however, future solutions will incorporate this feature)

Following these findings, careful architecture-specific optimizations, such as memory alignment and allocation and thread management were necessary. All in all, our solution fully leverages Xeon®'s and Optane™'s capabilities and features.

The main hardware specifications of our system are:

- Dual socket Intel® Xeon® Gold 6330N Processor

- 16 DIMMs of Intel® Optane™ Persistent Memory 200 Series

- 16 DIMMs of 32GB DDR4 DRAM

Further hardware details and cost are available here and here.

The benchmarking performed by the competition organizers, according to the competition rules, and published on Dec 5, 2021, is explained on the competition website.

Notices & Disclaimers

Performance varies by use, configuration and other factors. Learn more at www.Intel.com/PerformanceIndex.

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure.

Your costs and results may vary.

Intel does not control or audit third-party data. You should consult other sources to evaluate accuracy.

Intel technologies may require enabled hardware, software or service activation.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

Mariano Tepper is a researcher with the Brain-Inspired Computing Lab at Intel Labs. He is interested in image processing and analysis, computer vision, pattern recognition, machine learning, optimization, and network analysis. He is also interested in their application to very diverse fields, such as neuroscience, medicine, social sciences, and art. Mariano Tepper holds a Master’s Degree in applied mathematics from the École Normale Supérieure de Cachan and a Ph.D. in Computer Science from the University of Buenos Aires.

Mariano Tepper is a researcher with the Brain-Inspired Computing Lab at Intel Labs. He is interested in image processing and analysis, computer vision, pattern recognition, machine learning, optimization, and network analysis. He is also interested in their application to very diverse fields, such as neuroscience, medicine, social sciences, and art. Mariano Tepper holds a Master’s Degree in applied mathematics from the École Normale Supérieure de Cachan and a Ph.D. in Computer Science from the University of Buenos Aires.

Sie müssen ein registrierter Benutzer sein, um hier einen Kommentar hinzuzufügen. Wenn Sie sich bereits registriert haben, melden Sie sich bitte an. Wenn Sie sich noch nicht registriert haben, führen Sie bitte eine Registrierung durch und melden Sie sich an.