Authors: Narasimha Lakamsani, Devang Aggarwal, Sesh Seshagiri, Jian Sun

In a previous blog, we showed how you can use Windows Subsystem for Linux (WSL 2), a product that runs a Linux environment directly on Windows, to leverage the power of the Intel® iGPU on your laptop for inferencing in your applications.

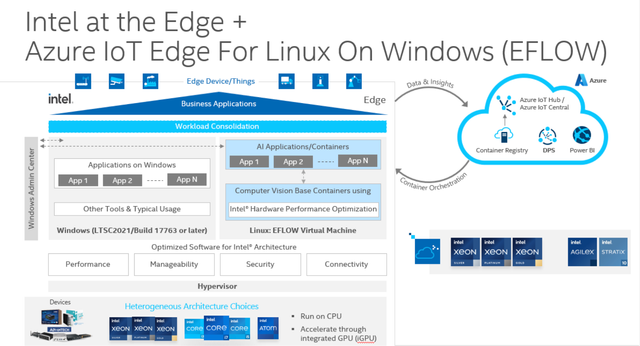

In this blog, we will talk about a similar product, Azure IoT Edge for Linux on Windows (EFLOW). EFLOW and WSL are similar in that both use the same underlying technology and the same type of containerized workloads. However, they were created with different audiences and use cases in mind: WSL is meant for building, testing, and running Linux code and applications, whereas EFLOW is used to deploy Azure IoT Edge modules and Docker images running in containers.

In fact, you can use WSL for development and bundle your developed application in an Azure IoT Edge container to deploy it in EFLOW.

How EFLOW Works

Azure IoT Edge for Linux on Windows allows you to run containerized Linux workloads by running a curated Linux virtual machine on Windows. EFLOW is primarily meant for running production services in IoT deployments, including processes that run around the clock for years.

Unleash the power of Intel® iGPU with the OpenVINO™ Toolkit

Intel’s integrated Graphics Processing Unit (iGPU) sits on the same die as your CPU, and you can use this added power to accelerate your AI inferencing workloads. Intel GPUs come in two flavors: integrated (built into the CPU) and discrete (a stand-alone graphics card, branded as Intel® Iris® Xe MAX).

To unleash the full potential of Intel hardware for AI workloads, you can use the OpenVINO™ toolkit, which is available as open source here: https://github.com/openvinotoolkit/openvino

Now, you can access the Intel® iGPU through Azure IoT Edge for Linux on Windows (EFLOW) with the help of the OpenVINO™ Toolkit.

System Setup for EFLOW

Our system (the steps also work on other versions)

- Windows 10/11 Insider Preview

- Windows Internet of Things Enterprise LTSC 21H2 (Build 19044)

Enable Hyper-V

- Open PowerShell with administrator privileges and run the following command:

Enable-WindowsOptionalFeature -Online -FeatureName Microsoft-Hyper-V -All

- Press “y” to reboot and complete the operation

Install Intel® Graphics Driver

- Download and install the latest GPU host driver from https://www.intel.com/content/www/us/en/download/19344/intel-graphics-windows-dch-drivers.html

- Make sure the driver version is 30.0.100.9955 or later (executable file igfx_win_100.9684.exe)

WSL Installation

- Open PowerShell with administrator privileges and run the following command:

wsl --install

- Restart the system to finish the installation

EFLOW Installation

Download EFLOW

- Open PowerShell with administrator privileges and run the following commands:

$msiPath = $([io.Path]::Combine($env:TEMP,'AzureIoTEdge.msi'))

$ProgressPreference = 'SilentlyContinue'

Invoke-WebRequest "https://aka.ms/AzEflowMSI" -OutFile $msiPath

Install EFLOW

Start-Process -Wait msiexec -ArgumentList "/i","$([io.Path]::Combine($env:TEMP, 'AzureIoTEdge.msi'))","/qn"

EFLOW Deployment

Deploy EFLOW

- Open PowerShell with administrator privileges and run the following commands:

Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Force

Import-Module AzureEFLOW

$cpu_count = 4

$memory = 4096

$hard_disk = 30

$gpu_name = (Get-WmiObject win32_VideoController | where{$_.name -like "Intel(R)*"}).caption

Deploy-Eflow -acceptEula yes -acceptOptionalTelemetry no -headless -cpuCount $cpu_count -memoryInMB $memory -vmDiskSize $hard_disk -gpuName $gpu_name -gpuPassthroughType ParaVirtualization -gpuCount 1

SSH into the EFLOW VM

Connect-EflowVM

Ensure that GPU-PV is enabled

- Within the EFLOW VM, run the following command:

ls -al /dev/dxg

- The expected output should be similar to:

crw-rw-rw- 1 root 10, 60 Sep 8 06:20 /dev/dxg
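If you would rather script this check than read the `ls` output by eye, a minimal sketch could look like the one below. The helper name `check_gpu_pv` is our own invention for illustration and is not part of EFLOW; the only thing it assumes is what the listing above shows, namely that GPU-PV exposes a character device at /dev/dxg.

```shell
# Sketch: confirm that the GPU-PV device node is present and accessible.
# check_gpu_pv is a hypothetical helper name, not an EFLOW command.
check_gpu_pv() {
    dev="${1:-/dev/dxg}"   # device node to test; defaults to /dev/dxg
    if [ ! -c "$dev" ]; then
        echo "missing: $dev is not a character device" >&2
        return 1
    fi
    if [ ! -r "$dev" ] || [ ! -w "$dev" ]; then
        echo "no access: $dev is not readable and writable by $(id -un)" >&2
        return 2
    fi
    echo "ok: $dev"
}

check_gpu_pv /dev/dxg || echo "GPU-PV does not appear to be enabled in this VM"
```

A non-zero return code tells you which condition failed: 1 when the device node is missing, 2 when it exists but the current user cannot read and write it.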

Deploy a Docker container with OpenVINO™ Runtime and Run Benchmarking Using the Intel® iGPU

Create the Dockerfile

mkdir -p openvino_docker_dir && cd openvino_docker_dir

vi Dockerfile

- Use the sample Dockerfile listed below to update your file:

    FROM ubuntu:22.04
    RUN rm /bin/sh && ln -s /bin/bash /bin/sh
    ENV DEBIAN_FRONTEND noninteractive
    ENV INTEL_OPENVINO_DIR /opt/intel/openvino_2021
    ENV MO_DIR ${INTEL_OPENVINO_DIR}/deployment_tools/model_optimizer
    ENV OPENVINO_VERSION 2021.4.752
    ENV OPENVINO_VERSION_OMZ 2021.4.2

    # Installing python 3.8
    RUN apt update && apt install -y software-properties-common
    RUN add-apt-repository -y ppa:deadsnakes/ppa && \
        apt install -y python3.8 python3.8-distutils && \
        ln -sf /usr/bin/python3.8 /usr/bin/python3
    RUN apt install -y clinfo wget gnupg gpg-agent software-properties-common nfs-common
    RUN apt install -y libgtk-3-0 python3.8-dev python3-setuptools python3-pip git build-essential cmake vim imagemagick ghostscript
    RUN pip3 install --upgrade pip
    RUN pip3 install virtualenv openvino defusedxml
    RUN pip3 install future yacs
    RUN pip3 install torch==1.8.2+cpu torchvision==0.9.2+cpu torchaudio==0.8.2 -f https://download.pytorch.org/whl/lts/1.8/torch_lts.html
    RUN pip3 install pycocotools pypi-kenlm pdflatex opencv-python numpy==1.19.5

    # Openvino installation as documented at https://docs.openvinotoolkit.org/latest/openvino_docs_install_guides_installing_openvino_apt.html
    RUN unset no_proxy && \
        apt-key add GPG-PUB-KEY-INTEL-OPENVINO-2021 && \
        echo "deb https://apt.repos.intel.com/openvino/2021 all main" | tee /etc/apt/sources.list.d/intel-openvino-2021.list && \
        apt update && \
        apt install -y intel-openvino-runtime-ubuntu20-${OPENVINO_VERSION} \
        intel-openvino-ie-samples-${OPENVINO_VERSION} \
        intel-openvino-model-optimizer-${OPENVINO_VERSION} \
        intel-openvino-pot-${OPENVINO_VERSION}

    # Install the Open Model Zoo Accuracy Checker
    WORKDIR /root
    RUN git clone https://github.com/openvinotoolkit/open_model_zoo.git && cd open_model_zoo && git checkout ${OPENVINO_VERSION_OMZ}
    WORKDIR /root/open_model_zoo/tools/accuracy_checker
    RUN pip3 install -r requirements.in && python3 setup.py install

    # Install the Model optimizer dependencies
    WORKDIR ${MO_DIR}/install_prerequisites
    RUN unset no_proxy && \
        source ${INTEL_OPENVINO_DIR}/bin/setupvars.sh && \
        sed -i 's/sudo -E//g' install_prerequisites.sh && \
        ./install_prerequisites_tf2.sh && \
        ./install_prerequisites_caffe.sh && \
        ./install_prerequisites_onnx.sh && \
        ./install_prerequisites_mxnet.sh

    # Environment Setup
    RUN echo "source /opt/intel/openvino_2021/bin/setupvars.sh" | tee -a /root/.bashrc
    ENV LD_LIBRARY_PATH=/opt/intel/openvino_2021/deployment_tools/inference_engine/external/tbb/lib/:/opt/intel/openvino_${OPENVINO_VERSION}/deployment_tools/inference_engine/lib/intel64/:/opt/intel/openvino_${OPENVINO_VERSION}/deployment_tools/ngraph/lib/

    # Install Intel OpenCL release and dependencies
    WORKDIR /root/neo
    RUN wget https://github.com/intel/intel-graphics-compiler/releases/download/igc-1.0.12149.1/intel-igc-core_1.0.12149.1_amd64.deb && \
        wget https://github.com/intel/compute-runtime/releases/download/22.38.24278/libigdgmm12_22.1.8_amd64.deb
    RUN dpkg -i /root/neo/*.deb

Build the Docker Image

- Within the directory where the Dockerfile is located, run the following command:

sudo docker build -t ovbuild .

Launch the Docker Container with Access to the GPU-PV Functionality

- List the images and use the output to find the <IMAGE ID> of the image you built:

sudo docker images

- Start the container with that <IMAGE ID>:

sudo docker run -it --device /dev/dxg:/dev/dxg -v /usr/lib/wsl:/usr/lib/wsl <IMAGE ID> bash
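To make the pieces of that `docker run` command easier to see, here is a small sketch that assembles it one option per line. The helper name `gpu_run_cmd` is hypothetical, and it only prints the command so you can review it before running it yourself. The `--device` option passes the /dev/dxg device node into the container, and the `-v` option mounts /usr/lib/wsl from the VM; we assume, as in WSL, that this directory is where the GPU user-mode libraries supplied by the host are found.

```shell
# Sketch: print the GPU-PV docker run command for a given image.
# gpu_run_cmd is a hypothetical helper; it does not start a container.
gpu_run_cmd() {
    image="${1:?usage: gpu_run_cmd <image id or tag>}"
    # --device: expose the paravirtualized GPU device node to the container
    # -v:       mount /usr/lib/wsl from the VM at the same path
    echo "sudo docker run -it" \
         "--device /dev/dxg:/dev/dxg" \
         "-v /usr/lib/wsl:/usr/lib/wsl" \
         "$image bash"
}

gpu_run_cmd ovbuild
```

Docker accepts a tag wherever it expects an image ID, so passing `ovbuild` (the tag used in the build step) works in place of looking up the ID.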

-

Run OpenVINO™ Benchmarking Using the iGPU

-

Within the deployed container, run the following commands:

-

cd /opt/intel/openvino_2021/inference_engine/samples/cpp &&

./build_samples.sh

-

cd ~/inference_engine_cpp_samples_build/intel64/Release

-

wget https://download.01.org/opencv/2021/openvinotoolkit/2021.2/ope

n_model_zoo/models_bin/3/face-detection-retail-0004/FP32/face-detection-retail-0004.xml -

wget https://download.01.org/opencv/2021/openvinotoolkit/2021.2/ope

n_model_zoo/models_bin/3/face-detection-retail-0004/FP32/face-detection-retail-0004.bin -

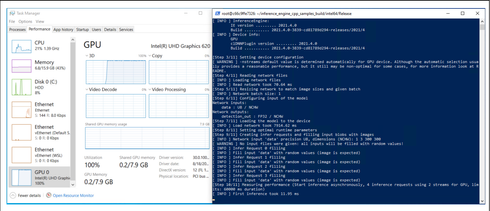

./benchmark_app -m face-detection-retail-0004.xml -d GPU

- The output should look something like the image shown below with clear indication of iGPU usage in the task manager
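If you want to see what the iGPU buys you on your own machine, one option is to run the same benchmark on the CPU and on the GPU and compare the reported throughput. The sketch below assumes that benchmark_app prints a summary line of the form `Throughput: <number> FPS`; check that against your own output before relying on it. The helper names `extract_fps` and `speedup` are ours, for illustration only.

```shell
# Sketch: pull the throughput figure out of benchmark_app output and
# compare two runs. Assumes a summary line like "Throughput: 123.45 FPS".
extract_fps() {
    # Reads benchmark output on stdin and prints the number after "Throughput:"
    awk '/Throughput:/ { print $2 }'
}

speedup() {
    # Prints gpu_fps / cpu_fps with two decimals; fails if cpu_fps is not positive
    awk -v cpu="$1" -v gpu="$2" \
        'BEGIN { if (cpu > 0) printf "%.2f\n", gpu / cpu; else exit 1 }'
}

# Example usage inside the container (left commented out because each
# benchmark run takes a while):
# cpu_fps=$(./benchmark_app -m face-detection-retail-0004.xml -d CPU | extract_fps)
# gpu_fps=$(./benchmark_app -m face-detection-retail-0004.xml -d GPU | extract_fps)
# speedup "$cpu_fps" "$gpu_fps"
```

The ratio you get depends on your hardware, driver version, and model, so treat it as a measurement of your setup rather than a general figure.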

Azure IoT Hub Deployment

Learn how to deploy Azure IoT Edge modules from Azure cloud to your Windows device here.

Conclusion

We hope that with this blog, we were able to show you just how easy it is to:

- Set up and deploy your application or Docker container in Linux VMs using EFLOW on a Windows device.

- Offload your AI workloads by harnessing the power of iGPU.

- Get a significant performance boost by simply using OpenVINO.

Notices & Disclaimers

Performance varies by use, configuration and other factors. Learn more at www.Intel.com/PerformanceIndex .

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure.

Your costs and results may vary.

Intel technologies may require enabled hardware, software or service activation.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others

Devang is an AI Product Manager at Intel. He is part of Internet of Things (IoT) Group, where his focus is driving OpenVINO™ Toolkit integrations into popular AI Frameworks like ONNX Runtime. He also works with CSPs to enable cloud developers to seamlessly go from cloud to edge.
