Sometimes we want to run the same model code on different types of AI accelerators. For example, your development laptop may have a GPU while your training server uses Gaudi. Or you may want to choose dynamically between a local GPU server and a cloud DL1 instance powered by Gaudi. While writing code for a single type of AI accelerator is easy, we need to pay attention to the “little things” when we enable multiple hardware platforms from a common code base.

In this tutorial we will learn how to write code that automatically detects which type of AI accelerator is available on the machine (Gaudi, GPU or CPU) and makes the changes needed to run smoothly.

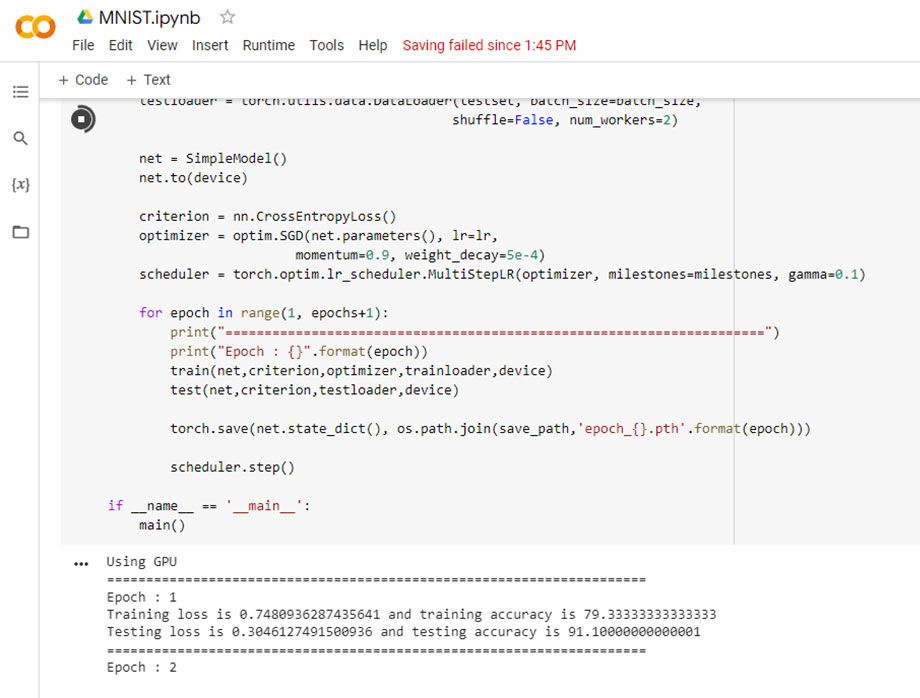

We will use the “Getting Started with PyTorch and Gaudi” MNIST training example and show how to turn it into cross-hardware code with a few tweaks.

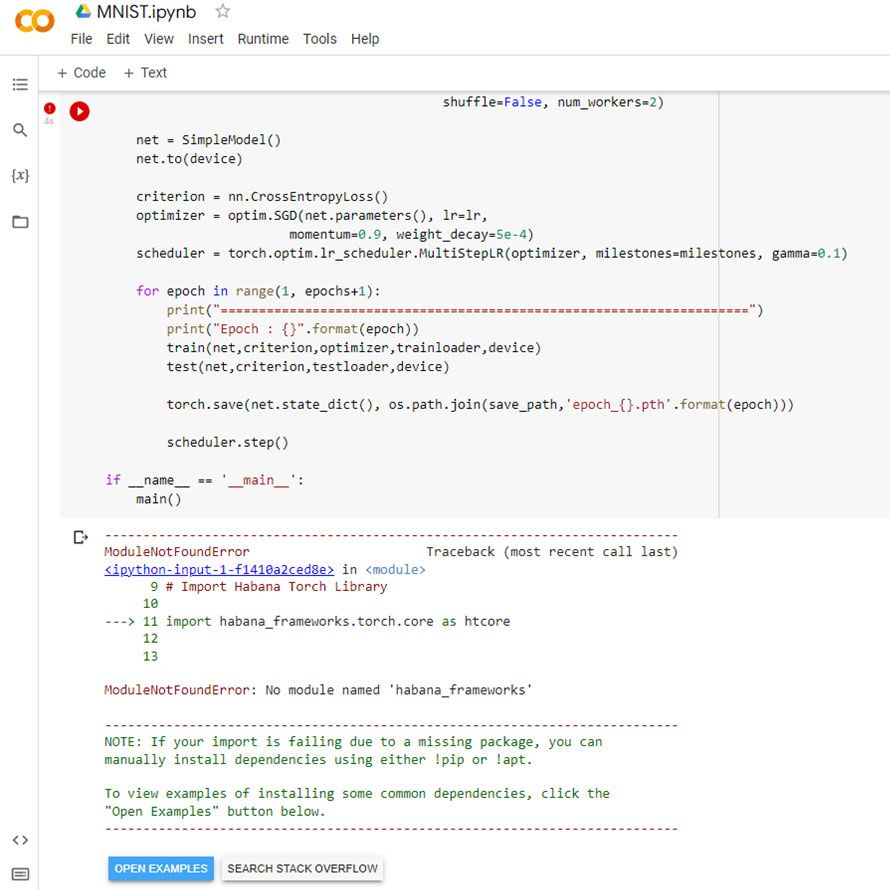

Trying to simply run the code in a non-SynapseAI/Gaudi environment will generate the following error:

    ModuleNotFoundError: No module named 'habana_frameworks'

We get this error because the habana_frameworks module is not installed on this machine. To overcome this obstacle, we wrap the habana_frameworks imports in a try/except block as follows:

    try:
        # Available only where SynapseAI is installed
        import habana_frameworks.torch.core as htcore
        import habana_frameworks.torch.hpu as hthpu
    except ImportError:
        # Not a SynapseAI/Gaudi environment
        htcore = None
        hthpu = None

Note that when the import fails, we assign None to both htcore and hthpu. This lets us check later whether SynapseAI is installed on the machine.
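The same guard can be factored into a small helper when several optional modules are involved. The following is a minimal sketch, not part of the original example: `optional_import` is a hypothetical helper name, and it relies only on the standard library's `importlib.import_module`.

```python
import importlib


def optional_import(module_name):
    """Return the imported module, or None if it is not installed.

    `optional_import` is a hypothetical helper used for illustration only.
    """
    try:
        return importlib.import_module(module_name)
    except ImportError:
        # ModuleNotFoundError is a subclass of ImportError, so a missing
        # package (e.g. habana_frameworks on a non-Gaudi machine) lands here.
        return None


# On a machine without SynapseAI, both names end up as None.
htcore = optional_import("habana_frameworks.torch.core")
hthpu = optional_import("habana_frameworks.torch.hpu")
```

Catching `ImportError` instead of using a bare `except` keeps unrelated failures, such as a `KeyboardInterrupt`, from being silently swallowed.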

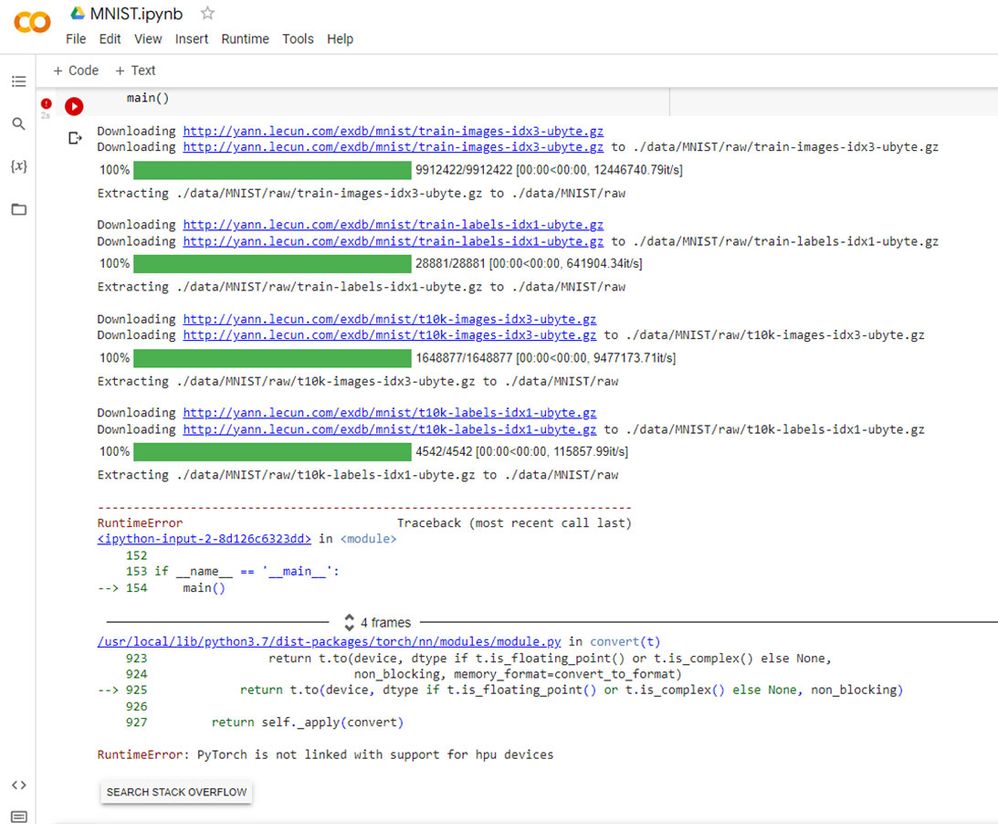

Now let’s try to run the code again.

The original code tries to move the model to the HPU device, which is of course not supported without SynapseAI and Gaudi. We will replace the original line

    device = torch.device("hpu")

with code that dynamically selects the best available hardware:

    if hthpu and hthpu.is_available():
        target = "hpu"
        print("Using HPU")
    elif torch.cuda.is_available():
        target = "cuda"
        print("Using GPU")
    else:
        target = "cpu"
        print("Using CPU")

    device = torch.device(target)

Remember that we assigned None to hthpu when the habana_frameworks module was not installed? We can now use it to tell whether SynapseAI is installed. If it is, we call the is_available() API to check whether the server actually has a Gaudi device. If Gaudi is not available, we use the analogous CUDA API to check for a GPU, and if neither is present we fall back to the CPU.
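The selection order can also be isolated in a small function, which makes it easy to check on a machine with no accelerator at all. This is a sketch under assumptions: `pick_target` is a hypothetical name, and the `SimpleNamespace` objects merely stand in for the real `hthpu` module so that the snippet runs without torch or SynapseAI installed.

```python
from types import SimpleNamespace


def pick_target(hthpu, cuda_available):
    """Return "hpu", "cuda" or "cpu", using the priority order from the text.

    hthpu          -- the habana_frameworks.torch.hpu module, or None
    cuda_available -- result of torch.cuda.is_available()
    """
    if hthpu is not None and hthpu.is_available():
        return "hpu"
    if cuda_available:
        return "cuda"
    return "cpu"


# Stand-ins for the real module (assumption: illustration only).
hpu_present = SimpleNamespace(is_available=lambda: True)
hpu_absent = SimpleNamespace(is_available=lambda: False)

print(pick_target(None, False))         # cpu
print(pick_target(None, True))          # cuda
print(pick_target(hpu_present, True))   # hpu
print(pick_target(hpu_absent, False))   # cpu
```

In the training script, the result would be passed on as `torch.device(pick_target(hthpu, torch.cuda.is_available()))`.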

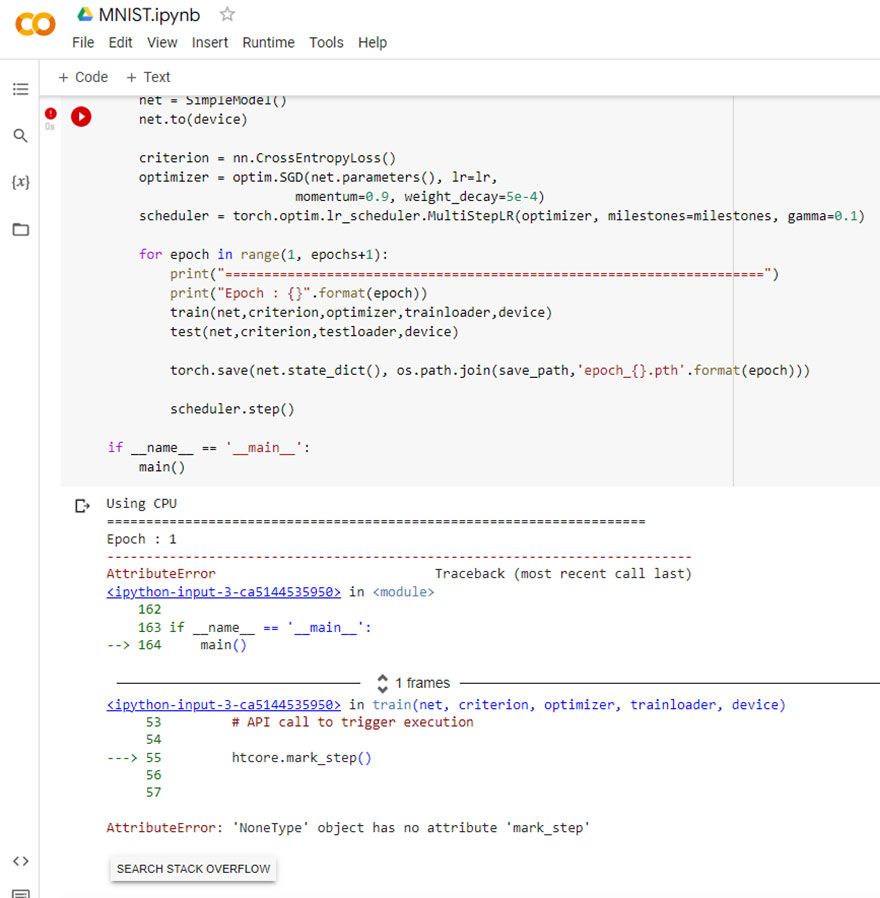

Next, we need to make sure that mark_step() is called only when Gaudi is present.

As before, we use the is_available() API to guard the SynapseAI call:

    if hthpu and hthpu.is_available():
        htcore.mark_step()

Make sure to replace all the occurrences of mark_step() in the code.
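Rather than repeating the check at every call site, one option is to build a single callable up front and use it everywhere. The following is a minimal sketch: `make_mark_step` is a hypothetical helper, and the fake modules exist only so that the snippet runs without SynapseAI.

```python
from types import SimpleNamespace


def make_mark_step(htcore, hthpu):
    """Return htcore.mark_step when an HPU is usable, otherwise a no-op."""
    if htcore is not None and hthpu is not None and hthpu.is_available():
        return htcore.mark_step
    return lambda: None


# Fake modules that record calls (assumption: illustration only).
calls = []
fake_htcore = SimpleNamespace(mark_step=lambda: calls.append("step"))
fake_hthpu = SimpleNamespace(is_available=lambda: True)

mark_step = make_mark_step(fake_htcore, fake_hthpu)
mark_step()                        # forwarded to fake_htcore.mark_step

noop = make_mark_step(None, None)  # SynapseAI not installed
noop()                             # does nothing

print(len(calls))                  # 1
```

This variant checks availability once, when the helper is built, instead of on every training step. It assumes the available hardware does not change while the script is running.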

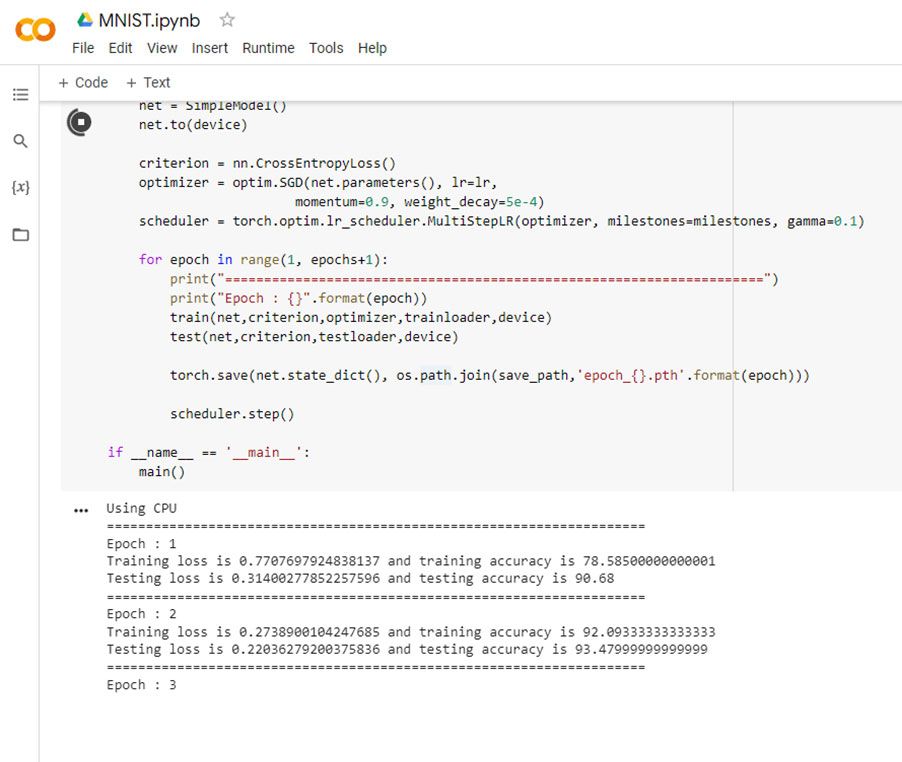

That’s it! The code will now run on all three types of hardware: Gaudi, GPU and CPU.

What’s next

You can learn more about the Habana PyTorch Python APIs in the Habana documentation.

Originally published at https://developer.habana.ai on November 1, 2022.

PM @ Habana Labs, Ex Meta, PayPal, Conversation.one

Loves ML, DL, Gardening and Board Games
