AWS Well-Architected Framework:

AWS Well-Architected[i] helps cloud architects build secure, high-performing, resilient, and efficient infrastructure for a variety of applications and workloads. The AWS Well-Architected Framework describes key concepts, design principles, and architectural best practices for designing and running workloads in the cloud. The framework currently has six pillars: (1) Operational Excellence, (2) Security, (3) Reliability, (4) Performance Efficiency, (5) Cost Optimization, and (6) Sustainability. In this article, we focus on the three pillars relating to performance, cost, and sustainability.

Figure 1: Pillars of the AWS Well-Architected Framework[ii]

Cloud Economics and Cost Management:

In this tough economic climate, enterprises are scrutinizing their cloud costs and seeking ways to optimize their footprint. The prevalent recommendation from cloud providers such as AWS has been to cut costs[iii] by re-platforming applications that can run on both Intel and Graviton instances onto Graviton, a platform Amazon describes as low power. Cloud migration tools offered to customers make the same assumption, treating instance cost as the primary criterion for instance recommendations.

These comparisons completely ignore the differing capabilities of Intel, AMD, and Graviton processors and the performance optimizations available for each; they rest primarily on cost, with core count[iv] as the metric. Not all processors and platforms are created equal, so using cost per core as the primary factor in architectural decisions is flawed, and a more balanced approach is needed.
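To make the difference between cost per core and cost per unit of work concrete, here is a minimal sketch comparing two hypothetical instance types. The instance names, prices, and throughput figures are invented placeholders, not AWS list prices or measured benchmark results; in practice the throughput value would come from benchmarking your own workload on each candidate.

```python
from dataclasses import dataclass


@dataclass
class InstanceOption:
    """A candidate instance type. All values here are hypothetical placeholders."""
    name: str
    vcpus: int
    hourly_price: float         # USD per hour (placeholder, not a real AWS price)
    throughput_per_hour: float  # units of work per hour, measured for *your* workload

    def cost_per_vcpu_hour(self) -> float:
        return self.hourly_price / self.vcpus

    def cost_per_unit_of_work(self) -> float:
        return self.hourly_price / self.throughput_per_hour


def cheapest(options, key):
    """Return the option with the lowest value of the given metric."""
    return min(options, key=key)


# Hypothetical numbers for illustration only.
options = [
    InstanceOption("instance-a", vcpus=8, hourly_price=0.40, throughput_per_hour=1000.0),
    InstanceOption("instance-b", vcpus=8, hourly_price=0.32, throughput_per_hour=700.0),
]

if __name__ == "__main__":
    for o in options:
        print(f"{o.name}: ${o.cost_per_vcpu_hour():.4f} per vCPU-hour, "
              f"${o.cost_per_unit_of_work() * 1000:.3f} per 1,000 units of work")
    print("cheapest per vCPU:", cheapest(options, InstanceOption.cost_per_vcpu_hour).name)
    print("cheapest per unit of work:", cheapest(options, InstanceOption.cost_per_unit_of_work).name)
```

With these placeholder numbers, instance-b is cheaper per vCPU while instance-a is cheaper per unit of work, which is exactly the case a core-count comparison would miss.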

Workload sizing considerations:

Workloads have their own unique compute requirements, usually a mix of CPU, memory, disk, and network requirements. Cloud service providers such as AWS offer specialized instance families that can be leveraged for different workloads.

As part of the design and sizing exercise for a workload, one chooses the appropriate instance type, number of cores, memory, network bandwidth, and optimal storage. These steps are common across all processor types. Sizing a workload by simply choosing the cheapest instance that matches its core count, memory, and storage profile is flawed and introduces undue risk into the cloud migration.

A very important factor that must be considered in addition to sizing is tuning and optimizing the workload. One must consider the application, the available CPU hardware acceleration, software optimizations, and performance tuning to ensure that the three AWS Well-Architected pillars of performance efficiency, cost optimization, and sustainability are fully addressed.

The AWS Well-Architected sustainability pillar considers the power consumption of different processors when formulating best practices. The related sustainability whitepaper[v] assumes that Intel, AMD, and Graviton cores are equal in performance and capabilities, and on that basis postulates design anti-patterns that discourage using x86 or sticking with a single instance family. In effect, these anti-patterns discourage the use of Intel x86 instances on AWS. Amazon claims that its Arm-based Graviton processor has lower power consumption, and that claim drives its preference over x86 on cost. No consideration is given to optimizations and the sizing adjustments they make possible. In this article, we show the performance improvements available from these hardware and software optimizations, their effect on the sizing of these instance types, and the resulting impact on cost and sustainability.

Re-platforming and Risk:

When customers migrate workloads to the public cloud, one of the biggest factors to consider is risk, because they are moving critical workloads from an on-premises environment, where they control every aspect of the infrastructure, to the cloud. The goal of the initial migration is to ensure that the workload meets the customer's SLAs for performance and reliability. Migration and cost management tools promote re-platforming as a cost-cutting strategy while completely ignoring the risks involved. One of the core principles of risk reduction is to minimize the amount of change during a migration. Re-platforming is a major risk that, if introduced into the migration process for apparent cost savings, can derail the entire move to the cloud. Cost is important, but there are many ways to optimize it without taking on undue risk.

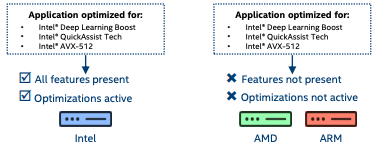

IT organizations and software vendors frequently optimize their applications to take advantage of Intel-specific innovations and instructions. Application capabilities and performance gains that depend on those Intel innovations may be lost on a different architecture.

Figure 2: Intel HW features absent in AMD and Graviton

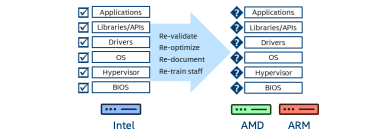

IT organizations carefully validate, tune, and document their software stacks for a known environment. Changing the CPU architecture can introduce incompatibilities or sub-optimizations that likely require time-consuming, expensive re-validation or troubleshooting.

Figure 3: Platform migration requires re-validation, re-optimization, and additional training for staff

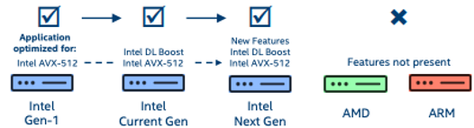

Since Intel features and instructions are broadly retained across generations, optimizations for Intel-specific innovations continue to pay off as applications migrate to newer Intel servers, but they may be lost on a different architecture.

Figure 4: Intel HW features are retained across multiple generations of the processor

Before considering re-platforming, customers should evaluate other available options, such as leveraging optimizations that can improve performance and sustainability while reducing costs. Intel invests heavily in R&D for its processor families and their optimization. Let us look at the optimizations and other capabilities available with the modern family of Intel Xeon Scalable processors.

HW and SW Acceleration for Intel Xeon Scalable processors:

Intel Xeon® Scalable HW Acceleration:

3rd and 4th Gen Intel® Xeon® Scalable processors offer a balanced architecture with built-in acceleration and advanced security capabilities, designed over decades of innovation for the most in-demand workload requirements, all with the consistent, open Intel architecture. Not all of the features mentioned are available in Amazon EC2 instances; features specific to 4th Gen Intel® Xeon® Scalable processors will become available over time in newer instances.
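Because not every feature covered in this section is exposed in every EC2 instance type, it is worth checking what a given instance actually reports before planning optimizations. The sketch below assumes a Linux guest and parses the `flags` line of `/proc/cpuinfo`, looking for the kernel's flag names for several of these features (`avx512f`, `avx512_vnni`, `avx512_bf16`, `amx_tile`, `amx_bf16`, `amx_int8`). The feature-to-flag mapping is our own illustrative selection, not an exhaustive list.

```python
from pathlib import Path

# Linux /proc/cpuinfo flag names for some of the accelerators discussed in this section.
# This selection is illustrative, not exhaustive.
FEATURE_FLAGS = {
    "AVX-512 Foundation": "avx512f",
    "AVX-512 VNNI (DL Boost INT8)": "avx512_vnni",
    "AVX-512 BF16": "avx512_bf16",
    "AMX tiles": "amx_tile",
    "AMX BF16": "amx_bf16",
    "AMX INT8": "amx_int8",
}


def parse_cpu_flags(cpuinfo_text: str) -> set:
    """Return the set of flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def detect_features(cpuinfo_text: str) -> dict:
    """Map each feature name to True/False depending on whether its flag is present."""
    flags = parse_cpu_flags(cpuinfo_text)
    return {feature: flag in flags for feature, flag in FEATURE_FLAGS.items()}


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():  # only present on Linux
        for feature, present in detect_features(cpuinfo.read_text()).items():
            print(f"{feature:32s} {'yes' if present else 'no'}")
    else:
        print("/proc/cpuinfo not found; this sketch assumes a Linux guest")
```

On Arm-based instances the kernel reports a `Features` line instead of `flags`, so this sketch would simply report every listed x86 feature as absent.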

Intel HW Acceleration Features for Workloads:

The latest generation of Intel Xeon Scalable processors offers many hardware optimizations[vi] that can potentially increase workload performance many-fold. These include:

- Intel AVX-512[vii] uses 512-bit vector registers to pack more operations into each clock cycle, delivering parallel-processor-like performance, and can accelerate workloads and usages such as scientific simulations, financial analytics, artificial intelligence (AI)/deep learning, 3D modeling and analysis, image and audio/video processing, cryptography, and data compression.

- Intel® Deep Learning Boost (Intel® DL Boost)[viii] increases deep learning performance by lowering numerical precision, for example through Vector Neural Network Instructions (VNNI) for INT8 operations. Combined with other Intel AI features and built-in accelerators, it provides a high-performance hardware platform for AI.

- Intel® QuickAssist Technology (Intel® QAT)[ix] helps accelerate data encryption and compression for cloud networking, storage, content delivery, and database applications.

- Intel® vRAN Boost[xi] for virtualized radio access networks (vRAN) optimizes modern telecommunications, reduces complexity, improves power savings, and eliminates the need for an external accelerator card.
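The DL Boost item above gains speed by representing values as 8-bit integers and accumulating their products in 32-bit integers, which VNNI instructions perform in hardware. The pure-Python sketch below only illustrates that arithmetic using symmetric per-tensor INT8 quantization; it does not invoke DL Boost, and the scaling scheme shown is one common choice among several, not Intel's specific implementation.

```python
def quantize_symmetric_int8(values):
    """Symmetric per-tensor quantization: x is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int8_dot(a, b):
    """Dot product of two float vectors computed on INT8 codes with an integer accumulator.

    Python ints are unbounded; on hardware (e.g. VNNI) the accumulator would be int32.
    """
    qa, sa = quantize_symmetric_int8(a)
    qb, sb = quantize_symmetric_int8(b)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer multiply-accumulate
    return acc * sa * sb                      # rescale the integer result back to float


if __name__ == "__main__":
    a = [0.5, -1.25, 2.0, 0.75]
    b = [1.0, 0.5, -0.25, 2.0]
    exact = sum(x * y for x, y in zip(a, b))
    approx = int8_dot(a, b)
    print(f"float dot = {exact:.4f}, int8 dot = {approx:.4f}")
```

The INT8 result differs slightly from the full-precision one; trading that small error for denser, cheaper integer arithmetic is the idea behind reduced-precision inference.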

The latest 4th Gen Intel® Xeon® Scalable processors have more built-in accelerators than any other CPU on the market to improve performance for the fastest-growing workloads. Prominent features include:

- Intel® Advanced Matrix Extensions (Intel® AMX)[xii] significantly accelerates deep learning training and inference and HPC workloads, making it well suited to risk and fraud detection, genomic sequencing, climate science, natural language processing, recommendation systems, and image recognition.

- Intel® In-Memory Analytics Accelerator (Intel® IAA)[xiii] helps run database and analytics workloads faster, ideal for in-memory databases, open-source databases, and data stores.

- Intel® Data Streaming Accelerator (Intel® DSA)[xiv] drives high performance for storage, networking, and data-intensive workloads by improving streaming data movement and transformation operations.

- Intel Xeon CPU Max Series processors provide high-bandwidth memory (HBM)[xv] that helps relieve bottlenecks in memory-bound applications and delivers much improved performance for modeling, AI, HPC, and data analytics.
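Whether an application is memory-bound, and therefore likely to benefit from higher memory bandwidth such as HBM, can be estimated with the classic roofline model: attainable throughput is the lower of peak compute and memory bandwidth multiplied by arithmetic intensity (FLOPs per byte moved). The sketch below uses made-up peak and bandwidth figures, not published specifications for any Xeon or HBM configuration, to show why extra bandwidth helps only kernels below the ridge point.

```python
def attainable_gflops(peak_gflops: float, bandwidth_gbs: float, intensity_flops_per_byte: float) -> float:
    """Roofline model: performance is capped either by compute or by memory traffic."""
    return min(peak_gflops, bandwidth_gbs * intensity_flops_per_byte)


def ridge_point(peak_gflops: float, bandwidth_gbs: float) -> float:
    """Arithmetic intensity (FLOP/byte) at which a kernel stops being memory-bound."""
    return peak_gflops / bandwidth_gbs


# Hypothetical machine parameters for illustration only.
PEAK = 3000.0   # GFLOP/s
DDR_BW = 300.0  # GB/s, placeholder for a DDR-only configuration
HBM_BW = 1000.0 # GB/s, placeholder for an HBM-equipped configuration

if __name__ == "__main__":
    # Sample intensities spanning memory-bound to compute-bound kernels.
    for intensity in (0.1, 1.0, 10.0, 50.0):
        ddr = attainable_gflops(PEAK, DDR_BW, intensity)
        hbm = attainable_gflops(PEAK, HBM_BW, intensity)
        print(f"AI={intensity:5.1f}: DDR {ddr:7.1f} GFLOP/s, "
              f"HBM {hbm:7.1f} GFLOP/s, speedup {hbm / ddr:.2f}x")
```

Under these assumed numbers, low-intensity kernels speed up in proportion to the bandwidth increase, while kernels above the DDR ridge point see no change.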

Intel Software Optimizations:

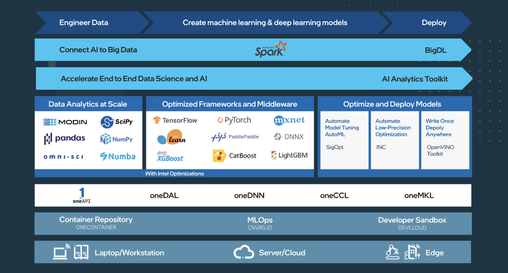

Intel is often perceived as a hardware-only company, but that is far from the truth. Intel has more than 18,000 software engineers, the majority of whom focus on performance optimization. Intel's oneAPI framework supports an open, cross-architecture programming model that lets developers use a single code base across multiple architectures, accelerating compute without vendor lock-in. The latest oneAPI and AI 2023 tools continue to empower developers with performance and productivity, delivering optimized support for Intel's upcoming portfolio of CPU and GPU architectures and advanced capabilities.

Intel oneAPI Toolkits:

Intel oneAPI toolkits help developers, data scientists, and engineers build, analyze, and optimize high-performance applications on CPUs with best-in-class compilers, performance libraries, frameworks, and analysis and debug tools. The toolkits include:

- Intel® oneAPI Base Toolkit[xvi] helps developers create performant, data-centric applications across Intel® CPUs, GPUs, and FPGAs with this foundational toolset.

- Intel® oneAPI HPC Toolkit[xvii] helps HPC developers build, analyze, and scale applications across shared- and distributed-memory computing systems.

- Intel® AI Analytics Toolkit[xviii] helps data scientists and AI developers to accelerate end-to-end data science and machine learning pipelines using Python* tools and frameworks.

- Intel® Distribution of OpenVINO™ toolkit (Powered by oneAPI)[xix] helps deep learning inference developers deploy high-performance inference applications from edge to cloud.

- Intel® oneAPI Rendering Toolkit[xx] helps visual creators, scientists and engineers create high-fidelity, photorealistic experiences that push the boundaries of visualization.

- Intel® oneAPI IoT Toolkit[xxi] helps edge device and IoT developers fast-track the development of applications and solutions that run at the network's edge.

- Intel® System Bring-up Toolkit[xxii] helps system engineers to strengthen system reliability with hardware and software insight and optimize power and performance.

Figure 5: Intel AI Tools, Libraries and Optimization[xxiii]

Intel is a major player in the open-source software ecosystem:

Intel believes that innovation thrives in collaborative environments that encourage the free exchange of ideas. Intel is committed to building an open ecosystem[xxiv] that is transparent, secure, and accessible to all. Intel actively participates in open-source projects[xxv] such as TensorFlow, PyTorch, Linux, KVM, and Kubernetes, and encourages its software engineers to contribute to them. Many Intel optimizations are embedded in these open-source projects and available by default, such as AMX support in PyTorch and TensorFlow.

TensorFlow* and PyTorch* frameworks running on 4th generation Intel Xeon Scalable Processors deliver leading AI performance with Intel AMX support and extended optimization capabilities enabled through the Intel® oneAPI Deep Neural Network Library (oneDNN). With Intel SW optimizations being included by default in many popular open-source software and frameworks, developers can use these embedded optimizations with minimal effort.
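As a hedged illustration of using these embedded optimizations with minimal effort, the sketch below checks whether an installed PyTorch build reports oneDNN support (exposed in PyTorch under oneDNN's former name, MKL-DNN, via `torch.backends.mkldnn`) and, if PyTorch is present, runs a small matrix multiply under CPU bfloat16 autocast. Whether oneDNN dispatches that operation to AMX depends on the CPU, the instance type, and the PyTorch and oneDNN versions; setting the `ONEDNN_VERBOSE=1` environment variable before starting Python makes oneDNN log the primitives it executes. TensorFlow exposes a related switch, the `TF_ENABLE_ONEDNN_OPTS` environment variable, to turn its oneDNN optimizations on or off. The function name `onednn_bf16_smoke_test` is our own, and the script degrades gracefully when PyTorch is not installed.

```python
import importlib.util


def onednn_bf16_smoke_test(size: int = 256) -> dict:
    """Report PyTorch oneDNN availability and run a bf16 matmul if PyTorch is installed."""
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False}

    import torch

    report = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # oneDNN is still exposed under its old name, MKL-DNN, in torch.backends.
        "onednn_available": torch.backends.mkldnn.is_available(),
    }
    a = torch.randn(size, size)
    b = torch.randn(size, size)
    # CPU autocast runs eligible ops such as matmul in bfloat16.
    with torch.autocast(device_type="cpu", dtype=torch.bfloat16):
        c = a @ b
    report["result_dtype"] = str(c.dtype)
    return report


if __name__ == "__main__":
    for key, value in onednn_bf16_smoke_test().items():
        print(f"{key}: {value}")
```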

Benefits of leveraging Intel hardware and software optimizations on AWS:

AWS and Intel have a 16+ year relationship dedicated to developing, building, and supporting cloud services that are designed to manage cost and complexity, accelerate business outcomes, and scale to meet current and future computing requirements. Intel® processors provide the foundation of many cloud computing services deployed on AWS. Amazon Elastic Compute Cloud (Amazon EC2) instances powered by Intel® Xeon® Scalable processors have the largest breadth, global reach, and availability of compute instances across AWS geographies.

Intel processors power the greatest number of EC2 instances available on AWS, and many Intel hardware features are exposed in these instances, where they can be used to accelerate customer workloads. The schematic below shows the generation-over-generation performance increases seen in some common workloads. These gains come from the hardware accelerators for workloads and are based on the unique capabilities of the latest Intel Xeon Scalable processors.

Figure 6: Differentiated performance of 4th Gen Xeon accelerators on real workloads

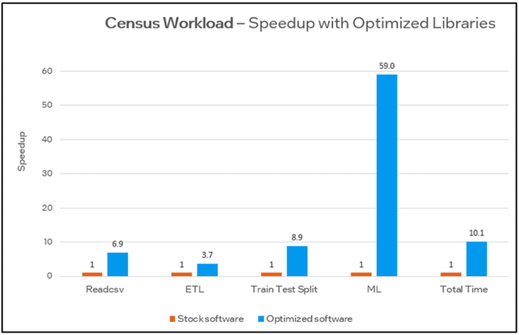

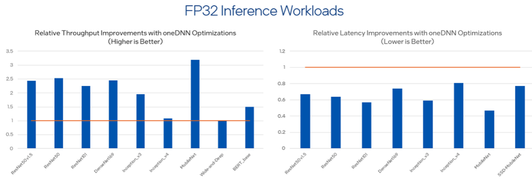

Intel's workload-specific software optimizations can further improve performance and help reduce the instance footprint on AWS. Below are examples of gains from software optimizations available through the Intel AI Analytics Toolkit.
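How a measured speedup translates into a smaller footprint can be sketched with simple arithmetic: the number of instances needed is the required throughput divided by per-instance throughput, rounded up. The functions below are hypothetical helpers that assume, as a simplification, linear scaling across instances and a uniform speedup; the workload figures, the 1.5x speedup, and the hourly price are placeholders, not values taken from the figures in this article.

```python
import math


def instances_needed(required_throughput: float, per_instance_throughput: float) -> int:
    """Smallest whole number of instances that meets the required throughput."""
    return math.ceil(required_throughput / per_instance_throughput)


def resize_for_speedup(required_throughput, baseline_per_instance, speedup, hourly_price):
    """Compare fleet size and hourly cost before and after an optimization.

    Assumes linear scaling across instances and a uniform speedup (a simplification).
    """
    before = instances_needed(required_throughput, baseline_per_instance)
    after = instances_needed(required_throughput, baseline_per_instance * speedup)
    return {
        "instances_before": before,
        "instances_after": after,
        "hourly_cost_before": before * hourly_price,
        "hourly_cost_after": after * hourly_price,
    }


if __name__ == "__main__":
    # Hypothetical workload: 10,000 requests/s, 900 requests/s per instance,
    # a 1.5x software speedup, and a placeholder price of $0.40/hour per instance.
    plan = resize_for_speedup(10_000, 900, 1.5, 0.40)
    for key, value in plan.items():
        print(f"{key}: {value}")
```

With these placeholders the fleet shrinks from 12 to 8 instances. Running fewer instances for the same work reduces provisioned resources, which bears on the sustainability pillar as well as on cost; actual energy savings would still need to be measured.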

Figure 7: End-to-End Performance for Census workload[xxvi]

Figure 8: Performance Benefits of Intel oneAPI Deep Neural Network Library with TensorFlow 2.8[xxvii]

Conclusion:

In this article, we looked at three pillars of the AWS Well-Architected Framework (performance efficiency, cost optimization, and sustainability) as they relate to Intel-based instances.

- We analyzed the reasoning behind AWS cost-saving recommendations that favor Graviton instances, using the cost of cores as the metric. Following these recommendations implies re-platforming the application from x86 to Arm (Graviton).

- We looked at risks associated with migrations if re-platforming is the main approach to cost reduction.

- There is a potential loss in performance due to the absence of Intel hardware and software features in the target platform and additional operational burdens in supporting a new platform.

- There are significant opportunities to improve performance & sustainability and reduce costs by leveraging the hardware acceleration and the software optimizations for workloads running on Intel instances.

- Optimizing workloads for Intel instances, adjusting instance sizing accordingly, and capturing the associated cost savings is a prudent, lower-risk alternative to re-platforming.

Call to Action:

While migrating your workloads to AWS:

- Carefully analyze the profile of the workload.

- Research the hardware accelerations and software optimizations available for Intel instances on AWS.

- Adjust instance sizes and cost estimates based on the expected benefits on Intel.

- Perform a thorough risk analysis for migrations and new workloads.

- Reconsider re-platforming decisions based on all pertinent factors, not just cost.

- Leverage the Intel Xeon Performance Tuning and Solution Guides to optimize your workloads[xxviii].

Bibliography

[i] AWS Well-Architected: https://aws.amazon.com/architecture/well-architected

[ii] Six Pillars of the AWS Well-Architected Framework: https://www.romexsoft.com/blog/six-pillars-of-the-aws-well-architected-framework-the-impact-of-its-usage-when-building-saas-application/

[iii] Optimize AWS costs without architectural changes or engineering overhead: https://aws.amazon.com/blogs/aws-cloud-financial-management/optimize-aws-costs-without-architectural-changes-or-engineering-overhead/

[iv] Optimizing your cost with Rightsizing Recommendations: https://docs.aws.amazon.com/cost-management/latest/userguide/ce-rightsizing.html

[v] Sustainability Pillar “AWS Well-Architected Framework”: https://docs.aws.amazon.com/pdfs/wellarchitected/latest/sustainability-pillar/wellarchitected-sustainability-pillar.pdf#sustainability-pillar

[vi] How to get the most out of Intel Xeon Scalable processors with Built-In Accelerators: https://www.intel.com/content/www/us/en/now/xeon-accelerated/accelerators-eguide.html

[vii] Accelerate your compute intensive workloads with Intel AVX-512: https://www.intel.com/content/www/us/en/architecture-and-technology/avx-512-overview.html

[viii] Intel Deep Learning Boost (Intel DL Boost): https://www.intel.com/content/www/us/en/artificial-intelligence/deep-learning-boost.html

[ix] Intel QuickAssist Technology (Intel QAT): https://www.intel.com/content/www/us/en/developer/topic-technology/open/quick-assist-technology/overview.html

[xi] Accelerate Innovation Opportunities with vRAN: https://www.intel.com/content/www/us/en/wireless-network/5g-network/radio-access-network.html

[xii] Intel Advanced Matrix Extensions (Intel AMX): https://www.intel.com/content/www/us/en/products/docs/accelerator-engines/advanced-matrix-extensions/overview.html

[xiii] Intel In-Memory Analytics Accelerator Architecture: https://www.intel.com/content/www/us/en/content-details/721858/intel-in-memory-analytics-accelerator-architecture-specification.html

[xiv] Scalable I/O between Accelerators and Host Processors: https://www.intel.com/content/www/us/en/developer/articles/technical/scalable-io-between-accelerators-host-processors.html

[xv] Product brief: Intel Xeon CPU Max Series: https://www.intel.com/content/www/us/en/products/details/processors/xeon/max-series.html

[xvi] Intel® oneAPI Base Toolkit: https://www.intel.com/content/www/us/en/developer/tools/oneapi/toolkits.html#base-kit

[xvii] Intel® oneAPI HPC Toolkit: https://www.intel.com/content/www/us/en/developer/tools/oneapi/toolkits.html#hpc-kit

[xviii] Intel AI Analytics Toolkit: https://www.intel.com/content/www/us/en/developer/tools/oneapi/toolkits.html#analytics-kit

[xix] Intel Distribution of OpenVINO Toolkit: https://www.intel.com/content/www/us/en/developer/tools/oneapi/toolkits.html#openvino-kit

[xx] Intel oneAPI Rendering Toolkit: https://www.intel.com/content/www/us/en/developer/tools/oneapi/toolkits.html#rendering-kit

[xxi] Intel oneAPI IoT Toolkit: https://www.intel.com/content/www/us/en/developer/tools/oneapi/toolkits.html#iot-kit

[xxii] Intel System Bring-up Toolkit: https://www.intel.com/content/www/us/en/developer/tools/oneapi/toolkits.html#bring-up-kit

[xxiii] oneAPI benefits for AI developers and practitioners: https://gestaltit.com/sponsored/intel/intel-2021/adriaromero/oneapi-benefits-for-ai-developers-and-practitioners/

[xxiv] Intel Open Ecosystem: https://www.intel.com/content/www/us/en/developer/topic-technology/open/overview.html

[xxv] Intel Open-Source Projects: https://www.intel.com/content/www/us/en/developer/topic-technology/open/project-catalog.html?s=Newest

[xxvi] Intel oneAPI oneDNN: https://www.intel.com/content/www/us/en/developer/tools/oneapi/onednn.html#gs.n0qkjg

[xxviii] Leverage Intel Xeon Performance Tuning and Solutions Guide to optimize your workloads: https://www.intel.com/content/www/us/en/developer/articles/guide/xeon-performance-tuning-and-solution-guides.html
