In part 1 of the blog series we looked at the HPC landscape and the tools available on AWS for HPC deployment. In this part of the blog series we will compare performance between Intel and AMD HPC instances on AWS for GROMACS.

GROMACS:

GROMACS (GROningen MAchine for Chemical Simulations) is a widely used open-source molecular dynamics (MD) simulation software package. It is designed to simulate the behavior of biomolecules, such as proteins, nucleic acids, lipids, and other complex molecular systems, at the atomic level.

GROMACS provides a suite of tools and algorithms that enable researchers to simulate the motion and interactions of atoms over time, allowing for the study of various biological, chemical, and physical phenomena. Some key features of GROMACS include:

- Molecular Dynamics Simulations: GROMACS performs classical MD simulations, which compute the trajectories of atoms based on Newton's laws of motion. It integrates the equations of motion to simulate the behavior of molecular systems, capturing their dynamic properties and conformational changes.

- Force Fields: GROMACS supports a wide range of force fields, including CHARMM, AMBER, OPLS-AA, and GROMOS, which describe the potential energy function and force interactions between atoms. These force fields provide accurate descriptions of the molecular interactions, allowing researchers to study various biological processes and molecular systems.

- Parallelization and Performance: GROMACS is designed for high-performance computing (HPC) and can efficiently utilize parallel architectures, such as multi-core CPUs and GPUs. It employs domain decomposition methods and advanced parallelization techniques to distribute the computational workload across multiple computing resources, enabling fast and efficient simulations.

- Analysis and Visualization: GROMACS offers a suite of analysis tools to extract and analyze data from MD simulations. It provides functionalities for computing properties such as energy, temperature, pressure, radial distribution functions, and free energy landscapes. GROMACS also supports visualization tools, allowing users to visualize and analyze the trajectories of molecular systems.

- User-Friendly Interface: GROMACS provides a command-line interface (CLI) and a set of well-documented input and control files, making it accessible to both novice and expert users. It offers flexibility in defining system parameters, simulation conditions, and analysis options through easily modifiable input files.

- Integration with Other Software: GROMACS can be integrated with other software packages and tools to perform advanced analysis and extend its capabilities. It supports interoperability with visualization tools like VMD and PyMOL, analysis packages like GROMACS Analysis Tools (GROMACS Tools) and MDAnalysis, and scripting languages such as Python, allowing users to leverage a wide range of complementary tools.
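To make the "Molecular Dynamics Simulations" item above more concrete, here is a minimal sketch of how equations of motion are integrated over time. It advances a single particle on a harmonic spring with the velocity Verlet scheme. The function names, parameters, and the toy force are assumptions chosen for illustration; this is not GROMACS code or its API, and a production MD engine adds force fields, neighbor lists, constraints, and parallel decomposition on top of this basic loop.

```python
import math


def harmonic_force(x, k=1.0):
    """Toy force for illustration: a spring pulling the particle toward x = 0."""
    return -k * x


def velocity_verlet(x, v, dt, n_steps, mass=1.0, force=harmonic_force):
    """Advance position x and velocity v by n_steps steps of size dt."""
    f = force(x)
    for _ in range(n_steps):
        v_half = v + 0.5 * dt * f / mass   # half-step velocity update
        x = x + dt * v_half                # full-step position update
        f = force(x)                       # force at the new position
        v = v_half + 0.5 * dt * f / mass   # second half-step velocity update
    return x, v


def total_energy(x, v, mass=1.0, k=1.0):
    """Kinetic plus potential energy of the toy oscillator."""
    return 0.5 * mass * v * v + 0.5 * k * x * x


# Integrate for roughly one oscillation period (2*pi when mass = k = 1).
dt = 0.001
x_end, v_end = velocity_verlet(1.0, 0.0, dt=dt, n_steps=int(2 * math.pi / dt))
print(x_end, v_end, total_energy(x_end, v_end))
```

After about one period the particle should be back near its starting point, and the total energy should stay close to its initial value of 0.5, which is the property that makes this family of integrators attractive for long MD runs.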

GROMACS has been used extensively in various scientific fields, including biochemistry, biophysics, pharmaceutical research, and materials science. It has contributed to significant advancements in understanding biomolecular structures, protein folding, protein-ligand interactions, membrane dynamics, and many other areas of research. As open-source software, GROMACS is actively developed and maintained by a dedicated community of researchers and developers. Its availability under the GNU Lesser General Public License (LGPL) ensures that it remains free to use and modify, fostering collaboration and contributions from the scientific community.

Performance testing for GROMACS:

A couple of benchmarks were chosen to compare the performance of HPC instances for Intel and AMD on AWS. Details of the benchmarks are shown below.

PEP Benchmark for GROMACS:

The PEP (Performance Evaluation Project) [iii] benchmark for GROMACS is a widely recognized benchmark used to assess the performance of computer systems and architectures in running molecular dynamics simulations using GROMACS. It provides a standardized workload that represents a realistic scientific application and allows for fair performance comparisons across different hardware configurations.

The PEP benchmark consists of a set of molecular dynamics simulations of a small protein in a water box. The benchmark is designed to reflect typical usage scenarios and includes multiple stages that represent different aspects of the simulation workflow. These stages involve various computational tasks, including force calculations, neighbor searching, and particle movement.

The key metrics evaluated by the PEP benchmark include the total simulation time, time per simulation step, performance in terms of nanoseconds simulated per day (ns/day), and scaling efficiency across different numbers of CPU cores or GPU accelerators.
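As a rough sketch of how these metrics relate to one another, the helpers below derive ns/day, time per step, and scaling efficiency from a step count, a time step, and a wall-clock time. The function names and the sample numbers in the usage lines are hypothetical and chosen only for illustration; they are not measurements from this study.

```python
SECONDS_PER_DAY = 86_400
FS_PER_NS = 1_000_000  # femtoseconds in one nanosecond


def ns_per_day(n_steps, timestep_fs, wall_seconds):
    """Simulated nanoseconds per day of wall-clock time."""
    simulated_ns = n_steps * timestep_fs / FS_PER_NS
    return simulated_ns * SECONDS_PER_DAY / wall_seconds


def seconds_per_step(n_steps, wall_seconds):
    """Average wall-clock time spent on one MD step."""
    return wall_seconds / n_steps


def scaling_efficiency(perf_base, cores_base, perf_scaled, cores_scaled):
    """Measured speedup divided by ideal speedup (1.0 means linear scaling)."""
    speedup = perf_scaled / perf_base
    ideal = cores_scaled / cores_base
    return speedup / ideal


# Hypothetical run: 10,000 steps of 2 fs completed in 100 s of wall time.
print(ns_per_day(10_000, 2.0, 100.0))
print(seconds_per_step(10_000, 100.0))
# Hypothetical scaling check: 10 ns/day on 96 cores, 36 ns/day on 384 cores.
print(scaling_efficiency(10.0, 96, 36.0, 384))
```

Because ns/day folds the time step into the result, two runs are only directly comparable on this metric when they use the same input and time step.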

The PEP benchmark is regularly updated to ensure its relevance and representativeness of modern computing systems. It allows researchers and users to assess and compare the performance of different hardware configurations, software optimizations, and parallelization strategies for running GROMACS simulations.

It's important to note that the PEP benchmark provides a standardized measure of performance, but the actual performance of GROMACS on specific hardware and systems can vary depending on factors such as CPU or GPU architecture, memory bandwidth, interconnects, storage systems, and system configurations. Additionally, optimizing the performance of GROMACS often requires tailoring the simulation parameters, hardware configurations, and software settings to the specific problem being studied.

STMV Benchmark:

Satellite Tobacco Mosaic Virus (STMV) [iv] is a small, icosahedral plant virus that worsens the symptoms of infection by Tobacco Mosaic Virus (TMV). STMV is an excellent candidate for molecular dynamics research because it is relatively small for a virus and sits at the medium to high end of what is feasible to simulate with traditional molecular dynamics on a workstation or a small server. The STMV benchmark (1,066,628 atoms, periodic, PME) is useful for demonstrating scaling to thousands of processors.

GROMACS Testing:

| Category | Attribute | Config1 (AMD, hpc6a) | Config2 (Intel, hpc6id) |
|---|---|---|---|
| Run Info | Cumulus Run ID | N/A | N/A |
| | Benchmarks | GROMACS PEP & STMV | GROMACS PEP & STMV |
| | Date | April 2023 | April 2023 |
| | Test by | Intel | Intel |
| CSP and VM Config | Cloud | AWS | AWS |
| | Region | | |
| | Instance Type | HPC6a in AWS ParallelCluster | HPC6id in AWS ParallelCluster |
| | CPU(s) | 384 | 384 |
| | Microarchitecture | AWS Nitro | AWS Nitro |
| | Instance Cost | | |
| | Number of Instances or VMs (if cluster) | 4 | 6 |
| | Iterations and result choice (median, average, min, max) | 3 | 3 |
| Memory | Memory | 1536 GB | 6144 GB |
| | DIMM Config | | |
| | Memory Capacity / Instance | | |
| Network Info | Network BW / Instance | 100 Gbps EFA | 200 Gbps EFA |
| | NIC Summary | | |
| Storage Info | Storage: Network Storage | FSx for Lustre, 1.2 TB | FSx for Lustre, 1.2 TB |

Table 1: Instance and Benchmark Details for GROMACS

The tests were performed in April 2023 on AWS ParallelCluster in AWS region us-east-2. All head nodes and compute nodes used General Purpose SSD (gp3) volumes as local storage. The benchmarks were run on 6 nodes of Intel-based Amazon EC2 hpc6id instances and compared with runs on 4 nodes of AMD-based Amazon EC2 hpc6a instances. The goal was to match the total number of cores in each cluster: 6 hpc6id nodes (64 cores each) and 4 hpc6a nodes (96 cores each) both provide 384 cores.
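The node counts follow directly from the per-instance physical core counts listed in the disclosure section of this post (64 for hpc6id.32xlarge, 96 for hpc6a.48xlarge). A small sketch of that arithmetic, with a hypothetical helper name, is:

```python
# Physical cores per instance, as listed in the disclosure section of this post.
CORES_PER_INSTANCE = {
    "hpc6id.32xlarge": 64,
    "hpc6a.48xlarge": 96,
}
TARGET_CORES = 384


def nodes_for(target_cores, cores_per_node):
    """Number of whole nodes that provide exactly target_cores cores."""
    nodes, remainder = divmod(target_cores, cores_per_node)
    if remainder:
        raise ValueError(
            f"{target_cores} cores is not a whole multiple of {cores_per_node}"
        )
    return nodes


for instance, cores in CORES_PER_INSTANCE.items():
    print(instance, nodes_for(TARGET_CORES, cores))
```

The helper raises an error rather than rounding, since a core-matched comparison only makes sense when both clusters hit the target exactly.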

| Category | Attribute | HPC6a | HPC6id |
|---|---|---|---|
| Run Info | Benchmark | GROMACS PEP & STMV | GROMACS PEP & STMV |
| | Dates | April 2023 | April 2023 |
| | Test by | Intel | Intel |
| Software | Workload | GROMACS | GROMACS |
| Workload Specific Details | Command line (benchPEP.tpr) | `mpirun -np 384 gmx_mpi mdrun -nsteps 10000 -ntomp 1 -s /shared/input/gromacs/benchPEP.tpr -resethway` | `mpirun -np 384 gmx_mpi mdrun -nsteps 10000 -ntomp 1 -s /shared/input/gromacs/benchPEP.tpr -resethway` |
| | Command line (benchPEP-h.tpr) | `mpirun -np 384 gmx_mpi mdrun -nsteps 10000 -ntomp 1 -s /shared/input/gromacs/benchPEP-h.tpr -resethway` | `mpirun -np 384 gmx_mpi mdrun -nsteps 10000 -ntomp 1 -s /shared/input/gromacs/benchPEP-h.tpr -resethway` |
| | Command line (topol.tpr) | `mpirun -np 384 gmx_mpi mdrun -nsteps 30000 -ntomp 1 -s /shared/input/gromacs/topol.tpr -resethway` | `mpirun -np 384 gmx_mpi mdrun -dlb yes -nsteps 30000 -ntomp 1 -s /shared/input/gromacs/topol.tpr -resethway` |

Table 2: GROMACS Test run configuration
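For readers who want to script these runs, the sketch below assembles the same command lines from their parts. The helper name `mdrun_command` and its parameters are hypothetical; the flags and paths are the ones shown in Table 2. In those commands, `-np 384` starts one MPI rank per core, `-ntomp 1` uses one OpenMP thread per rank, `-resethway` resets the performance counters halfway through the run so that startup cost does not skew the result, and `-dlb yes` forces dynamic load balancing on.

```python
import shlex


def mdrun_command(tpr_path, n_steps, n_ranks=384, omp_threads=1, extra_args=()):
    """Build an mpirun + gmx_mpi mdrun argument list in the style of Table 2."""
    return [
        "mpirun", "-np", str(n_ranks),
        "gmx_mpi", "mdrun",
        *extra_args,
        "-nsteps", str(n_steps),
        "-ntomp", str(omp_threads),
        "-s", tpr_path,
        "-resethway",
    ]


commands = [
    mdrun_command("/shared/input/gromacs/benchPEP.tpr", 10000),
    mdrun_command("/shared/input/gromacs/benchPEP-h.tpr", 10000),
    mdrun_command("/shared/input/gromacs/topol.tpr", 30000, extra_args=("-dlb", "yes")),
]
for cmd in commands:
    print(shlex.join(cmd))
```

The argument lists can be passed to `subprocess.run(cmd, check=True)` on a cluster node where `mpirun` and `gmx_mpi` are on the path; the sketch only prints them so it can be run anywhere.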

GROMACS Results:

Each benchmark was run three times on each cluster, and the results (in ns/day) are tabulated in Table 3 below:

| Instance | Run | PEP (ns/day) | STMV (ns/day) | PEP-h (ns/day) |
|---|---|---|---|---|
| hpc6a | 1 | 3.872 | 33.475 | 3.972 |
| hpc6a | 2 | 3.752 | 33.455 | 3.970 |
| hpc6a | 3 | 3.873 | 33.399 | 3.964 |
| hpc6a | Average | 3.832 | 33.443 | 3.969 |
| hpc6id | 1 | 4.781 | 39.381 | 4.885 |
| hpc6id | 2 | 4.756 | 39.657 | 4.872 |
| hpc6id | 3 | 4.756 | 39.796 | 4.894 |
| hpc6id | Average | 4.764 | 39.611 | 4.884 |
| hpc6id vs. hpc6a | Absolute Diff | 0.932 | 6.168 | 0.915 |
| hpc6id vs. hpc6a | Percentage Diff | 24.32% | 18.44% | 23.06% |

Table 3: Raw Data from GROMACS testing
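The averages and differences in Table 3 can be reproduced from the per-run values with a few lines of Python. This is a sketch for checking the arithmetic, assuming the percentage difference is taken relative to the hpc6a average; the dictionary layout and the `summarize` helper are illustrative choices, not part of the original test harness.

```python
from statistics import mean

# Per-run results in ns/day, copied from Table 3.
RESULTS_NS_PER_DAY = {
    "hpc6a": {
        "PEP": [3.872, 3.752, 3.873],
        "STMV": [33.475, 33.455, 33.399],
        "PEP-h": [3.972, 3.970, 3.964],
    },
    "hpc6id": {
        "PEP": [4.781, 4.756, 4.756],
        "STMV": [39.381, 39.657, 39.796],
        "PEP-h": [4.885, 4.872, 4.894],
    },
}


def summarize(results, baseline="hpc6a", candidate="hpc6id"):
    """Average each benchmark and compare the candidate against the baseline."""
    summary = {}
    for bench in results[baseline]:
        base_avg = mean(results[baseline][bench])
        cand_avg = mean(results[candidate][bench])
        diff = cand_avg - base_avg
        summary[bench] = {
            "baseline_avg": base_avg,
            "candidate_avg": cand_avg,
            "abs_diff": diff,
            "pct_diff": 100.0 * diff / base_avg,  # relative to the baseline
        }
    return summary


summary = summarize(RESULTS_NS_PER_DAY)
for bench, row in summary.items():
    print(
        f"{bench}: {row['baseline_avg']:.3f} vs {row['candidate_avg']:.3f} ns/day, "
        f"diff {row['abs_diff']:.3f} ({row['pct_diff']:.2f}%)"
    )
```

Computing the percentage from the unrounded averages matters for the last digit: it is what yields 23.06% for PEP-h rather than the 23.05% obtained from the rounded table values.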

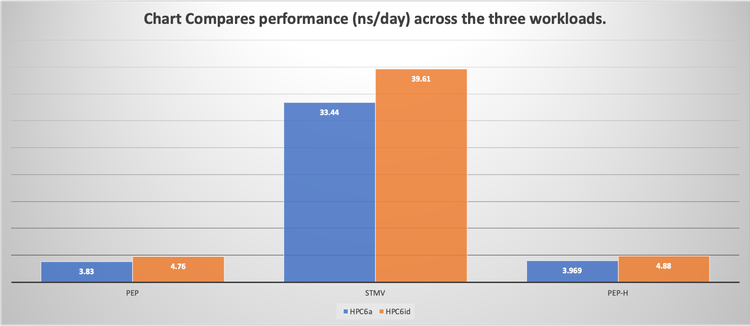

The chart comparing the results is shown in Figure 1 below. Performance is measured in ns/day across the three workloads; higher is better.

Figure 1: Chart comparing absolute performance in ns/day between Intel and AMD instance types.

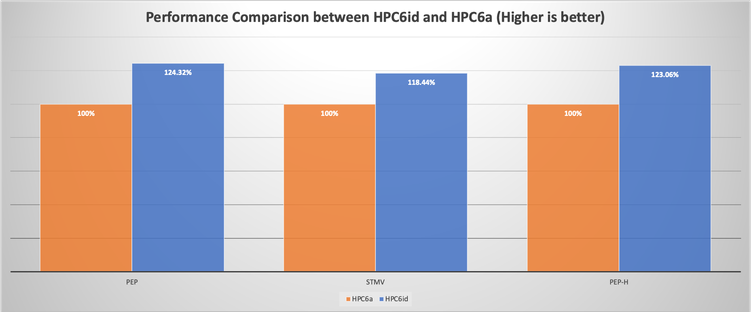

The absolute difference between Intel and AMD instances was also charted as a percentage difference in performance as shown in Figure 2.

Figure 2: Performance comparison by percentage for GROMACS benchmarks

GROMACS Conclusions:

The results show that the Intel-based HPC6id instances delivered 18-24% more ns/day than the AMD-based HPC6a instances across the PEP, PEP-h, and STMV workloads at the same total core count of 384. Intel is an established player in the HPC market, and its software optimizations for HPC workloads could further improve performance on its instances relative to AMD. Any cost difference between the instance types may be offset by the higher performance shown in these results.

In part 3 of the blog series we will compare performance for OpenFOAM between Intel and AMD instances on AWS.

Bibliography:

[i] Amazon Elastic Compute Cloud (Amazon EC2) Hpc6id instances, powered by 3rd Generation Intel Xeon Scalable processors, offer cost-effective price performance for memory-bound and data-intensive high-performance computing (HPC) workloads in Amazon EC2.

[ii] Amazon EC2 AMD-based Hpc6a instances: AMD claims that Amazon EC2 Hpc6a instances offer the best price performance for compute-intensive high-performance computing (HPC) workloads in Amazon EC2. Most of the cost savings claims can be attributed to the higher per-socket core count of AMD over Intel.

[iii] The PEP (Performance Evaluation Project) benchmark for GROMACS is a widely recognized benchmark used to assess the performance of computer systems and architectures in running molecular dynamics simulations using GROMACS.

[iv] Satellite Tobacco Mosaic Virus (STMV) is a small, icosahedral plant virus that worsens the symptoms of infection by Tobacco Mosaic Virus (TMV).

[v] This benchmark offers a free comparison of various CPU processors. The test case is a well-known Motor Bike tutorial which is a standard part of OpenFOAM installation.

[vi] The Intel® oneAPI Base Toolkit includes powerful data-centric libraries, advanced analysis tools, and Intel® Distribution for Python* for near-native code performance of core Python numerical, scientific, and machine learning packages.

Disclosure text:

Tests were performed April-June 2023 on AWS in region us-east-1. Full configuration details are shown in table 1 and 4. The total number of cores of the AWS ParallelCluster configs were matched for Intel and AMD at 384 cores for the comparison.

| Instance Size | Physical Cores | Memory (GiB) | EFA Network Bandwidth (Gbps) | Network Bandwidth (Gbps)* |
|---|---|---|---|---|
| hpc6id.32xlarge | 64 | 1024 | 200 | 25 |

6 x HPC6id instances were used in an AWS ParallelCluster with the following configuration:

The Amazon Elastic Compute Cloud (Amazon EC2) Hpc6id instances, powered by 3rd Generation Intel Xeon Scalable processors, offer cost-effective price performance for memory-bound and data-intensive high performance computing (HPC) workloads in Amazon EC2.

| Instance Size | Physical Cores | Memory (GiB) | EFA Network Bandwidth (Gbps) | Network Bandwidth (Gbps)* |
|---|---|---|---|---|
| hpc6a.48xlarge | 96 | 384 | 100 | 25 |

4 x HPC6a instances were used in an AWS ParallelCluster with the following configuration:

The Amazon EC2 Hpc6a instance features the 3rd generation AMD EPYC 7003 series processors with up to 3.6 GHz all-core turbo frequency built on a 7nm process node for increased efficiency.

DB Client machine details: For the client machine, we used the EC2 instance type c6i.4xlarge, powered by 3rd Generation Intel Xeon Scalable processors, with 16 vCPUs (8 cores), 32 GB of memory, a 75 GB gp2 storage volume, and 12.5 Gbps of network bandwidth. The client machines used the Canonical Ubuntu 20.04 LTS (amd64, focal) Amazon Machine Image (AMI) built on 2022-09-14 (ami-0149b2da6ceec4bb0). All instances, including the client instances, were run in the US-EAST-1 region. Benchmarking Software:

FSX Shared storage details:

- File system type: Lustre

- Deployment type: Persistent 2

- Data compression type: NONE

- Storage type: SSD

- Storage capacity: 1.2 TiB

- Throughput per unit of storage: 125 MB/s/TiB

- Total throughput: 150 MB/s

- Root Squash: Disabled

- Lustre version: 2.12

Notices & Disclaimers:

Performance varies by use, configuration, and other factors. Learn more on the Performance Index site.

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure.

Your costs and results may vary. For further information please refer to Legal Notices and Disclaimers.

Intel technologies may require enabled hardware, software, or service activation.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

Mohan Potheri is a Cloud Solutions Architect with more than 20 years in IT infrastructure, with in depth experience on Cloud architecture. He currently focuses on educating customers and partners on Intel capabilities and optimizations available on Amazon AWS. He is actively engaged with the Intel and AWS Partner communities to develop compelling solutions with Intel and AWS. He is a VMware vExpert (VCDX#98) with extensive knowledge on premises and hybrid cloud. He also has extensive experience with business critical applications such as SAP, Oracle, SQL and Java across UNIX, Linux and Windows environments. Mohan Potheri is an expert on AI/ML, HPC and has been a speaker in multiple conferences such as VMWorld, GTC, ISC and other Partner events.
