Authored by: Ligang Wang (Intel, Principal Engineer), Yunge Zhu (Intel, Cloud Software Engineer), Bing Duan (Volcengine, Senior Machine Learning R&D Engineer).

1 Introduction

With huge amounts of data accumulating across industries, organizations increasingly understand the value of big data. To maximize that value, an organization needs to make full use of its internal data, and inter-entity data collaboration is becoming more common and, in some areas, an essential requirement. While many organizations are eager to collaborate on data, they also care deeply about the confidentiality of their sensitive data. In some critical areas, sensitive data is at risk of being leaked to external attackers or even to partners in the collaboration. Data security is a fundamental requirement for such collaborations, and it is recognized by many major jurisdictions, including the US, the EU, and China, which have enacted laws on data security. The need for data security technologies has never been greater.

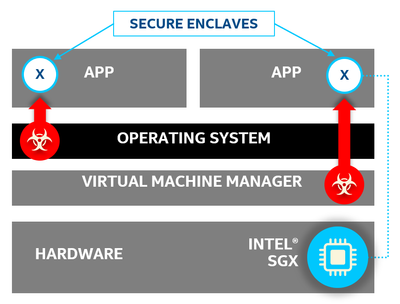

Hardware Trusted Execution Environment (TEE) based Confidential Computing is the most promising technology to fulfill the data security requirement with well-balanced security and performance. Intel® introduced Intel® Software Guard Extensions (SGX) with the 6th Gen Intel® Core™ processor. SGX provides a hardware protected encrypted memory region called an enclave, to help protect the sensitive data and code in an application. Data in an enclave won’t be leaked or tampered with even if privileged code including the OS kernel or hypervisor is compromised.

In this article, we will discuss how we integrate SGX into Volcengine’s Fedlearner solution to provide enhanced security capability for various data collaboration scenarios with Federated Learning.

2 Background of Volcengine Fedlearner

2.1 Federated Learning

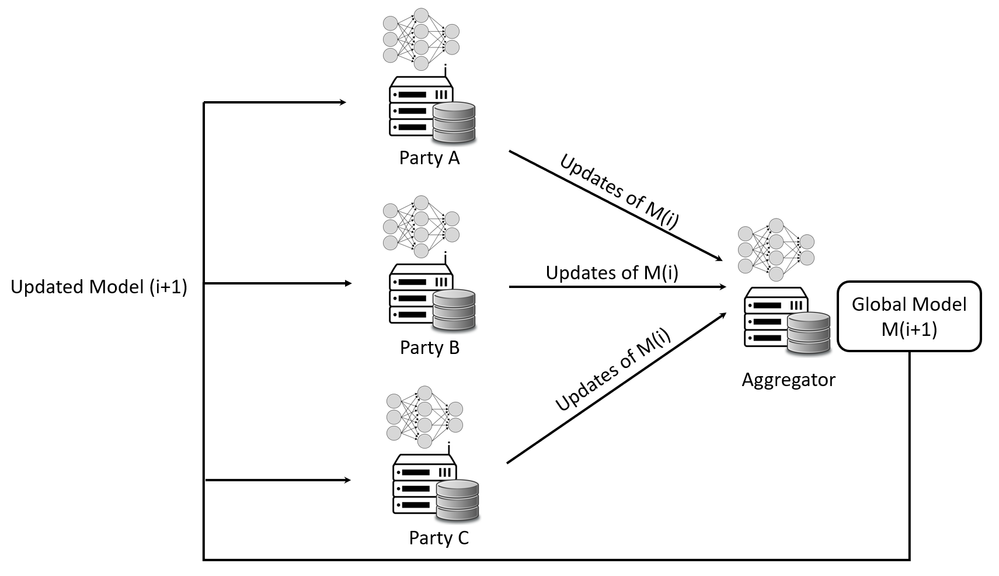

Federated Learning (FL) is a Machine Learning (ML) scheme in which multiple participants collaborate to solve an ML problem with a central coordinator (or aggregator). Each participant's raw data is stored locally and is not transmitted to others. Training runs locally on that data, and only the model updates are transmitted to the coordinator for aggregation. With increasing concerns about data security and user privacy, FL has become a promising way to address privacy and security challenges. Figure 1 shows the general FL architecture. The aggregator creates the machine learning model with initial parameters and transmits the model and parameters to each participant party. Starting from that model and those parameters, each participant party uses the unified machine learning framework to train a new model on its local data, then transmits the new parameters or parameter deltas to the aggregator. The aggregator aggregates the parameters or deltas to compute the new parameters. After several rounds of iteration, FL can construct a much more precise model without requiring participant parties to share their private local data, which also reduces network traffic.
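The aggregation loop described above can be sketched in a few lines. This is a minimal illustration, assuming a one-feature linear model and parameter averaging weighted by local sample counts; the function names (local_train, aggregate, federated_rounds) are hypothetical and are not part of Fedlearner.

```python
from typing import List, Tuple

Params = List[float]            # [w, b] for the toy model y = w * x + b
Sample = Tuple[float, float]    # (x, y)


def local_train(params: Params, samples: List[Sample], lr: float = 0.05) -> Params:
    """Participant side: one pass of SGD over local data only."""
    w, b = params
    for x, y in samples:
        err = (w * x + b) - y
        w -= lr * err * x
        b -= lr * err
    return [w, b]


def aggregate(updates: List[Params], sizes: List[int]) -> Params:
    """Aggregator side: average parameters, weighted by local sample counts."""
    total = sum(sizes)
    dim = len(updates[0])
    return [sum(u[i] * n for u, n in zip(updates, sizes)) / total for i in range(dim)]


def federated_rounds(parties: List[List[Sample]], rounds: int = 50) -> Params:
    """Repeat: broadcast parameters, train locally, aggregate."""
    params = [0.0, 0.0]
    for _ in range(rounds):
        updates = [local_train(list(params), data) for data in parties]
        params = aggregate(updates, [len(data) for data in parties])
    return params


if __name__ == "__main__":
    party_a = [(x, 2.0 * x + 1.0) for x in (0.0, 1.0, 2.0)]
    party_b = [(x, 2.0 * x + 1.0) for x in (3.0, 4.0)]
    print(federated_rounds([party_a, party_b]))
```

Only the two-element parameter list crosses the party boundary here; the samples held by party_a and party_b are never exchanged.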

FL can be classified into Horizontal FL (HFL), Vertical FL (VFL), and Federated Transfer Learning (FTL), according to how data is partitioned among the participant parties in the feature and sample spaces. HFL is also known as sample-based FL: the parties' data sets share similar features but contain different data samples. VFL is also known as feature-based FL: the data sets have overlapping data samples but differ in their features. FTL aims to build an effective model for a target domain with scarce resources by transferring knowledge learned from a source domain with rich resources.
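The difference between the horizontal and vertical partitions is easy to see on a toy table. The following sketch assumes a small in-memory data set keyed by user ID; the data and the helper names are made up for illustration.

```python
from typing import Dict, Set, Tuple

Table = Dict[str, Dict[str, float]]

# Toy data set: each key is a sample (user) ID, each inner key is a feature.
TABLE: Table = {
    "u1": {"age": 31, "clicks": 4, "purchased": 1},
    "u2": {"age": 25, "clicks": 9, "purchased": 0},
    "u3": {"age": 47, "clicks": 2, "purchased": 0},
    "u4": {"age": 38, "clicks": 7, "purchased": 1},
}


def horizontal_split(table: Table, ids_a: Set[str]) -> Tuple[Table, Table]:
    """HFL: both parties hold the same features for different samples."""
    part_a = {k: row for k, row in table.items() if k in ids_a}
    part_b = {k: row for k, row in table.items() if k not in ids_a}
    return part_a, part_b


def vertical_split(table: Table, features_a: Set[str]) -> Tuple[Table, Table]:
    """VFL: both parties hold the same samples with different features."""
    part_a = {k: {f: v for f, v in row.items() if f in features_a}
              for k, row in table.items()}
    part_b = {k: {f: v for f, v in row.items() if f not in features_a}
              for k, row in table.items()}
    return part_a, part_b


if __name__ == "__main__":
    print(horizontal_split(TABLE, {"u1", "u2"}))
    print(vertical_split(TABLE, {"age", "clicks"}))
```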

2.2 Volcengine Fedlearner

Fedlearner is an open source FL solution developed by Volcengine that enables joint modeling of data distributed across institutions.

A major usage of Fedlearner is to analyze advertising collaboratively between Volcengine and their advertising customers, usually e-commerce companies. The goal is to improve precise targeting of ads. This is a typical VFL case where Volcengine and their customers share the user samples but own different user features.

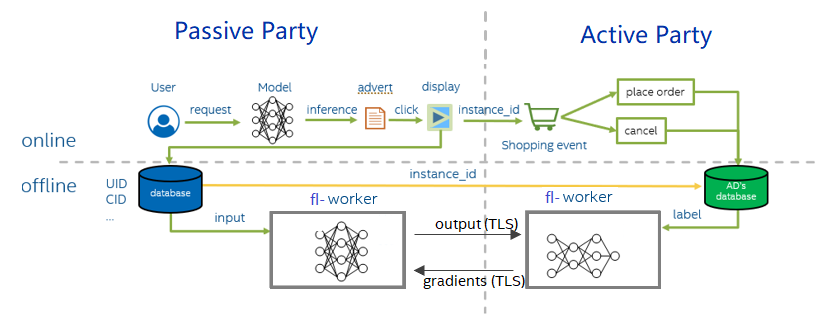

Figure 2 shows the Fedlearner workflows for advertising analysis. In this VFL scheme, the active party has the true labels. The other party is referred to as the passive party. These two parties jointly train a model in order to use more features to achieve higher model accuracy. In the figure, the left is the passive party, which is Volcengine. The right is the active party, which is one of Volcengine’s ad customers. The upper part is online inference, and the bottom part is offline training.

Online inference: A user visits an online media platform supported by the Data Management Platform (DMP), such as Toutiao or Douyin. The DMP uses the CTR (Click Through Rate) / CVR (Click Conversion Rate) model to find the ads with the highest CTR/CVR value and displays them to the user. When the user clicks an ad, the user is redirected to the shopping page on the e-commerce advertiser's side. At the same time, the e-commerce advertiser and the DMP each record the click event, and both sides use the same instance_id to mark the event. On the shopping page, the user may or may not make a purchase, and the advertiser records this behavior as a label.
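Because both sides tag a click with the same instance_id, their logs can later be lined up on that key without exchanging the records themselves. A minimal sketch, assuming each side keeps a plain dictionary keyed by instance_id; the record layout and the function name are illustrative and do not reflect Fedlearner's data format.

```python
from typing import Iterable, List


def aligned_ids(ids_a: Iterable[str], ids_b: Iterable[str]) -> List[str]:
    """Return the instance_ids known to both sides, in one agreed (sorted) order."""
    return sorted(set(ids_a) & set(ids_b))


if __name__ == "__main__":
    # Held by the DMP: features of each click event.
    dmp_features = {"id-3": [0.2, 0.9], "id-1": [0.7, 0.1], "id-2": [0.5, 0.5]}
    # Held by the advertiser: purchase label of each click event.
    advertiser_labels = {"id-1": 1, "id-3": 0, "id-4": 1}

    order = aligned_ids(dmp_features, advertiser_labels)
    features_in_order = [dmp_features[i] for i in order]      # stays on the DMP side
    labels_in_order = [advertiser_labels[i] for i in order]   # stays on the advertiser side
    print(order, features_in_order, labels_in_order)
```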

Offline training: During offline training, both the active party and the passive party use the instance_id recorded online to align the data and labels, and then read the data in the aligned order. The model is divided into two parts. The passive party feeds its data into the first half, obtains the intermediate result (embedding), and sends it to the active party. The active party computes the second half of the model, uses the label to calculate the loss and gradient, and passes the gradient back to the passive party. Finally, the active party and the passive party each update their part of the model.
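The exchange of embeddings and gradients can be sketched as follows. This is a simplified illustration, assuming a linear bottom model that produces a scalar embedding and a logistic top model; the class names are hypothetical, both parties run in one process here, and none of this is Fedlearner's actual implementation.

```python
import math
from typing import List, Tuple


class PassiveParty:
    """Owns the features and the first half of the model."""

    def __init__(self, n_features: int) -> None:
        self.w = [0.5] * n_features
        self._x: List[float] = []

    def forward(self, x: List[float]) -> float:
        self._x = x
        return sum(wi * xi for wi, xi in zip(self.w, x))  # embedding sent to the active party

    def backward(self, grad_embedding: float, lr: float) -> None:
        # Chain rule: dL/dw_i = dL/d(embedding) * x_i
        self.w = [wi - lr * grad_embedding * xi for wi, xi in zip(self.w, self._x)]


class ActiveParty:
    """Owns the labels and the second half of the model."""

    def __init__(self) -> None:
        self.w = 0.5
        self.b = 0.0

    def step(self, embedding: float, label: int, lr: float) -> Tuple[float, float]:
        logit = self.w * embedding + self.b
        p = 1.0 / (1.0 + math.exp(-logit))
        p = min(max(p, 1e-12), 1.0 - 1e-12)            # keep log() finite
        loss = -(label * math.log(p) + (1 - label) * math.log(1.0 - p))
        grad_logit = p - label
        grad_embedding = grad_logit * self.w           # computed before self.w changes
        self.w -= lr * grad_logit * embedding
        self.b -= lr * grad_logit
        return loss, grad_embedding                    # gradient sent back to the passive party


def train(features: List[List[float]], labels: List[int],
          epochs: int = 50, lr: float = 0.1) -> List[float]:
    """Return the mean loss per epoch; features and labels are already aligned."""
    passive, active = PassiveParty(len(features[0])), ActiveParty()
    history = []
    for _ in range(epochs):
        total = 0.0
        for x, y in zip(features, labels):
            embedding = passive.forward(x)
            loss, grad = active.step(embedding, y, lr)
            passive.backward(grad, lr)
            total += loss
        history.append(total / len(features))
    return history


if __name__ == "__main__":
    feats = [[2.0, 0.5], [1.5, 1.0], [-1.0, 0.3], [-2.0, 1.2]]
    print(train(feats, [1, 1, 0, 0])[-1])
```

In this sketch the raw features never reach ActiveParty and the labels never reach PassiveParty; only the embedding and its gradient cross between the two objects.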

3 Motivation for Hardening Fedlearner with SGX

3.1 Motivation for Enhancing Fedlearner Security

Like other FL solutions, Fedlearner has the following advantages from the data perspective:

- It helps protect the data privacy of each party. The behavior data of the phone user is not shared with the advertiser. This is extremely important for phone users and also important for Volcengine. For the advertiser, the shopping behavior data is not shared with Volcengine, which protects the advertiser’s internal business data.

- It saves considerable network bandwidth by avoiding the transmission of unnecessary data. The collected raw data is huge, given the enormous number of Volcengine users and the large number of advertisers.

Although Fedlearner has these advantages, attackers may still have opportunities to undermine the data or model security of this solution. On each side, the raw data and the model parameters could be leaked or maliciously altered by internal or external attackers. The raw data and the model are therefore at risk if the execution environment has no extra protection. After exploring different security technologies, Volcengine and Intel engineers agreed that Intel SGX is well suited to minimizing this risk.

The SGX hardened Fedlearner is also very friendly to algorithm developers for Cloud Native training and serving. Developers could write code once and run it in either an untrusted or a trusted environment. This functionality not only reduces the burden of algorithm developers to implement a new FL algorithm, but also fills the gap of applying privacy-preserving capabilities to existing model training.

3.2 Intel SGX Overview

Intel Software Guard Extensions (SGX) is an application-level hardware Trusted Execution Environment (TEE) implementation. SGX defines a system of architectural enhancements to help protect application integrity and confidentiality of data, and to withstand certain software and hardware attacks.

With SGX, developers can protect application secrets by placing the sensitive data and code of an application into a hardware-protected encrypted memory region called an enclave. SGX ensures application security without depending on the correctness of the OS, hypervisor, BIOS, drivers, etc. Under the CPU architectural access control, only the code in an enclave can access the data in that enclave. Malware or other code that exploits system vulnerabilities through privilege escalation cannot access the secrets of an application under the protection of SGX. With SGX, Cloud Service Providers (CSPs) can provide more assurance that the data and code of a tenant on the cloud platform are not tampered with by other users, attackers, or the CSPs themselves.

Because SGX runs in a CPU core, it is highly scalable. Consequently, SGX is anticipated to help meet the requirements for confidentiality and integrity on open platforms. SGX has been available on Xeon® Scalable Processors (SP) server platforms since the 3rd Gen Xeon SP, code named Ice Lake.

Remote attestation is a key feature of SGX. It allows a hardware entity or a combination of hardware and software to gain a remote relying party’s trust.

Remote attestation gives the relying party increased confidence that the software is running in a trusted environment:

- Running on a fully updated genuine SGX platform at the latest security level, also referred to as the Trusted Computing Base (TCB) version, which has deployed the mitigations to the known vulnerabilities.

- Running inside an SGX enclave.

Remote attestation can also authenticate the software running in an SGX enclave by providing its measurement and security attributes, which are endorsed by SGX hardware. This is extremely important for the remote relying party to know this is the software it wants to talk to, not a fake one.

SGX supports two types of remote attestation:

- Enhanced Privacy Identification (EPID) Attestation. A central attestation service called Intel® SGX Attestation Service utilizing Intel® EPID provides this type of attestation. EPID attestation is only for selected client platforms, selected Xeon E3, and selected Xeon E platforms.

- Elliptic Curve Digital Signature Algorithm (ECDSA) Attestation. This type of attestation is enabled by Intel® Software Guard Extensions Data Center Attestation Primitives (Intel® SGX DCAP). ECDSA attestation is available on Xeon SP server platforms since 3rd Gen and selected Xeon E platforms.

4 Architecture of Fedlearner with SGX

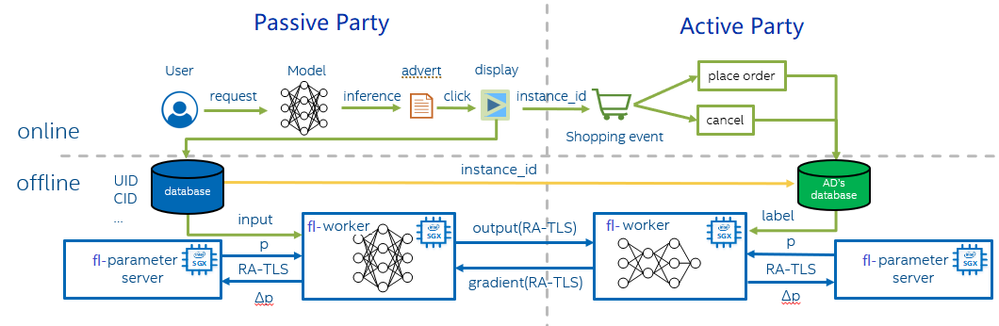

Volcengine and Intel proposed a solution that hardens Fedlearner with SGX. The architecture of the security enhanced solution is shown in Figure 4.

This solution focuses on offline training. The main modules involved are as follows:

- AI Framework - Fedlearner, Volcengine's end-to-end open-source framework based on TensorFlow, provides the interfaces that facilitate Federated Learning tasks.

- Runtime Security - The Fedlearner AI framework runs inside an Intel® SGX enclave. The enclave offers hardware-based memory encryption that isolates specific application code and data in memory.

- Security Isolation LibOS - Gramine, an open-source LibOS project for Intel SGX, runs applications in an isolated Intel SGX environment without modification.

- Containerization using Docker - The framework of this solution is built as a Docker image, and the deployment and management of its containers can be automated with Kubernetes.

- Model Protection - The confidentiality and integrity of the model are protected by encryption when training takes place on an untrusted platform.

- Data Transmission Protection - A secure communication channel (RA-TLS enhanced gRPC) is established from parameter server to worker and from worker to worker. To establish the secure channel, remote attestation is conducted during the handshake to authenticate each communicating party.

Usually, there are two roles during model training - the parameter server and workers. Each worker computes the gradient of the loss on its portion of the data, and then a parameter server sums each worker’s gradient to yield the full mini-batch gradient. After using this gradient to update the model parameters, the parameter server must send back the updated weights to the worker. Workers from the active party and passive party will transfer labels and gradients via gRPC.
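The division of work between workers and the parameter server can be illustrated as follows. This is a minimal sketch, assuming a single scalar weight and a squared-error loss; the workers run in one process rather than over gRPC, and the function names are hypothetical.

```python
from typing import List, Tuple

Shard = List[Tuple[float, float]]  # (x, y) samples held by one worker


def worker_gradient(w: float, shard: Shard) -> float:
    """Worker: gradient of sum(0.5 * (w * x - y) ** 2) over its own shard."""
    return sum((w * x - y) * x for x, y in shard)


def parameter_server_step(w: float, shards: List[Shard], lr: float) -> float:
    """Parameter server: sum the workers' gradients and update the weight."""
    grads = [worker_gradient(w, shard) for shard in shards]  # one gradient per worker
    full_gradient = sum(grads)                               # full mini-batch gradient
    return w - lr * full_gradient                            # new weight sent back to workers


if __name__ == "__main__":
    shards = [[(1.0, 2.0)], [(2.0, 4.0)]]  # both shards follow y = 2 * x
    w = 0.0
    for _ in range(20):
        w = parameter_server_step(w, shards, lr=0.1)
    print(w)
```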

In this solution, we put the offline training into Intel SGX enclaves on both the DMP side and the e-commerce advertiser side. We built the parameter server and workers with the Gramine LibOS, which helps run these applications unmodified in an SGX enclave. To guarantee application integrity, we built an attestation server on both sides to cross-check each other. Because workers from the DMP and the e-commerce advertisers communicate with each other via gRPC, we designed the secure channel by encrypting the certificate and public key.

Gramine

With the Intel® Software Guard Extensions Software Development Kit for Linux* OS (Intel® SGX SDK for Linux* OS), developers can only develop the enclave code in C/C++. In addition, an SGX program must be divided into two parts: the trusted part in an SGX enclave and the untrusted part outside the enclave. This programming model limits using SGX for legacy workloads, especially the large legacy workloads where it would be infeasible to divide them into two parts. To solve this problem, the Library Operating System (LibOS) approach was used to run legacy workloads in SGX enclaves without division or modification.

Gramine, which was formerly known as Graphene, is a very popular LibOS for this purpose.

Attestation and Authentication

In this solution, when two workers connect via gRPC, they verify each other in two steps before the communication channel is established: remote attestation and identity authentication.

In remote attestation, generally the attesting SGX enclave collects attestation evidence in the form of an SGX Quote using the Quoting Enclave. This form of attestation is used to gain the remote partner's trust by sending the attestation evidence to a remote party (not on the same physical machine).

When workers begin communicating via gRPC, the SGX Quote is integrated into the TLS (Transport Layer Security) handshake and verified by the Intel SGX Quote Verification library on the other worker's side.

Besides verifying the enclave Quote, the receiving worker also checks the identity information (mr_enclave, mr_signer, isv_prod_id, isv_svn) of the enclave. This is a hardware-based, enhanced authentication method, in contrast to authentication based on a user identity.

Once the verification and identity check pass, the communication channel between the workers is established. For more details, please refer to RA-TLS enhanced gRPC.
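The identity check amounts to comparing the four fields reported for the peer enclave against a list of expected values. The sketch below covers only that comparison step and assumes the Quote has already been verified and its fields extracted into a dictionary; the function names are hypothetical and this is not the RA-TLS or Gramine API.

```python
from typing import Dict, List

FIELDS = ("mr_enclave", "mr_signer", "isv_prod_id", "isv_svn")


def identity_matches(peer: Dict[str, str], allowed: Dict[str, str]) -> bool:
    """True only if every identity field is set in `allowed` and equals the peer's value."""
    for field in FIELDS:
        expected = str(allowed.get(field, "")).lower()
        actual = str(peer.get(field, "")).lower()
        if not expected or actual != expected:
            return False
    return True


def peer_is_trusted(peer: Dict[str, str], allow_list: List[Dict[str, str]]) -> bool:
    """Accept the peer if it matches at least one allow-list entry."""
    return any(identity_matches(peer, entry) for entry in allow_list)


if __name__ == "__main__":
    allow_list = [{
        "mr_enclave": "bda462c6483a15f18c92bbfd0acbb61b9702344202fcc6ceed194af00a00fc02",
        "mr_signer": "dbf7a340bbed6c18345c6d202723364765d261fdb04e960deb4ca894d4274839",
        "isv_prod_id": "0",
        "isv_svn": "0",
    }]
    peer = dict(allow_list[0])
    print(peer_is_trusted(peer, allow_list))
    print(peer_is_trusted(dict(peer, mr_enclave="00" * 32), allow_list))
```

Entries with an empty field never match, so an unfilled template entry cannot accidentally admit a peer.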

Data at-rest security

We encrypt models with a cryptographic (wrap) key using the Protected-File mode of the Gramine LibOS, which also guarantees the integrity of the model file through metadata checking. Any change to the model file produces a different hash, and Gramine rejects the modified file.
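The tamper-detection idea can be illustrated with a keyed hash. Note the substitution: the sketch below uses HMAC-SHA256 from the Python standard library as a stand-in and shows integrity checking only, not encryption. It does not reproduce Gramine's file format or cryptography, and the function names seal and unseal are hypothetical.

```python
import hashlib
import hmac

TAG_LEN = 32  # length of an HMAC-SHA256 tag in bytes


def seal(model_bytes: bytes, key: bytes) -> bytes:
    """Prefix the content with a keyed hash of that content."""
    tag = hmac.new(key, model_bytes, hashlib.sha256).digest()
    return tag + model_bytes


def unseal(blob: bytes, key: bytes) -> bytes:
    """Return the content only if the stored tag still matches it."""
    tag, body = blob[:TAG_LEN], blob[TAG_LEN:]
    expected = hmac.new(key, body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("model file failed the integrity check")
    return body


if __name__ == "__main__":
    key = b"k" * 16
    blob = seal(b"model weights", key)
    print(unseal(blob, key))
    tampered = blob[:-1] + bytes([blob[-1] ^ 1])  # flip one bit of the content
    try:
        unseal(tampered, key)
    except ValueError as exc:
        print("rejected:", exc)
```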

5 Run Fedlearner with SGX

5.1 Download Source Code

git clone -b fix_dev_sgx https://github.com/bytedance/fedlearner.git

cd fedlearner

git submodule init

git submodule update

5.2 Build Docker Image

img_tag=Your_defined_tag

./sgx/build_dev_docker_image.sh ${img_tag}

Note: build_dev_docker_image.sh provides parameter proxy_server to help you set your network proxy. It can be removed from this script if it is not needed.

You will get the built image:

REPOSITORY           TAG      IMAGE ID       CREATED        SIZE
fedlearner-sgx-dev   latest   8c3c7a05f973   45 hours ago   15.2GB

5.3 Start Container

In terminal 1, start container to run leader:

docker run -it \
    --name=fedlearner_leader \
    --restart=unless-stopped \
    -p 50051:50051 \
    --device=/dev/sgx_enclave:/dev/sgx/enclave \
    --device=/dev/sgx_provision:/dev/sgx/provision \
    fedlearner-sgx-dev:latest \
    bash

In terminal 2, start container to run follower:

docker run -it \
    --name=fedlearner_follower \
    --restart=unless-stopped \
    -p 50052:50052 \
    --device=/dev/sgx_enclave:/dev/sgx/enclave \
    --device=/dev/sgx_provision:/dev/sgx/provision \
    fedlearner-sgx-dev:latest \
    bash

5.3.1 Configure PCCS (Provisioning Certificate Caching Service)

- If you are using a public cloud instance, replace the PCCS URL (Uniform Resource Locator) in /etc/sgx_default_qcnl.conf with the PCCS URL provided by the cloud.

Old: PCCS_URL=https://pccs.service.com:8081/sgx/certification/v3/

New: PCCS_URL=https://public_cloud_pccs_url

- If you are using your own machine, make sure the PCCS service is running on your host by checking it with the command systemctl status pccs, and add your host IP address to /etc/hosts inside the container. For example:

cat /etc/hosts

XXX.XXX.XXX.XXX pccs.service.com #XXX.XXX.XXX.XXX is the host IP
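For the public cloud case, the URL replacement can also be scripted. A minimal sketch, assuming the configuration file uses the KEY=value line format shown above; the function name set_pccs_url is hypothetical, and writing the result back to /etc/sgx_default_qcnl.conf is left to the caller.

```python
def set_pccs_url(conf_text: str, new_url: str) -> str:
    """Return conf_text with its PCCS_URL line replaced (or appended if missing)."""
    lines, replaced = [], False
    for line in conf_text.splitlines():
        if line.strip().startswith("PCCS_URL="):
            lines.append(f"PCCS_URL={new_url}")
            replaced = True
        else:
            lines.append(line)  # keep every other setting untouched
    if not replaced:
        lines.append(f"PCCS_URL={new_url}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    old = "PCCS_URL=https://pccs.service.com:8081/sgx/certification/v3/\n"
    print(set_pccs_url(old, "https://public_cloud_pccs_url"), end="")
```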

5.3.2 Start AESM (Architectural Enclave Service Manager) Service

Execute the script below in both the leader and follower containers:

/root/start_aesm_service.sh

5.4 Prepare Data

Generate data in both the leader and follower containers:

cd /gramine/CI-Examples/wide_n_deep

./test-ps-sgx.sh data

5.5 Compile Applications

Compile the applications in both the leader and follower containers:

cd /gramine/CI-Examples/wide_n_deep

./test-ps-sgx.sh make

Find mr_enclave and mr_signer in the following log output:

+ make
+ grep 'mr_enclave\|mr_signer\|isv_prod_id\|isv_svn'
isv_prod_id: 0
isv_svn: 0
mr_enclave: bda462c6483a15f18c92bbfd0acbb61b9702344202fcc6ceed194af00a00fc02
mr_signer: dbf7a340bbed6c18345c6d202723364765d261fdb04e960deb4ca894d4274839
isv_prod_id: 0
isv_svn: 0
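If you prefer to extract these values programmatically, a small parser is enough. The sketch below assumes the log lines have exactly the "name: value" shape shown above; the function name parse_measurements is hypothetical and is not part of the Fedlearner scripts.

```python
import re
from typing import Dict

# Matches lines such as "mr_enclave: bda4..." but not the echoed "+ grep ..." line.
_LINE = re.compile(r"^\s*(mr_enclave|mr_signer|isv_prod_id|isv_svn):\s*(\S+)\s*$")


def parse_measurements(log_text: str) -> Dict[str, str]:
    """Collect the enclave identity fields from build log text."""
    values: Dict[str, str] = {}
    for line in log_text.splitlines():
        match = _LINE.match(line)
        if match:
            values[match.group(1)] = match.group(2)  # a repeated field keeps its last value
    return values


if __name__ == "__main__":
    sample = """+ make
+ grep 'mr_enclave\\|mr_signer\\|isv_prod_id\\|isv_svn'
isv_prod_id: 0
isv_svn: 0
mr_enclave: bda462c6483a15f18c92bbfd0acbb61b9702344202fcc6ceed194af00a00fc02
mr_signer: dbf7a340bbed6c18345c6d202723364765d261fdb04e960deb4ca894d4274839
"""
    print(parse_measurements(sample))
```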

Then update the leader's dynamic_config.json in the current folder with the follower's mr_enclave and mr_signer, and update the follower's dynamic_config.json with the leader's mr_enclave and mr_signer.

dynamic_config.json:

{
  ......
  "sgx_mrs": [
    {
      "mr_enclave": "",
      "mr_signer": "",
      "isv_prod_id": "0",
      "isv_svn": "0"
    }
  ],
  ......
}
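Editing the file by hand works, but the update can also be scripted. A minimal sketch, assuming dynamic_config.json is valid JSON with a top-level sgx_mrs list as shown above; the helper name set_peer_measurements is hypothetical, and all other keys in the file are left unchanged.

```python
import json


def set_peer_measurements(config_path: str, mr_enclave: str, mr_signer: str,
                          isv_prod_id: str = "0", isv_svn: str = "0") -> None:
    """Overwrite the sgx_mrs entry in the config file with the peer's identity."""
    with open(config_path, "r", encoding="utf-8") as f:
        config = json.load(f)

    config["sgx_mrs"] = [{
        "mr_enclave": mr_enclave,
        "mr_signer": mr_signer,
        "isv_prod_id": isv_prod_id,
        "isv_svn": isv_svn,
    }]

    with open(config_path, "w", encoding="utf-8") as f:
        json.dump(config, f, indent=2)
        f.write("\n")


if __name__ == "__main__":
    import sys
    # Usage: python this_script.py dynamic_config.json <peer mr_enclave> <peer mr_signer>
    set_peer_measurements(sys.argv[1], sys.argv[2], sys.argv[3])
```

On the leader, pass the follower's values; on the follower, pass the leader's.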

5.6 Configure Leader and Follower's IP

In the leader's test-ps-sgx.sh, replace localhost in --peer-addr with follower_container_ip:

elif [ "$ROLE" == "leader" ]; then
    make_custom_env
    rm -rf model/leader
    ......
    taskset -c 4-7 stdbuf -o0 gramine-sgx python -u leader.py \
        --local-addr=localhost:50051 \
        --peer-addr=follower_container_ip:50052

In the follower's test-ps-sgx.sh, replace localhost in --peer-addr with leader_container_ip:

elif [ "$ROLE" == "follower" ]; then
    make_custom_env
    rm -rf model/follower
    ......
    taskset -c 12-15 stdbuf -o0 gramine-sgx python -u follower.py \
        --local-addr=localhost:50052 \
        --peer-addr=leader_container_ip:50051

Note: To get a container's IP address, run the following command on your host:

docker inspect --format '{{ .NetworkSettings.IPAddress }}' container_id

5.7 Run the Distributed Training

In the leader container:

cd /gramine/CI-Examples/wide_n_deep

./test-ps-sgx.sh leader

In the follower container:

cd /gramine/CI-Examples/wide_n_deep

./test-ps-sgx.sh follower

Finally, the model files are saved at:

./model/leader/id/saved_model.pb

./model/follower/id/saved_model.pb

6 Conclusion

The SGX hardened Fedlearner is the first commercial Confidential Computing solution based on Volcengine SGX infrastructure. It improves data and model security at runtime and in transit between partner nodes, which benefits Volcengine and its ad customers. With the introduction of SGX on the 3rd Generation Xeon SP, the SGX Enclave Page Cache (EPC) size per socket grew significantly, from 128MB to up to 512GB. This enables large workloads such as machine learning to run in an SGX enclave, which is a giant step for both SGX and potential Confidential Computing use cases. We expect Volcengine and Intel to collaborate further on solutions based on Intel TEE technologies. You can apply to use the SGX hardened Fedlearner at https://www.volcengine.com/product/FedLearner.

Acknowledgement

Many thanks to Yuan Wu (Intel), Siyuan Hui (Intel), Jianlin Bu (Intel), Xiaoguang Li (Volcengine) for their contributions to this project and blog.
