Michael Mesnier is a Principal Engineer at Intel Labs and has contributed to a variety of storage research areas.

Highlights:

- Intel Labs has developed a server-based computational storage research platform.

- The research platform is now available for customers and partners.

- We will demo our work with MinIO, NGD Systems, and VMware at this year’s Flash Memory Summit, August 2-4 in Santa Clara, CA.

Computational storage research has been around for decades, but only recently has the industry pushed to bring it to production. Advancements in computational storage strive to bring computation closer to the data, to reduce I/O bottlenecks and accelerate computation. The benefits of computational storage are enticing to cloud service providers (CSPs) and storage vendors alike. This is no surprise given that on average, nearly 63% of total system energy is spent on data movement. The adoption of data-intensive apps and fast, dense storage (NAND) further drives the need for reduced data movement.

But despite all the recent industry activity, little attention has been paid to the software ecosystem and how it can make (or break) computational storage. Interaction with the host file system, for example, is crucial. This is where users store their data and where computational storage starts. And the challenges only increase as you progress further down the stack: programming models, striped data (like erasure coding), disaggregated storage, and heterogeneous accelerators. Without a top-to-bottom platform solution, including both hardware and software, we’ll likely see another decade of computational storage research with limited adoption.

As a data platform company, Intel is uniquely positioned to advance computational storage through a novel, platform-centric approach. Specifically, our work here in Intel Labs presents a computational storage platform built upon the existing server-based storage industry. Our approach aims to move compute functions to the data by processing data within storage servers and utilizing programming models and APIs to enable the full ecosystem.

We will demo a real-world use case with MinIO, NGD Systems, and VMware at this year’s Flash Memory Summit, August 2-4 in Santa Clara, CA. This is the industry’s first multi-vendor demonstration of server-based computational storage.

Intel Labs’ Computational Storage Research Platform

Our computational storage platform is based on two kinds of servers: compute servers and storage servers. The compute server runs application code and the user’s file system, and the storage server represents a disaggregated storage solution, like NVMe/TCP. Rather than change the basic block storage protocol, our approach extends the block protocol with compute descriptors that describe the locations of file data (blocks) and the operations to be performed. Then, within the storage server, a variety of silicon and systems software can be used to accelerate compute-intensive and I/O-intensive operations. The silicon includes Intel Xeon processors, acceleration engines (like Intel’s Data Streaming Accelerator), FPGAs, GPUs, IPUs (Smart NICs), and NAND and Optane SSDs.

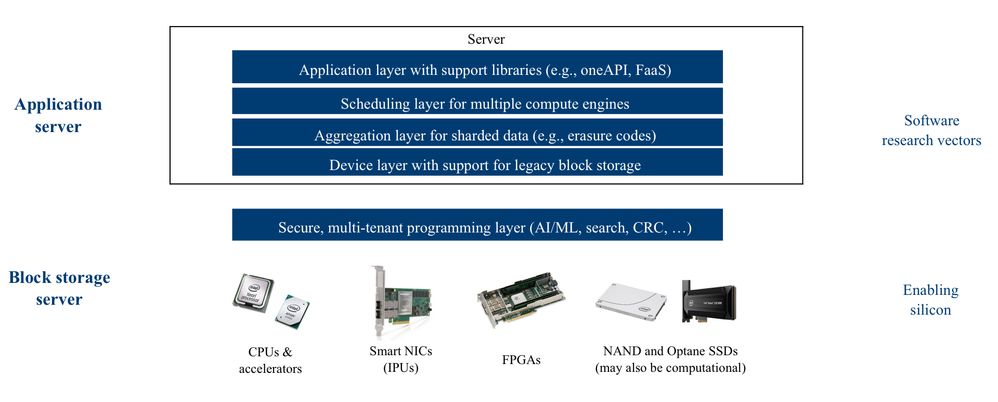

Figure 1. A representation of the various layers of our computational storage stack.

In Figure 1 above, you can see the numerous layers involved in our work. At the top of the stack we introduce support libraries that will interface with applications. Below that is a scheduling layer that can maintain a bird’s eye view of the system and make dynamic scheduling decisions. Next is an aggregation layer that comprehends that user data may be spread over multiple storage servers and devices. Finally, the device layer provides a gateway to existing block storage.

Then we have the block storage system itself, which is also a server with compute silicon. Access to this silicon will be gated by a secure, multi-tenant programming layer. Given the variety of compute silicon, the storage server will also be a dynamic environment. For example, we could utilize the Xeon processor in the storage server, or we might use an FPGA attached to the storage server. Such decisions will be based on application requirements.

Intel Labs chose oneAPI as a foundation for researching computational storage. We are actively working with the industry on prototype solutions. As an open standard for unified application programming, and with open source compiler and library implementations available, oneAPI not only gives you access to conventional accelerators, like GPUs and FPGAs, but it can also be extended to support computational storage.

Block-Compatible Compute Descriptors

Our research began at the device layer, where we defined our block-compatible compute descriptors. These descriptors are used to communicate with the storage server, detailing data locations, the operations to be performed on this data, and any input arguments. In effect, the compute descriptors provide a simple RPC mechanism that the host can use to communicate with the target, and they require no modification to the existing block-based transports (NVMe and SCSI). Instead, we introduce a new opcode (EXEC, short for “Execute”) that we implement as new NVMe or SCSI commands.

Our compute descriptors contain file-like data structures, which we refer to as virtual objects. A virtual object, like a file, is a collection of blocks with a length. This is our fundamental unit of computation, whereby operations (e.g., hash, search, compress, filter) are performed against objects. We say “virtual” because the underlying storage is still block storage. Admittedly, computational storage would be much easier with native object or key-value stores, as the object (or key) already provides a convenient handle for expressing computation (e.g., search this object, compress that object). However, our goal from the outset was to leverage existing block storage, thereby making our solution a computational block storage solution, applicable to both storage arrays and individual devices. Computational object storage (like S3 Select) can build upon such a foundation and push computation all the way to the block layer.

So, in general, we see data objects as the key to computational storage. For example, we can teach the storage server to differentiate between tables, directories, files, images, etc. Each of these represents an object, and they become distinct units of computation by treating them as such within the block storage system. Figure 2 illustrates this for a simple file object.

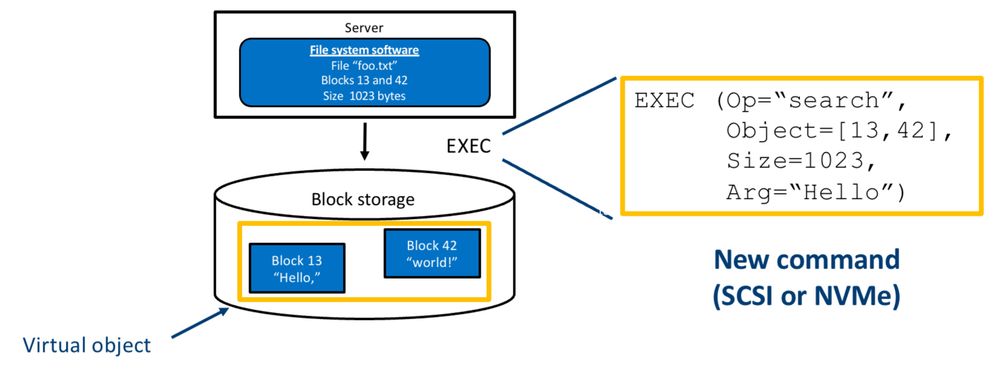

Figure 2. An example of how data application engineers can search a file in block storage.

As shown in the figure above, the application server contains a file system with all the metadata to tell you where a file’s blocks are located within storage. In this case, the file contains two blocks (13 and 42), the file length is 1023 bytes, and we want to search the file for the string “Hello.” All this information (i.e., block allocation, file sizes, operations, arguments) is contained in a single EXEC command (NVMe or SCSI) and sent to the storage system for processing.

Now, within the block storage, we can reconstruct the file from the virtual object mapping. The mapping lets the block storage see the object just long enough to run the computation; it can then discard the mapping and return the result to the user. This virtual object approach is beneficial because, just like a real object, a virtual object contains the metadata that is needed to process the data.
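The search example above can be sketched in a few lines of Python. This is only an illustration of the virtual object idea, not the platform's actual descriptor format or wire encoding: the class names (`VirtualObject`, `ComputeDescriptor`), the `execute` function, the in-memory "device" dictionary, and the 512-byte block size are all assumptions made for the sketch.

```python
from dataclasses import dataclass
from typing import Dict, List

BLOCK_SIZE = 512  # assumed block size, so two blocks can hold a 1023-byte file


@dataclass(frozen=True)
class VirtualObject:
    """A file-like view of block storage: ordered block numbers plus a length."""
    blocks: List[int]
    length: int  # file length in bytes (may end before the last block does)


@dataclass(frozen=True)
class ComputeDescriptor:
    """Hypothetical payload of an EXEC command: object, operation, argument."""
    obj: VirtualObject
    operation: str
    argument: bytes


def reconstruct(device: Dict[int, bytes], obj: VirtualObject) -> bytes:
    """Rebuild the file contents from its blocks, trimmed to the file length."""
    data = b"".join(device[block] for block in obj.blocks)
    return data[: obj.length]


def execute(device: Dict[int, bytes], desc: ComputeDescriptor) -> List[int]:
    """Run the requested operation on the storage side; return only the result."""
    if desc.operation != "search":
        raise ValueError(f"unsupported operation: {desc.operation}")
    data = reconstruct(device, desc.obj)
    offsets = []
    pos = data.find(desc.argument)
    while pos != -1:
        offsets.append(pos)
        pos = data.find(desc.argument, pos + 1)
    return offsets


# Demo: a 1023-byte file stored in blocks 13 and 42, with "Hello" placed so
# that it straddles the boundary between the two blocks.
content = b"x" * 508 + b"Hello" + b"y" * 510      # 1023 bytes
padded = content + b"\0"                           # fill out the second block
device = {13: padded[:BLOCK_SIZE], 42: padded[BLOCK_SIZE:]}

desc = ComputeDescriptor(VirtualObject([13, 42], 1023), "search", b"Hello")
print(execute(device, desc))  # [508]
```

Because the descriptor carries the block list and the length, the storage side can find a match that spans two blocks without knowing anything about the host file system, and only the list of offsets travels back to the host.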

After establishing the device layer solution, we continued working up the stack. This subsequent research focuses on aggregation challenges, such as handling data that is physically split and distributed across many devices and servers as shown in the figure below.

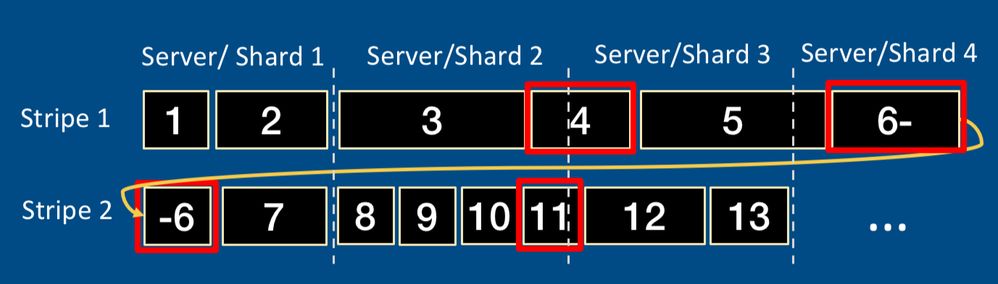

Figure 3. A representation of data crossing shard and stripe boundaries.

This is a very challenging aspect of computational storage because modern distributed storage systems do not respect strict boundaries. As shown in the figure, it is very common for blocks of data to cross stripes or shards. This means we now have to manage coordination across multiple servers. Intel Labs is actively exploring solutions and will detail the results of this research in a future post.
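To make the boundary problem concrete, here is a small sketch of how a single byte range can land on more than one server. It assumes a generic round-robin (RAID-0 style) striping layout purely for illustration; the function name `split_range` and the layout itself are assumptions, and this is not a description of the aggregation layer Intel Labs is researching.

```python
from typing import List, Tuple


def split_range(offset: int, length: int,
                stripe_unit: int, num_servers: int) -> List[Tuple[int, int, int]]:
    """Split a logical byte range into (server, server_offset, nbytes) pieces.

    Assumes stripe units are assigned to servers round-robin.
    """
    pieces = []
    end = offset + length
    while offset < end:
        unit_index = offset // stripe_unit        # which stripe unit we are in
        within = offset % stripe_unit             # position inside that unit
        nbytes = min(stripe_unit - within, end - offset)
        server = unit_index % num_servers
        server_offset = (unit_index // num_servers) * stripe_unit + within
        pieces.append((server, server_offset, nbytes))
        offset += nbytes
    return pieces


# A 2000-byte record starting at byte 3000, with 4096-byte stripe units
# spread over three servers, ends up split across servers 0 and 1.
print(split_range(3000, 2000, 4096, 3))  # [(0, 3000, 1096), (1, 0, 904)]
```

Neither server holds the whole record, so an operation such as a search or checksum over that record cannot be completed by either server alone. That is the coordination problem the aggregation layer has to solve.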

Demonstration at the Flash Memory Summit

While our research platform is not yet production-ready, we are demonstrating our solution end-to-end, through our collaboration with VMware, MinIO, and NGD Systems. This is the first cross-industry demonstration of server-based computational storage.

The focus of this cross-industry demo is improved data integrity (aka “data scrubbing”), where we use computational storage to perform end-to-end data integrity checks for two commonly used storage systems – Cassandra and MinIO. These storage systems are representative of most storage systems, in that they ensure the integrity of data through end-to-end checksums. CRC-32 and Highway Hash are used, respectively, to detect and guard against data corruption.

As most users of these stores are aware, data integrity checks are expensive. They consume considerable I/O bandwidth and compete with other resources on the compute server (CPU and memory). Because of this, they are an ideal candidate for computational storage. Our demonstration shows I/O traffic reductions upwards of 99%, as well as improvement in data scrubbing performance. Faster and more efficient scrubbing means less corruption, and less corruption means better availability. It’s a practical use case with tangible benefits.
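The reason scrubbing offloads so well can be shown with a simple sketch: a host-side check has to pull every stored byte across the network, while an offloaded check returns only the checksum. The example below uses CRC-32 from Python's standard `zlib` module. The function names and the 1 MiB object size are assumptions chosen for illustration, and the printed ratio is arithmetic on those assumed sizes, not a measurement from the demo.

```python
import zlib
from typing import Tuple

CRC_BYTES = 4  # a CRC-32 value fits in four bytes


def scrub_on_host(stored: bytes, expected_crc: int) -> Tuple[bool, int]:
    """Host-side scrub: all stored bytes are transferred to the host."""
    ok = zlib.crc32(stored) == expected_crc
    return ok, len(stored)              # (result, bytes moved to the host)


def scrub_on_storage(stored: bytes, expected_crc: int) -> Tuple[bool, int]:
    """Offloaded scrub: the CRC is computed next to the data; only it moves."""
    crc = zlib.crc32(stored)
    return crc == expected_crc, CRC_BYTES


def traffic_reduction(host_bytes: int, offload_bytes: int) -> float:
    """Fraction of transfer avoided by offloading."""
    return 1.0 - offload_bytes / host_bytes


data = bytes(range(256)) * 4096          # illustrative 1 MiB object
expected = zlib.crc32(data)              # checksum recorded at write time

ok_host, host_bytes = scrub_on_host(data, expected)
ok_offload, offload_bytes = scrub_on_storage(data, expected)
print(ok_host, ok_offload)
print(f"{traffic_reduction(host_bytes, offload_bytes):.4%}")
```

The larger the object relative to its checksum, the closer the avoided transfer gets to 100%, which is why integrity checks are such a natural fit for computation inside the storage server.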

The cross-industry nature of this demonstration also serves to highlight the various opportunities at play – including opportunities for storage software vendors, virtualization software vendors, server vendors, and storage device vendors.

Email michael.mesnier@intel.com to learn more and join us in this collaborative effort!

Mike Mesnier is a Principal Engineer in Intel Labs. He joined Intel in 1997 and has contributed to a variety of storage research projects - including Internet SCSI (iSCSI), Object-based Storage Devices (OSD), Relative Fitness Modeling, Differentiated Storage Services (storage QoS), Storage Analytics (ML for storage) and, most recently, Computational Storage. Mike received his PhD in Computer Engineering from Carnegie Mellon University. In his free time, Mike enjoys sailing on the Columbia River in Portland, Oregon.
