Hi,

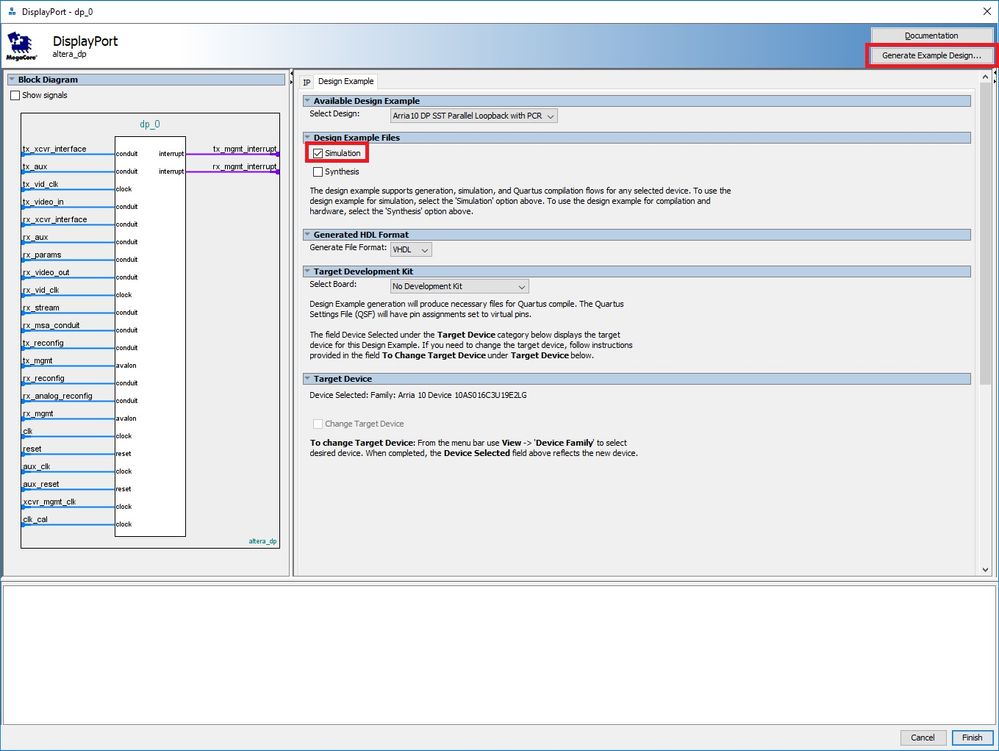

I am trying to simulate the Intel DisplayPort Arria 10 sample project (Quartus Prime v17.0) in Aldec Active-HDL (v10.3, 64-bit), and I don't think the simulation is working correctly.

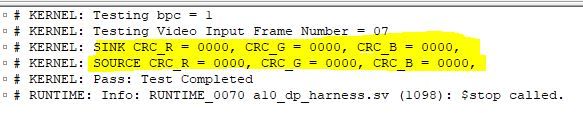

The testbench executes all the way to the end, but the CRCs reported back are all 0 for R, G, and B. vbid[3] (NoVideoStream_Flag) is high during the entire simulation.
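For anyone reading VB-ID values off the waveform, here is a minimal Python sketch that decodes a VB-ID byte into flag names. Only bit 3 (NoVideoStream_Flag) is confirmed in this thread; the other bit names are my recollection of the DisplayPort specification and should be checked against it. `decode_vbid` is a hypothetical helper, not part of the Intel example design.

```python
# Assumed VB-ID bit layout. Only bit 3 is confirmed by this thread;
# verify the remaining names against the DisplayPort specification.
VBID_FLAGS = {
    0: "VerticalBlanking_Flag",
    1: "FieldID_Flag",
    2: "Interlace_Flag",
    3: "NoVideoStream_Flag",
    4: "AudioMute_Flag",
}

def decode_vbid(value):
    """Return the names of the flags set in a VB-ID byte (hypothetical helper)."""
    return [name for bit, name in VBID_FLAGS.items() if (value >> bit) & 1]

# Example: only bit 3 set, as observed in the simulation.
print(decode_vbid(0x08))
```

A value with bit 3 set for the whole run suggests the sink never sees an active video stream, which would be consistent with the all-zero CRCs.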

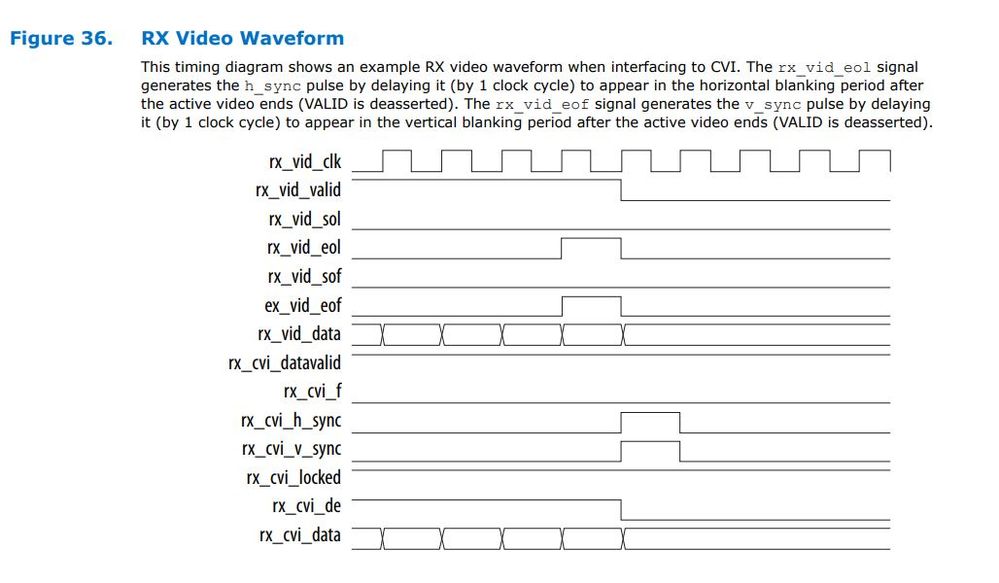

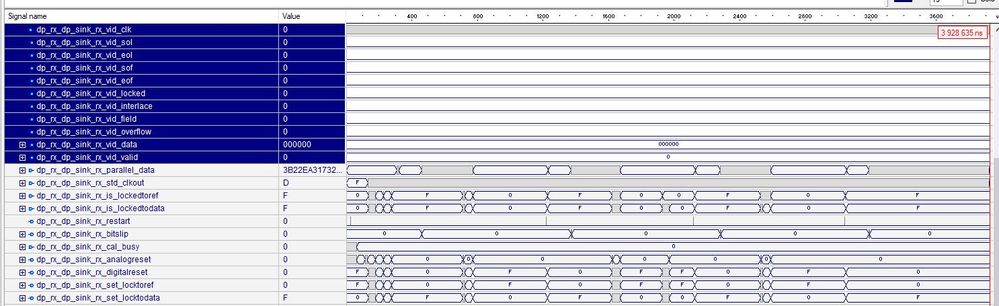

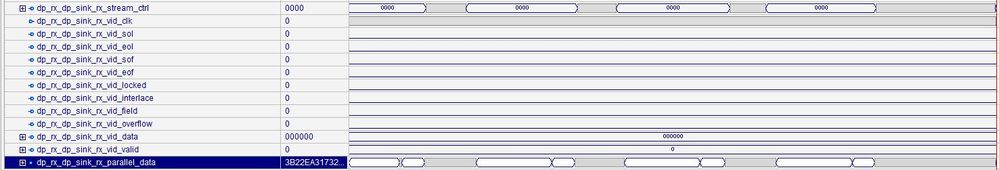

All of the signals mentioned in Figure 36 of UG-01131 (RX Video Waveform) are static for the entire simulation. I would expect the received video to look similar to the timing diagram of that figure.

Is there something wrong with the simulation or am I not setting it up correctly?

Hi DZukc1,

rx_vid_clk is an input signal, and its frequency is, for example, 162 MHz.

Are you driving rx_vid_clk at 162 MHz?

The dp_sink module generates signals such as rx_vid_sol, rx_vid_eol, rx_vid_sof, and rx_vid_eof from rx_vid_clk.

By the way, if rx_vid_clk is already toggling, have you waited for at least one frame?

These signals are derived from the MSA, VBID, and so on.
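To judge how long "one frame" is in simulation time, here is a rough Python sketch. The 162 MHz pixel clock is the example figure above; the total frame size (2160 x 1250, the standard 1600x1200 at 60 Hz timing) is an assumption for illustration only. The sample testbench may use a different, possibly much smaller, frame, so substitute the totals from its MSA.

```python
def frame_time_ms(h_total, v_total, pixel_clock_hz):
    """Duration of one full frame (active + blanking) in milliseconds."""
    return h_total * v_total / pixel_clock_hz * 1e3

# Assumed timing, for illustration only: 1600x1200 @ 60 Hz totals.
H_TOTAL = 2160                  # pixels per line, including blanking (assumption)
V_TOTAL = 1250                  # lines per frame, including blanking (assumption)
PIXEL_CLOCK_HZ = 162_000_000    # rx_vid_clk example frequency from the post above

frame_ms = frame_time_ms(H_TOTAL, V_TOTAL, PIXEL_CLOCK_HZ)
print(f"one frame takes about {frame_ms:.3f} ms of simulated time")
```

If the simulation stops well before one frame time has elapsed on rx_vid_clk, the start-of-frame and end-of-frame signals may never get a chance to toggle.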

Best regards

I see.

Could you check the rx_parallel_data signal on native_phy_rx, if it exists?

I'm using the DP Sink IP on Arria V, so this module might not exist in your design, or it might have a different name.

Best regards,

I see.

Could you check the CDC settings in this IP's parameters?

This module has a lot of CDC logic.

If the clock frequency setting is wrong, the DP Sink will malfunction.

Best regards,

I used the User Guide's process to generate the example design and that is what I am trying to simulate.

Hi Sir, have you had a chance to try the simulation with the latest version, 18.1, or with another simulator such as ModelSim?

By the way, the Arria 10 DP IP does not have a CDC or CRC setting.

Thanks!

I ran the simulation in ModelSim SE edition and I see the same result (all zeros). Let me check further on this.

Frankly speaking, though, for DP we usually validate the IP directly on actual hardware and seldom run the simulation.
