- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm posting the same question from "https://stackoverflow.com/questions/72357676/its-like-opencl-kernel-instance-ends-abruptly"

I'm new to OpenCL and I'm working on converting an existing algorithm to OpenCL. In this process, I am experiencing a phenomenon that I cannot solve on my own, and I would like to ask some help.

Here's details.

My kernel is applied to images of different size (to be precise, each layer of the Laplacian pyramid).

I get normal results for images of larger size such as 3072 x 3072, 1536 x 1536. But I get abnormal results for smaller images such as 12 x 12, 6 x 6, 3 x 3, 2 x 2.

At first, I suspected that clEnqueueNDRangeKernel had a bottom limit for dimensions, causing this problem. So, I added printf to the beginning of the kernel as follows. It is confirmed that all necessary kernel instances are executed.

__kernel void GetValueOfB(/* parameters */)

{

uint xB = get_global_id(0);

uint yB = get_global_id(1);

printf("(%d, %d)\n", xB, yB);

// calculation code is omitted

}

So after wandering for a while, I added the same printf to the end of the kernel. When I did this, it was confirmed that printf works only for some pixel positions. For pixel positions not output by printf, the calculated values in the resulting image are incorrect, and as a result, I concluded that some kernel instances terminate abnormally before completing the calculations.

__kernel void GetValueOfB(/* parameters */)

{

uint xB = get_global_id(0);

uint yB = get_global_id(1);

printf("(%d, %d)\n", xB, yB);

// calculation code is omitted

printf("(%d, %d)\n", xB, yB);

}

It seems that there is no problem with the calculation of the kernel. If I compile the kernel turning off the optimization with the -cl-opt-disable option, I get perfectly correct results for all images regardless of their size. In addition to that, with NVIDA P4000, it works correct. Of course, in theses cases, I confirmed that the printf added at the bottom of the Kernel works for all pixels.

Below I put additional information and attach a part of the code I wrote.

Any advice is welcomed and appreciated. Thank you.

SDK: Intel® SDK For OpenCL™ Applications 2020.3.494

Platform: Intel(R) OpenCL HD Graphics

for all images

{

...

const size_t globalSize[2] = { size_t(vtMatB_GPU_LLP[nLayerIndex].cols), size_t(vtMatB_GPU_LLP[nLayerIndex].rows) };

err = clEnqueueNDRangeKernel(_pOpenCLManager->GetCommandQueue(), kernel, 2,

NULL, globalSize, NULL, 0, NULL, NULL);

if (CL_SUCCESS != err)

return -1;

// I tried with this but it didn't make any difference

//std::this_thread::sleep_for(std::chrono::seconds(1));

err = clFinish(_pOpenCLManager->GetCommandQueue());

if (CL_SUCCESS != err)

return -1;

err = clEnqueueReadBuffer(_pOpenCLManager->GetCommandQueue(), memMatB, CL_TRUE,

0, sizeof(float) * vtMatB_GPU_LLP[nLayerIndex].cols *

vtMatB_GPU_LLP[nLayerIndex].rows, vtMatB_GPU_LLP[nLayerIndex].data, 0, nullptr, nullptr);

if (CL_SUCCESS != err)

return -1;

...

}

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Thanks for reaching out to us.

Could you please provide us with a complete reproducer and the steps you have followed so that we can try it at our end?

Thanks & Regards,

Noorjahan.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Thank you very much for your attention.

Here is the link for minimum code to reproduce phenomenon.

https://github.com/heysweetethan/GPUOpenCLProjectforWindows

I really appreciate if you take a look.

Best Regards,

Ethan Kim

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I'm sorry but may I ask someone to take a look at this?

I'm still working on it but I cannot solve the issue myself.

Best Regards,

Ethan Kim

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Thank you for providing the source code.

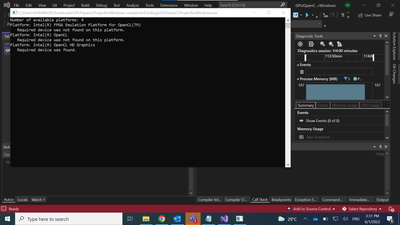

We have tried the reproducer that you have provided and we got some issues at runtime.

when we run the code, the program just hangs and we cannot see any output.

Please refer to the below screenshot:

Could you please help us in reproducing your issue?

Thanks & Regards,

Noorjahan.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Thank you for your reply and for paying attention to my issue.

Would you "git clone https://github.com/heysweetethan/GPUOpenCLProjectforWindows.git" , build it, and start it over again?

I tested it again on another newly installed computer and checked that it runs(with errors in different way).

If it still doesn't work on your side I will add more printfs in cpp.

And about the issue,

I guess that the for loop in the kernel code might cause the problem.

On my second PC(i7-10700 CPU), clEnqueueNDRangeKernel returns CL_OUT_OF_RESOURCES. This is completely okay to me and I think I can handle it.

But what I'm confused is that on my main PC(i9-9900 CPU), it just, instead of returning CL_OUT_OF_RESOSURCES, runs in a way that I cannot understand.

I know that it's not well designed kernel if there is a for loop that iterates too many times in it and I need to modify the kernel so that it can be distributed over processing elements or computer units. But I want to figure out why my kernel does not return CL_OUT_OF_RESOSURCES and just runs awkwardly in i9-9900.

Thank you again.

Best regards,

Ethan Kim

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello, I don't think your kernel instance ends abruptly, rather I think it is taking a very long time to execute.

A few things I noticed:

1. Your ND-range dimensions are pretty small:

>>>> clEnqueueNDRangeKernel( GetValueOfB Because the global work size is 12x12, you only have 144 work-items. Even on a small integrated GPU this will only use a fraction of the GPU compute resources.

2. The kernel contains a doubly-nested for loop.

for (int nY = 0; nY < nROIHeight; nY++)

{

for (int nX = 0; nX < nROIWidth; nX++)

{

// loop body

}

}

By itself this isn't bad, but the loop bounds are quite large, so the loop body ends up executing several million times per work-item. Furthermore, the loop body contains quite a bit of floating-point math, including several calls to the (rather expensive) double-precision pow() function.

Taken together, this means that the few compute resources that are being used are being asked to do a LOT of work, and it's taking a very long time to complete.

A few suggestions of things to try:

a) If at all possible, try to use single-precision floating point math rather than double-precision (float rather than double). Most GPUs will perform single-precision math significantly faster than double-precision math.

Just (a) seems to be enough to run your kernel to completion, but here are a few other things to check that may also improve performance:

b) If possible, can you replace some calls to pow() with calls to powr()?

c) If you can tolerate lower precision, you can also try using the native powr() function or the -cl-fast-relaxed-math program build option.

c) Can you use the built-in function smoothstep() vs. defining your own? See link .

e) Is it possible to refactor your algorithm to add more work-items that individually do less work? This won't be trivial, but this is likely to produce the biggest improvements.

Hope this helps!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

First, I really appreciate your reply.

With all due respect, I would like to have some clarifications.

I did not stop the program. Rather I waited until the program had stopped.

Having this in mind, I have two questions,

1) If I did not make a wrong code(regarding clFinish or something) , is there inherent limitation regarding kernel execution time?

2) Why can I make the program run correctly if I compile the kernel with '-cl-opt-disable' although it presumably takes more time for kernel to execute.

Thank you.

Regards,

Ethan Kim

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Good questions!

(1) Regarding an upper-bound kernel execution time, this actually gets a little complicated. It depends whether your kernel can be preempted, and this can depend on both your kernel and the hardware you are running on. The short answer is that the operating system will try to preempt your kernel to let other things run if it is taking too long to execute. If the preemption is successful, the kernel can effectively run for an unbounded amount of time. If the preemption is unsuccessful though (and there can be many reasons for this), the operating system will stop your kernel and reset the device so e.g. the GUI remains responsive.

(2) Regarding disabling optimizations, I am unable to reproduce this behavior, and I still see the very long execution when using -cl-opt-disable. Are you sure something else isn't going on here?

I'm very interested to see if you are able to run the kernel in finite time on an integrated if you use single-precision calculations. Have you been able to try this? Should be as simple as a search-and-replace of "double" with "float" and then maybe fixing up a few double-precision literals (or using -cl-single-precision-constant).

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Once again, thank you for spending your invaluable time to help me.

Actually before I posted the first question, I had done the test with float instead of double and confirmed it worked correctly(with less accuracy).

But I wanted to figure out why the code with double was not running the way I expected.

Now, I think I need to investigate the behavior of the codes more on my own.

I will try to use GDB debugger if I can learn anything from using it.

Actually I tried GDB debugger but it didn't go like 'Set Up Intel® Distribution for GDB* to Debug GPUs on Windows*'.

That's because I failed to start 'gdbserver-gt' although I referred to 'trouble shooting'.

I might need to ask another question regarding GDB debugger.

By the way, one more question. I really appreciated if you give me any opinion on this.

I was stunned to see the same program(with double) run correctly after installing GDB debugger(oneAPI\debugger\latest\target\gen_debugger_target.msi).

I swear there was nothing else other than installing GDB.

I did the test three times install/uninstalling GDB(with reboot) and running the program.

I'm sure the debugger make a difference.

Regards,

Ethan Kim

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@heysweetethan wrote:

By the way, one more question. I really appreciated if you give me any opinion on this.

I was stunned to see the same program(with double) run correctly after installing GDB debugger(oneAPI\debugger\latest\target\gen_debugger_target.msi).

I swear there was nothing else other than installing GDB.

I did the test three times install/uninstalling GDB(with reboot) and running the program.

I'm sure the debugger make a difference.

This is a mystery I can't explain, so I've asked a couple of our debugger folks why this might be happening. Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for your reply.

However, I’m not going to be obsessed on this anymore. Actually I spent 'a lot of time' on this and I've been being so obsessed on this.

At the beginning I’ve posted a question because I think I made a problem by making a wrong code regarding OpenCL things.

Now I concluded that basic rules were abided by in my code and I cautiously suggest a probability of an issue in 'Intel SDK for OpenCL' which only happens in impractical situation.

I meant, by ‘impractical situation’, for example as you pointed out, a doubly-nested for loop which iterates 9 million times in a single kernel instance which runs much faster in CPU code(I did not have intention to use it at all but I sticked to this code just for curiosity). I think this made all the messes I experienced.

I'm sure that does not happen in practical GPU code and I will continue to convert my CPU algorithm to OpenCL GPU version.

I really appreciate all the helps.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Since I know how frustrating it can be to be "obsessed" without closure, our current theory as to what is happening here is that something is going awry with preemption, which is causing the kernel to run forever. The debugger part was an important clue, because one of the things that the debugger installer does is to disable preemption, thereby allowing the kernel to be debugged.

If you want to experiment more with this directly, please see:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Finally done!!! Thank you so much.

By setting TdrLevel=0, I could solve my problem.

Just for others who may concern about this, I put links below.

https://docs.microsoft.com/en-us/windows-hardware/drivers/display/tdr-registry-keys

Although the phenomenon with '-cl-opt-disable' is still curious to me, now I might be able to delve into it myself.

Thank you again.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Just FYI, there's one last thing to consider.

PC1 with i7-10700 : clEnqueueNDRangeKernel returns CL_OUT_OF_RESOURCES(-5) when TDR steps in.

PC2 with i9-9900: clEnqueueNDRangeKernel return CL_SUCCESS(0) even if TDR steps in.

Thank you.

Best Regards,

Ethan Kim

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Thank you for sharing the solution with us.

Glad to know that your issue is resolved. If you need any additional information, please post a new question as this thread will no longer be monitored by Intel.

Thanks & Regards,

Noorjahan.

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page