
I tried to run Python code using TensorFlow and got an error.

The error file:

2021-03-07 02:04:46.112487: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: /opt/intel/inteloneapi/intelfpgadpcpp/latest/board/intel_a10gx_pac/linux64/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/itac/2021.1.1/slib:/glob/development-tools/versions/oneapi/gold/inteloneapi/ipp/2021.1.1/lib/intel64:/glob/development-tools/versions/oneapi/gold/inteloneapi/ccl/2021.1.1/lib/cpu_gpu_dpcpp:/glob/development-tools/versions/oneapi/gold/inteloneapi/rkcommon/1.5.0/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/vpl/2021.1.1/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/dal/2021.1.1/lib/intel64:/glob/development-tools/versions/oneapi/gold/inteloneapi/openvkl/0.11.0/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/tbb/2021.1.1/env/../lib/intel64/gcc4.8:/glob/development-tools/versions/oneapi/gold/inteloneapi/compiler/2021.1.2/linux/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/compiler/2021.1.2/linux/lib/x64:/glob/development-tools/versions/oneapi/gold/inteloneapi/compiler/2021.1.2/linux/lib/emu:/glob/development-tools/versions/oneapi/gold/inteloneapi/compiler/2021.1.2/linux/lib/oclfpga/host/linux64/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/compiler/2021.1.2/linux/lib/oclfpga/linux64/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/compiler/2021.1.2/linux/compiler/lib/intel64_lin:/glob/development-tools/versions/oneapi/gold/inteloneapi/compiler/2021.1.2/linux/compiler/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/mkl/latest/lib/intel64:/glob/development-tools/versions/oneapi/gold/inteloneapi/dnnl/2021.1.1/cpu_dpcpp_gpu_dpcpp/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/ospray_studio/0.5.0/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/embree/3.12.0/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/ospray/2.4.0/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/oidn/1.2.4/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/mpi/2021.1.1//libfabric/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/mpi/2021.1.1//lib/release:/glob/development-tools/versions/oneapi/gold/inteloneapi/mpi/2021.1.1//lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/ippcp/2021.1.1/lib/intel64:/glob/development-tools/versions/oneapi/gold/inteloneapi/debugger/10.0.0/dep/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/debugger/10.0.0/libipt/intel64/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/debugger/10.0.0/gdb/intel64/lib

2021-03-07 02:04:46.112618: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

2021-03-07 02:05:17.962111: I tensorflow/compiler/jit/xla_cpu_device.cc:41] Not creating XLA devices, tf_xla_enable_xla_devices not set

2021-03-07 02:05:17.981292: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'libcuda.so.1'; dlerror: libcuda.so.1: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: /opt/intel/inteloneapi/intelfpgadpcpp/latest/board/intel_a10gx_pac/linux64/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/itac/2021.1.1/slib:/glob/development-tools/versions/oneapi/gold/inteloneapi/ipp/2021.1.1/lib/intel64:/glob/development-tools/versions/oneapi/gold/inteloneapi/ccl/2021.1.1/lib/cpu_gpu_dpcpp:/glob/development-tools/versions/oneapi/gold/inteloneapi/rkcommon/1.5.0/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/vpl/2021.1.1/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/dal/2021.1.1/lib/intel64:/glob/development-tools/versions/oneapi/gold/inteloneapi/openvkl/0.11.0/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/tbb/2021.1.1/env/../lib/intel64/gcc4.8:/glob/development-tools/versions/oneapi/gold/inteloneapi/compiler/2021.1.2/linux/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/compiler/2021.1.2/linux/lib/x64:/glob/development-tools/versions/oneapi/gold/inteloneapi/compiler/2021.1.2/linux/lib/emu:/glob/development-tools/versions/oneapi/gold/inteloneapi/compiler/2021.1.2/linux/lib/oclfpga/host/linux64/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/compiler/2021.1.2/linux/lib/oclfpga/linux64/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/compiler/2021.1.2/linux/compiler/lib/intel64_lin:/glob/development-tools/versions/oneapi/gold/inteloneapi/compiler/2021.1.2/linux/compiler/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/mkl/latest/lib/intel64:/glob/development-tools/versions/oneapi/gold/inteloneapi/dnnl/2021.1.1/cpu_dpcpp_gpu_dpcpp/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/ospray_studio/0.5.0/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/embree/3.12.0/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/ospray/2.4.0/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/oidn/1.2.4/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/mpi/2021.1.1//libfabric/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/mpi/2021.1.1//lib/release:/glob/development-tools/versions/oneapi/gold/inteloneapi/mpi/2021.1.1//lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/ippcp/2021.1.1/lib/intel64:/glob/development-tools/versions/oneapi/gold/inteloneapi/debugger/10.0.0/dep/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/debugger/10.0.0/libipt/intel64/lib:/glob/development-tools/versions/oneapi/gold/inteloneapi/debugger/10.0.0/gdb/intel64/lib

2021-03-07 02:05:17.981351: W tensorflow/stream_executor/cuda/cuda_driver.cc:326] failed call to cuInit: UNKNOWN ERROR (303)

2021-03-07 02:05:17.981391: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:156] kernel driver does not appear to be running on this host (s001-n017): /proc/driver/nvidia/version does not exist

2021-03-07 02:05:17.981900: I tensorflow/core/platform/cpu_feature_guard.cc:142] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 AVX512F FMA

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2021-03-07 02:05:17.985486: I tensorflow/compiler/jit/xla_gpu_device.cc:99] Not creating XLA devices, tf_xla_enable_xla_devices not set

2021-03-07 02:05:18.347235: I tensorflow/compiler/mlir/mlir_graph_optimization_pass.cc:116] None of the MLIR optimization passes are enabled (registered 2)

2021-03-07 02:05:18.366534: I tensorflow/core/platform/profile_utils/cpu_utils.cc:112] CPU Frequency: 3400000000 Hz


Hi DL_student,

The messages stored in the error file are mainly warnings (W) and informational messages (I), not show-stopping errors. They appear because DevCloud nodes have no GPU, so TensorFlow cannot load the CUDA libraries (libcudart.so.11.0, libcuda.so.1) and reports that no NVIDIA kernel driver is running. These messages are harmless: your TensorFlow code will still run without issues on the DevCloud CPU.
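To double-check that a log like this contains nothing worse than warnings, you can look at the severity letter TensorFlow prints right after each timestamp (I = info, W = warning, E = error, F = fatal). The shell sketch below is one way to do that; the function names are hypothetical, and it assumes each message starts with the same "date time: severity" prefix seen in the log above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for summarizing a TensorFlow log file.
# Assumption: each message starts with "YYYY-MM-DD HH:MM:SS.ffffff: S ..."
# where S is I, W, E or F; continuation lines without that prefix are skipped.

# Print one "SEVERITY COUNT" line per severity letter found, sorted.
tf_log_summary() {
  awk '$1 ~ /^[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]$/ && $3 ~ /^[IWEF]$/ { n[$3]++ }
       END { for (s in n) print s, n[s] }' "$1" | sort
}

# Exit status 0 only if the log has at least one E (error) or F (fatal) message.
tf_log_has_errors() {
  grep -Eq '^[0-9]{4}-[0-9]{2}-[0-9]{2} [0-9:.]+: [EF] ' "$1"
}
```

For example, `tf_log_summary error_file.txt` prints a count per severity letter, and `tf_log_has_errors error_file.txt` succeeds only when a real error is present. On the log pasted above it should find only I and W entries. If you would rather not see these messages at all, setting `TF_CPP_MIN_LOG_LEVEL=2` in the environment before Python imports TensorFlow should hide its INFO and WARNING output.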

Also, note that you do not need to install TensorFlow yourself on DevCloud. DevCloud provides a conda environment named tensorflow with Keras and TensorFlow preinstalled, so you can simply activate that environment and run your code there. Below is an example %%qsub cell magic that submits a job which activates the tensorflow environment:

%%qsub

cd $PBS_O_WORKDIR

source activate tensorflow

python myfile.py

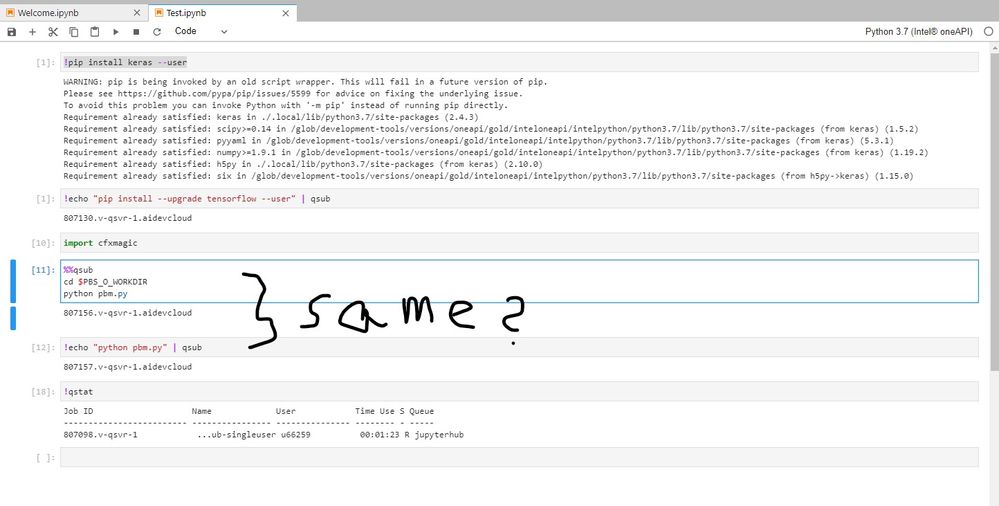

From your screenshot, you seem to be asking whether your two sets of qsub commands are the same. When you run qsub, you submit your job to a different machine (a compute node), which executes your commands and returns the output. By default, a submitted job starts in the "/home/{userid}" directory on that node.

In your screenshot, the first set of qsub commands (the %%qsub cell magic) includes "cd $PBS_O_WORKDIR", which changes to the directory from which you submitted the job. That is, if your Python file pbm.py is in a directory other than "/home/{userid}", you need to use the first set of commands. Using the %%qsub cell magic also lets you run multiple commands in the same shell.
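If you prefer to submit from a terminal rather than a notebook cell, the same commands can go into a PBS job script that is passed to qsub. The sketch below writes such a script; the helper name write_tf_job and the file name job.sh are hypothetical, and it assumes the DevCloud qsub setup and tensorflow conda environment described above. The `$PBS_O_WORKDIR` reference is escaped so it is expanded on the compute node when the job runs, not when the script is written.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a job script equivalent to the %%qsub cell.
# Usage: write_tf_job <script-path> <python-file>
write_tf_job() {
  local script="$1" pyfile="$2"
  cat > "$script" <<EOF
#!/bin/bash
# Start in the directory qsub was called from, not /home/{userid}
cd "\$PBS_O_WORKDIR"
source activate tensorflow
python $pyfile
EOF
}

# Example (run from the directory that contains pbm.py):
#   write_tf_job job.sh pbm.py
#   qsub job.sh
```

Without the `cd "$PBS_O_WORKDIR"` line, the job would start in /home/{userid} and `python pbm.py` would fail if pbm.py lives elsewhere, which is the difference between the two sets of commands in your screenshot.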


You are welcome, DL_student. Let us know if you need anything else regarding this issue; if not, please let us know whether we can stop monitoring this thread.


Thank you for the confirmation. If you need any additional information, please submit a new question as this thread will no longer be monitored.
