I have found a bug in Parallel Studio 16.0.2 where I get an error when computing the SVD with GESDD in the Python package SciPy. It can be reproduced on an MKL-built SciPy with this array (fail.npy), which is finite (contains no NaN or inf):

```
>>> import numpy as np
>>> from scipy import linalg
>>> linalg.svd(np.load('fail.npy'), full_matrices=False)
Traceback (most recent call last):
  File "<string>", line 1, in <module>
  File "/home/larsoner/.local/lib/python2.7/site-packages/scipy/linalg/decomp_svd.py", line 119, in svd
    raise LinAlgError("SVD did not converge")
numpy.linalg.linalg.LinAlgError: SVD did not converge
```
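The reproduction above could be wrapped in a small standalone check. This is only a sketch, not the script used in this thread: the helper name `svd_converges` is hypothetical. It first confirms the claim that the input is finite, so that a NaN/inf input is not mistaken for a convergence failure, and then reports whether the default SVD call converges.

```python
import numpy as np
from scipy import linalg


def svd_converges(a):
    """Return True if scipy.linalg.svd succeeds on `a`, False on LinAlgError.

    Raises ValueError if `a` contains NaN or inf, so a non-finite input is
    reported separately from a genuine convergence failure.
    """
    if not np.isfinite(a).all():
        raise ValueError("input contains NaN or inf")
    try:
        # Default driver: GESDD (the only driver in SciPy 0.17).
        linalg.svd(a, full_matrices=False)
    except linalg.LinAlgError:
        return False
    return True
```

On an affected build one would expect `svd_converges(np.load('fail.npy'))` to return `False`. `scipy.linalg.svd` already checks finiteness by default (`check_finite=True`); the explicit check just makes the distinction visible.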

I am curious whether anyone has insight into why this fails, or can reproduce it themselves. I also have access to older MKL versions, so if it's helpful I could check whether the error occurs with those, too.

I have tried this with MKL-enabled Anaconda, and it does not fail there, although I do see similar failures with other arrays under Anaconda; these seem to happen only on systems with SSE4.2 but without AVX.

I recently worked on SciPy's SVD routines to add a wrapper for a GESVD backend (to complement the existing GESDD driver), and this command passes on bleeding-edge SciPy, so the problem does seem to be specific to the GESDD implementation:

```
>>> linalg.svd(np.load('fail.npy'), full_matrices=False, lapack_driver='gesvd')
```
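Until a fixed MKL is available, one possible workaround is to try GESDD first and retry with GESVD only when it fails to converge. This is a minimal sketch. It assumes SciPy 0.18 or later, where `scipy.linalg.svd` accepts `lapack_driver`. The wrapper name `svd_with_fallback` is hypothetical.

```python
import numpy as np
from scipy import linalg


def svd_with_fallback(a, full_matrices=False):
    """Compute the SVD with GESDD, retrying with GESVD if GESDD fails.

    Assumes SciPy >= 0.18 (for the `lapack_driver` keyword).
    Returns the usual (U, s, Vh) tuple.
    """
    try:
        # GESDD: divide-and-conquer, usually the faster driver.
        return linalg.svd(a, full_matrices=full_matrices, lapack_driver="gesdd")
    except linalg.LinAlgError:
        # GESVD: QR-iteration based, slower but a different code path.
        return linalg.svd(a, full_matrices=full_matrices, lapack_driver="gesvd")
```

For example, `u, s, vh = svd_with_fallback(np.load('fail.npy'))`. The design keeps the faster driver as the default and pays the GESVD cost only on failure.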

Hi Eric,

I did not manage to reproduce your problem; however, I was able to see the "similar failures with other arrays", namely with the bad.npy file.

Indeed, the problem occurred only on non-AVX architectures. I also checked with the pre-release MKL 11.3.3, and the test passed. I hope this issue will go away completely with that new release.

Best regards,

Konstantin

Great! I saw something about signing up for the MKL beta; I'll look into that to see whether it fixes my problem locally.

After recompiling the latest NumPy and SciPy and running:

```
>>> import numpy as np
>>> from scipy.linalg import svd
>>> fname = 'fail.npy'  # also run with 'bad.npy'
>>> svd(np.load(fname), full_matrices=False)
```

On 16.0.2 and 16.0.3 (I just got an email about it today), "bad.npy" works, but "fail.npy" still fails as above.

Konstantin, do you have access to a machine with no AVX but with SSE4.2 to see if you can replicate?
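To check whether a given Linux machine falls into the "SSE4.2 but no AVX" category, one could read the `flags` line of `/proc/cpuinfo`. The sketch below is Linux-only and assumes the standard `flags : ...` layout shown later in this thread. The helper names are hypothetical.

```python
def parse_cpu_flags(cpuinfo_text):
    """Return the set of flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Real cpuinfo uses tabs before the colon, so strip the key.
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def is_sse42_without_avx(flags):
    """True for CPUs (e.g. Nehalem/Westmere) that report sse4_2 but not avx."""
    return "sse4_2" in flags and "avx" not in flags


def read_local_flags(path="/proc/cpuinfo"):
    """Linux-only: read and parse the flags of the local CPU."""
    with open(path) as f:
        return parse_cpu_flags(f.read())
```

For example, `is_sse42_without_avx(read_local_flags())` would be `True` on the 980X system listed further down and `False` on the Xeon E5-2630 v2.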

I have found another matrix that fails on current Anaconda with MKL:

https://staff.washington.edu/larsoner/bad_avx.npy

This passes on my machine (SSE4.2 support only), but fails on a machine with AVX and up-to-date Anaconda. Unfortunately I can't build scipy on that machine (it's not mine), but in case anyone wants to try another matrix, there it is.

FWIW, this actually isn't with the Intel Distribution for Python, but rather with Ubuntu's default system Python and a custom-built SciPy. In theory it shouldn't matter, though, since hopefully the same arrays get passed to the underlying Fortran routines either way.

Intel Python Beta has MKL 11.3.2. I was not able to reproduce the problem with https://staff.washington.edu/larsoner/fail.npy on SSE4.2 (e.g. Nehalem) or on a modern machine with AVX.

I was able to reproduce the problem with https://staff.washington.edu/larsoner/bad.npy on NHM (Nehalem), but not on AVX. Our next release will have MKL 11.3.3, which should fix the problem.

You can emulate different machines with SDE: https://software.intel.com/en-us/articles/intel-software-development-emulator.

```
sde-external-7.41.0-2016-03-03-win\sde -nhm -- c:\IntelPython35\python fail.py
```

The -nhm argument makes it emulate a Nehalem, which has SSE4.2, but not AVX.
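The same SDE invocation could be repeated for each CPU target mentioned in this thread (`-nhm`, `-ivb`, `-hsw`). This is a sketch only: the helper names are hypothetical, the SDE path is whatever your install uses, and it assumes `fail.py` exits with a nonzero status when the SVD fails. An uncaught `LinAlgError` already does that.

```python
import subprocess
import sys

# SDE targets mentioned in this thread: Nehalem (SSE4.2, no AVX),
# Ivy Bridge (AVX), Haswell (AVX2/FMA).
SDE_TARGETS = ("-nhm", "-ivb", "-hsw")


def sde_command(sde_path, target, script, python=sys.executable):
    """Build the argument list: <sde> <target> -- <python> <script>."""
    return [sde_path, target, "--", python, script]


def run_under_sde(sde_path, script, targets=SDE_TARGETS):
    """Run `script` once per emulated target; return {target: exit code}."""
    results = {}
    for target in targets:
        proc = subprocess.run(sde_command(sde_path, target, script))
        results[target] = proc.returncode
    return results
```

For example, `run_under_sde(r"sde-external-7.41.0-2016-03-03-win\sde", "fail.py")` would return one exit code per target, so a nonzero value marks an emulated CPU on which the SVD failed.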

Thanks for the info. Do you have time to try my third bad one, which fails on Anaconda with AVX:

https://staff.washington.edu/larsoner/bad_avx.npy

I'll look into trying the SDE. I'm on a Nehalem CPU, so there's no need for me to emulate one to get the SSE4.2 failures. I'll see whether the emulator lets me run AVX code; that could indeed be useful.

I could not get https://staff.washington.edu/larsoner/bad_avx.npy to fail with AVX. I tried both Anaconda and Intel Python. Can you give more information about the system you are testing? Linux or Windows? Python 2 or Python 3? Haswell, or an SDE target that fails (e.g. -hsw)?

The system that fails runs Linux Mint 17 x86_64, with two Intel(R) Xeon(R) CPUs (E5-2630 v2 @ 2.60GHz). The other failures I referenced above (on non-AVX, SSE4.2 systems) were also on 64-bit Linux.

Anaconda Python is up to date, and Python launches with:

```
Python 2.7.11 |Continuum Analytics, Inc.| (default, Dec 6 2015, 18:08:32)
[GCC 4.4.7 20120313 (Red Hat 4.4.7-1)] on linux2
```

Anaconda has the following packages (trimmed list here) installed:

```
conda        4.0.5    py27_0
conda-env    2.4.5    py27_0
libgcc       5.2.0    0
libgfortran  3.0.0    1
mkl          11.3.1   0
numpy        1.11.0   py27_0
scipy        0.17.0   np111py27_3
```

Let me know if you need any other information. It will make my day if you also have access to a 64-bit Linux platform to test on :)
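Most of the details requested above (OS, architecture, Python, NumPy and SciPy versions) can be collected in one go from Python. This is a sketch with a hypothetical helper name. It uses only the standard `platform` module and the packages' `__version__` attributes.

```python
import platform

import numpy as np
import scipy


def environment_report():
    """Collect OS, architecture, Python, NumPy and SciPy version details."""
    return {
        "system": platform.system(),
        "machine": platform.machine(),
        "python": platform.python_version(),
        "numpy": np.__version__,
        "scipy": scipy.__version__,
    }
```

Printing `sorted(environment_report().items())` gives a compact summary. Calling `np.show_config()` and `scipy.show_config()` additionally shows which BLAS/LAPACK libraries each package was built against.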

I tried with up-to-date Anaconda Python 2.7 on Ubuntu with an i5-5300U (Broadwell, with AVX) and cannot reproduce the problem with bad_avx.npy.

I'm a bit baffled; I just re-confirmed from a fresh start:

```
$ mkdir temp
$ cd temp
$ wget -q http://repo.continuum.io/miniconda/Miniconda-latest-Linux-x86_64.sh -O miniconda.sh
$ chmod +x miniconda.sh
$ ./miniconda.sh -b -p ./miniconda
$ export PATH=./miniconda/bin:$PATH
$ conda update --yes --quiet conda
$ conda install numpy scipy
The following NEW packages will be INSTALLED:
    libgcc:      5.2.0-0
    libgfortran: 3.0.0-1
    mkl:         11.3.1-0
    numpy:       1.11.0-py27_0
    scipy:       0.17.0-np111py27_3
$ wget https://staff.washington.edu/larsoner/bad_avx.npy
$ which python
./miniconda/bin/python
$ python -c "import numpy as np; from scipy import linalg; linalg.svd(np.load('bad_avx.npy'), full_matrices=False)"
Traceback (most recent call last):
  File "<string>", line 1, in <module>
  File "/home/ericlarson/temp/miniconda/lib/python2.7/site-packages/scipy/linalg/decomp_svd.py", line 105, in svd
    raise LinAlgError("SVD did not converge")
numpy.linalg.linalg.LinAlgError: SVD did not converge
```

Could there be some difference in instruction-set extensions between my machine and yours? Here are mine from /proc/cpuinfo:

```
fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc aperfmperf eagerfpu pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm ida arat epb xsaveopt pln pts dtherm tpr_shadow vnmi flexpriority ept vpid fsgsbase smep erms
```

I found a Haswell (HSW) machine, followed your steps exactly, and do not see the error. Here is the cpuinfo:

```
processor : 15
vendor_id : GenuineIntel
cpu family : 6
model : 63
model name : Intel(R) Xeon(R) CPU E5-2630 v3 @ 2.40GHz
stepping : 2
microcode : 0x2b
cpu MHz : 1200.000
cache size : 20480 KB
physical id : 1
siblings : 8
core id : 7
cpu cores : 8
apicid : 30
initial apicid : 30
fpu : yes
fpu_exception : yes
cpuid level : 15
wp : yes
flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc aperfmperf eagerfpu pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm ida arat epb xsaveopt pln pts dtherm tpr_shadow vnmi flexpriority ept vpid fsgsbase tsc_adjust bmi1 avx2 smep bmi2 erms invpcid
bogomips : 4796.36
clflush size : 64
cache_alignment : 64
address sizes : 46 bits physical, 48 bits virtual
power management:
```

Could it be something in the environment that is different? Do you see the problem with:

```
env -i miniconda/bin/python -c "import numpy as np; from scipy import linalg; linalg.svd(np.load('bad_avx.npy'), full_matrices=False)"
```

Thanks for looking into it a bit. Comparing the lists, it looks like your CPU has extensions such as FMA, MOVBE, and ABM (as well as AVX2 and BMI1/BMI2) that neither my Xeon system (which I listed before) nor my Intel 980X system (SSE4.2 only; list not shown here yet) has. I don't know whether that matters at all, but I wouldn't be surprised if FMA were used in the underlying code. Is it possible in your emulator to disable that instruction set?

In any case, I get the error even with that (from a clean login to the machine):

```
~ $ cd temp/
~/temp $ env -i miniconda/bin/python -c "import numpy as np; from scipy import linalg; linalg.svd(np.load('bad_avx.npy'), full_matrices=False)"
Traceback (most recent call last):
  File "<string>", line 1, in <module>
  File "/home/ericlarson/temp/miniconda/lib/python2.7/site-packages/scipy/linalg/decomp_svd.py", line 105, in svd
    raise LinAlgError("SVD did not converge")
numpy.linalg.linalg.LinAlgError: SVD did not converge
```

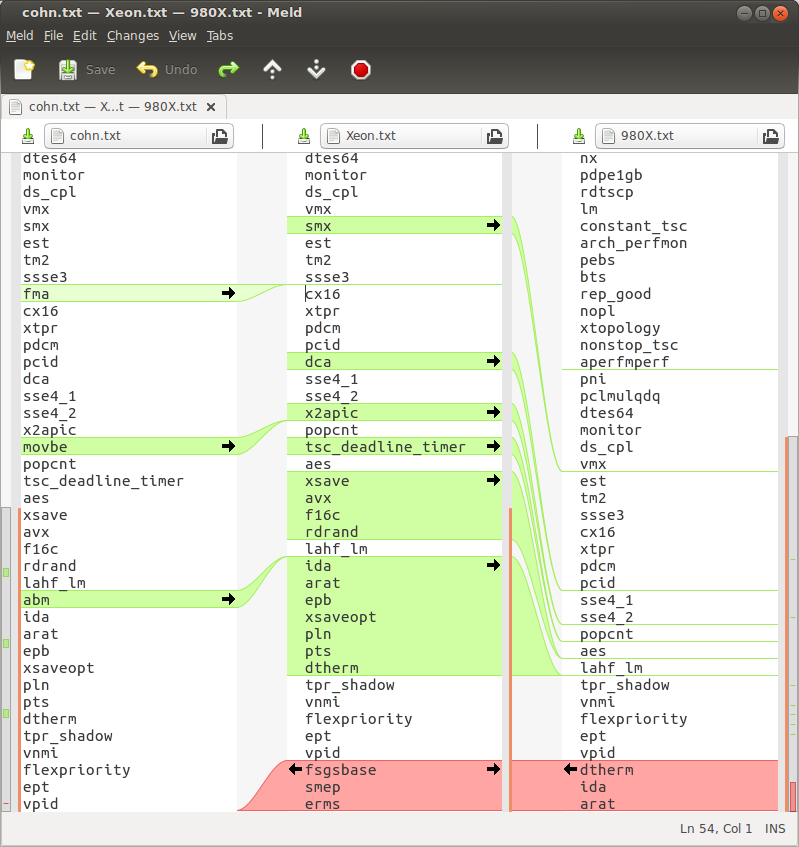

In case you're curious I diff'ed the CPU instruction sets across your system (cohn.txt), my Xeon/AVX system (Xeon), and my 980X system (980X):
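A comparison like the one in the image can also be done in a few lines. This is a sketch with a hypothetical helper name. It assumes each system's `flags` line has been copied from `/proc/cpuinfo` as a whitespace-separated string. For each system, it lists the flags that none of the other listed systems report.

```python
def diff_flags(systems):
    """Map each system label to the sorted flags no other system has.

    `systems` maps a label (e.g. "cohn", "Xeon", "980X") to a
    whitespace-separated flags string from /proc/cpuinfo.
    """
    sets = {name: set(flags.split()) for name, flags in systems.items()}
    unique = {}
    for name, flags in sets.items():
        # Union of every other system's flags (empty set if there are none).
        others = set().union(*(s for other, s in sets.items() if other != name))
        unique[name] = sorted(flags - others)
    return unique
```

Applied to the Haswell and Xeon E5-2630 v2 flag lists in this thread, the Haswell-only entries would include `fma`, `movbe`, `abm`, `avx2`, `bmi1` and `bmi2`.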

I showed your cpuinfo to the developer of SDE, who says -ivb should be the right target. It still doesn't fail. I will ask Arturov to pick this up, since he supports MKL (I work on Intel Python), and he might have some ideas.

Great, thanks for taking the time to look into this. Let me know if there's anything else I can do at my end, other than play with SDE (which I hope to do soon).

I found a Sandy Bridge (SNB) system and don't see the problem. Can you send me the full cpuinfo output? Here is mine:

```
processor : 31
vendor_id : GenuineIntel
cpu family : 6
model : 45
model name : Intel(R) Xeon(R) CPU E5-2670 0 @ 2.60GHz
stepping : 7
microcode : 0x70d
cpu MHz : 1200.000
cache size : 20480 KB
physical id : 1
siblings : 16
core id : 7
cpu cores : 8
apicid : 47
initial apicid : 47
fpu : yes
fpu_exception : yes
cpuid level : 13
wp : yes
flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc aperfmperf eagerfpu pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic popcnt tsc_deadline_timer aes xsave avx lahf_lm ida arat epb xsaveopt pln pts dtherm tpr_shadow vnmi flexpriority ept vpid
bogomips : 5188.49
clflush size : 64
cache_alignment : 64
address sizes : 46 bits physical, 48 bits virtual
power management:
```

Here is my 980X/SSE4.2 system:

```
processor : 11
vendor_id : GenuineIntel
cpu family : 6
model : 44
model name : Intel(R) Core(TM) i7 CPU X 980 @ 3.33GHz
stepping : 2
microcode : 0xc
cpu MHz : 1596.000
cache size : 12288 KB
physical id : 0
siblings : 12
core id : 0
cpu cores : 6
apicid : 1
initial apicid : 1
fpu : yes
fpu_exception : yes
cpuid level : 11
wp : yes
flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc aperfmperf pni pclmulqdq dtes64 monitor ds_cpl vmx est tm2 ssse3 cx16 xtpr pdcm pcid sse4_1 sse4_2 popcnt aes lahf_lm tpr_shadow vnmi flexpriority ept vpid dtherm ida arat
bugs :
bogomips : 6633.57
clflush size : 64
cache_alignment : 64
address sizes : 36 bits physical, 48 bits virtual
power management:
```

And here is the Xeon/AVX system:

```
processor : 23
vendor_id : GenuineIntel
cpu family : 6
model : 62
model name : Intel(R) Xeon(R) CPU E5-2630 v2 @ 2.60GHz
stepping : 4
microcode : 0x416
cpu MHz : 1200.000
cache size : 15360 KB
physical id : 1
siblings : 12
core id : 5
cpu cores : 6
apicid : 43
initial apicid : 43
fpu : yes
fpu_exception : yes
cpuid level : 13
wp : yes
flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc aperfmperf eagerfpu pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm ida arat epb xsaveopt pln pts dtherm tpr_shadow vnmi flexpriority ept vpid fsgsbase smep erms
bogomips : 5201.68
clflush size : 64
cache_alignment : 64
address sizes : 46 bits physical, 48 bits virtual
power management:
```

Just to summarize: I was unable to reproduce the problem with https://staff.washington.edu/larsoner/bad_avx.npy on SNB and HSW machines, or with IVB SDE emulation. The next thing to try is IVB hardware, but I don't have any.
