Hi, I have a custom-trained YOLOv5 model. When I convert it to FP32 and run Accuracy Checker on it, I get a reshape error. To verify my YAML configuration file, I tried the same configuration with the pre-trained YOLOv5 FP32 model, and Accuracy Checker works fine there. Is there any way to merge two channels in OpenVINO? I have attached a screenshot of the error.

The YAML configuration file I used:

models:
  - name: yolo_v5
    launchers:
      - framework: dlsdk
        model: /home/nga_hitech_ib/yolov5_MBU/yolov5s.xml
        weights: /home/nga_hitech_ib/yolov5_MBU/yolov5s.bin
        device: CPU
        adapter:
          type: yolo_v5
          anchors: "10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326"
          num: 3
          coords: 4
          classes: 80
          threshold: 0.001
          anchor_masks: [[0, 1, 2], [3, 4, 5], [6, 7, 8]]
          raw_output: True
          outputs:
            - '345'
            - '404'
            - '463'
    datasets:
      - name: COCO2017_detection_80cl
        data_source: /home/nga_hitech_ib/model_test/coco_2017/val2017
        annotation_conversion:
          converter: mscoco_detection
          annotation_file: /home/nga_hitech_ib/model_test/coco_2017/instances_val2017.json
          images_dir: /home/nga_hitech_ib/model_test/coco_2017/val2017
          has_background: false
          use_full_label_map: false
        preprocessing:
          - type: resize
            size: 640
        postprocessing:
          - type: resize_prediction_boxes
          - type: filter
            apply_to: prediction
            min_confidence: 0.001
            remove_filtered: true
          - type: nms
            overlap: 0.5
          - type: clip_boxes
            apply_to: prediction
        metrics:
          - type: map
            integral: 11point
            ignore_difficult: true
            presenter: print_scalar
          - name: AP@0.5
            type: coco_precision
            max_detections: 100
            threshold: 0.5
          - name: AP@0.5:0.05:95
            type: coco_precision
            max_detections: 100
            threshold: '0.5:0.05:0.95'

Hi @Wan_Intel,

I remembered that my model was trained with only 4 classes, so I changed classes from 80 to 4 in the configuration file, and it is working now. Thank you so much for your help.
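For anyone hitting the same reshape error, the fix follows from how a raw YOLOv5 head output packs its channels: each anchor carries the box coordinates, one objectness score, and one score per class. The snippet below is a minimal sketch of that arithmetic, assuming three anchors per output scale as in the anchor_masks of the configuration; `expected_head_channels` is a hypothetical helper, not part of Accuracy Checker.

```python
def expected_head_channels(anchors_per_scale: int, coords: int, classes: int) -> int:
    """Channel count of one raw YOLOv5 head output.

    Per anchor: box coordinates + 1 objectness score + one score per class.
    """
    return anchors_per_scale * (coords + 1 + classes)


# Pre-trained COCO model: 80 classes
print(expected_head_channels(3, 4, 80))  # 255

# Custom model from this thread: 4 classes
print(expected_head_channels(3, 4, 4))   # 27
```

If the channel count reported for the IR outputs does not match the value computed from the classes setting in the YAML file, the adapter most likely cannot reshape the tensor, which would be consistent with the error discussed in this thread.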

Hi Ashwin_J_S,

Thanks for reaching out to us.

For your information, I have downloaded yolov5s.pt from v6.1 - TensorRT, TensorFlow Edge TPU and OpenVINO Export and Inference GitHub page, and converted the pre-trained model into Intermediate Representation using the following command:

python export.py --weights yolov5s.pt --include openvino

Next, I ran Accuracy Checker with the generated IR files and encountered an error similar to yours. The outputs of the IR file (pre-trained model) are the same as those of your custom model:

<Output: names[output] shape{1,25200,85} type: f32>

<Output: names[339] shape{1,3,80,80,85} type: f32>

<Output: names[391] shape{1,3,40,40,85} type: f32>

<Output: names[443] shape{1,3,20,20,85} type: f32>
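As a rough sanity check, the shapes above can be reproduced with a little arithmetic. This is only a sketch, assuming a 640x640 input and detection strides of 8, 16 and 32 for yolov5s (the strides are an assumption; they are not stated in this thread):

```python
INPUT_SIZE = 640
STRIDES = (8, 16, 32)      # assumed YOLOv5s detection strides
ANCHORS_PER_SCALE = 3
CLASSES = 80               # COCO pre-trained model

# Grid size of each detection scale
grids = [INPUT_SIZE // stride for stride in STRIDES]

# Total number of predictions in the concatenated "output" tensor
predictions = sum(ANCHORS_PER_SCALE * g * g for g in grids)

# Values per prediction: 4 box coordinates + 1 objectness + class scores
values_per_prediction = 4 + 1 + CLASSES

print(grids)                               # [80, 40, 20]
print(predictions, values_per_prediction)  # 25200 85
```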

Referring to Public Pre-Trained Models Device Support, yolov5s is not a supported model yet.

On another note, could you please share your source model and custom model with us for further investigation?

Regards,

Wan

Hello @Wan_Intel, thank you for looking into this issue. Unfortunately, I cannot share the custom model with you because it is my company's IP and I am not allowed to share it. Are there any preprocessing steps I can apply to change the output layers?

Also, I exported my base model to ONNX first and then converted it to FP32 using mo.py.

Hi Ashwin_J_S,

Thanks for your information.

Referring to your previous post, you exported your base model to ONNX and then used Model Optimizer to convert it into Intermediate Representation FP32 data type.

I have validated your steps for converting the base yolov5s model to Intermediate Representation, and the resulting IR files worked with Accuracy Checker.

Could you please try the following steps to convert your base model to IR and use the IR file with Accuracy Checker again?

Note: I’m using OpenVINO™ toolkit version 2022.1

Step 1: Convert the ONNX model to IR files:

mo \
  --input_model yolov5s.onnx \
  --output_dir <output_dir> \
  --output Conv_198,Conv_233,Conv_268 \
  --data_type FP32 \
  --scale_values=images[255] \
  --input_shape=[1,3,640,640] \
  --input=images

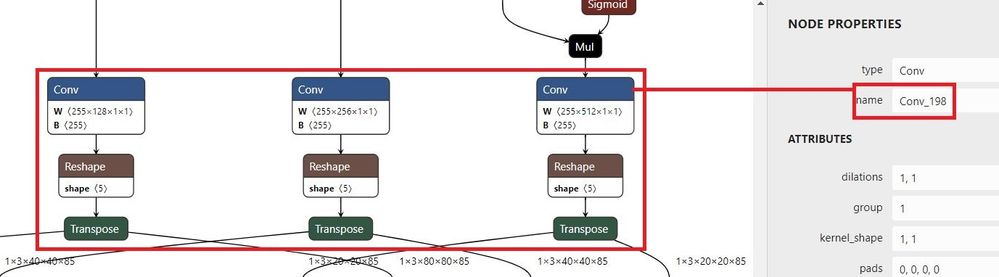

For your information, the list of --output can be viewed in Netron app as follow:

CTRL + F search for Transpose, and click on the Conv node

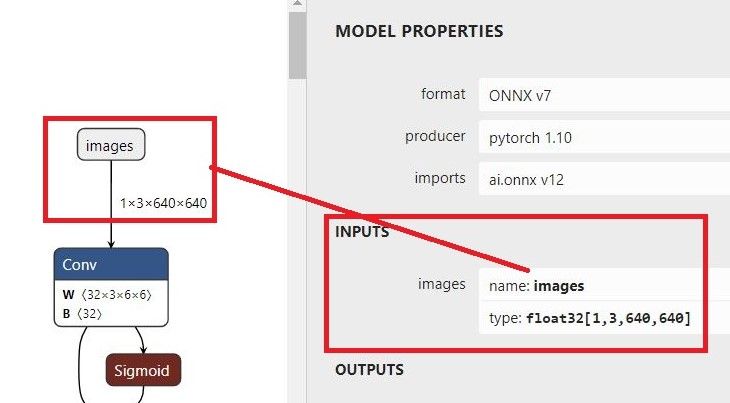

Next, the --input can be viewed in Netron app as follow:

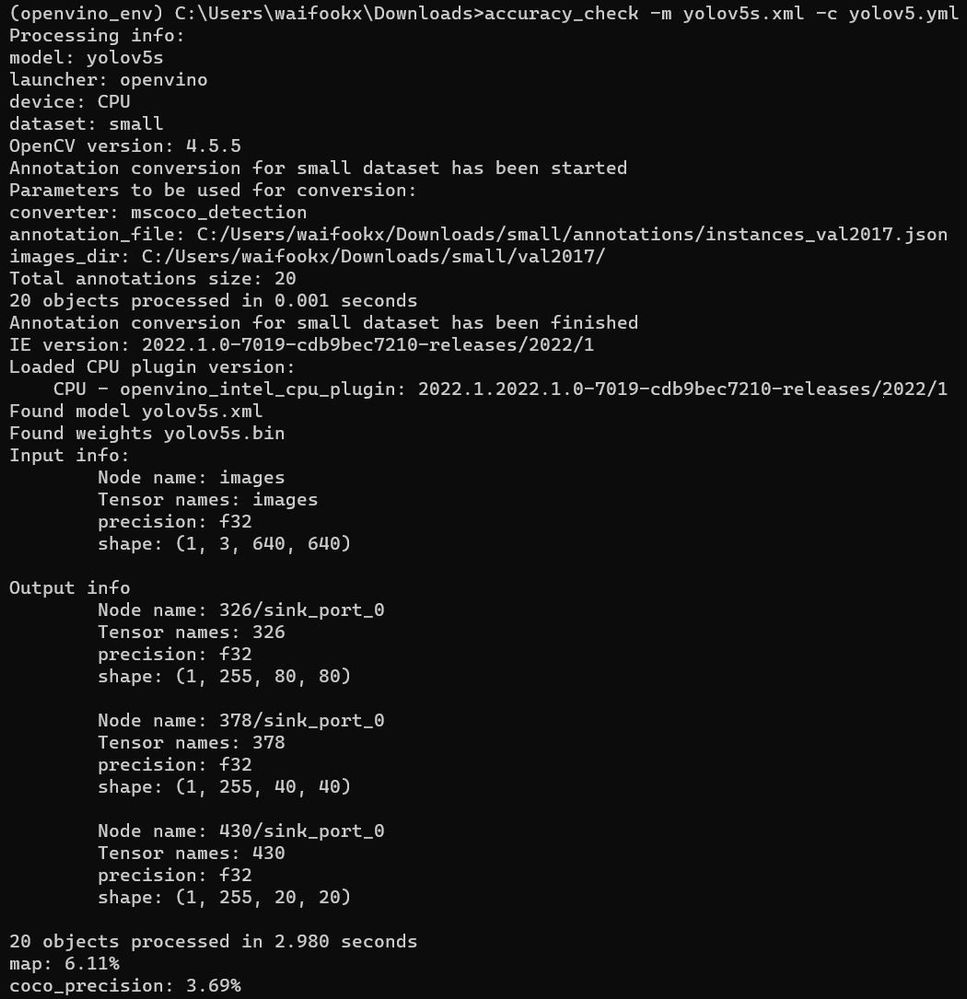
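The same lookup can also be scripted instead of done by hand in Netron. The following is a sketch only, assuming the `onnx` Python package is installed and that each detection head in the exported graph follows the Conv -> Reshape -> Transpose pattern described in this thread; `find_head_convs` and `head_convs_from_file` are hypothetical helper names, and the Reshape/Transpose node names in the demo are made up for illustration.

```python
from types import SimpleNamespace


def find_head_convs(nodes):
    """Return names of Conv nodes that feed a Transpose, optionally via a Reshape."""
    # Map every tensor name to the node that produces it
    producer = {out: node for node in nodes for out in node.output}
    heads = []
    for node in nodes:
        if node.op_type != "Transpose":
            continue
        prev = producer.get(node.input[0])
        # Step over the Reshape that sits between Conv and Transpose
        if prev is not None and prev.op_type == "Reshape":
            prev = producer.get(prev.input[0])
        if prev is not None and prev.op_type == "Conv":
            heads.append(prev.name)
    return heads


def head_convs_from_file(onnx_path):
    """Load an ONNX file and return (graph input names, head Conv names)."""
    import onnx  # imported lazily so the helper above works without onnx

    graph = onnx.load(onnx_path).graph
    return [i.name for i in graph.input], find_head_convs(graph.node)


# Tiny stand-in graph so the logic can be tried without a model file
demo_nodes = [
    SimpleNamespace(name="Conv_198", op_type="Conv", input=["x"], output=["a"]),
    SimpleNamespace(name="Reshape_demo", op_type="Reshape", input=["a", "shape"], output=["b"]),
    SimpleNamespace(name="Transpose_demo", op_type="Transpose", input=["b"], output=["c"]),
]
print(find_head_convs(demo_nodes))  # ['Conv_198']
```

With a real model, `head_convs_from_file("yolov5s.onnx")` would return candidates for --input and --output; it is still worth confirming them in Netron, since older ONNX exports may also list initializers among the graph inputs.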

Step 2: Use the IR files with Accuracy Checker:

The outputs from the new IR files are shown as follows:

<Output: names[326] shape{1,255,80,80} type: f32>

<Output: names[378] shape{1,255,40,40} type: f32>

<Output: names[430] shape{1,255,20,20} type: f32>

The YAML configuration file I used:

models:
  - name: yolov5s
    launchers:
      - framework: openvino
        device: CPU
        adapter:
          type: yolo_v5
          anchors: "10,13, 16,30, 33,23, 30,61, 62,45, 59,119, 116,90, 156,198, 373,326"
          num: 9
          coords: 4
          classes: 80
          anchor_masks: [[6, 7, 8], [3, 4, 5], [0, 1, 2]]
          outputs:
            - '326'
            - '378'
            - '430'
    datasets:
      - name: small
        data_source: "C:/Users/waifookx/Downloads/small/val2017/"
        annotation_conversion:
          converter: mscoco_detection
          annotation_file: "C:/Users/waifookx/Downloads/small/annotations/instances_val2017.json"
          images_dir: "C:/Users/waifookx/Downloads/small/val2017/"
        preprocessing:
          - type: resize
            size: 640
        postprocessing:
          - type: resize_prediction_boxes
          - type: filter
            apply_to: prediction
            min_confidence: 0.001
            remove_filtered: True
          - type: nms
            overlap: 0.5
          - type: clip_boxes
            apply_to: prediction
        metrics:
          - type: map
            integral: 11point
            ignore_difficult: true
            presenter: print_scalar
          - type: coco_precision
            max_detections: 100
            threshold: 0.5

The result:

Hope it helps.

Regards,

Wan

Hi @Wan_Intel, I tried your mo.py method, but I am still facing issues with my custom-trained model.

A few observations from my side: in my custom model there is always a Reshape layer before the Transpose layer (I have attached the Netron images). Are there any preprocessing steps I am missing here?

Also, I was previously able to run Accuracy Checker on the pre-trained yolov5s model.

Hi Ashwin_J_S,

I have checked the pre-trained model. Referring to the previous post, you will notice that the pre-trained model also has a Reshape layer before the Transpose layer.

Could you please try adding do_reshape to the YAML file under the Yolov3/5 adapter?

do_reshape - forces reshape output tensor to [B,Cy,Cx] or [Cy,Cx,B] format, depending on output_format value ([B,Cy,Cx] by default). You may need to specify cells value.

For more information, please refer to classes from Deep Learning Accuracy Validation Framework.

Regards,

Wan

Hi @Wan_Intel, I added the parameters to the YAML file, but I am having trouble defining the cells value. Can you help me with the right way to set it?

Hi Ashwin_J_S,

Thanks for your information. Sorry for the inconvenience. It’s kind of difficult for us to replicate the actual error without the model.

On another note, I noticed that the error we encountered: "Cannot reshape array of size XXX to [X,X,X,X]" will occur if the Intermediate Representation's output data layout is NCDHW. When I check the ONNX's output data layout using Netron App, the output data layout of the ONNX model was NCDHW. If I convert the ONNX model to Intermediate Representation using the export.py from the Ultralytics/yolov5 repo, the output data layouts of the Intermediate Representation will be NCDHW as well.

Next, I converted the ONNX model into Intermediate Representation using the latest Model Optimizer from the latest OpenVINO Development Tools, specifying the additional Model Optimizer arguments --output, --input, and --scale_values. The output data layout of the resulting Intermediate Representation was NCHW, and the Intermediate Representation worked with the latest Accuracy Checker and the YAML file that I shared in the previous post.

To make sure that your custom model can be converted to the Intermediate Representation with the output data layout of NCHW instead of NCDHW, please try the steps below to convert your custom yolov5s.pt into the ONNX model using the repo from Ultralytics/yolov5 GitHub repo, and then convert the ONNX model into Intermediate Representation using the latest Model Optimizer from the latest OpenVINO Development Tools.

1. Git clone the latest release of v6.1 - TensorRT, TensorFlow Edge TPU and OpenVINO Export and Inference. Refer to Section Quick Start Examples to clone repo and install requirements.txt in a Python>=3.7.0 environment, including PyTorch>=1.7.

2. Export your custom yolov5s.pt into ONNX model with the following command:

python export.py --weights yolov5s.pt --include onnx

Refer to Usage examples (ONNX shown) in here.

3. Install the latest OpenVINO™ Development Tools. Refer to Section Install the OpenVINO™ Development Tools Package for more information.

4. Use the following command to convert your ONNX model into Intermediate Representation:

mo --input_model <ONNX_model> --output <output_names> --data_type FP32 --scale_values=<input_names>[255] --input_shape=<input_shape> --input=<input_names>

Note, please refer to the previous post on how to define output_names, input_shape, and input_names. For more information on when to specify the Model Optimizer arguments, please refer to Convert Model with Model Optimizer to explore further.

After you have successfully converted your custom model to ONNX and then to Intermediate Representation, please check the output data layout of your Intermediate Representation to make sure it is NCHW, not NCDHW. If the output data layout remains NCDHW, please share the command that you used to convert your ONNX model with us. Also, please share the output_names, input_names, and input_shapes of the ONNX model so that we can confirm that the Model Optimizer command you used is correct.
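One way to perform this check is to list the IR outputs from Python. The snippet below is a sketch under a few assumptions: OpenVINO 2022.1 or later is installed, the model has static output shapes (as it should when --input_shape is given), and, following the terminology used in this thread, a 4-D output is labelled NCHW and a 5-D output NCDHW. `layout_for_rank` and `print_ir_outputs` are hypothetical helper names.

```python
def layout_for_rank(shape):
    """Label an output shape the way this thread does: 4-D as NCHW, 5-D as NCDHW."""
    rank = len(shape)
    if rank == 4:
        return "NCHW"
    if rank == 5:
        return "NCDHW"
    return f"rank-{rank}"


def print_ir_outputs(xml_path):
    """Print name, shape and layout label for every output of an IR file."""
    from openvino.runtime import Core  # imported lazily; needs OpenVINO installed

    model = Core().read_model(xml_path)
    for out in model.outputs:
        shape = tuple(out.shape)  # assumes static output shapes
        print(out.get_any_name(), shape, layout_for_rank(shape))


# Shapes quoted earlier in this thread
print(layout_for_rank((1, 3, 80, 80, 85)))  # NCDHW
print(layout_for_rank((1, 255, 80, 80)))    # NCHW
```

Calling `print_ir_outputs("<path_to_model>.xml")` on the converted model should then show whether the three heads come out as 4-D tensors.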

If the Intermediate Representation's output data layout is NCHW, please use the Intermediate Representation with the latest Accuracy Checker from the OpenVINO Development Tools. Also, please create a YAML file like the one I shared in the previous post. You have to check the output names of the Intermediate Representation and use them in the YAML file. If you still encounter the error "Cannot reshape array of size XXX into shape [X,X,X,X]", please get back to us.

Regards,

Wan

Hi, I have updated OpenVINO to the latest version and tried your command, but Model Optimizer is still the older version. How should I fix it?

Hi Ashwin_J_S,

Thanks for your information.

I recommend you use a virtual environment to avoid dependency conflicts.

Please follow the steps below to create virtual environment, activate virtual environment and install OpenVINO development package:

Step 1: Set up Python Virtual Environment

sudo apt install python3-venv python3-pip

python3 -m pip install --user virtualenv

Step 2: Activate Virtual Environment

python3 -m venv openvino_env

source openvino_env/bin/activate

Step 3: Set up and Update PIP to the highest version

python -m pip install --upgrade pip

Step 4: Install package

pip install "openvino-dev[tensorflow2,mxnet,caffe,kaldi,onnx,pytorch]"

Step 5: Verify that the package is installed

· To verify that the developer package is properly installed, run the command below

mo -h

You will see the help message for Model Optimizer if installation finished successfully.

· To verify that OpenVINO Runtime from the runtime package is available, run the command below:

python -c "from openvino.runtime import Core"

If the installation was successful, you will not see any error messages (no console output).
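If mo still reports an older version after the upgrade, one thing worth checking is whether the mo found on PATH actually lives inside the active virtual environment. The following is a small standard-library sketch of that check; `belongs_to_env` is a hypothetical helper, not an OpenVINO tool.

```python
import shutil
import sys
from pathlib import Path


def belongs_to_env(executable, prefix):
    """Return True if the executable path is located under the environment prefix."""
    if executable is None:
        return False
    try:
        Path(executable).relative_to(Path(prefix))
        return True
    except ValueError:
        # relative_to raises ValueError when the path is outside the prefix
        return False


if __name__ == "__main__":
    mo_path = shutil.which("mo")  # first "mo" found on PATH, or None
    print("mo found at:", mo_path)
    print("active environment:", sys.prefix)
    print("mo belongs to this environment:", belongs_to_env(mo_path, sys.prefix))
```

If the last line prints False while the virtual environment is active, the shell is probably picking up an mo from an older system-wide installation.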

Hope it helps.

Regards,

Wan

With the latest version, I am getting just one output layer. I used the configuration file you shared above, changing only the output layer.

Hi Ashwin_J_S,

Great to know that you can convert your model using the latest OpenVINO.

It seems that my YAML file is not suitable for your custom model. Did you encounter a different error when using your own YAML file?

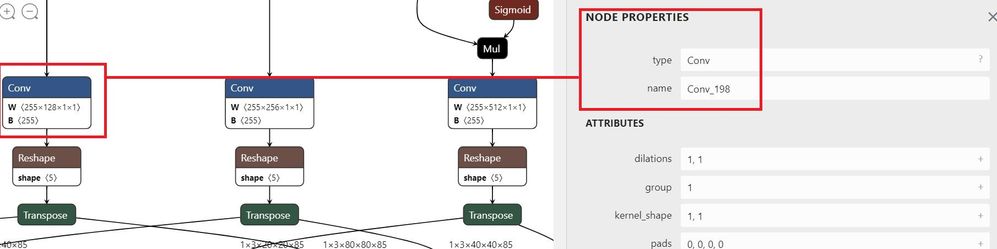

On another note, could you please screenshot your ONNX model’s Convolution layer before each transpose layer for further investigation?

Next, click on the Conv layer and screenshot it, I would like to know the name of the Conv layer.

Thanks.

Regards,

Wan

I am facing the same issue with my own configuration file too. I have attached the requested images.

Hi Ashwin_J_S,

Thanks for sharing the information.

I noticed that the names of the Conv layers are Conv_305, Conv_339, and Conv_271. Could you please pass these Conv layer names to the Model Optimizer --output argument?

--output Conv_271,Conv_305,Conv_339

After you have converted the model into IR, you can run Accuracy Checker with your model and your YAML file again.

Regards,

Wan

Hi @Wan_Intel, I used the following command to generate the IR files:

mo --input_model last.onnx --output Conv_271,Conv_305,Conv_339 --data_type FP32 --scale_values=images[255] --input_shape=[1,3,640,640] --input=images

But the output layers are different in the generated XML file. I updated the configuration file accordingly and got the error shown in the attachment.

Hi Ashwin_J_S,

Thanks for sharing the Model Optimizer command and the error with us.

I noticed that your output shapes are as follows:

<1, 27, 80, 80>

<1, 27, 40, 40>

<1, 27, 20, 20>

Could you please try the following command to convert your custom model into IR again? After that, you can proceed to use the IR with Accuracy Checker.

mo --input_model last.onnx --output Conv_271,Conv_305,Conv_339 --data_type FP32 --scale_values=images[27] --input_shape=[1,3,640,640] --input=images

Thanks, and regards,

Wan

Hi Ashwin_J_S,

Could you share the error you encountered with us?

Regards,

Wan

Hi Ashwin_J_S,

Could you share the input node using the Netron app?

Regards,

Wan
