I followed the tutorial here and was able to successfully run a caffe based SSD Mobilenet model trained on the COCO Dataset on my Raspberry Pi 3,

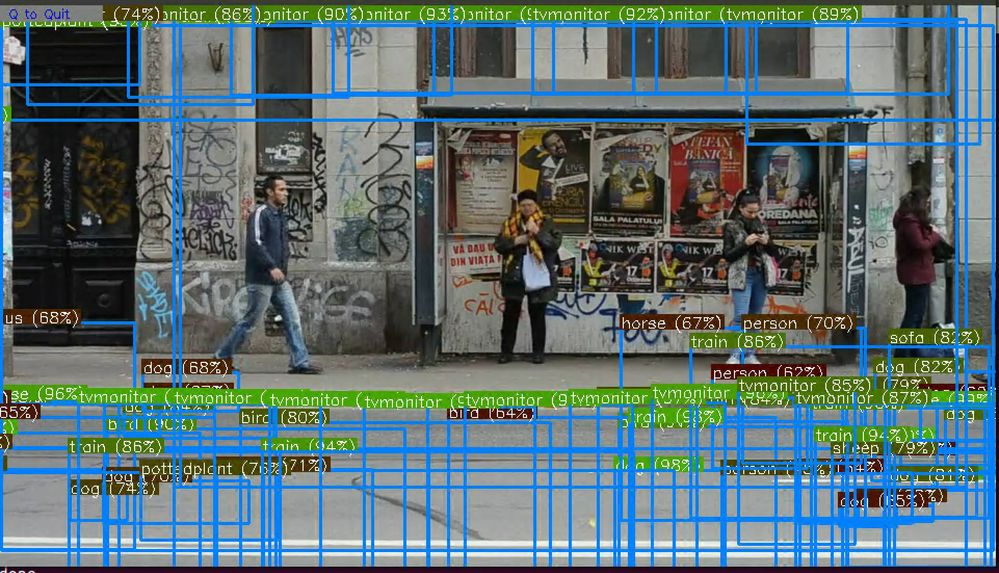

I attempted to train my own 2 class (3 with background) Mobilenet SSD model and converted it to the NCS format (without any errors while conversion). However, when I run this model on my Raspberry Pi my output looks like such:

https://drive.google.com/file/d/1H64Re-d-sstMovGU_GcQL_QfjZnsHey-/view?usp=sharing

I used this version of Caffe:

https://github.com/weiliu89/caffe/tree/ssd

I used this repo to get the MobileNet-SSD demo running, and I followed its instructions to train my own model:

https://github.com/chuanqi305/MobileNet-SSD

The Python code I use to run real-time inference is from the tutorial linked above, but I am linking it again here for easy viewing:

https://pastebin.com/wu32HhTw

Finally, here are the caffemodel and prototxt that I am trying to deploy:

https://drive.google.com/open?id=1AWqduveRb3_AhpP-2jZIfDW1QYPvTO4F

https://drive.google.com/open?id=1zEpfjD3LO1QyNJ1iRv4q9Z6Y3iNabiEh

And I convert the graph using the following:

```
mvNCCompile models/MobileNetSSD_deploy.prototxt \
    -w models/MobileNetSSD_deploy.caffemodel \
    -s 12 -is 300 300 -o graphs/mobilenetgraph
```

Kindly advise on what I am missing here: how can I get my custom model to run as seamlessly as the example model from the MobileNet-SSD repo above?


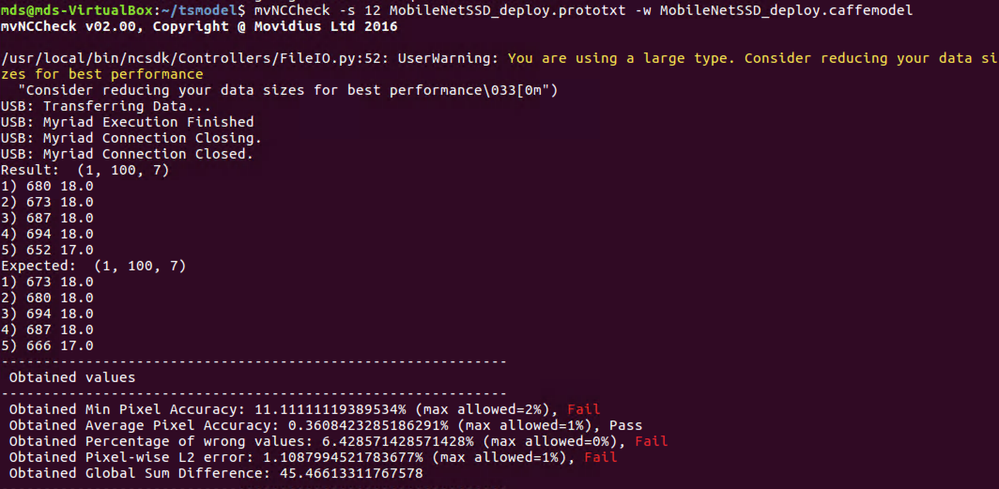

Yes, I ran ./test.sh and got an accuracy of over 0.99, which is what confused me. I ran the mvNCCheck command and got the following output:

https://drive.google.com/file/d/1HTJ0cgs61QY1q_m7Jd47F7cp-ILtZuar/view?usp=sharing

As you can see in the image, there were 3 fails in the obtained values.


OK, I now have the same problem with my retrained model.

A lot of nonsense predictions:

mvNCCheck fails:

Does anyone know how to fix it?


@vivekvyas94 @Manto Try changing the confidence_threshold parameter of the last DetectionOutput layer. I was unable to access @vivekvyas94's prototxt file, but please try setting this value to 0.3.

For example, you can see the confidence_threshold parameter in the DetectionOutput layer below:

```
layer {
  name: "detection_out"
  type: "DetectionOutput"
  bottom: "mbox_loc"
  bottom: "mbox_conf_flatten"
  bottom: "mbox_priorbox"
  top: "detection_out"
  include {
    phase: TEST
  }
  detection_output_param {
    num_classes: 21
    share_location: true
    background_label_id: 0
    nms_param {
      nms_threshold: 0.45
      top_k: 100
    }
    code_type: CENTER_SIZE
    keep_top_k: 100
    confidence_threshold: 0.3
  }
}
```
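On the host side, the inference script still has to unpack the graph output, and it may apply its own score cutoff on top of the layer's confidence_threshold. A minimal sketch of that step, assuming the flat output layout used by the NCSDK v1 SSD MobileNet examples: element 0 holds the number of valid boxes, and box i occupies the 7 values starting at index 7 + 7*i as (image id, class id, score, xmin, ymin, xmax, ymax), with coordinates normalized to [0, 1]. Check this against the script you actually run; parse_detections and min_score are hypothetical names.

```python
import math


def clamp01(v):
    """Clamp a normalized coordinate into [0, 1]."""
    return max(0.0, min(1.0, v))


def parse_detections(output, width, height, min_score=0.3):
    """Return (class_id, score, (x1, y1, x2, y2)) tuples in pixel coordinates.

    Assumed layout: output[0] = number of valid boxes, box i stored at
    output[7 + 7*i : 14 + 7*i] as (image_id, class_id, score, xmin, ymin, xmax, ymax).
    """
    detections = []
    num_boxes = int(output[0])
    for i in range(num_boxes):
        base = 7 + 7 * i
        values = output[base:base + 7]
        # Skip truncated boxes and boxes containing NaN/inf values.
        if len(values) < 7 or not all(math.isfinite(v) for v in values):
            continue
        _, class_id, score, x1, y1, x2, y2 = values
        if score < min_score:
            continue
        box = (int(clamp01(x1) * width), int(clamp01(y1) * height),
               int(clamp01(x2) * width), int(clamp01(y2) * height))
        detections.append((int(class_id), float(score), box))
    return detections
```

The explicit finiteness check matters because a NaN score compares as neither above nor below the threshold, so it would otherwise slip through silently.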


@Tome_at_Intel Thanks for the reply. However, that change didn't help with my model; I still see a similar number of detections. If you have a chance to take a look, here are my caffemodel and deploy prototxt files (without that change): https://drive.google.com/open?id=1FpdRVznHkHzUWl0GPRIjraSmy1DlHavj


@Manto I took a look at your model and was able to reproduce the same bounding box issue you saw. It's interesting that there are so many false detections with high scores. Can you describe in detail how you retrained your SSD model?


@Tome_at_Intel Thanks for taking the time to look at it. I trained the model using the code provided in the https://github.com/chuanqi305/MobileNet-SSD repo. I am training a model for traffic sign recognition, so I had to remove this part of the prototxt, because it made the objects too small to be detected:

```
expand_param {
  prob: 0.5
  max_expand_ratio: 4.0
}
```

Otherwise the prototxt was similar to the repo's. I even have the same number of classes (20 + background). I trained for about 35k iterations with the train.sh script.
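Deleting a nested block like expand_param by hand is easy to get wrong when it sits inside another parameter block. A minimal text-level sketch, assuming the block is written as `expand_param { ... }` with balanced braces and no braces inside quoted strings; remove_block is a hypothetical helper, not part of the MobileNet-SSD repo. Parsing the file with Caffe's generated protobuf classes and google.protobuf.text_format would be the more robust route.

```python
import re


def remove_block(prototxt_text, block_name):
    """Return prototxt_text with every `block_name { ... }` block removed.

    Text-level sketch: assumes balanced braces and no braces inside strings.
    """
    pattern = re.compile(r"\b" + re.escape(block_name) + r"\s*\{")
    out = prototxt_text
    while True:
        m = pattern.search(out)
        if m is None:
            return out
        depth = 0
        # Walk forward from the opening brace until the braces balance.
        for end in range(m.end() - 1, len(out)):
            if out[end] == "{":
                depth += 1
            elif out[end] == "}":
                depth -= 1
                if depth == 0:
                    break
        else:
            raise ValueError(f"unbalanced braces after {block_name!r}")
        # Drop the whole line if the block starts after indentation only,
        # and swallow the newline that follows the closing brace.
        line_start = out.rfind("\n", 0, m.start()) + 1
        start = line_start if not out[line_start:m.start()].strip() else m.start()
        stop = end + 1
        if out[stop:stop + 1] == "\n":
            stop += 1
        out = out[:start] + out[stop:]
```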

What I find strange is that the same caffemodel works just fine when I use it with the demo.py script found in the repo. The detections look like this:

I do have the wrong label names (Pascal VOC) in the image I posted earlier, but that shouldn't affect the detections. So somehow the conversion process makes my model perform badly.


@Tome_at_Intel Sure. I downloaded the files from my Google Drive link again, placed them in the folder, and changed the demo.py script to point to the caffemodel. And yes, I get similar (wrong) predictions as in your image. However, if I also change the script to point to my prototxt, it works fine. The difference I see between the prototxt provided in the repo and mine is that some layers named convXX_mbox_conf in the repo's prototxt are named convXX_mbox_conf_new in mine.
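This naming difference explains why the wrong predictions appear without a hard error: when Caffe loads a .caffemodel into a net, it copies weights by layer name, so layers in the prototxt with no matching name in the caffemodel keep their initial values (Caffe typically just logs that it is ignoring the unmatched source layers). A quick way to spot such mismatches is to diff the layer names of the two prototxt files. A minimal regex-based sketch; layer_names and renamed_layers are hypothetical helpers, and they only look at `name: "..."` lines, so the network's own name is picked up too.

```python
import re

NAME_RE = re.compile(r'^\s*name:\s*"([^"]+)"', re.MULTILINE)


def layer_names(prototxt_text):
    """All `name: "..."` values, in file order."""
    return NAME_RE.findall(prototxt_text)


def renamed_layers(deploy_text, train_text, suffix="_new"):
    """Training-prototxt names that match a deploy name only after dropping suffix."""
    deploy = set(layer_names(deploy_text))
    train = set(layer_names(train_text))
    return sorted(
        name for name in train - deploy
        if name.endswith(suffix) and name[: -len(suffix)] in deploy
    )
```

Any name this returns is a layer whose trained weights would not be loaded into the deploy net as-is.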

I modified the merge_bn.py script to remove the "_new" part of the layer names, so now it works with the provided prototxt. Problem solved! Thanks for the help!


@Manto I'm glad it's working for you. I had to do the same thing you did to get it functional; however, it still produced wrong results. If you got it working, that's good to hear. Let me know if there was anything else you had to do to get it working correctly. Thanks.


Thank you for all your comments, @Tome_at_Intel and @Manto. To be clear, I made the changes in my prototxt file to remove the "_new" part, without any improvement. I also changed the confidence threshold, without any luck.

@Manto, when you say you run demo.py, are you running the .caffemodel and prototxt files as-is, without converting them to the NCS format? If so, how are you managing to use the Movidius to do that? This is very curious.


@Tome_at_Intel I modified the merge_bn.py script so that the train_proto variable points to the prototxt that I used in training. That prototxt has the "_new" parts in the layer names. Then I changed deploy_proto to point to a prototxt without the "_new" parts. Finally, I added one line of code to the merge_bn function that removes the "_new" parts from the layer names, like this:

```python
conv = net.params[key]
if (key + "/bn") not in net.params:
    # No BatchNorm follows this layer: copy its blobs unchanged.
    for i, w in enumerate(conv):
        key = key.replace('_new', '')  # This is the line I added
        nob.params[key][i].data[...] = w.data
```

So my problem was that I trained the model with the "_new" parts in the layer names, and I believe that caused the weights to fail to match the layers in the deploy prototxt. With the change in merge_bn, the layer names are the same and the problem is fixed.
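The idea behind that one-line fix can be shown without Caffe: weights are looked up by layer name, so the training-time names have to be mapped onto the deploy names before copying. A minimal sketch using plain dictionaries as hypothetical stand-ins for net.params and nob.params (the real script copies into pycaffe blobs). Unlike `key.replace('_new', '')`, this version strips "_new" only as a suffix, and it raises on a missing target instead of skipping it.

```python
def copy_params(train_params, deploy_params, suffix="_new"):
    """Copy per-layer weight lists from train_params into deploy_params.

    Both arguments map layer name -> list of weight blobs (plain lists here).
    Returns the deploy-side names that received weights.
    """
    copied = []
    for key, blobs in train_params.items():
        # Strip the training-only suffix so the name matches the deploy prototxt.
        target = key[: -len(suffix)] if key.endswith(suffix) else key
        if target not in deploy_params:
            raise KeyError(f"no layer named {target!r} in deploy params")
        for i, w in enumerate(blobs):
            deploy_params[target][i] = list(w)
        copied.append(target)
    return copied
```

Failing loudly on an unmatched name is a deliberate choice here: the silent mismatch is exactly what produced the nonsense detections in this thread.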

@vivekvyas94 I run demo.py to test that the trained model was converted properly by the merge_bn.py script. It runs on the GPU, not on the Movidius.
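For context on what merge_bn.py produces: as I understand it, the deploy model has no BatchNorm layers because each BatchNorm/Scale pair is folded into the convolution before it. The standard folding, per output channel, is `w' = w * gamma / sqrt(var + eps)` and `b' = (b - mean) * gamma / sqrt(var + eps) + beta`. (Caffe's BatchNorm layer stores running sums plus a scale factor, so mean and var must first be divided by that factor.) Below is a generic pure-Python sketch of the folding on a 1x1 convolution applied to one pixel, which is just a matrix-vector product, with a check that the folded layer matches conv followed by batch norm. It is not a copy of the script, and eps = 1e-5 is an assumption; use whatever value the script uses.

```python
import math


def fold_bn(weights, bias, mean, var, gamma, beta, eps=1e-5):
    """Fold y = gamma * (conv(x) - mean) / sqrt(var + eps) + beta into conv.

    weights: one list of input weights per output channel.
    All other arguments: one value per output channel.
    """
    new_w, new_b = [], []
    for w_c, b_c, m, v, g, bt in zip(weights, bias, mean, var, gamma, beta):
        k = g / math.sqrt(v + eps)  # per-channel multiplier
        new_w.append([w * k for w in w_c])
        new_b.append((b_c - m) * k + bt)
    return new_w, new_b


def linear(weights, bias, x):
    """A 1x1 convolution on a single pixel: matrix-vector product plus bias."""
    return [sum(w * xi for w, xi in zip(w_c, x)) + b for w_c, b in zip(weights, bias)]


def batch_norm(y, mean, var, gamma, beta, eps=1e-5):
    """Inference-time batch norm followed by the scale/shift."""
    return [g * (yi - m) / math.sqrt(v + eps) + bt
            for yi, m, v, g, bt in zip(y, mean, var, gamma, beta)]
```

If the folded outputs differ from conv followed by batch norm on the same input, the merged caffemodel will not reproduce the training-time network.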


@Manto I experienced the same issue and followed your guidelines, but the problem persists. Could you please share the whole SSD_Mobilenet directory?


@Manto, just to be sure that I understand everything:

- in the merge_bn script, the train_proto variable refers to the prototxt with the "_new" parts, while deploy_proto refers to the modified prototxt without the "_new" parts

- in the merge_bn function, I add the line that you mentioned

and that's all, right? What if I want to use the original model with batch norm?
