Hi,

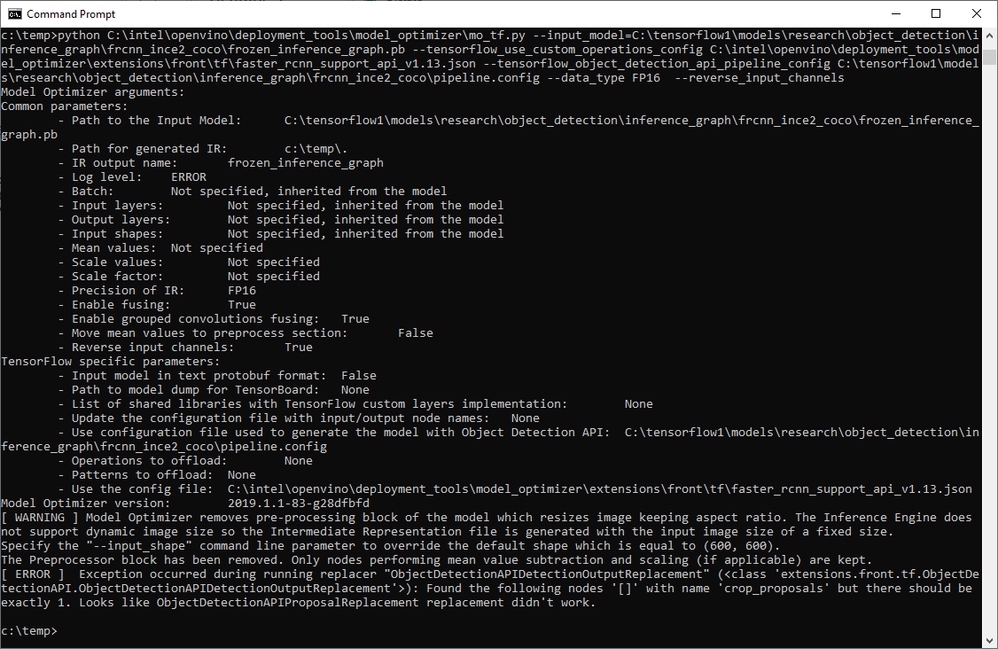

I have custom-trained an FRCNN Inception V2 model on my own training data. I get the following error while trying to optimize it with OpenVINO 2019 R1:

[ WARNING ] Model Optimizer removes pre-processing block of the model which resizes image keeping aspect ratio. The Inference Engine does not support dynamic image size so the Intermediate Representation file is generated with the input image size of a fixed size.

Specify the "--input_shape" command line parameter to override the default shape which is equal to (600, 600).

The Preprocessor block has been removed. Only nodes performing mean value subtraction and scaling (if applicable) are kept.

[ ERROR ] Exception occurred during running replacer "ObjectDetectionAPIDetectionOutputReplacement" (<class 'extensions.front.tf.ObjectDetectionAPI.ObjectDetectionAPIDetectionOutputReplacement'>): Found the following nodes '[]' with name 'proposals' but there should be exactly 1. Looks like ObjectDetectionAPIProposalReplacement replacement didn't work.

Can anyone help me out with this error?

Dear SANYAL, RINI,

It's very important that your custom-trained *.config file is not damaged. Please diff it against the original Faster R-CNN pipeline.config and make sure that only the necessary items have changed.
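One way to run such a comparison is with Python's standard `difflib`. This is only a sketch: the two config fragments are made up for illustration, and the helper names `diff_configs` and `changed_lines` are hypothetical, not part of OpenVINO or the TensorFlow Object Detection API.

```python
import difflib


def diff_configs(original_text, custom_text):
    """Return unified-diff lines between two pipeline.config texts."""
    return list(
        difflib.unified_diff(
            original_text.splitlines(),
            custom_text.splitlines(),
            fromfile="original/pipeline.config",
            tofile="custom/pipeline.config",
            lineterm="",
        )
    )


def changed_lines(diff_lines):
    """Keep only added/removed lines, dropping the '---'/'+++' file headers."""
    return [
        line
        for line in diff_lines
        if line.startswith(("+", "-")) and not line.startswith(("---", "+++"))
    ]


# Made-up fragments, for illustration only.
original = "model {\n  faster_rcnn {\n    num_classes: 90\n  }\n}\n"
custom = "model {\n  faster_rcnn {\n    num_classes: 3\n  }\n}\n"

for line in changed_lines(diff_configs(original, custom)):
    print(line)
```

With real files, read each config with `open(path).read()` and pass the two strings in. Any changed line you did not intend to touch is worth a closer look.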

Also please find the attached *.zip and use faster_rcnn_support_api_v1.13.json for your MO command. Finally, please make sure you are on the latest OpenVino 2019R1.1 (*.148 if Windows and *.144 if Linux).

Thanks,

Shubha

Dear SANYAL, RINI (and everyone),

Even after trying that faster_rcnn_support_api_v1.13.json in the *.zip it may still not work for you. I have filed a bug. Really terribly sorry about the inconvenience.

However, if you revert to an earlier commit of https://github.com/tensorflow/models (Commit ID: 6fc642d423648f6a932673f0b3c07e40268c979f; basically, any update prior to May 31), it will work.

Thanks !

Shubha

Hi Shubha

I got the same error. How can I overcome this issue?

What steps should I follow when using the GitHub page you referred to?

The error is as follows:

sudo python3 mo_tf.py --input_model=/home/ioz/Desktop/GUN_DETECTION/research/object_detection/gun_inference_graph/frozen_inference_graph.pb --tensorflow_use_custom_operations_config /opt/intel/openvino/deployment_tools/model_optimizer/extensions/front/tf/faster_rcnn_support.json --tensorflow_object_detection_api_pipeline_config /home/ioz/Desktop/GUN_DETECTION/research/object_detection/training/pipeline.config --reverse_input_channels

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: /home/ioz/Desktop/GUN_DETECTION/research/object_detection/gun_inference_graph/frozen_inference_graph.pb

- Path for generated IR: /opt/intel/openvino_2019.1.144/deployment_tools/model_optimizer/.

- IR output name: frozen_inference_graph

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: Not specified, inherited from the model

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP32

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: False

- Reverse input channels: True

TensorFlow specific parameters:

- Input model in text protobuf format: False

- Path to model dump for TensorBoard: None

- List of shared libraries with TensorFlow custom layers implementation: None

- Update the configuration file with input/output node names: None

- Use configuration file used to generate the model with Object Detection API: /home/ioz/Desktop/GUN_DETECTION/research/object_detection/training/pipeline.config

- Operations to offload: None

- Patterns to offload: None

- Use the config file: /opt/intel/openvino/deployment_tools/model_optimizer/extensions/front/tf/faster_rcnn_support.json

Model Optimizer version: 2019.1.1-83-g28dfbfd

[ WARNING ] Model Optimizer removes pre-processing block of the model which resizes image keeping aspect ratio. The Inference Engine does not support dynamic image size so the Intermediate Representation file is generated with the input image size of a fixed size.

Specify the "--input_shape" command line parameter to override the default shape which is equal to (600, 600).

The Preprocessor block has been removed. Only nodes performing mean value subtraction and scaling (if applicable) are kept.

[ ERROR ] Exception occurred during running replacer "ObjectDetectionAPIDetectionOutputReplacement" (<class 'extensions.front.tf.ObjectDetectionAPI.ObjectDetectionAPIDetectionOutputReplacement'>): Found the following nodes '[]' with name 'crop_proposals' but there should be exactly 1. Looks like ObjectDetectionAPIProposalReplacement replacement didn't work.

Thanks in advance

Hi Shubha,

I was able to start with your comment about using a previous commit of the Tensorflow API (i.e. not using the latest release).

Here are the steps that allowed me to get an IR for a particular custom-trained model based on a pre-trained model:

- I started by pulling the faster_rcnn_resnet101_coco_2018_01_28 from the supported OpenVino Tensorflow model zoo.

- I tweaked the config file faster_rcnn_resnet101_coco.config that I pulled from https://github.com/tensorflow/models/blob/master/research/object_detection/samples/configs/.

- I then pulled the specific commit of the Tensorflow API that you recommended in your post above: git checkout 6fc642d423648f6a932673f0b3c07e40268c979f

- Rather than using the latest release of OpenVino, I reverted to a previous release of it. Version 1.133 was the one that worked for me: /opt/intel/openvino_2019.1.133.

- I downloaded the Tensorflow Custom Operations Config patch from your posting above which contains 2 files, both of which are required: faster_rcnn_support_api_v1.13.json and mask_rcnn_support_api_v1.13.json.

- I copied the 2 json files from the previous step to /opt/intel/openvino_2019.1.133/deployment_tools/model_optimizer/extensions/front/tf.

- Finally, I was able to run the mo_tf.py optimizer from /opt/intel/openvino_2019.1.133/deployment_tools/model_optimizer.
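The final step above can be sketched as a small Python helper that assembles the mo_tf.py call. This is an illustration only: the helper name `build_mo_command` is hypothetical, the flags are the ones shown in other commands in this thread (the exact flags used for this particular run are not stated), and the model and config file names in the usage lines are placeholders.

```python
import shlex


def build_mo_command(mo_dir, frozen_graph, pipeline_config, support_json_name):
    """Assemble the mo_tf.py argument list for an Object Detection API model."""
    # The support JSON is expected under extensions/front/tf, where the
    # patch files were copied in the previous step.
    support_json = f"{mo_dir}/extensions/front/tf/{support_json_name}"
    return [
        "python3",
        f"{mo_dir}/mo_tf.py",
        "--input_model", frozen_graph,
        "--tensorflow_use_custom_operations_config", support_json,
        "--tensorflow_object_detection_api_pipeline_config", pipeline_config,
        "--reverse_input_channels",
    ]


cmd = build_mo_command(
    "/opt/intel/openvino_2019.1.133/deployment_tools/model_optimizer",
    "frozen_inference_graph.pb",  # placeholder path
    "pipeline.config",            # placeholder path
    "faster_rcnn_support_api_v1.13.json",
)
print(" ".join(shlex.quote(arg) for arg in cmd))
```

To actually run the conversion, the list can be handed to `subprocess.run(cmd, check=True)`.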

My hope is that your team will soon get things fixed in the next release so that others will have a much easier time getting OpenVino deployed. Then the community will be able to push custom models to devices like the Movidius card for inferencing on real-world, production-ready use cases.

Best of luck!

Dear DeVera, Thomas,

Wow. Thank you for elaborating the detailed steps you went through, and yes this is quite terrible. I'm sorry you had to go through this. This one really surprises me:

Rather than using the latest release of OpenVino, I reverted to a previous release of it. Version 1.133 was the one that worked for me: /opt/intel/openvino_2019.1.133.

May I ask what version of Tensorflow you have installed? Can you upgrade your TF to 1.13 and try with the latest OpenVino (2019R1.1)?

Thanks,

Shubha

Hi Shubha,

I have TF version 1.13.1 and OpenVino_2019.1.148 (R1.1) installed but am still getting the same error. Please see the error log below. When can a potential solution be expected? Thanks.

In addition, I have reverted the models repository to the commit you proposed (Commit ID: 6fc642d423648f6a932673f0b3c07e40268c979f) but am still getting the same error.

Hi Shubha,

I hope this post finds you well.

I have the same issue using TF 1.14.0, openvino_2019.1.144, and Python 3.5.2.

Command:

$ sudo python3 deployment_tools/model_optimizer/mo_tf.py --input_model=/home/cosma/Development/venvov/dataset/train/fruits_faster_rcnn_inception_inference_graph/frozen_inference_graph.pb --tensorflow_use_custom_operations_config=/opt/intel/openvino_2019.1.144/deployment_tools/model_optimizer/extensions/front/tf/faster_rcnn_support_api_v1.7.json --tensorflow_object_detection_api_pipeline_config=/home/cosma/Development/venvov/dataset/train/fruits_faster_rcnn_inception_inference_graph/pipeline.config --reverse_input_channels

With or without --reverse_input_channels, I have the following error:

[ WARNING ] Model Optimizer removes pre-processing block of the model which resizes image keeping aspect ratio. The Inference Engine does not support dynamic image size so the Intermediate Representation file is generated with the input image size of a fixed size.

Specify the "--input_shape" command line parameter to override the default shape which is equal to (600, 600).

The Preprocessor block has been removed. Only nodes performing mean value subtraction and scaling (if applicable) are kept.

WARNING: Logging before flag parsing goes to stderr.

E1103 13:47:32.123020 140314342913792 main.py:317] Exception occurred during running replacer "ObjectDetectionAPIDetectionOutputReplacement" (<class 'extensions.front.tf.ObjectDetectionAPI.ObjectDetectionAPIDetectionOutputReplacement'>): Found the following nodes '[]' with name 'crop_proposals' but there should be exactly 1. Looks like ObjectDetectionAPIProposalReplacement replacement didn't work.

I have the same issue with each of the following:

faster_rcnn_support_api_v1.7.json

faster_rcnn_support_api_v1.10.json

faster_rcnn_support_api_v1.13.json

Anyhow, I suppose the problem is related to the expected input shape.

It may be useful to know that when I add --input_shape [600,600] at the end of the command (whatever numbers I use), the error changes as follows:

E1103 16:37:00.925748 139676718106368 main.py:323] -------------------------------------------------

E1103 16:37:00.925927 139676718106368 main.py:324] ----------------- INTERNAL ERROR ----------------

E1103 16:37:00.925991 139676718106368 main.py:325] Unexpected exception happened.

E1103 16:37:00.926042 139676718106368 main.py:326] Please contact Model Optimizer developers and forward the following information:

E1103 16:37:00.926090 139676718106368 main.py:327] Exception occurred during running replacer "ObjectDetectionAPIPreprocessorReplacement (<class 'extensions.front.tf.ObjectDetectionAPI.ObjectDetectionAPIPreprocessorReplacement'>)": index 2 is out of bounds for axis 0 with size 2

E1103 16:37:00.926984 139676718106368 main.py:328] Traceback (most recent call last):

File "/opt/intel/openvino_2019.1.144/deployment_tools/model_optimizer/mo/utils/class_registration.py", line 167, in apply_replacements

replacer.find_and_replace_pattern(graph)

File "/opt/intel/openvino_2019.1.144/deployment_tools/model_optimizer/mo/front/tf/replacement.py", line 89, in find_and_replace_pattern

self.replace_sub_graph(graph, match)

File "/opt/intel/openvino_2019.1.144/deployment_tools/model_optimizer/mo/front/common/replacement.py", line 131, in replace_sub_graph

new_sub_graph = self.generate_sub_graph(graph, match) # pylint: disable=assignment-from-no-return

File "/opt/intel/openvino_2019.1.144/deployment_tools/model_optimizer/extensions/front/tf/ObjectDetectionAPI.py", line 430, in generate_sub_graph

height, width = calculate_placeholder_spatial_shape(graph, match, pipeline_config)

File "/opt/intel/openvino_2019.1.144/deployment_tools/model_optimizer/extensions/front/tf/ObjectDetectionAPI.py", line 332, in calculate_placeholder_spatial_shape

user_defined_width = user_defined_shape[2]

IndexError: index 2 is out of bounds for axis 0 with size 2

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/opt/intel/openvino_2019.1.144/deployment_tools/model_optimizer/mo/main.py", line 312, in main

return driver(argv)

File "/opt/intel/openvino_2019.1.144/deployment_tools/model_optimizer/mo/main.py", line 263, in driver

is_binary=not argv.input_model_is_text)

File "/opt/intel/openvino_2019.1.144/deployment_tools/model_optimizer/mo/pipeline/tf.py", line 127, in tf2nx

class_registration.apply_replacements(graph, class_registration.ClassType.FRONT_REPLACER)

File "/opt/intel/openvino_2019.1.144/deployment_tools/model_optimizer/mo/utils/class_registration.py", line 190, in apply_replacements

)) from err

Exception: Exception occurred during running replacer "ObjectDetectionAPIPreprocessorReplacement (<class 'extensions.front.tf.ObjectDetectionAPI.ObjectDetectionAPIPreprocessorReplacement'>)": index 2 is out of bounds for axis 0 with size 2

E1103 16:37:00.927108 139676718106368 main.py:329] ---------------- END OF BUG REPORT --------------

E1103 16:37:00.927165 139676718106368 main.py:330] ------------------------------------------------
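The traceback above fails at `user_defined_shape[2]`, which suggests the preprocessor replacer indexes into a shape with more than two entries, so a two-value `[600,600]` cannot work. A later post in this thread passes `--input_shape [1,600,600,3]` instead. The sketch below is not Model Optimizer code: it assumes an [N, H, W, C] layout with height at index 1 (the traceback only shows width read from index 2), and the helper names are hypothetical.

```python
def parse_input_shape(text):
    """Parse a string such as "[1,600,600,3]" into a list of ints."""
    return [int(part) for part in text.strip("[]() ").split(",") if part.strip()]


def spatial_size(shape):
    """Return (height, width), assuming an [N, H, W, C] layout (an assumption)."""
    if len(shape) != 4:
        raise ValueError(
            f"expected 4 values [N,H,W,C], got {len(shape)}: {shape}"
        )
    return shape[1], shape[2]


print(spatial_size(parse_input_shape("[1,600,600,3]")))

try:
    spatial_size(parse_input_shape("[600,600]"))
except ValueError as err:
    # A two-value shape is rejected up front instead of failing on index 2.
    print("rejected:", err)
```

Checking the shape this way before calling mo_tf.py turns the internal IndexError into a readable message.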

Again, this confirms that the weak point of OpenVino is still the process of generating the IR files!

Do you have any suggestions from the Model Optimizer developers?

Should I downgrade OV to 2019.1.133?

If so, where can I find the link to download exactly this version?

Thank you

Hello,

Is there any news on this issue of getting an IR for a custom-trained Faster RCNN?

Command used:

sudo python3 mo_tf.py --input_model "/home/cosma/Development/venvov/dataset/train/train_2/fruits_faster_rcnn_inception_inference_graph/frozen_inference_graph.pb" --tensorflow_use_custom_operations_config "/opt/intel/openvino_2019.1.144/deployment_tools/model_optimizer/extensions/front/tf/faster_rcnn_support_api_v1.13.json" --tensorflow_object_detection_api_pipeline_config "/home/cosma/Development/venvov/dataset/train/train_2/fruits_faster_rcnn_inception_inference_graph/pipeline.config" --reverse_input_channels --data_type FP16

ERROR:

[ WARNING ] Model Optimizer removes pre-processing block of the model which resizes image keeping aspect ratio. The Inference Engine does not support dynamic image size so the Intermediate Representation file is generated with the input image size of a fixed size.

Specify the "--input_shape" command line parameter to override the default shape which is equal to (600, 600).

The Preprocessor block has been removed. Only nodes performing mean value subtraction and scaling (if applicable) are kept.

WARNING: Logging before flag parsing goes to stderr.

E1108 21:10:01.812213 140562589083392 main.py:317] Exception occurred during running replacer "ObjectDetectionAPIDetectionOutputReplacement" (<class 'extensions.front.tf.ObjectDetectionAPI.ObjectDetectionAPIDetectionOutputReplacement'>): Found the following nodes '[]' with name 'crop_proposals' but there should be exactly 1. Looks like ObjectDetectionAPIProposalReplacement replacement didn't work.

Thank you

I can create the NCS model, but I can't load it on the NCSv1 or NCSv2. :(

Hi Shubha,

I am facing some issues converting the FRCNN model file (i.e. the .pb) to IR files.

The details are given below, and I have attached a screenshot. Please take a look.

Model name: faster_rcnn_inception_v2

OpenVino version: openvino_2019.3.379

Command: python mo_tf.py --input_model path\FRCNN_openvino\OCR_Frcnn.pb --output_dir path\FRCNN_openvino\Optimized_Model\test --input_shape [1,600,600,3] --data_type FP32 --tensorflow_use_custom_operations_config C:\PROGRA~2\IntelSWTools\openvino_2019.3.379\deployment_tools\model_optimizer\extensions\front\tf\faster_rcnn_support_api_v1.14.json --tensorflow_object_detection_api_pipeline_config path\FRCNN_openvino\pipeline.config --log_level=DEBUG

Error: [ ERROR ] Cannot infer shapes or values for node "do_reshape_conf".

[ ERROR ] Number of elements in input [300 16] and output [1, 3600] of reshape node do_reshape_conf mismatch

[ ERROR ]

[ ERROR ] It can happen due to bug in custom shape infer function <function Reshape.infer at 0x0000025BA59C0510>.

[ ERROR ] Or because the node inputs have incorrect values/shapes.

[ ERROR ] Or because input shapes are incorrect (embedded to the model or passed via --input_shape).
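The mismatch reported above can be checked with simple arithmetic: a reshape needs the same number of elements on both sides. The sketch below only reproduces that count from the two shapes in the error message; the helper name is hypothetical.

```python
def element_count(shape):
    """Multiply all dimensions of a shape together."""
    total = 1
    for dim in shape:
        total *= dim
    return total


input_shape = [300, 16]    # from the error message
output_shape = [1, 3600]   # from the error message

print(element_count(input_shape))   # 4800
print(element_count(output_shape))  # 3600

# If the 300 rows are kept, the output expects this many values per row:
values_per_row = output_shape[1] // input_shape[0]
print(values_per_row)               # 12
```

So the tensor carries 16 values per row where the reshape expects 12. One possible reading, which is a guess and not confirmed anywhere in this thread, is that the per-row count tracks the number of classes, so it may be worth checking that num_classes in pipeline.config matches the model that was actually frozen.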

I even cross-checked the config file as you suggested in an earlier post, and there is not much difference; we changed only a few parameters.

Please take a look and suggest a fix as soon as possible.

Thanks and Regards

Gautham N
