
System information (version)

- OpenVINO => Conversion: OpenVINO from GitHub, commit c8af311 (2021-06-11); inference: openvino_2021.2.185

- Operating System / Platform => Windows 10

- Compiler =>

- Problem classification => Model Inference

- Framework: TensorFlow Object Detection API 2.0

- Model name: EfficientDet D0, input resolution 512x512, trained on custom data and exported with the TensorFlow Object Detection API 2.0

- Model optimizer conversion file: model-optimizer\extensions\front\tf\efficient_det_support_api_v2.4.json

Detailed description

At inference, an EfficientDet D0 retrained on custom data with the TF2 Object Detection API returns an array that is all zeros except for the first value, which is -1, instead of bounding boxes.

Steps to reproduce

- We trained EfficientDet D0 (model) from the TensorFlow Object Detection API 2.0 on custom data and exported the model with the exporter for TF2 to a saved_model.pb.

- Then we converted the EfficientDet D0 model with model-optimizer\mo_tf.py (OpenVINO from GitHub, commit c8af311), using model-optimizer\extensions\front\tf\efficient_det_support_api_v2.4.json.

We used the following settings:

    APIFILE=%OPENVINOINSTALLDIR%\model-optimizer\extensions\front\tf\efficient_det_support_api_v2.4.json
    python %OPENVINOINSTALLDIR%\model-optimizer\mo_tf.py ^
    --saved_model_dir="exported-models%MODELNAME%\saved_model" ^
    --tensorflow_object_detection_api_pipeline_config=exported-models%MODELNAME%\pipeline.config ^
    --transformations_config=%APIFILE% ^
    --reverse_input_channels ^
    --data_type FP32 ^
    --output_dir=exported-models-openvino%MODELNAME%_OV%PRECISION%
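The same invocation can also be assembled as an argument list, which makes it easier to see which flag carries which file. This is only a sketch: the helper name `build_mo_command` and the example paths are hypothetical, and only the flags themselves are taken from the settings above.

```python
from pathlib import PureWindowsPath


def build_mo_command(openvino_dir, model_dir, output_dir, data_type="FP32"):
    """Assemble the mo_tf.py call as a list of arguments (hypothetical helper)."""
    openvino_dir = PureWindowsPath(openvino_dir)
    model_dir = PureWindowsPath(model_dir)
    # Transformations config named in this thread for TF2 OD API EfficientDet.
    api_file = (openvino_dir / "model-optimizer" / "extensions" / "front" / "tf"
                / "efficient_det_support_api_v2.4.json")
    return [
        "python", str(openvino_dir / "model-optimizer" / "mo_tf.py"),
        f"--saved_model_dir={model_dir / 'saved_model'}",
        f"--tensorflow_object_detection_api_pipeline_config={model_dir / 'pipeline.config'}",
        f"--transformations_config={api_file}",
        "--reverse_input_channels",
        "--data_type", data_type,
        f"--output_dir={output_dir}",
    ]


# Example with made-up paths; print the command instead of running it.
cmd = build_mo_command(r"C:\openvino", r"exported-models\my_model", r"exported-models-openvino\my_model_OVFP32")
print(" ".join(cmd))
```

A list like this could be handed to `subprocess.run(cmd, check=True)` once the paths point at a real installation.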

The model was successfully converted. The model optimizer log can be found here:

    Apply to model tf2oda_efficientdet_512x512_pedestrian_D0_LR08 with precision FP32
    '#2 Model to OpenVino Intermediate Representation
    '#21_SoC_EML\openvino"\model-optimizer\extensions\front\tf\efficient_det_support_api_v2.4.json
    "Start conversion"
    Model Optimizer arguments:
    Common parameters:
        - Path to the Input Model: None
        - Path for generated IR: C:\Projekte\21_SoC_EML\scripts-and-guides-samples\oxford_pets_reduced_openvino\exported-models-openvino\tf2oda_efficientdet_512x512_pedestrian_D0_LR08_OVFP32
        - IR output name: saved_model
        - Log level: ERROR
        - Batch: Not specified, inherited from the model
        - Input layers: Not specified, inherited from the model
        - Output layers: Not specified, inherited from the model
        - Input shapes: Not specified, inherited from the model
        - Mean values: Not specified
        - Scale values: Not specified
        - Scale factor: Not specified
        - Precision of IR: FP32
        - Enable fusing: True
        - Enable grouped convolutions fusing: True
        - Move mean values to preprocess section: None
        - Reverse input channels: True
    TensorFlow specific parameters:
        - Input model in text protobuf format: False
        - Path to model dump for TensorBoard: None
        - List of shared libraries with TensorFlow custom layers implementation: None
        - Update the configuration file with input/output node names: None
        - Use configuration file used to generate the model with Object Detection API: C:\Projekte\21_SoC_EML\scripts-and-guides-samples\oxford_pets_reduced_openvino\exported-models\tf2oda_efficientdet_512x512_pedestrian_D0_LR08\pipeline.config
        - Use the config file: None
    [ WARNING ] Failed to import Inference Engine Python API in: PYTHONPATH
    [ WARNING ] DLL load failed while importing ie_api: Das angegebene Modul wurde nicht gefunden. [English: The specified module could not be found.]
    [ WARNING ] Could not find the Inference Engine Python API. At this moment, the Inference Engine dependency is not required, but will be required in future releases.
    [ WARNING ] Consider building the Inference Engine Python API from sources or try to install OpenVINO (TM) Toolkit using "install_prerequisites.sh"
    Model Optimizer version: custom_main_1d892296429f4c47c839bce4eba524edff8eb0d3
    2021-06-11 13:57:50.460822: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'cudart64_110.dll'; dlerror: cudart64_110.dll not found
    2021-06-11 13:57:50.478509: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.
    2021-06-11 13:58:23.317335: I tensorflow/compiler/jit/xla_cpu_device.cc:41] Not creating XLA devices, tf_xla_enable_xla_devices not set
    2021-06-11 14:00:13.380157: W tensorflow/stream_executor/platform/default/dso_loader.cc:60] Could not load dynamic library 'nvcuda.dll'; dlerror: nvcuda.dll not found
    2021-06-11 14:00:13.408711: W tensorflow/stream_executor/cuda/cuda_driver.cc:326] failed call to cuInit: UNKNOWN ERROR (303)
    2021-06-11 14:00:13.435937: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:169] retrieving CUDA diagnostic information for host: dp3510
    2021-06-11 14:00:13.461395: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:176] hostname: dp3510
    2021-06-11 14:00:13.490841: I tensorflow/core/platform/cpu_feature_guard.cc:142] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2
    To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.
    2021-06-11 14:00:13.575427: I tensorflow/compiler/jit/xla_gpu_device.cc:99] Not creating XLA devices, tf_xla_enable_xla_devices not set
    2021-06-11 14:02:19.125553: I tensorflow/core/grappler/devices.cc:69] Number of eligible GPUs (core count >= 8, compute capability >= 0.0): 0
    2021-06-11 14:02:19.144580: I tensorflow/core/grappler/clusters/single_machine.cc:356] Starting new session
    2021-06-11 14:02:19.154771: I tensorflow/compiler/jit/xla_gpu_device.cc:99] Not creating XLA devices, tf_xla_enable_xla_devices not set
    2021-06-11 14:02:21.336527: I tensorflow/core/grappler/optimizers/meta_optimizer.cc:928] Optimization results for grappler item: graph_to_optimize
      function_optimizer: Graph size after: 5666 nodes (4965), 13173 edges (12465), time = 405.86ms.
      function_optimizer: Graph size after: 5666 nodes (0), 13173 edges (0), time = 145.277ms.
    Optimization results for grappler item: __inference_map_while_Preprocessor_ResizeToRange_cond_false_12678_30252
      function_optimizer: function_optimizer did nothing. time = 0.002ms.
      function_optimizer: function_optimizer did nothing. time = 0ms.
    Optimization results for grappler item: __inference_map_while_body_12631_30367
      function_optimizer: Graph size after: 117 nodes (0), 126 edges (0), time = 2.744ms.
      function_optimizer: Graph size after: 117 nodes (0), 126 edges (0), time = 2.804ms.
    Optimization results for grappler item: __inference_map_while_Preprocessor_ResizeToRange_cond_true_12677_15652
      function_optimizer: function_optimizer did nothing. time = 0.001ms.
      function_optimizer: function_optimizer did nothing. time = 0.001ms.
    Optimization results for grappler item: __inference_map_while_cond_12630_38606
      function_optimizer: function_optimizer did nothing. time = 0.001ms.
      function_optimizer: function_optimizer did nothing. time = 0ms.

    [ WARNING ] Model Optimizer removes pre-processing block of the model which resizes image keeping aspect ratio. The Inference Engine does not support dynamic image size so the Intermediate Representation file is generated with the input image size of a fixed size.
    Specify the "--input_shape" command line parameter to override the default shape which is equal to (512, 512).
    The Preprocessor block has been removed. Only nodes performing mean value subtraction and scaling (if applicable) are kept.
    [ WARNING ] Using fallback to produce IR.
    [ SUCCESS ] Generated IR version 10 model.
    [ SUCCESS ] XML file: C:\Projekte\21_SoC_EML\scripts-and-guides-samples\oxford_pets_reduced_openvino\exported-models-openvino\tf2oda_efficientdet_512x512_pedestrian_D0_LR08_OVFP32\saved_model.xml
    [ SUCCESS ] BIN file: C:\Projekte\21_SoC_EML\scripts-and-guides-samples\oxford_pets_reduced_openvino\exported-models-openvino\tf2oda_efficientdet_512x512_pedestrian_D0_LR08_OVFP32\saved_model.bin
    [ SUCCESS ] Total execution time: 510.97 seconds.
    "Conversion finished"

The converted model can be found here: https://owncloud.tuwien.ac.at/index.php/s/0zmcqbY3HkhUIrL

-

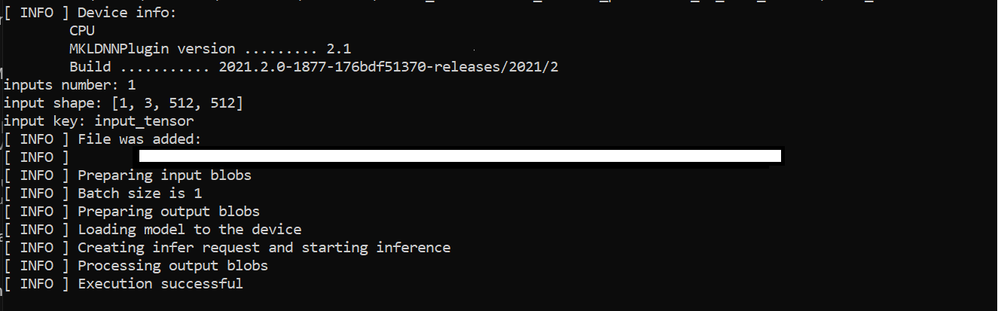

Then we executed the model first with the benchmark_app.py from OpenVino 2021.2.185, which was successful.

-

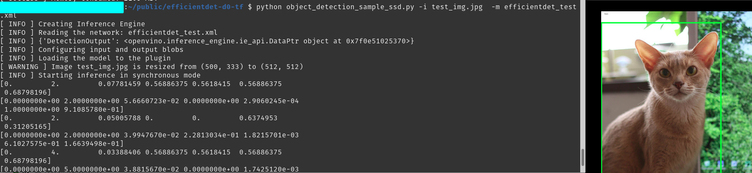

We executed the model with the python script object_detection_sample_ssd.py

- The result was an array with Zeros for each image, except the first value.

    Loading network and perform on CPU
    Starting inference for picture: Abyssinian_116.jpg
    {'DetectionOutput': array([[[[-1., 0., 0., 0., 0., 0., 0.],
    [ 0., 0., 0., 0., 0., 0., 0.],
    [ 0., 0., 0., 0., 0., 0., 0.],
    (all remaining rows are identical rows of seven zeros)
    [ 0., 0., 0., 0., 0., 0., 0.]]]], dtype=float32)}
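For readers unfamiliar with this blob: each row of an SSD-style `DetectionOutput` is a 7-tuple `[image_id, label, confidence, x_min, y_min, x_max, y_max]` with box coordinates normalized to [0, 1], and a row whose `image_id` is -1 marks the end of the valid detections. A first row starting with -1 therefore means the network reported no detections at all. The following pure-Python sketch illustrates that reading; the function name `parse_detections` and the sample rows are made up for illustration and are not taken from the sample script.

```python
def parse_detections(rows, image_width, image_height, min_confidence=0.5):
    """Turn DetectionOutput rows into pixel-space boxes.

    rows: iterable of 7-element sequences
          [image_id, label, confidence, x_min, y_min, x_max, y_max].
    """
    detections = []
    for image_id, label, confidence, x_min, y_min, x_max, y_max in rows:
        if image_id < 0:
            # image_id == -1 terminates the list of valid detections.
            break
        if confidence < min_confidence:
            continue
        detections.append({
            "image_id": int(image_id),
            "label": int(label),
            "confidence": float(confidence),
            # Scale normalized coordinates to pixels.
            "box": (
                int(x_min * image_width),
                int(y_min * image_height),
                int(x_max * image_width),
                int(y_max * image_height),
            ),
        })
    return detections


# Shape of the blob reported above: first row starts with -1, the rest are zeros.
empty_blob = [[-1.0, 0, 0, 0, 0, 0, 0]] + [[0.0] * 7 for _ in range(3)]
print(parse_detections(empty_blob, 512, 512))

# A made-up blob with one valid detection followed by the terminator row.
sample_blob = [[0.0, 1.0, 0.9, 0.25, 0.25, 0.75, 0.5],
               [-1.0, 0, 0, 0, 0, 0, 0]]
print(parse_detections(sample_blob, 512, 512))
```

Under this reading, lowering the confidence threshold cannot help with the blob above, because parsing stops at the very first row.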


Hello Milos Acimovic,

Thank you for your patience. We received an update from the developers that the issue has been fixed in the latest OpenVINO release (OpenVINO 2022.1), which will be available soon. Alternatively, you can pull the fix from the OpenVINO GitHub repository (master branch).

Here is a quick guide that you can use.

1. Once the latest OpenVINO is available, download and install it on your system.

2. Convert the model to IR using this command:

mo --saved_model_dir ~/openvino_models/saved_model --transformations_config front/tf/efficient_det_support_api_v2.4.json --tensorflow_object_detection_api_pipeline_config ~/openvino_model/fruit_export/pipeline.config -o ~output_directory

3. We also modified the Python script so that it is compatible with the latest OpenVINO library (see attachment).

Here are the changes we made:

net = IENetwork(model=model_xml, weights=model_bin) --> net = ie.read_network(model_xml, model_bin)

net.inputs --> net.input_info

net.inputs[self.input_blob].shape --> net.input_info[self.input_blob].input_data.shape

4. Run inference using the optimized model and please ensure the input image is 512x512 (since this is the input image dimension that the model was trained on).

Hope this information helps.

Sincerely,

Zulkifli


Hello Milos Acimovic,

Thank you for reaching out to us.

This issue is discussed in this thread. Please follow the suggestion there and see whether it resolves your issue.

Sincerely,

Zulkifli


Again, we executed the model with the Python script object_detection_sample_ssd.py, which is available in OpenVINO's demos.

Here's a snippet from it to refresh your memory:

    # ---------------------------Step 6. Prepare input---------------------------------------------------------------------
    original_image = cv2.imread(args.input)
    image = original_image.copy()
    _, _, net_h, net_w = net.input_info[input_blob].input_data.shape

    if image.shape[:-1] != (net_h, net_w):
        log.warning(f'Image {args.input} is resized from {image.shape[:-1]} to {(net_h, net_w)}')
        image = cv2.resize(image, (net_w, net_h))

    # Change data layout from HWC to CHW
    image = image.transpose((2, 0, 1))
    # Add N dimension to transform to NCHW
    image = np.expand_dims(image, axis=0)

That is, the image values are not scaled to the range (0, 1), which is what the thread you linked suggests. So is it something else?
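To make the distinction concrete, here is a small NumPy-only sketch of the two variants: layout change alone (what the sample snippet does) versus layout change plus scaling to [0, 1]. The helper `to_nchw` and its `scale_to_unit_range` flag are hypothetical names introduced for this illustration; which variant a given IR expects depends on the preprocessing kept during conversion, which this sketch does not determine.

```python
import numpy as np


def to_nchw(image_hwc, scale_to_unit_range=False):
    """Convert an HxWxC uint8 image to a 1xCxHxW float32 batch."""
    # HWC -> CHW, as in the sample script.
    chw = image_hwc.transpose((2, 0, 1))
    # Add the batch dimension: CHW -> NCHW.
    batch = np.expand_dims(chw, axis=0).astype(np.float32)
    if scale_to_unit_range:
        # Optional step that the sample script does NOT perform.
        batch = batch / 255.0
    return batch


# Dummy all-white 512x512 BGR image instead of a real file.
dummy = np.full((512, 512, 3), 255, dtype=np.uint8)
raw = to_nchw(dummy)
scaled = to_nchw(dummy, scale_to_unit_range=True)
print(raw.shape, raw.max(), scaled.max())
```

Feeding both variants to the same network and comparing the outputs is one way to check whether value range is the culprit.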


Also, a VERY important distinction is that I am not using the automl/efficientdet repo to train on custom data, but the TensorFlow Object Detection API 2.x.


Hello Milos Acimovic,

We tested your model with object_detection_sample_ssd.py using our own input image, and it executed successfully. Please share your image files with us.

Sincerely,

Zulkifli


Thanks for the reply

I feel like you didn't understand what the problem is.

It's not about whether or not it executes successfully, it's about whether or not I am getting any meaningful predictions at all.

There are no predicted bounding boxes in the output blob.

Sharing the image.


Hello Milos Acimovic,

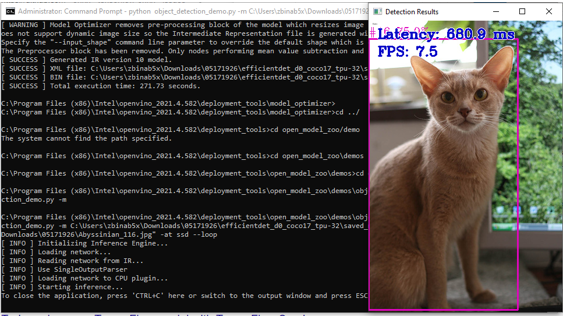

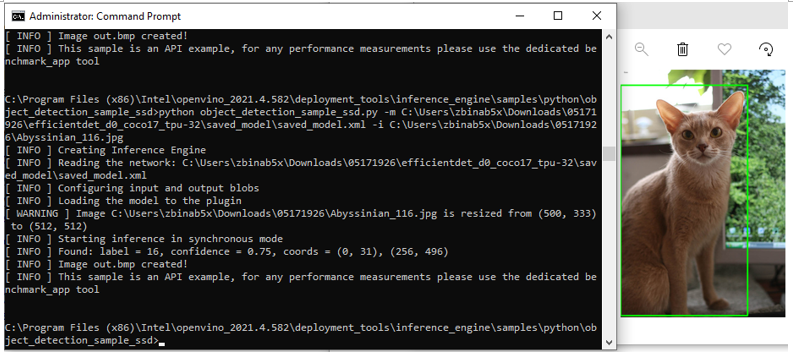

We reproduced your issue. We were able to convert the TensorFlow 2 EfficientDet-D0 model and then run both the Object Detection Python Demo and the Object Detection Python Sample SSD with the converted model on OpenVINO 2021.4. Here are the details.

The EfficientDet-D0 model was downloaded and converted to IR format using the following command:

python mo_tf.py --saved_model_dir <model_directory>\efficientdet_d0_coco17_tpu-32\saved_model --transformations_config <PATH>\front\tf\efficient_det_support_api_v2.0.json --tensorflow_object_detection_api_pipeline_config <PATH>\efficientdet_d0_coco17_tpu-32\pipeline.config

We used the generated IR files to run the Object Detection Python Demo and Object Detection Python Sample SSD. Both the demo and sample were successfully run, and the bounding boxes were created.

I share the screenshots here.

Object Detection Python Demo

Object Detection Python Sample SSD

When we tried running both these demos using your custom-trained model, we observed that the bounding boxes were not created. This is probably due to incorrect conversion to IR using Model Optimizer.

Hence, we recommend you use the efficient_det_support_api_v2.0.json as the transformations config file with OpenVINO 2021.4 to convert your EfficientDet-D0 model to IR files, using the commands I shared above.

After that, please try running the Object Detection Python Sample SSD with the newly generated IR files and share your results with us.

Sincerely,

Zulkifli


Hey Zulkifli,

Thanks so much for your response and for engaging with the issue I was experiencing.

I managed to get it working in the same way you presented, so thanks so much.

I will mark the issue as solved as soon as we solve the problem with the model trained on a custom dataset.

As an update: I trained my EfficientDet-D0 in TensorFlow (2.3), exported it as a saved_model, and then used efficient_det_support_api_v2.0.json as the transformations_config, but still got an error:

    [ ERROR ] -------------------------------------------------
    [ ERROR ] ----------------- INTERNAL ERROR ----------------
    [ ERROR ] Unexpected exception happened.
    [ ERROR ] Please contact Model Optimizer developers and forward the following information:
    [ ERROR ] Exception occurred during running replacer "ObjectDetectionAPIPreprocessor2Replacement (<class 'extensions.front.tf.ObjectDetectionAPI.ObjectDetectionAPIPreprocessor2Replacement'>)":
    [ ERROR ] Traceback (most recent call last):
      File "/opt/intel/openvino_2021/deployment_tools/model_optimizer/mo/utils/class_registration.py", line 276, in apply_transform
        replacer.find_and_replace_pattern(graph)
      File "/opt/intel/openvino_2021/deployment_tools/model_optimizer/mo/front/tf/replacement.py", line 36, in find_and_replace_pattern
        self.transform_graph(graph, desc._replacement_desc['custom_attributes'])
      File "/opt/intel/openvino_2021/deployment_tools/model_optimizer/extensions/front/tf/ObjectDetectionAPI.py", line 710, in transform_graph
        assert len(start_nodes) >= 1
    AssertionError

    The above exception was the direct cause of the following exception:

    Traceback (most recent call last):
      File "/opt/intel/openvino_2021/deployment_tools/model_optimizer/mo/main.py", line 394, in main
        ret_code = driver(argv)
      File "/opt/intel/openvino_2021/deployment_tools/model_optimizer/mo/main.py", line 356, in driver
        ret_res = emit_ir(prepare_ir(argv), argv)
      File "/opt/intel/openvino_2021/deployment_tools/model_optimizer/mo/main.py", line 252, in prepare_ir
        graph = unified_pipeline(argv)
      File "/opt/intel/openvino_2021/deployment_tools/model_optimizer/mo/pipeline/unified.py", line 13, in unified_pipeline
        class_registration.apply_replacements(graph, [
      File "/opt/intel/openvino_2021/deployment_tools/model_optimizer/mo/utils/class_registration.py", line 328, in apply_replacements
        apply_replacements_list(graph, replacers_order)
      File "/opt/intel/openvino_2021/deployment_tools/model_optimizer/mo/utils/class_registration.py", line 314, in apply_replacements_list
        apply_transform(
      File "/opt/intel/openvino_2021/deployment_tools/model_optimizer/mo/utils/logger.py", line 111, in wrapper
        function(*args, **kwargs)
      File "/opt/intel/openvino_2021/deployment_tools/model_optimizer/mo/utils/class_registration.py", line 302, in apply_transform
        raise Exception('Exception occurred during running replacer "{} ({})": {}'.format(
    Exception: Exception occurred during running replacer "ObjectDetectionAPIPreprocessor2Replacement (<class 'extensions.front.tf.ObjectDetectionAPI.ObjectDetectionAPIPreprocessor2Replacement'>)":
    [ ERROR ] ---------------- END OF BUG REPORT --------------
    [ ERROR ] -------------------------------------------------

Previously I had trained/fine-tuned/exported my EfficientDet-D0 in saved_model format in the TensorFlow Object Detection API 2.x using TensorFlow 2.5.

Sincerely,

Milos


Hi Zulkifli,

Tried converting EfficientDet-D0 trained on custom data using your command and got the same error as in the post above: the INTERNAL ERROR raised while running the replacer "ObjectDetectionAPIPreprocessor2Replacement", caused by an AssertionError at "assert len(start_nodes) >= 1" in /opt/intel/openvino_2021/deployment_tools/model_optimizer/extensions/front/tf/ObjectDetectionAPI.py, line 710. The full traceback is identical.


I get the same error, and the debugging documentation on "Graph/Sub-Graph" replacements is quite complicated to follow and use. All the models in the TensorFlow 2 OD API Model Zoo convert to OpenVINO IR files. But when we retrain any of the TF2 OD API models on a custom dataset, export it, and obtain a similar "saved_model", converting it to OpenVINO IR files hits this issue. I have not posted about this in the community before, but the problem has been there for a long time. I thought the latest OpenVINO version would have a fix for it. I am stuck here and thinking about switching to TensorRT.

It is strange that a "saved_model" from the TF2 Model Zoo converts, but a retrained model exported to a "saved_model" does not. Between the original TF2 model and the retrained model (on a custom dataset), I do not touch any hyperparameters. I change only the number of classes, the checkpoint files, the paths to the labelmap/tfrecord files, and the number of steps.


Hello Milos Acimovic,

Please share with us your custom-trained model. During the conversion, which OpenVINO version did you use?

Sincerely,

Zulkifli


I am sharing an example of a custom-trained model. In it you will find the saved_model, the pipeline.config and a sample image. Mind you, to get meaningful results the confidence threshold needs to be set lower than 0.5, to something like 0.1, but let's first get some bounding boxes from EfficientDet after it has been optimized with OpenVINO. The OpenVINO version I used was 2021.4.582.


Hello Macimovic, I used to have a workaround, but I am not sure whether it works on this model. Can you share the label file (.pbtxt file)? I can try. As I posted earlier, I have similar issues. I am reading the Intel documentation closely, and I have a gut feeling that there is a direct solution to this in the documentation. There must be more specific arguments that have to be passed so that the Model Optimizer can grasp the model before it converts it into the IR format. If it can convert a "saved_model" from the TF2 Model Zoo, it should also convert one that was retrained and exported as a "saved_model".

By the way, did you check, outside the TensorBoard evaluation, whether your retrained saved_model detects the target images? I can check that if you share the label_map.pbtxt file.


Here's the label_map.pbtxt if needed. I confirmed that the model is detecting; you can check the .png. That is why I wrote in the previous message that the confidence (score) threshold needs to be set low enough, somewhere around 0.1.
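How strongly the visible result depends on the score threshold can be checked by counting how many detections survive at several cut-offs. The sketch below uses made-up scores, not values from this model, and the helper name `count_above_threshold` is hypothetical.

```python
def count_above_threshold(scores, thresholds):
    """Return {threshold: number of scores >= threshold}."""
    return {t: sum(1 for s in scores if s >= t) for t in thresholds}


# Made-up confidence scores for illustration only.
scores = [0.42, 0.14, 0.12, 0.07, 0.03]
print(count_above_threshold(scores, [0.05, 0.1, 0.15, 0.5]))
```

With scores like these, a default threshold of 0.5 would show no boxes even though the model does produce detections.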


Hello Milos,

Generally, after training/evaluating the model on a custom dataset for a predetermined number of epochs, I first check for detections/bounding-box predictions outside the TensorFlow training/evaluation processes. Only then do I try to convert with OpenVINO and use the IR files on an Intel Atom/Myriad edge device. I have a Vizi-AI starter kit, and I can use it to deploy and check with the OpenVINO Inference Engine on the device. When I checked for offline detection using your "saved_model", label file and test image, it was not detecting (no bounding boxes, even with the threshold set to 0.1). I run a small Python script and pass CLI arguments; I will share those here.

This is the command I use in the CLI to check for detection:

python detect_objects.py --model_path=/home/kannan/Downloads/fruit_export/saved_model --path_to_labelmap=/home/kannan/Downloads/Fruits_label_map.pbtxt --images_dir=/home/kannan/Downloads/fruit_export/ --threshold 0.1

Do you test it offline for bounding-box detections and class predictions?

I have a few questions after looking at your pipeline.config file. Your num_classes = 1, but the label_map.pbtxt has 67 classes.

If you had retrained the model, your label_map.pbtxt file should show only one class.

Other than that, most of the changes you made are correct. In line 152 (fine_tune_checkpoint: "/home/agremo/ssd/playground/tf2/models/research/object_detection/test_data/chko/ckpt-0"), does this point to the checkpoint from the TF2 EfficientDet Model Zoo?

Does the OpenVINO issue crop up only when we try to convert a retrained model? It could be that some change in the retrained saved_model forces the Model Optimizer to exit with the exception. I am also working on the same issue. If Intel can crack this, it becomes a universal solution that will help you as well as me.

But please check what I have listed above.
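The class-count question raised above (num_classes in pipeline.config versus the entries in label_map.pbtxt) can be checked with a few lines of text matching. This is a rough sketch under the assumption that both files use the usual text-protobuf layout; the helper names and the inline sample text are hypothetical, and it deliberately avoids the Object Detection API's own protobuf parsers.

```python
import re


def count_label_map_items(label_map_text):
    """Count top-level 'item {' entries in a label_map.pbtxt string."""
    return len(re.findall(r"^\s*item\s*\{", label_map_text, flags=re.MULTILINE))


def read_num_classes(pipeline_config_text):
    """Return the first num_classes value in a pipeline.config string, or None."""
    match = re.search(r"num_classes\s*:\s*(\d+)", pipeline_config_text)
    return int(match.group(1)) if match else None


# Made-up sample content; real files would be read with open(...).read().
label_map_text = """
item {
  id: 1
  name: 'apple'
}
item {
  id: 2
  name: 'banana'
}
"""
pipeline_config_text = """
model {
  ssd {
    num_classes: 1
  }
}
"""

items = count_label_map_items(label_map_text)
num_classes = read_num_classes(pipeline_config_text)
if items != num_classes:
    print(f"Mismatch: label map has {items} items, pipeline.config has num_classes={num_classes}")
```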


Tested offline, all good.

The mismatch in the number of classes is not important. I just wanted to test the fine-tuning process, and I didn't care whether the object inside the bounding box was classified properly.

Yes, the fine_tune_checkpoint points to the efficientdet_d0_coco17_tpu-32.

Again, I can convert fine using model-optimizer\extensions\front\tf\efficient_det_support_api_v2.4.json, but I am not getting any predicted bounding boxes in the output blob.


I hope you used the image inside fruit_export.zip and not test.png, which I shared later with the label map.


I used both images. With the one inside fruit_export.zip (file name image1.jpg), I could not see any bounding boxes when I ran my Python code, whereas it was predicting on test.png, which already had bounding boxes drawn in the image; I could see bounding boxes over the existing bounding boxes. If I increase the threshold to 0.15, the bounding boxes vanish.

I am still looking into why it is not predicting on both images.

By the way, 3000 steps is too few for the model to learn. Can you increase the number of steps to 10,000 or 20,000? If you have a GPU, training Efficientdet B0 with a batch size of 4 should not take more than 1 hour.

If you can share the train.tfrecords and test.tfrecord files, I can run it and see how it runs on my GPU. I have tweaked my system to run training and testing concurrently. For every 1000 steps of training, when TF updates the checkpoint files, I can visualize the training results and their metrics (coco_detection_metric) in TensorBoard. I will share the TensorBoard results and the exported "saved_model" files with you once the training is finished.


Vicky_Rama, thanks for your help. Try setting the threshold to 0.05.

The dataset is freely available on https://public.roboflow.com/object-detection/synthetic-fruit/1 .

This model is only for showcasing my problem; my actual dataset and training time are different, and the threshold that is good enough there is higher than 0.5.

In the end, all of this is beside the point; the problem I am having is with OpenVINO.


Milos, I will check the dataset on Roboflow.

This query is to Intel:

(1) Do you advise freezing the model? A TF2 saved_model does not require freezing, and the TF2 Model Zoo does not have frozen models, unlike TF1. We can still freeze the saved_model to a frozen_inference_graph, and I remember that OpenVINO converted to the IR format when I froze the TF2 saved_model.

Intel, your inputs please.


Hello Vicky,

Thank you for your question and help with this issue.

It is recommended to freeze the model before converting it to IR format. The conversion command will be a bit different. Here is the conversion script for your reference.

Sincerely,

Zulkifli
