Hello

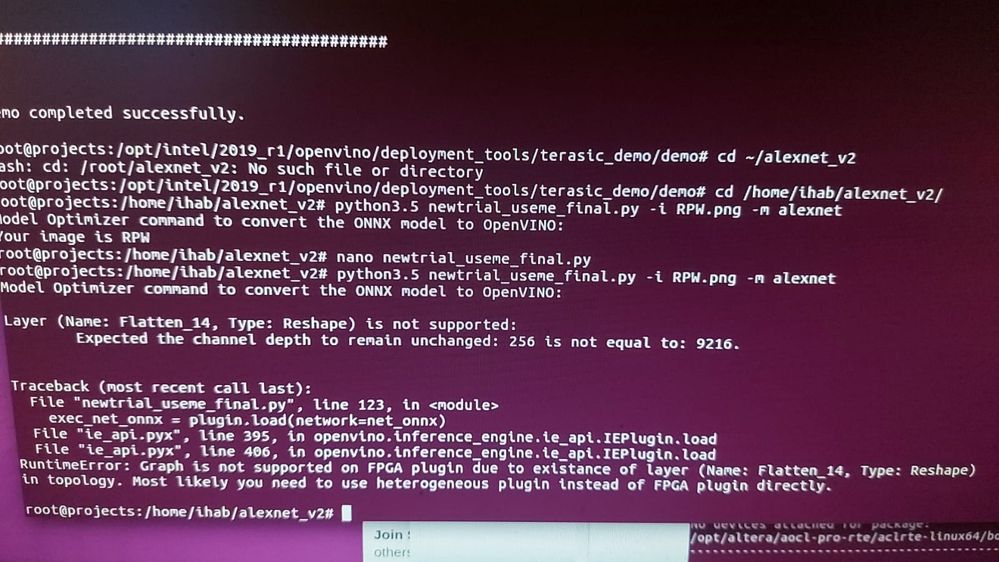

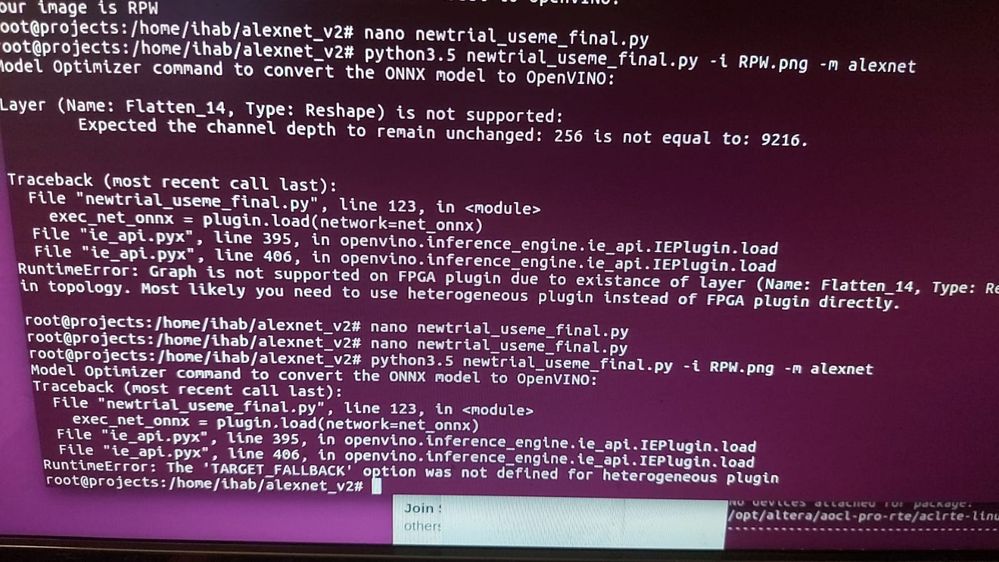

I have a problem running my Python inference script on FPGA or HETERO, although it runs fine on CPU.

Linux: Ubuntu 16.04

Python: 3.5

OpenVINO: 2019 R1

<INSTALL_DIR>/deployment_tools/model_optimizer/mo_onnx.py --input_model alexnet.onnx --input_shape "[1,3, 224, 224]" --mean_values "[0.485, 0.456, 0.406]" --scale_values "[58.395, 57.12 , 57.375]" --data_type "FP32" --output_dir .

script.py -m alexnet -i RPW.png -d FPGA

script.py -m alexnet -i RPW.png -d HETERO

On the other hand, the SqueezeNet example from the OpenVINO samples works normally on both HETERO and FPGA.

The code:

    import sys
    import time
    from pathlib import Path

    import cv2
    import matplotlib.pyplot as plt
    import numpy as np
    import torch
    import argparse
    import os
    from openvino.inference_engine import IENetwork, IEPlugin

    parser = argparse.ArgumentParser()
    parser.add_argument("-m")
    parser.add_argument("-i")
    parser.add_argument("-d")
    args = parser.parse_args()

    model=os.path.abspath(args.m)
    imageName=os.path.abspath(args.i)
    deviceName=args.d

    BASE_MODEL_NAME = model
    model_path=model + ".pytorch"
    onnx_path = model + ".onnx"
    ir_path = model + ".xml"

    if not os.path.exists(onnx_path):
        dummy_input = torch.randn(1, 3, 224, 224)
        # For the Fastseg model, setting do_constant_folding to False is required
        # for PyTorch>1.5.1
        torch.onnx.export(
            model,
            dummy_input,
            onnx_path,
            opset_version=11,
            do_constant_folding=False,
        )

    def normalize(image):
        """
        Normalize the image to the given mean and standard deviation
        for CityScapes models.
        """
        image = image.astype(np.float32)
        mean = (0.485, 0.456, 0.406)
        std = (0.229, 0.224, 0.225)
        image /= 255.0
        image -= mean
        image /= std
        return image

    image = cv2.cvtColor(cv2.imread(imageName), cv2.COLOR_BGR2RGB)
    resized_image = cv2.resize(image, (224, 224))
    input_image = np.expand_dims(np.transpose(resized_image, (2, 0, 1)), 0)

    net_onnx= IENetwork(model="alexnet.xml", weights="alexnet.bin")
    plugin = IEPlugin(device=deviceName)
    exec_net_onnx = plugin.load(network=net_onnx)

    input_layer_onnx = next(iter(exec_net_onnx.inputs))
    output_layer_onnx = next(iter(exec_net_onnx.outputs))

    # Run the Inference on the Input image...
    res_onnx = exec_net_onnx.infer(
        inputs={input_layer_onnx: input_image}
    )
    res_onnx = res_onnx[output_layer_onnx]
    #print(res_onnx)

    result_mask_onnx = np.squeeze(np.argmax(res_onnx, axis=1)).astype(np.uint8)
    #print(result_mask_onnx)

    if (result_mask_onnx == 0):
        print("Your image is not an RPW")
    elif (result_mask_onnx == 1):
        print("Your image is RPW")
    #show_image_and_result(image, result_mask_onnx)

Hi ahmed_hany,

The correct way to use the HETERO plugin is to list the target devices in priority order: -d HETERO:Device_1,Device_2

Please try running the Python script again with the command below:

script.py -m alexnet -i RPW.png -d HETERO:FPGA,CPU
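The device-string format above can be checked before the plugin is created. The following is a minimal sketch only; `parse_device` is a hypothetical helper, not part of the OpenVINO API, and it assumes the `-d` value is either a plain device name or `HETERO:` followed by a comma-separated list.

```python
def parse_device(device):
    """Split a -d value into (plugin_name, fallback_devices).

    Hypothetical helper for illustration; not an OpenVINO function.
    """
    name, _, rest = device.partition(":")
    if name != "HETERO":
        # Plain device such as CPU or FPGA: there is no fallback list.
        return name, []
    # Devices are listed in priority order, e.g. FPGA first, then CPU.
    devices = [d.strip() for d in rest.split(",") if d.strip()]
    if not devices:
        raise ValueError("HETERO needs a device list, e.g. HETERO:FPGA,CPU")
    return name, devices


print(parse_device("HETERO:FPGA,CPU"))
```

With a check like this, passing a bare `-d HETERO` fails early with a readable message instead of failing inside the plugin.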

Regards,

Peh

Thanks for the help.

How do I run with the FPGA plugin only? Why doesn't it work with -d FPGA?

Hi ahmed_hany,

This is because your model contains some layers that the FPGA plugin does not support. With the HETERO plugin, those unsupported layers fall back to the CPU plugin.
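To see which layers are affected, the OpenVINO Python samples of that era compare `plugin.get_supported_layers(net)` against `net.layers`. The sketch below follows that pattern under the assumption that the same call is usable with the plugin in question. `FakePlugin`, `FakeNet` and the layer names are made-up stand-ins so the snippet runs without OpenVINO installed; they say nothing about which AlexNet layers the FPGA plugin actually rejects.

```python
def find_unsupported_layers(plugin, net):
    """Return the layer names in net that plugin does not report as supported."""
    supported = set(plugin.get_supported_layers(net))
    return sorted(name for name in net.layers if name not in supported)


class FakePlugin:
    """Stand-in for IEPlugin (assumption: only this one method is needed)."""

    def get_supported_layers(self, net):
        return {"layer_a", "layer_b"}


class FakeNet:
    """Stand-in for IENetwork; layers maps layer names to layer objects."""

    layers = {"layer_a": None, "layer_b": None, "layer_c": None}


print(find_unsupported_layers(FakePlugin(), FakeNet()))
```

With the real API, the same function would be called with the `IEPlugin` and `IENetwork` objects from the script; a non-empty result lists the layers that need a fallback device.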

Regards,

Peh

It worked with HETERO, but it gives the opposite answer to CPU.

Why can this happen, or at least how can I debug it?

My model has 2 classes.

In my test, CPU gives the right answer while HETERO gives the wrong one.
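One way to start debugging a mismatch like this is to run the same input on both devices and compare the raw output scores, not only the final class. The sketch below is illustrative: `compare_outputs` is a hypothetical helper, the score values are invented, and the inputs are assumed to be flat lists of floats (for example `res_onnx.ravel().tolist()` from each run).

```python
def compare_outputs(reference, candidate):
    """Compare two flat score lists, e.g. CPU output vs HETERO output."""
    if len(reference) != len(candidate):
        raise ValueError("outputs must have the same length")
    # Largest element-wise deviation between the two runs.
    max_abs_diff = max(abs(r - c) for r, c in zip(reference, candidate))
    # Index of the highest score in each run (the predicted class).
    ref_class = max(range(len(reference)), key=reference.__getitem__)
    cand_class = max(range(len(candidate)), key=candidate.__getitem__)
    return {
        "max_abs_diff": max_abs_diff,
        "reference_class": ref_class,
        "candidate_class": cand_class,
        "same_class": ref_class == cand_class,
    }


# Invented scores for a 2-class model, for illustration only.
cpu_scores = [0.1, 0.9]
hetero_scores = [0.8, 0.2]
print(compare_outputs(cpu_scores, hetero_scores))
```

A small `max_abs_diff` with a flipped class suggests the scores are close to the decision boundary, while a large difference points at the layers running on the other device.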

Hi Ahmed_hany,

Please try running inference with an FP16 model to see whether it helps. You need to re-convert the model with the option --data_type FP16.
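The re-conversion can be scripted so that the FP16 and FP32 IR files are generated with otherwise identical options. This is a sketch only: `build_mo_command` is a hypothetical helper, the `python3` launcher and the separate output directories are assumptions, and the remaining flags are copied from the conversion command in the original post.

```python
import os


def build_mo_command(install_dir, input_model, data_type, output_dir="."):
    """Build the Model Optimizer argument list for one precision."""
    if data_type not in ("FP16", "FP32"):
        raise ValueError("data_type must be FP16 or FP32")
    script = os.path.join(
        install_dir, "deployment_tools", "model_optimizer", "mo_onnx.py"
    )
    return [
        "python3", script,  # assumption: the script is run with python3
        "--input_model", input_model,
        "--input_shape", "[1,3,224,224]",
        "--mean_values", "[0.485,0.456,0.406]",
        "--scale_values", "[58.395,57.12,57.375]",
        "--data_type", data_type,
        "--output_dir", output_dir,
    ]


# <INSTALL_DIR> stays a placeholder; replace it with the real install path.
for precision in ("FP32", "FP16"):
    cmd = build_mo_command("<INSTALL_DIR>", "alexnet.onnx", precision,
                           output_dir="ir_" + precision.lower())
    print(" ".join(cmd))
```

Writing each precision to its own directory keeps one conversion from overwriting the other; the list can be passed to `subprocess.run` once the placeholder path is filled in.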

Regards,

Peh

Changing the data type didn't help. FP16 and FP32 give similar results, and in both cases the HETERO results differ from the CPU results.

Hi Ahmed_hany,

There is a known issue (ID:58) about accuracy problems on FPGA with the AlexNet model. The workaround is to upgrade OpenVINO™ to the 2019 R2 release.

However, the previous versions are no longer available. In this case, please upgrade to the OpenVINO™ 2020.3.2 LTS release. The Intel® Distribution of OpenVINO™ toolkit 2020.3.X LTS releases will continue to support Intel® Vision Accelerator Design with an Intel® Arria® 10 FPGA and the Intel® Programmable Acceleration Card with Intel® Arria® 10 GX FPGA.

Regards,

Peh

Does OpenVINO 2020.3.x LTS support the Intel starter platform for OpenVINO toolkit FPGA with Cyclone V?

Thanks,

Ahmed

Hi Ahmed,

For your information, Intel® Arria® 10 GX FPGA Development Kit is no longer supported in 2019 R2 as well as 2020.3.x LTS.

In addition, FPGA support in OpenVINO™ ended in October 2021. Intel has transitioned to the next-generation programmable deep-learning solution based on FPGAs, Intel® FPGA AI Suite.

The devices supported by Intel® FPGA AI Suite are Intel® Agilex™ FPGA, Intel® Cyclone® 10 GX FPGA, and Intel® Arria® 10 FPGA.

Please direct inquiries about Intel® FPGA AI Suite to your Intel Programmable Solutions Group account manager, or subscribe for the latest updates.

Regards,

Peh

Hi, Peh_intel. Can you please guide me on where I can get the older versions of OpenVINO with FPGA support or Intel FPGA AI Suite? Thank you.

Hi Ahmed,

This thread will no longer be monitored since we have provided answers and suggestions. If you need any additional information from Intel, please submit a new question.

Regards,

Peh

Hi, Ahmed. I am trying to accelerate a CNN model on FPGA using Intel OpenVINO. I am doing my project on Windows. I have generated the IR files for the pre-trained VGG16 CNN model using the Model Optimizer in OpenVINO and run it on CPU and GPU. Now I am trying to accelerate it on FPGA; however, FPGA support in Intel OpenVINO ended in October 2021. How did you run inference on FPGA this year? Please, I need your guidance. I am in a bit of trouble, as I need to complete my project this month. Thank you.
