I can successfully run Mask R-CNN with the OpenVINO optimizer. However, the execution time is quite long. On CPU, mask_rcnn_demo takes 4.5 s per iteration. I have written a Python version of it and the result is similar. I haven't tried it on GPU yet; can someone with experience tell me what performance to expect from Mask R-CNN on OpenVINO? It is even slower than running it with OpenCV, which I remember took about 2 s per iteration on my PC.

I read that it is not yet supported on VPU, but can a GPU improve the performance?

Thank you so much in advance,

Hoa

Hey!

From my own experience, I have noticed that the first image is always slower than the others.

For my own project, I process subimages from multiframe TIFF images, and I see that the first one takes maybe 2 or 3 times longer than the others. Maybe it is the time needed to load the model and network into RAM or cache memory... I don't know!
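One way to check whether the slow first image is a warm-up effect is to run one untimed inference before measuring. This is only a minimal sketch: `infer` is a hypothetical callable wrapping whatever engine is in use (OpenVINO, OpenCV DNN, ...), and the names `time_inference` and `frames` are illustrative, not part of any library.

```python
import time


def time_inference(infer, frames, warmup=1):
    """Return per-frame latency in ms, skipping the first `warmup` frames.

    `infer` is a hypothetical callable that runs one inference on one frame.
    """
    # Warm-up runs: executed but not timed, so one-off costs
    # (memory allocation, caches, lazy initialization) are excluded.
    for frame in frames[:warmup]:
        infer(frame)

    timings_ms = []
    for frame in frames[warmup:]:
        start = time.perf_counter()
        infer(frame)
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    return timings_ms
```

If the timed frames are all close to each other while a separate measurement of the first call is much larger, the extra cost is most likely one-off initialization rather than the model itself.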

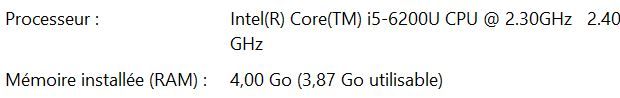

I recorded my inference times for 800x1067 images on an Intel i5-6200U. The compute times look like this:

[Info] Classes Definition Succeed...
[Info] Colors Definition Succeed...
Creating Directory... ./Results ... Succeed
Image 1 elapsed time: 3709ms
Image 3 elapsed time: 2217ms
Image 5 elapsed time: 2210ms
Image 7 elapsed time: 2223ms
Image 9 elapsed time: 2236ms
Image 11 elapsed time: 2393ms
Image 13 elapsed time: 2288ms
Image 15 elapsed time: 2255ms
Saving File... ./Results\05899706_k_p1.tif

This time includes:

- Resize subimage

- Inference

- Draw defects
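Since the elapsed time covers three stages, it can help to time each one separately to see how much is really inference. A rough sketch, assuming hypothetical `resize`, `infer` and `draw` callables that stand in for the real steps (none of these names come from OpenVINO or OpenCV):

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Accumulated time per stage, in milliseconds.
stage_ms = defaultdict(float)


@contextmanager
def timed(stage):
    """Add the wall-clock time of the enclosed block to stage_ms[stage]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        stage_ms[stage] += (time.perf_counter() - start) * 1000.0


def process(image, resize, infer, draw):
    """Run the three stages on one image, timing each separately.

    `resize`, `infer` and `draw` are hypothetical callables supplied by the caller.
    """
    with timed("resize"):
        resized = resize(image)
    with timed("inference"):
        result = infer(resized)
    with timed("draw"):
        return draw(resized, result)
```

After processing a batch, `dict(stage_ms)` shows the total time spent in each stage.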

I see exactly the same behavior when I use the OpenCV DNN engine:

[Info] Classes Definition Succeed...
[Info] Colors Definition Succeed...
Creating Directory... ./Results ... Succeed
Image 1 elapsed time: 4688ms
Image 3 elapsed time: 4111ms
Image 5 elapsed time: 4048ms
Image 7 elapsed time: 3987ms
Image 9 elapsed time: 4004ms
Image 11 elapsed time: 4446ms
Image 13 elapsed time: 4337ms
Image 15 elapsed time: 4124ms
Saving File... ./Results\05899706_k_p1.tif
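To compare the two logs, the first image can be separated from the rest and the remaining timings averaged. The sketch below parses the timing lines quoted in this post; it assumes the first log comes from the OpenVINO run and the second from OpenCV DNN, and the helper names are illustrative.

```python
import re
from statistics import mean

# Timing lines copied from the two logs in this post.
# Assumption: the first log is the OpenVINO run, the second is OpenCV DNN.
OPENVINO_LOG = (
    "Image 1 elapsed time: 3709ms Image 3 elapsed time: 2217ms "
    "Image 5 elapsed time: 2210ms Image 7 elapsed time: 2223ms "
    "Image 9 elapsed time: 2236ms Image 11 elapsed time: 2393ms "
    "Image 13 elapsed time: 2288ms Image 15 elapsed time: 2255ms"
)
OPENCV_LOG = (
    "Image 1 elapsed time: 4688ms Image 3 elapsed time: 4111ms "
    "Image 5 elapsed time: 4048ms Image 7 elapsed time: 3987ms "
    "Image 9 elapsed time: 4004ms Image 11 elapsed time: 4446ms "
    "Image 13 elapsed time: 4337ms Image 15 elapsed time: 4124ms"
)


def summarize(log):
    """Split a log into first-image time and the mean of the remaining images."""
    times = [int(ms) for ms in re.findall(r"elapsed time: (\d+)ms", log)]
    first, rest = times[0], times[1:]
    steady = mean(rest)
    return {"first_ms": first, "steady_ms": steady, "first_vs_steady": first / steady}


if __name__ == "__main__":
    for name, log in (("OpenVINO", OPENVINO_LOG), ("OpenCV DNN", OPENCV_LOG)):
        s = summarize(log)
        print(f"{name}: first {s['first_ms']} ms, steady {s['steady_ms']:.0f} ms, "
              f"ratio {s['first_vs_steady']:.2f}")
```

On these numbers the steady-state averages come out at roughly 2260 ms and 4151 ms, so the first run is about 1.8 times faster per image than the second once the first image is excluded.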

Wow, it's quite strange that it takes longer for me than for you. Which code (sample) exactly did you use? I ran it in Python with images taken from a webcam. For the Mask R-CNN demo I ran just 1 image.

Thank you,

Hoa

Now the inference time in my case has dropped to 2 s/frame, like yours. However, it is still slow. I'm thinking about trying other models, such as Mask R-CNN with a MobileNet backbone, or DeepLab following this page: https://github.com/PINTO0309/OpenVINO-DeeplabV3 . I would really appreciate advice from anyone with experience on this.

Thank you in advance

Hoa

@Katsuya,

Hi, "dhoa" over there is me :D. Thank you for coming here; I'm a rather anxious person, so I ask questions everywhere.

I'm now testing several models on an UP Squared board with an Intel Pentium N4200.

- Mask R-CNN is just too slow. I get 0.1 FPS on CPU and 0.2 FPS on GPU.

- DeepLab, as you said, is not fully supported by OpenVINO, so some layers need to be offloaded to TensorFlow. However, I have not yet managed to install TensorFlow on the UP board. I think I need to build it from source rather than just use pip. Because of this difficulty, I went back and checked your UNet model. I found that it runs at about 5 FPS (same as DeepLab) on CPU on my computer, so I tried it on the UP board.

- UNet on the UP board: CPU: 1.6 FPS, GPU: 2.5 FPS
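To put these FPS figures next to the per-frame times quoted earlier in the thread, they can be converted to milliseconds per frame. A small sketch using only the numbers listed above (the dictionary layout and function name are just for illustration):

```python
def ms_per_frame(fps):
    """Convert frames per second to milliseconds per frame."""
    return 1000.0 / fps


# FPS figures reported above for the UP board.
results = {
    "Mask R-CNN": {"CPU": 0.1, "GPU": 0.2},
    "UNet": {"CPU": 1.6, "GPU": 2.5},
}

if __name__ == "__main__":
    for model, fps in results.items():
        speedup = fps["GPU"] / fps["CPU"]
        print(f"{model}: CPU {ms_per_frame(fps['CPU']):.0f} ms/frame, "
              f"GPU {ms_per_frame(fps['GPU']):.0f} ms/frame, "
              f"GPU speedup {speedup:.2f}x")
```

So 2.5 FPS corresponds to 400 ms per frame, and 0.1 FPS to about 10 s per frame.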

For me, 2.5 FPS is still slow. I'm staying with UNet for now because it is easy to use with OpenVINO, but I would really appreciate any new ideas on this segmentation problem.

Thank you,

Hi @Hyodo Katsuya, can I ask how you got your UNet model? I can't find a verified frozen UNet model (for example, one trained on Pascal VOC) that performs as well as the DeepLab model you shared.

Thank you in advance,
