I have a folder with three files (saved_model.bin, saved_model.mapping, saved_model.xml), as explained by Intel at this link:

PROCESS THAT I FOLLOWED:

I created the three files by following the process from the TensorFlow website:

https://www.tensorflow.org/api_docs/python/tf/saved_model/save

I have created the IR:

https://docs.openvino.ai/2021.4/openvino_docs_get_started_get_started_demos.html

Once I have the IR, how can I process it with the Neural Compute Stick 2?

Hi scavallarin,

Thank you for reaching out to us.

The OpenVINO toolkit provides plenty of inference samples and demos for various inference use cases using IR models.

You can follow this guide to run the Image Classification code sample or the Security Barrier Camera Demo application with the corresponding models:

| Model Name | Code Sample or Demo App |
|---|---|
| squeezenet1.1 | Image Classification Sample |
| vehicle-license-plate-detection-barrier-0106 | Security Barrier Camera Demo |
| vehicle-attributes-recognition-barrier-0039 | Security Barrier Camera Demo |
| license-plate-recognition-barrier-0001 | Security Barrier Camera Demo |

To run the inference samples and demos on the Intel® Neural Compute Stick 2 (NCS2), use the -d MYRIAD device flag when executing the samples or demos.

On another note, if you're using Linux OS with NCS2, you will need to install the driver dependencies for Intel® Neural Compute Stick 2 as described here before inferencing.

Regards,

Hairul

Hi Hairul,

I do know how to install the drivers on Windows and Linux. My problem is not how to run the sample models; for that I just have to read the pages on the Intel website that describe the process. The installation process is explained.

What I find completely missing is an explanation for someone who wants to use their own TensorFlow model. I would like to get it clear in my mind how to start from a simple .py script, convert it into IR format, and then execute it on the stick.

The stick looks to me like something that can only run prebuilt models. I can't find documentation that helps me start from a .py file and use the stick properly. There is something I'm missing about this device.

When you wrote:

"To perform the inference samples and demos on Intel® Neural Compute Stick 2 (NCS2), use the -d MYRIAD device flag when executing the samples or demos."

This is fine, easy, and straightforward. I have already run samples on the stick, but like a monkey pressing buttons, without a clear understanding of what I was doing. I want to run my model, my code.

Can you please give me a precise list of actions, step-by-step instructions on how to run this code on the stick:

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers


class Antirectifier(layers.Layer):
    def __init__(self, initializer="he_normal", **kwargs):
        super(Antirectifier, self).__init__(**kwargs)
        self.initializer = keras.initializers.get(initializer)

    def build(self, input_shape):
        output_dim = input_shape[-1]
        self.kernel = self.add_weight(
            shape=(output_dim * 2, output_dim),
            initializer=self.initializer,
            name="kernel",
            trainable=True,
        )

    def call(self, inputs):
        inputs -= tf.reduce_mean(inputs, axis=-1, keepdims=True)
        pos = tf.nn.relu(inputs)
        neg = tf.nn.relu(-inputs)
        concatenated = tf.concat([pos, neg], axis=-1)
        mixed = tf.matmul(concatenated, self.kernel)
        return mixed

    def get_config(self):
        # Implement get_config to enable serialization. This is optional.
        base_config = super(Antirectifier, self).get_config()
        config = {"initializer": keras.initializers.serialize(self.initializer)}
        return dict(list(base_config.items()) + list(config.items()))


# Training parameters
batch_size = 128
num_classes = 10
epochs = 3

# The data, split between train and test sets
(x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data()

x_train = x_train.reshape(-1, 784)
x_test = x_test.reshape(-1, 784)
x_train = x_train.astype("float32")
x_test = x_test.astype("float32")
x_train /= 255
x_test /= 255
print(x_train.shape[0], "train samples")
print(x_test.shape[0], "test samples")

# Build the model
model = keras.Sequential(
    [
        keras.Input(shape=(784,)),
        layers.Dense(256),
        Antirectifier(),
        layers.Dense(256),
        Antirectifier(),
        layers.Dropout(0.5),
        layers.Dense(10),
    ]
)

# Compile the model
model.compile(
    loss=keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    optimizer=keras.optimizers.RMSprop(),
    metrics=[keras.metrics.SparseCategoricalAccuracy()],
)

# Train the model
model.fit(x_train, y_train, batch_size=batch_size, epochs=epochs, validation_split=0.15)

# Test the model
model.evaluate(x_test, y_test)

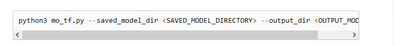

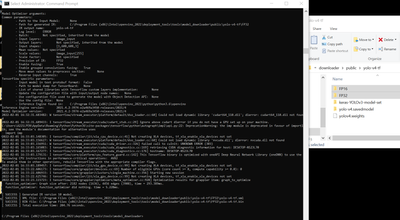

STEP 1: MODEL OPTIMIZER

Execute mo_tf.py (which I suppose does the job of executing the conversion):

--output_dir <OUTPUT

This converts the model into IR format, so I will have three files.

STEP 2

????

Hi scavallarin,

OpenVINO Toolkit is easy to use, and there are only three simple steps involved.

Step 1: Build

Use the Open Model Zoo to find open-source, pretrained, and preoptimized models ready for inference, or use your own deep-learning model.

Step 2: Optimize

Run the trained model through the Model Optimizer to convert the model to an Intermediate Representation (IR), which is represented in a pair of files (.xml and .bin). These files describe the network topology and contain the weights and biases binary data of the model.

Step 3: Deploy

Use the Inference Engine to run inference and output results on multiple processors, accelerators, and environments with a write once, deploy anywhere efficiency.
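To make the "pair of files" from Step 2 more concrete: the .xml half of an IR is ordinary XML, so its topology can be listed with the Python standard library. The snippet below is only a sketch. The embedded XML is a hand-written, heavily simplified stand-in (the layer names are made up), and a real file written by the Model Optimizer carries many more attributes per layer.

```python
import xml.etree.ElementTree as ET

# Hand-written, simplified stand-in for an IR .xml file (assumption: real
# files contain ports, shapes, and other attributes omitted here).
SAMPLE_IR_XML = """\
<net name="saved_model" version="10">
  <layers>
    <layer id="0" name="input_1" type="Parameter" version="opset1"/>
    <layer id="1" name="dense/MatMul" type="MatMul" version="opset1"/>
    <layer id="2" name="output" type="Result" version="opset1"/>
  </layers>
  <edges>
    <edge from-layer="0" from-port="0" to-layer="1" to-port="0"/>
    <edge from-layer="1" from-port="1" to-layer="2" to-port="0"/>
  </edges>
</net>
"""


def summarize_ir(xml_text):
    """Return (network name, [(layer name, layer type), ...]) from IR XML text."""
    root = ET.fromstring(xml_text)
    layers = [(layer.get("name"), layer.get("type")) for layer in root.iter("layer")]
    return root.get("name"), layers


name, layers = summarize_ir(SAMPLE_IR_XML)
print("network:", name)
for layer_name, layer_type in layers:
    print(f"{layer_type:10s} {layer_name}")
```

For a real IR, the same function can be fed the contents of the .xml file; the weights themselves live in the .bin file and are not readable this way.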

OpenVINO provides demos and samples to help you implement specific deep learning scenarios. You can try running your converted model (IR) with the relevant supported demos and samples.

For example, if you have an Object Detection model, you can try using your model with the Object Detection Python Demo or Object Detection C++ Demo. To run the demos on the Neural Compute Stick 2 (NCS2), you only need to use the -d MYRIAD device flag. Full information on running the demos is available on the respective pages, and you can refer to this video detailing the inference process on NCS2.

You can also create your own demo script to test with your custom model. You may refer to the relevant demos and samples to create your own application.
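Such a script can be quite short. The following is a minimal sketch, assuming the OpenVINO 2021.4 Python API (`openvino.inference_engine.IECore`) and a model with a single input and a single output. The function names `ir_weights_path` and `run_on_device` are made up for illustration, and the OpenVINO import is kept inside the function so the path helper works even where OpenVINO is not installed.

```python
from pathlib import Path


def ir_weights_path(xml_path):
    """Return the .bin path that sits next to an IR .xml file."""
    return Path(xml_path).with_suffix(".bin")


def run_on_device(xml_path, input_array, device="MYRIAD"):
    """Load an IR and run one synchronous inference on the given device.

    Assumes a single-input, single-output network; input_array must
    already have the shape the network expects.
    """
    # Imported lazily: requires an OpenVINO 2021.x installation.
    from openvino.inference_engine import IECore

    ie = IECore()
    net = ie.read_network(model=str(xml_path), weights=str(ir_weights_path(xml_path)))

    input_name = next(iter(net.input_info))   # name of the only input
    output_name = next(iter(net.outputs))     # name of the only output

    # device_name="MYRIAD" targets the Neural Compute Stick 2.
    exec_net = ie.load_network(network=net, device_name=device)
    result = exec_net.infer(inputs={input_name: input_array})
    return result[output_name]
```

With OpenVINO and NumPy installed and the stick plugged in, a call such as `run_on_device("ir/FP16/saved_model.xml", np.zeros((1, 784), dtype=np.float32))` (a hypothetical path and input shape) would return the raw output array. Passing `device="CPU"` is a convenient way to check the IR before involving the NCS2.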

Regards,

Hairul

This is not a reply; it is a CTRL+C and CTRL+V from a document.

Are you sure that you actually know how to do it?

Everywhere there are just demos and prebuilt models. My question is crystal clear: how can I run my model?

Could you please ask internally whether someone can explain to me how to do this? How do I run my code?

Many thanks

s

First and foremost, you need to distinguish between a model and inferencing code.

A model can originate from PyTorch, ONNX, etc.

It is typically defined in a Python script (model.py) and later saved in a specific model format (e.g., model.onnx, model.caffe).

Meanwhile, the inferencing code (from the OpenVINO perspective) uses the OpenVINO Inference Engine library, combined with other required libraries, to interpret the model's output as the desired representation (e.g., object detection, classification).
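As an illustration of the "interpretation" half, here is a small sketch of what inferencing code might do with the raw output of a classifier. It assumes the model returns one logit per class (as the 10-class MNIST model earlier in this thread does); the logit values are invented for the example, and only the standard library is used.

```python
import math


def softmax(logits):
    """Convert raw logits into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits, k=3):
    """Return the k most likely (class index, probability) pairs."""
    probs = softmax(logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(i, probs[i]) for i in ranked[:k]]


# Made-up logits standing in for one inference result over 10 classes.
logits = [0.1, 2.5, -1.0, 0.3, 0.0, 4.2, -0.7, 1.1, 0.2, -2.0]
for class_id, prob in top_k(logits):
    print(f"class {class_id}: {prob:.3f}")
```

The model file knows nothing about this step; it only produces numbers. Turning them into "this image is a 5" is the job of the code around it.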

These are the steps to infer a model with MYRIAD:

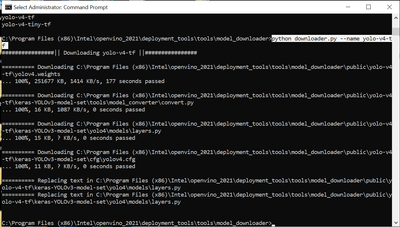

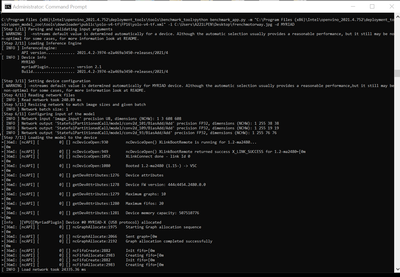

1. Download a model (I'm using the OpenVINO Model Downloader): python downloader.py --name yolo-v4-tf

2. Convert the model from its native format into IR (.xml and .bin): python converter.py --name yolo-v4-tf

Note: I'm using a model downloaded with the official OpenVINO Model Downloader, so I can use this conversion script.

If you are using a third-party model (downloaded from somewhere else or custom-made), you'll need to use the Model Optimizer.

You may refer here: Converting a TensorFlow Model

Ensure your TensorFlow topology is listed in the Supported Topologies section; otherwise, it's not supported.

If your model is not frozen, you'll need to freeze it. All the details, including baby steps, are available in that documentation.

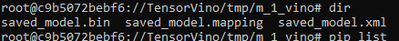

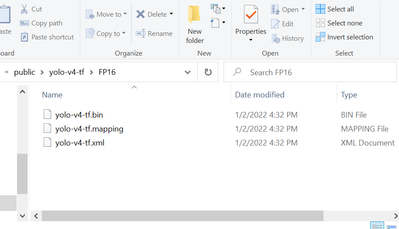

Notice that there are two new folders named FP32 and FP16. These folders hold the IR files, and FP32 and FP16 are the names of the IR data types. Make sure each folder has three files in it (.xml, .bin, .mapping).

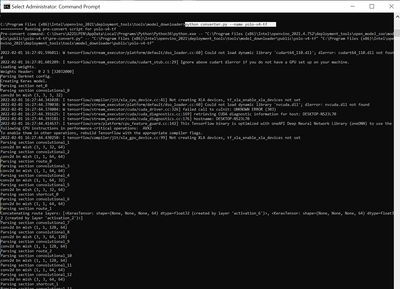

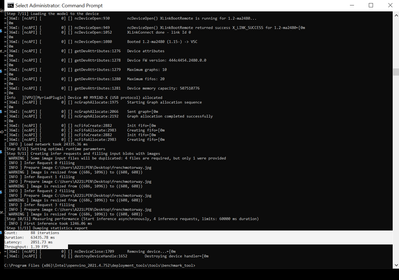

3. Infer the IR using the OpenVINO Benchmark App (the simplest inference sample app for testing): python benchmark_app.py -m "C:\Program Files (x86)\Intel\openvino_2021.4.752\deployment_tools\open_model_zoo\tools\downloader\public\yolo-v4-tf\FP16\yolo-v4-tf.xml" -i C:\Users\A221LPEN\Desktop\frenchmotorway.jpg -d MYRIAD

Pass the parameter -d MYRIAD in the command to infer on the NCS2.

Please note that for MYRIAD you must use the FP16 format.
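The three steps above end with a command that points at the FP16 IR. As a rough sketch, the lookup of that IR and the assembly of the Benchmark App command could be scripted as follows. The helper names `find_ir` and `benchmark_command` are hypothetical, the folder layout (`<model dir>/FP16/*.xml`) follows the one described above, and the demo creates empty placeholder files in a temporary directory rather than touching a real model. Nothing is executed; the command is only built and printed.

```python
import tempfile
from pathlib import Path


def find_ir(model_dir, precision="FP16"):
    """Return the .xml path in <model_dir>/<precision>, checking the .bin exists."""
    folder = Path(model_dir) / precision
    xml_files = sorted(folder.glob("*.xml"))
    if not xml_files:
        raise FileNotFoundError(f"no .xml file found in {folder}")
    xml_path = xml_files[0]
    if not xml_path.with_suffix(".bin").exists():
        raise FileNotFoundError(f"missing weights file for {xml_path}")
    return xml_path


def benchmark_command(xml_path, image_path, device="MYRIAD"):
    """Build the benchmark_app.py argument list (-m, -i, -d) without running it."""
    return ["python", "benchmark_app.py",
            "-m", str(xml_path), "-i", str(image_path), "-d", device]


# Demo on a throwaway directory with empty placeholder files.
with tempfile.TemporaryDirectory() as tmp:
    fp16 = Path(tmp) / "FP16"
    fp16.mkdir()
    for suffix in (".xml", ".bin", ".mapping"):
        (fp16 / f"yolo-v4-tf{suffix}").touch()

    xml_path = find_ir(tmp)
    command = benchmark_command(xml_path, "frenchmotorway.jpg")
    print(command)
```

The resulting list could be handed to `subprocess.run` from the directory that contains benchmark_app.py.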

If you manage to reach this stage, you can graduate from the baby steps and proceed to the advanced process.

You need to know when to specify the input shape and when to reverse the input channels, which varies according to your custom model.

Pass these arguments to the Model Optimizer command, and if you fulfill all the requirements, you'll get proper IR files.
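For a custom TensorFlow model exported as a SavedModel directory, the Model Optimizer call with those arguments might be put together as sketched below. This assumes the 2021.4 `mo_tf.py` script and its `--saved_model_dir`, `--output_dir`, `--data_type`, `--input_shape`, and `--reverse_input_channels` options. The directory names and the `[1,784]` shape (matching the flattened MNIST model from earlier in the thread) are illustrative assumptions, and the helper `mo_command` is hypothetical. The command is only built and printed, not run.

```python
import shlex


def mo_command(saved_model_dir, output_dir, input_shape=None,
               reverse_input_channels=False, data_type="FP16"):
    """Build a Model Optimizer argument list for a TensorFlow SavedModel."""
    cmd = ["python", "mo_tf.py",
           "--saved_model_dir", saved_model_dir,
           "--output_dir", output_dir,
           "--data_type", data_type]  # FP16 is what MYRIAD requires
    if input_shape is not None:
        # The shape is passed as a bracketed, comma-separated list, e.g. [1,784].
        cmd += ["--input_shape", "[" + ",".join(str(d) for d in input_shape) + "]"]
    if reverse_input_channels:
        # Only relevant for image inputs whose channel order must be swapped.
        cmd.append("--reverse_input_channels")
    return cmd


# Hypothetical paths; the flat 784-value input has no colour channels to reverse.
cmd = mo_command("saved_model", "ir/FP16", input_shape=(1, 784))
print(" ".join(shlex.quote(arg) for arg in cmd))
```

Whether a given model needs `--input_shape` or `--reverse_input_channels` depends on how it was trained, so the defaults here should not be read as a recommendation.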

The inference steps and concepts are the same as those shown above.

Hope this helps!

Sincerely,

Iffa

Thank you very much, this is an outstanding answer!!

It will take some time for me to digest and study it.

best

s

Greetings,

Intel will no longer monitor this thread since this issue has been resolved. If you need any additional information from Intel, please submit a new question.

Sincerely,

Iffa
