
Please help me with the difficulties I am having converting a YOLOv4-tiny model to IR. (I downloaded the three files classes.txt, yolov4-tiny.cfg and yolov4-tiny.weights from the Internet some time ago, and the model works fine.)

I am following this page (https://docs.openvino.ai/latest/workbench_docs_Workbench_DG_Tutorial_Import_YOLO.html), and below I ask questions about the parts I have trouble understanding:

On the GitHub page for YOLOv4-tiny (https://github.com/openvinotoolkit/open_model_zoo/tree/master/models/public/yolo-v4-tiny-tf), I opened the model.yml file and found these Model Optimizer arguments:

```yaml
model_optimizer_args:
  - --input_shape=[1,416,416,3]
  - --input=image_input
  - --scale_values=image_input[255]
  - --reverse_input_channels
  - --input_model=$conv_dir/yolo-v4-tiny.pb
```

I noticed that the arguments differ from those for YOLOv4: the YOLOv4 model.yml has the argument --saved_model_dir=$conv_dir/yolo-v4.savedmodel, while the YOLOv4-tiny one has --input_model=$conv_dir/yolo-v4-tiny.pb. Even so, I cannot understand what the YOLOv4 page means when it says, "Here you can see that the required format for the YOLOv4 model is SavedModel". What does "SavedModel" mean? Does it mean the converted file name has to end with ".savedmodel"?
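For reference, "SavedModel" is the name of TensorFlow's serialization format, not a file extension. A SavedModel is a directory containing a saved_model.pb file (normally with a variables/ subfolder next to it), and Model Optimizer's --saved_model_dir points at that directory, whereas --input_model points at a single frozen .pb graph file. The minimal sketch below uses a hypothetical helper, looks_like_saved_model, to check whether a folder looks like a SavedModel directory; it only inspects file names and does not load the model.

```python
import sys
from pathlib import Path


def looks_like_saved_model(directory: str) -> bool:
    """Return True if `directory` contains the saved_model.pb file that a
    TensorFlow SavedModel directory is expected to have.

    Hypothetical helper: it only checks file names, it does not parse the graph.
    """
    path = Path(directory)
    return path.is_dir() and (path / "saved_model.pb").is_file()


if __name__ == "__main__":
    # Usage: python check_saved_model.py <dir> [<dir> ...]
    for arg in sys.argv[1:]:
        kind = "SavedModel directory" if looks_like_saved_model(arg) else "not a SavedModel directory"
        print(f"{arg}: {kind}")
```

A directory named yolo-v4.savedmodel is just a folder whose name happens to end in ".savedmodel"; what makes it a SavedModel is its contents.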

In the same GitHub folder for YOLOv4-tiny, I opened the pre-convert.py file and found the arguments passed to the converter:

```python
subprocess.run([sys.executable, '--',
    str(args.input_dir / 'keras-YOLOv3-model-set/tools/model_converter/convert.py'),
    str(args.input_dir / 'keras-YOLOv3-model-set/cfg/yolov4-tiny.cfg'),
    str(args.input_dir / 'yolov4-tiny.weights'),
    str(args.output_dir / 'yolo-v4-tiny.h5'),
    '--yolo4_reorder',
], check=True)
```

I noticed that the input_dir argument points to the "yolov4-tiny.weights" file and the output_dir path ends in "yolo-v4-tiny.h5". Shouldn't the output file extension be ".pb"? I ask because, for YOLOv4, the model.yml conv_dir path and the pre-convert.py output_dir path both use the same file name with the same extension, ".savedmodel".
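The pre-convert.py call above can be reproduced with a short standalone script. This is a sketch, assuming keras-YOLOv3-model-set and yolov4-tiny.weights sit in the same input folder as in pre-convert.py; build_convert_command is a hypothetical name. On the .h5 question: assuming convert.py saves the model with Keras model.save(), TensorFlow 2.x typically writes an HDF5 file when the path ends in .h5 and a SavedModel directory for a path without that extension, so the output path you pass decides the output format.

```python
import subprocess
import sys
from pathlib import Path
from typing import List


def build_convert_command(input_dir: Path, output_dir: Path) -> List[str]:
    """Build the same argument list that pre-convert.py passes to subprocess.run()."""
    repo = input_dir / "keras-YOLOv3-model-set"
    return [
        sys.executable, "--",
        str(repo / "tools/model_converter/convert.py"),
        str(repo / "cfg/yolov4-tiny.cfg"),
        str(input_dir / "yolov4-tiny.weights"),
        str(output_dir / "yolo-v4-tiny.h5"),  # .h5 suffix: Keras HDF5 output
        "--yolo4_reorder",
    ]


if __name__ == "__main__":
    cmd = build_convert_command(Path("."), Path("converted"))
    print(" ".join(cmd))
    if "--run" in sys.argv[1:]:
        # Requires the cloned keras-YOLOv3-model-set repo and the weights file.
        subprocess.run(cmd, check=True)
```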

I ran git clone to download the Darknet-to-TensorFlow converter with no issues. I also created and activated the virtual environment with no issues. I saw some warnings (or errors) the first time I installed ./keras-YOLOv3-model-set/requirements.txt, but when I ran the installation a second time, I saw no warnings or errors.

Following the YOLOv4 page again, where it describes how to organize the folders and files, I understood that I had to put the "yolov4-tiny.weights" file (in my case) in the root of the keras-YOLOv3-model-set folder and the "yolov4-tiny.cfg" file in the "cfg" subfolder. However, I could not understand why it says there has to be a file named "saved_model" in the root of the keras-YOLOv3-model-set folder. Where is this file? And in my case, what should I put instead of "saved_model"?
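Before running the converter, a small check like the one below can confirm that the folder layout described above is in place. It is a sketch: the expected file names are the ones from this post (yolov4-tiny.weights in the root of keras-YOLOv3-model-set, yolov4-tiny.cfg in its cfg subfolder), and the helper missing_files is hypothetical.

```python
from pathlib import Path
from typing import List

# Files expected by the layout described above, relative to the repo root.
EXPECTED_FILES = [
    "tools/model_converter/convert.py",
    "cfg/yolov4-tiny.cfg",
    "yolov4-tiny.weights",
]


def missing_files(repo_root: str) -> List[str]:
    """Return the expected files that are not present under repo_root."""
    root = Path(repo_root)
    return [name for name in EXPECTED_FILES if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_files("keras-YOLOv3-model-set")
    print("Layout OK" if not missing else f"Missing: {missing}")
```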

Finally, should the command to run the converter for my yolov4-tiny model be as follows?

python keras-YOLOv3-model-set/tools/model_converter/convert.py keras-YOLOv3-model-set/cfg/yolov4-tiny.cfg yolov4-tiny.weights yolo-v4-tiny.pb --yolo4_reorder

If the command above is wrong, could you please tell me how it should read?

Thank you in advance. I look forward to your reply.


Hello RGVGreatCoder,

The yolov4-tiny model was converted to SavedModel format. To convert it to OpenVINO IR format, pass the SavedModel directory to Model Optimizer with the --saved_model_dir parameter.

Modify your command as follows:

mo --saved_model_dir C:\openvino-models -o C:\openvino-models\IR --input_shape [1,608,608,3] --data_type=FP16 -n yolov4-tiny

```
[ SUCCESS ] Generated IR version 10 model.
[ SUCCESS ] XML file: C:\Users\yolo-v4-tiny\yolov4-tiny.xml
[ SUCCESS ] BIN file: C:\Users\yolo-v4-tiny\yolov4-tiny.bin
[ SUCCESS ] Total execution time: 26.04 seconds.
```
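When several variants of this conversion are needed (this thread later converts with and without --reverse_input_channels and with --scale_values), the argument list can be assembled in Python and passed to subprocess.run. A sketch only: build_mo_command is a hypothetical helper, and it uses only mo options that appear in this thread (--saved_model_dir, -o, --input_shape, --data_type, -n, --reverse_input_channels, --scale_values).

```python
import subprocess
import sys
from typing import List, Optional, Sequence


def build_mo_command(saved_model_dir: str, output_dir: str, name: str,
                     input_shape: Sequence[int], data_type: str = "FP16",
                     reverse_input_channels: bool = False,
                     scale: Optional[float] = None) -> List[str]:
    """Assemble an `mo` command line for a TensorFlow SavedModel directory."""
    shape = "[" + ",".join(str(d) for d in input_shape) + "]"
    cmd = ["mo", "--saved_model_dir", saved_model_dir, "-o", output_dir,
           "--input_shape", shape, f"--data_type={data_type}", "-n", name]
    if reverse_input_channels:
        cmd.append("--reverse_input_channels")
    if scale is not None:
        # Same scale for all three channels, e.g. --scale_values=[255,255,255]
        cmd.append(f"--scale_values=[{scale:g},{scale:g},{scale:g}]")
    return cmd


if __name__ == "__main__":
    cmd = build_mo_command(r"C:\openvino-models", r"C:\openvino-models\IR",
                           "yolov4-tiny", [1, 608, 608, 3])
    print(" ".join(cmd))
    if "--run" in sys.argv[1:]:
        subprocess.run(cmd, check=True)  # needs openvino-dev, which provides `mo`
```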

Sincerely,

Zulkifli


Hello RGVGreatCoder,

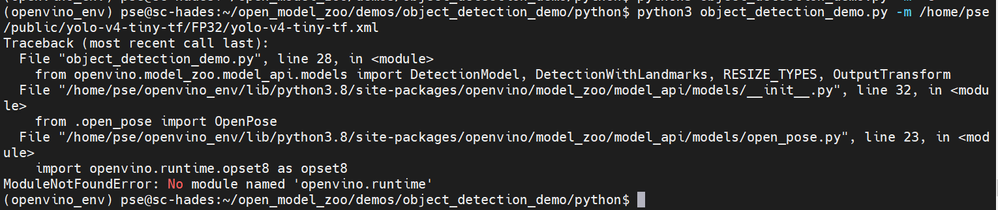

Sorry for the delay. I’m able to reproduce the error:

This error occurs because openvino-dev (version 2021.4.2) is not compatible with the cloned Open Model Zoo repository (version 2022.1).

To eliminate the error:

1. Remove the current OMZ folder

rmdir /s open_model_zoo

2. Clone the Open Model Zoo 2021.4.2 repository:

git clone -b 2021.4.2 https://github.com/openvinotoolkit/open_model_zoo.git

3. Run the Object Detection Python demo:

python object_detection_demo.py -m <model_directory>\yolov4-tiny.xml -i <input_directory>\1644965910719.JPEG -at yolov4 --loop
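Because the problem here is a mismatch between the pip package and the cloned repository branch, a small check can catch it before a demo is run. A sketch, assuming the Open Model Zoo branch is named after the OpenVINO release (as with 2021.4.2 above); installed_openvino_version and branch_matches are hypothetical helpers. The version is read from the installed package metadata with the standard-library importlib.metadata (Python 3.8+), which reports the same version as pip show.

```python
from importlib import metadata
from typing import Optional


def installed_openvino_version(dist: str = "openvino") -> Optional[str]:
    """Return the pip-installed version of `dist`, or None if it is not installed."""
    try:
        return metadata.version(dist)
    except metadata.PackageNotFoundError:
        return None


def branch_matches(version: str, branch: str) -> bool:
    """True if the package version starts with the branch's release numbers,
    e.g. "2022.1.0" matches branch "2022.1" but "2021.4.2" does not."""
    branch_parts = branch.split(".")
    return version.split(".")[:len(branch_parts)] == branch_parts


if __name__ == "__main__":
    version = installed_openvino_version("openvino-dev") or installed_openvino_version()
    print("OpenVINO version:", version)
    if version and not branch_matches(version, "2021.4.2"):
        print("Warning: the 2021.4.2 Open Model Zoo branch may not match this package.")
```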

Sincerely,

Zulkifli


I followed your steps above, and the object_detection_demo.py program finally opened and ran my JPG and MP4 media files.

Question: Why were the detected objects not drawn with bounding boxes, as shown in one of your replies?

Okay, so now it was time to test the object_detection_demo.py program on a Windows 10 miniPC that will only be used to run inference with IR models. I prepared the device as follows:

- Installed Python 3.8.10 with PATH from https://www.python.org/downloads/windows/

- Installed OpenVINO with PIP: pip install openvino

- Upgraded pip: python -m pip install --upgrade pip

- Installed OpenCV: pip install opencv-python

- Copied the open_model_zoo folder from my development PC (the new clone: git clone -b 2021.4.2 https://github.com/openvinotoolkit/open_model_zoo.git) to this miniPC.

- Tried to configure the Python Model API installation at this step, but it failed as follows:

```
>cd open_model_zoo\demos\common\python
>python setup.py bdist_wheel
python: can't open file 'setup.py': [Errno 2] No such file or directory
```

I found three setup.py files in the open_model_zoo folder, but none of them worked with the "bdist_wheel" command. This is what was displayed:

```
>python setup.py bdist_wheel
usage: setup.py [global_opts] cmd1 [cmd1_opts] [cmd2 [cmd2_opts] ...]
   or: setup.py --help [cmd1 cmd2 ...]
   or: setup.py --help-commands
   or: setup.py cmd --help
```

I tried to run the object_detection_demo.py program on the miniPC anyway, and it also failed:

```
>cd open_model_zoo\demos\common\python
>python object_detection_demo.py -at yolov4 -d GPU --loop -m C:\Users\manue\source\repos\TrafficGateTracker\TrafficGateTracker.py-camera\openvino-models\chairs\yolov4-tiny\yolov4-tiny-416.xml -i C:\Users\manue\source\repos\TrafficGateTracker\TrafficGateTracker.py-camera\videos\chairs1.mp4 --labels C:\Users\manue\source\repos\TrafficGateTracker\TrafficGateTracker.py-camera\yolo-models\chairs\classes.txt
Traceback (most recent call last):
  File "object_detection_demo.py", line 33, in <module>
    import models
  File "C:\Users\manue\Documents\Programs\open_model_zoo\demos\common\python\models\__init__.py", line 22, in <module>
    from .hpe_associative_embedding import HpeAssociativeEmbedding
  File "C:\Users\manue\Documents\Programs\open_model_zoo\demos\common\python\models\hpe_associative_embedding.py", line 18, in <module>
    from scipy.optimize import linear_sum_assignment
ModuleNotFoundError: No module named 'scipy'
```

This tells me I need to configure the Model API installation, which failed at the sixth step above.

Please let me know how to fix this issue.

Thank you again. I look forward to your reply.


Hello RGVGreatCoder,

As you can see in my previous response, I compared your original yolov4-tiny model (the first model you shared), your latest yolov4-tiny model, and the Intel OMZ yolo-v4-tiny-tf model. Of these three models, the latest yolov4-tiny model did not detect the object.

Have you tested whether your native yolov4-tiny model works?

To install openvino-dev 2021.4.2 from pip, please follow the instructions here.

To eliminate the ModuleNotFoundError: No module named 'scipy', run this command:

pip install scipy
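On a deployment machine it can help to check all of a demo's third-party imports at once instead of discovering them one traceback at a time. A minimal sketch using importlib.util.find_spec; the module list is an assumption based on the packages mentioned in this thread (OpenVINO, OpenCV as cv2, NumPy, SciPy), not an official requirements list.

```python
import importlib.util
from typing import Iterable, List

# Assumed runtime dependencies of the Python demos discussed in this thread.
REQUIRED_MODULES = ["openvino", "cv2", "numpy", "scipy"]


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules (install the matching pip packages):", missing)
    else:
        print("All required modules found.")
```

Note that an import name and its pip package name can differ; for example, cv2 comes from opencv-python.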

Sincerely,

Zulkifli


I was able to run the object_detection_demo.py program successfully on my Windows 10 miniPC, which is not meant for development or for creating or optimizing IR models, but only for running inference with IR models. I prepared it as follows:

- Installed CMake (click Windows x64 Installer latest version): https://cmake.org/download/

- Installed Python 3.8.10 with PATH from https://www.python.org/downloads/windows/

- Installed OpenVINO (not the development version) with PIP: pip install openvino==2021.4.2

- Upgraded pip: python -m pip install --upgrade pip

- Installed OpenCV: pip install opencv-python

- Copied the open_model_zoo folder from my development PC to this miniPC (the folder created by the new clone: git clone -b 2021.4.2 https://github.com/openvinotoolkit/open_model_zoo.git). Note: I did this to avoid installing git on the deployment device.

- Installed SciPy: pip install scipy

I was surprised that I did not need to "Configure the model API installation" as described in one of your previous instructions. That was a relief.

I then ran the object_detection_demo.py as follows:

python open_model_zoo/demos/object_detection_demo/python/object_detection_demo.py -at yolov4 -d GPU --loop -m <path_to_file>\yolov4-tiny-416.xml -i <path_to_file>\chairs2.mp4 --labels <path_to_file>\classes.txt

However, the detected objects are not being boxed. And yes, the original yolov4-tiny-custom.weights/yolov4-tiny-custom.cfg model does work and does draw boxes around the detected objects when I run it from another Python program that loads the model directly with OpenCV. However, that program processes only 4 FPS, which is why I am making all this effort to create an IR model from the yolov4-tiny model (the yolov4-tiny.weights and yolov4-tiny.cfg files) and run it with OpenVINO.

Here again are the steps I performed to create the IR model from the yolov4-tiny model:

```
# 1) Pre-convert to create the saved_model.pb file
python keras-YOLOv3-model-set/tools/model_converter/convert.py <path_to_file>\yolov4-tiny-custom.cfg <path_to_file>\yolov4-tiny-custom_last.weights <path_to_store_the_save_model.pb_file>

# I created an IR model with reverse input channel and another IR model without it:

# 2.a) Run model optimizer with reverse input channels
mo --saved_model_dir <path_to_the_save_model.pb_file> -o <path_to_create_IR_model> --input_shape [1,416,416,3] --reverse_input_channels --data_type=FP16 -n yolov4-tiny-YesReverse

# 2.b) Run model optimizer without reverse input channels
mo --saved_model_dir <path_to_the_save_model.pb_file> -o <path_to_create_IR_model> --input_shape [1,416,416,3] --data_type=FP16 -n yolov4-tiny-NoReverse

# Ran the object_detection_demo.py program trying IR model with reverse channel input and detected objects were not boxed
python open_model_zoo/demos/object_detection_demo/python/object_detection_demo.py -at yolov4 -d GPU --loop -m <path_to_file>\yolov4-tiny-YesReverse.xml -i <path_to_file>\chairs2.mp4 --labels <path_to_file>\classes.txt

# Ran the object_detection_demo.py program trying IR model without reverse channel input and detected objects were also not boxed
python open_model_zoo/demos/object_detection_demo/python/object_detection_demo.py -at yolov4 -d GPU --loop -m <path_to_file>\yolov4-tiny-NoReverse.xml -i <path_to_file>\chairs2.mp4 --labels <path_to_file>\classes.txt
```
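What --reverse_input_channels changes: it makes the converted model swap the order of the three color channels of its input (RGB to BGR or vice versa), so a model trained on RGB images can be fed frames in BGR order, which is the order OpenCV uses when reading images and video. The toy sketch below shows that swap on a small pixel array in plain Python; it illustrates the idea only and is not Model Optimizer's implementation.

```python
from typing import List

Pixel = List[int]
Image = List[List[Pixel]]  # height x width x 3, channels last


def reverse_channels(image: Image) -> Image:
    """Swap channel order (BGR <-> RGB) for every pixel of an HxWx3 image."""
    return [[pixel[::-1] for pixel in row] for row in image]


if __name__ == "__main__":
    bgr = [[[255, 0, 0], [0, 0, 255]]]  # 1x2 image in BGR order: a blue pixel, a red pixel
    print(reverse_channels(bgr))         # [[[0, 0, 255], [255, 0, 0]]] (same pixels, RGB order)
```

Applying the swap twice returns the original image, which is why converting with the flag and also swapping the frames yourself would cancel out.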

Questions:

- What am I missing that is causing the program not to box the detected objects?

- The miniPC ran the object_detection_demo.py program successfully, but only at an average of 7.7 FPS. How can I increase the frame rate?

- Unrelated to this particular case: how can I check the OpenVINO version when it was installed through pip?
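To compare frame rates fairly (4 FPS with OpenCV vs 7.7 FPS with the OpenVINO demo in this post), it helps to measure both programs the same way. A minimal sketch of a sliding-window FPS counter based on time.perf_counter; FpsMeter is a hypothetical class, and the demo's own reported FPS may be computed differently.

```python
import time
from collections import deque
from typing import Deque, Optional


class FpsMeter:
    """Frames per second over the last `window` frames."""

    def __init__(self, window: int = 30) -> None:
        self.timestamps: Deque[float] = deque(maxlen=window)

    def tick(self, now: Optional[float] = None) -> float:
        """Record one processed frame and return the current FPS estimate."""
        self.timestamps.append(time.perf_counter() if now is None else now)
        if len(self.timestamps) < 2:
            return 0.0
        elapsed = self.timestamps[-1] - self.timestamps[0]
        return (len(self.timestamps) - 1) / elapsed if elapsed > 0 else 0.0


if __name__ == "__main__":
    meter = FpsMeter()
    fps = 0.0
    for _ in range(10):
        time.sleep(0.01)  # stand-in for reading a frame and running inference
        fps = meter.tick()
    print(f"{fps:.1f} FPS")
```

Call tick() once per processed frame in each program's main loop so that both numbers cover the same work (decode, inference, and drawing).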

Thank you for your patience, and I look forward to your reply.


Hello RGVGreatCoder,

1. To get the bounding box, please use this MO command to convert the model:

mo --saved_model_dir <path_to_the_save_model.pb_file> -o <path_to_create_IR_model> --input_shape [1,416,416,3] --reverse_input_channels --data_type=FP16 -n yolov4-tiny-YesReverse --scale_values=[255,255,255]

2. You can use the Post-Training Optimization Tool to accelerate the inference of your model. You can check the performance comparison in Intel Distribution of OpenVINO toolkit Benchmark Results.

3. To check the installed openvino version, you can run the following command. Please ensure that you run the command in the virtual environment.

pip show openvino
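Why the input scale affects whether boxes appear: an object detector only draws a box when a detection's confidence exceeds a threshold, so if the input values are in the wrong range the scores can stay low and nothing is drawn. The sketch below shows that final filtering step together with the usual YOLO convention of boxes given as normalized center x, center y, width and height. It is a simplified illustration with a hypothetical Detection type and a 0.5 threshold chosen for the example, not the demo's actual post-processing (which also decodes anchors and applies non-maximum suppression).

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    cx: float     # box center x, normalized to [0, 1]
    cy: float     # box center y, normalized to [0, 1]
    w: float      # box width, normalized
    h: float      # box height, normalized
    score: float  # confidence
    label: int    # class id


def boxes_to_draw(dets: List[Detection], frame_w: int, frame_h: int,
                  threshold: float = 0.5) -> List[Tuple[int, int, int, int, int]]:
    """Keep detections at or above `threshold` and convert them to pixel
    corners (x_min, y_min, x_max, y_max, label), clipped to the frame."""
    boxes = []
    for d in dets:
        if d.score < threshold:
            continue  # below threshold: no box is drawn for this detection
        x_min = max(0, int((d.cx - d.w / 2) * frame_w))
        y_min = max(0, int((d.cy - d.h / 2) * frame_h))
        x_max = min(frame_w - 1, int((d.cx + d.w / 2) * frame_w))
        y_max = min(frame_h - 1, int((d.cy + d.h / 2) * frame_h))
        boxes.append((x_min, y_min, x_max, y_max, d.label))
    return boxes
```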

Sincerely,

Zulkifli


FANTASTIC! EVERYTHING WORKED! I AM HAPPY!

What made the difference creating the model that could show the boxes on the detected objects was the attribute "--scale_values=[255,255,255]" on the "mo" script line.

I read about the "scale values" on your site, but I am not sure I understand exactly what they are. Could you explain them in simpler terms?

I could also use the USB camera connected to my miniPC by setting the input parameter "-i 0" as follows:

```
# 0 is the first camera id connected to the device.
python open_model_zoo/demos/object_detection_demo/python/object_detection_demo.py -at yolov4 -d GPU --loop -m <path_to_file>\yolov4-tiny-YesReverse.xml -i 0 --labels <path_to_file>\classes.txt
```
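The -i 0 option works because, in effect, a purely numeric input is treated as a camera index rather than as a file path. The sketch below shows that decision using OpenCV's VideoCapture, which accepts either an integer device index or a file path; parse_input and open_input are hypothetical helpers, and the demo's own input handling may differ in its details.

```python
from typing import Union


def parse_input(source: str) -> Union[int, str]:
    """Return a camera index for purely numeric input (e.g. "0"), else the path."""
    return int(source) if source.isdigit() else source


def open_input(source: str):
    """Open a camera or a video file with OpenCV (requires opencv-python)."""
    import cv2  # imported here so parse_input() works without OpenCV installed

    capture = cv2.VideoCapture(parse_input(source))
    if not capture.isOpened():
        raise RuntimeError(f"Cannot open input: {source}")
    return capture
```

For example, open_input("0") opens the first camera, while open_input(r"C:\videos\chairs2.mp4") opens a video file.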

I really appreciate your patience, your time and effort on getting back to me with answers to all of my questions on this particular case!

If you can answer my question above, I would appreciate it. After that, you may consider this case resolved and closed.

THANK YOU A TON !


Hello RGVGreatCoder,

I’m glad to hear that all the issues have been resolved.

Adding --scale_values makes the converted model divide each input value by the given factor (here 255), so the pixel data reaches the model in the range it expects, is processed correctly by the target device, and does not overflow. You can refer to “When to Specify Mean and Scale Values” for more information.
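In plain arithmetic, --mean_values and --scale_values add the per-channel operation (value - mean) / scale to the start of the model. With a scale of 255 for all three channels and no mean, 0-255 pixel values become 0.0-1.0, the range YOLO models trained with Darknet normally expect. A plain-Python sketch of that arithmetic; the function name is illustrative, and Model Optimizer inserts this as operations in the model graph, not as Python code.

```python
from typing import List, Optional, Sequence


def preprocess_pixel(pixel: Sequence[float],
                     scale: Sequence[float] = (255.0, 255.0, 255.0),
                     mean: Optional[Sequence[float]] = None) -> List[float]:
    """Apply (value - mean) / scale per channel, the operation that
    --mean_values / --scale_values embed in the converted model."""
    mean = mean or (0.0,) * len(pixel)
    return [(v - m) / s for v, m, s in zip(pixel, mean, scale)]


if __name__ == "__main__":
    print(preprocess_pixel([0, 128, 255]))  # 0..255 input mapped to about 0.0..1.0
```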

Thank you for your question. This thread will no longer be monitored since all issues have been resolved. If you need any additional information from Intel, please submit a new question.

Sincerely,

Zulkifli
