Hi all,

I'm trying to reproduce the results (printed output) of the code below, which was written for an older OpenVINO version:

from openvino.inference_engine import IECore

ie = IECore()

# Load the IR model (topology + weights)
net = ie.read_network(model="irfiles/resnet-50.xml", weights="irfiles/resnet-50.bin")

exec_net = ie.load_network(network=net, device_name="HETERO:CPU,GPU")

# Ask the HETERO plugin which device each layer is assigned to
layers_map = ie.query_network(network=net, device_name="HETERO:CPU,GPU")

for layer in layers_map:
    print("{}: {}".format(layer, layers_map[layer]))

In this example, the results were:

conv1: GPU

conv1_relu: GPU

data: CPU

fc1000: GPU

In the new OpenVINO release, which APIs and/or parameters can be used to get the same results?

Thanks,

Robson

Hi Robson,

Thanks for reaching out.

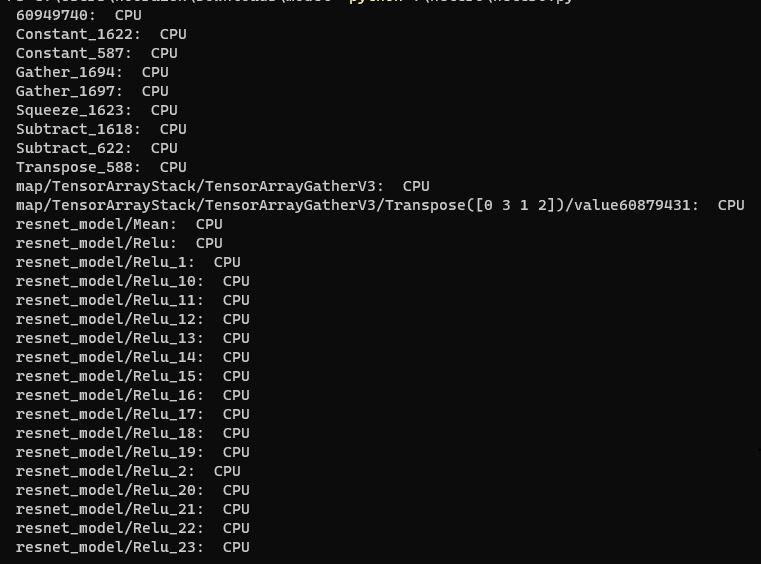

I am able to generate the same result using your code with the latest OpenVINO version 2022.3. Below is the output when inference with resnet-50-tf model.
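For anyone moving to API 2.0 (the `openvino.runtime` namespace available in the 2022 releases alongside the legacy API), a rough equivalent of the snippet in the question might look like the sketch below. It assumes `Core.read_model` and `Core.query_model` behave as documented for 2022.x; the helper names `query_affinities`, `devices_summary`, and `print_affinities` are made up for illustration, and the model path is the one from the question.

```python
from collections import Counter


def query_affinities(model_xml, device="HETERO:CPU,GPU"):
    """Return {operation_name: device_name} as reported by the plugin."""
    # Imported lazily so devices_summary() can be used without OpenVINO installed.
    from openvino.runtime import Core

    core = Core()
    # When no weights path is given, the .bin file next to the .xml is used.
    model = core.read_model(model_xml)
    return core.query_model(model, device)


def devices_summary(layers_map):
    """Count how many operations were assigned to each device."""
    return dict(Counter(layers_map.values()))


def print_affinities(model_xml, device="HETERO:CPU,GPU"):
    """Print one 'operation: device' line per operation, then the totals."""
    layers_map = query_affinities(model_xml, device)
    for layer, assigned in layers_map.items():
        print("{}: {}".format(layer, assigned))
    print(devices_summary(layers_map))
```

Calling `print_affinities("irfiles/resnet-50.xml")` should print the per-operation mapping in the same `name: device` form as the original script, followed by a per-device count.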

Regards,

Aznie

Hi Aznie,

Thanks a lot for the feedback.

I'll try and check the results.

BR,

Robson

Hi Aznie,

I can confirm that the code using the heterogeneous approach worked.

However, I have a question: comparing the two device strings HETERO:CPU,GPU and HETERO:GPU,CPU, the results suggest that priority follows the order of the devices listed after HETERO:, starting with the first.

For example, with HETERO:CPU,GPU all the layers in the model were mapped to the CPU, and when I put GPU first, all layers were mapped to the GPU.

Is that correct?

I was expecting the Inference Engine to automatically apply some kind of default settings, selecting the best device for each layer to reach the best possible performance with the available CPU and GPU, but this was not the case. I checked two different AI models and the results were the same: all layers were mapped to the first device in the list after HETERO:. Is this the expected behavior? I'm using an Intel CPU with an integrated GPU; could that be why the same device was assigned to every layer?

Thanks.

BR,

Robson

Hi Robson,

The purposes of executing networks in heterogeneous mode are as follows:

- To use the accelerator's power by calculating the heaviest parts of the network on the accelerator and executing unsupported layers on fallback devices such as the CPU.

- To use all available hardware more efficiently during a single inference.

The Heterogeneous plugin enables automatic splitting of inference between several Intel devices when a device doesn't support certain layers, with the CPU as the fallback. There are manual and automatic modes for assigning affinities. In your case, automatic mode assigns each operation to a device according to the priority order you set. With HETERO:CPU,GPU, the priority is on the CPU, and all operations execute on the CPU because it supports every layer in the model.
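The priority rule described above can be illustrated with a small toy model in plain Python. This is only a simplified sketch of the idea (each operation goes to the first listed device that supports it), not the plugin's actual implementation; the function name `assign_affinities`, the operation types, and the support sets are all hypothetical.

```python
def assign_affinities(ops, priority, supported):
    """Assign each op to the first device in `priority` that supports its type.

    ops:       list of (op_name, op_type) pairs
    priority:  device names in priority order, e.g. ["CPU", "GPU"]
    supported: {device: set of op types that device can run}
    """
    mapping = {}
    for name, op_type in ops:
        for device in priority:
            if op_type in supported[device]:
                mapping[name] = device
                break
        else:
            # No device in the list can run this op.
            raise ValueError("no device supports {} ({})".format(name, op_type))
    return mapping


# Hypothetical op list and support sets, for illustration only.
ops = [
    ("data", "Parameter"),
    ("conv1", "Convolution"),
    ("conv1_relu", "ReLU"),
    ("fc1000", "MatMul"),
]
full = {"Parameter", "Convolution", "ReLU", "MatMul"}

# Both devices support everything: the first device in the list takes every op.
print(assign_affinities(ops, ["CPU", "GPU"], {"CPU": full, "GPU": full}))
print(assign_affinities(ops, ["GPU", "CPU"], {"CPU": full, "GPU": full}))

# The first device lacks one op type: only that op falls back to the next device.
partial = full - {"MatMul"}
print(assign_affinities(ops, ["GPU", "CPU"], {"CPU": full, "GPU": partial}))
```

Under this model, a split across devices only appears when the first device in the list cannot run some operation, which matches the observation that every layer lands on whichever device is listed first when both devices support the whole model.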

Regards,

Aznie

Hi Robson,

This thread will no longer be monitored since this issue has been resolved. If you need any additional information from Intel, please submit a new question.

Regards,

Aznie
