Hello Katsuya-san,

It looks like a dependency is not being resolved. What is the output of the following command?

ldd -r libtensorflow_call_layer.so

Thanks,

Nikos

Hi Katsuya-san,

> From this result, can I see what modules are missing?

Yes, I believe so; it seems a mismatch between OpenVINO and TensorFlow is causing this issue.

Ideally, when LD_LIBRARY_PATH is set properly, `ldd -r` should not report any undefined symbols.
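A minimal Python sketch for running this check automatically, assuming a Linux/glibc system where `ldd -r` is available. It collects both `=> not found` libraries and `undefined symbol:` lines from the output. The function names are hypothetical, and the regular expressions assume the usual glibc `ldd` output format.

```python
import re
import subprocess
from typing import List, Tuple

# Matches lines such as "undefined symbol: _ZN3foo3barEv\t(./libx.so)".
_UNDEFINED_RE = re.compile(r"^\s*undefined symbol:\s*(\S+)")
# Matches lines such as "\tlibfoo.so.1 => not found".
_NOT_FOUND_RE = re.compile(r"^\s*(\S+)\s*=>\s*not found")


def parse_ldd_output(text: str) -> Tuple[List[str], List[str]]:
    """Return (missing_libraries, undefined_symbols) found in `ldd -r` output."""
    missing, undefined = [], []
    for line in text.splitlines():
        m = _NOT_FOUND_RE.match(line)
        if m:
            missing.append(m.group(1))
            continue
        m = _UNDEFINED_RE.match(line)
        if m:
            undefined.append(m.group(1))
    return missing, undefined


def check_library(path: str) -> Tuple[List[str], List[str]]:
    """Run `ldd -r` on a shared library (Linux only) and parse the result."""
    # ldd may print the undefined-symbol diagnostics on stderr, so both
    # streams are scanned.
    proc = subprocess.run(["ldd", "-r", path], capture_output=True, text=True)
    return parse_ldd_output(proc.stdout + proc.stderr)
```

For example, `missing, undefined = check_library("libtensorflow_call_layer.so")`: two empty lists suggest that every dependency resolves with the LD_LIBRARY_PATH of the current environment.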

I am also getting the same issue and am trying to find a solution. I will try other TF versions.

Question for the Intel OpenVINO team, please:

RE: https://software.intel.com/en-us/articles/OpenVINO-ModelOptimizer#offloading-computations-tensorflow

Is this documentation still valid for the OpenVINO SDK R5 (computer_vision_sdk_2018.5.445)? It says:

> Clone the TensorFlow* r1.4 Git repository.

Or do we need to check out a different version of TensorFlow?

Thank you!

Question for the Intel OpenVINO team, please:

I tried every version from TensorFlow v1.4.1 to v1.12.0.

Although the installation procedure differs somewhat between versions, the same error appears in all of them.

- Inspection result (r1.4 - r1.12)

Thank you, Katsuya-san, good find!

I will read the new docs you found and try the new technique.

(BTW, I also tried other versions of TensorFlow, but no luck.)

Hi @Hyodo, Katsuya,

Any progress on this issue? I have encountered the same issue as you.

I'm waiting for a solution as well. I'm hoping Intel can build and verify a working tiny-YOLOv3 model. I need to decide whether to wait for a solution or look for an alternative.

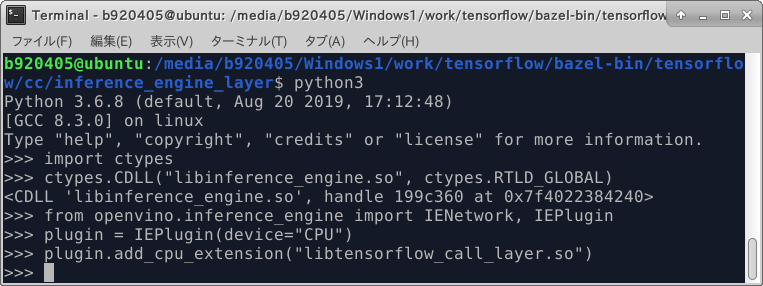

The undefined symbols belong to libinference_engine. Here is a workaround:

import ctypes

...

# Load libinference_engine.so with RTLD_GLOBAL so its symbols are visible to libraries loaded afterwards
ctypes.CDLL("libinference_engine.so", ctypes.RTLD_GLOBAL)

plugin.add_cpu_extension(plugin_dirs + "libtensorflow_call_layer.so")
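A minimal sketch of the same workaround wrapped in a helper, assuming the plugin object exposes `add_cpu_extension(path)` as in the snippet above. The function name `add_extension_with_preload` and its `preload` and `loader` parameters are hypothetical; `loader` exists only so the call order can be tested without the real libraries. What matters is the order: `libinference_engine.so` is loaded with `ctypes.RTLD_GLOBAL` before the extension is registered, so the dynamic linker can resolve the extension's undefined symbols against it.

```python
import ctypes
from typing import Callable, Iterable, List


def add_extension_with_preload(
    plugin,
    extension_path: str,
    preload: Iterable[str] = ("libinference_engine.so",),
    loader: Callable[..., object] = ctypes.CDLL,
) -> List[object]:
    """Load `preload` libraries with RTLD_GLOBAL, then register the CPU extension.

    `plugin` is assumed to provide add_cpu_extension(path). `loader` defaults
    to ctypes.CDLL and is injectable only to make the ordering testable.
    """
    handles = []
    for name in preload:
        # RTLD_GLOBAL makes this library's symbols available when resolving
        # undefined symbols of libraries that are dlopen()ed afterwards.
        handles.append(loader(name, ctypes.RTLD_GLOBAL))
    # Register the extension only after its dependencies are globally visible.
    plugin.add_cpu_extension(extension_path)
    # Return the handles so the caller can inspect them if needed.
    return handles
```

With the objects from the snippet above, this would be called as `add_extension_with_preload(plugin, plugin_dirs + "libtensorflow_call_layer.so")`. Since `ctypes.CDLL` relies on the normal dynamic-linker search, LD_LIBRARY_PATH still has to include the directory containing `libinference_engine.so` when the process starts, or a full path has to be passed in `preload`.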

@Shaoqiang C. (Intel)

Thank you very much! The error no longer occurs. Let's continue verifying that it works.
