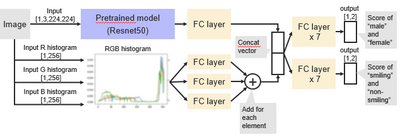

I'm trying to write an inference script for a model with the following network.

This model receives an image (NCHW) plus red, green, and blue histograms (NC each).

To create a cv::Mat holding the histogram data, I wrote the following code.

cv::Mat hist_data(1, 1, CV_32FC(256), (void*)hist);

Here, hist is a float pointer to the histogram data.
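For context, here is a minimal sketch of how such a `hist` buffer might be filled using only the standard library. The function name `channel_histogram`, the flat `channel` buffer, and the choice of unnormalized counts are assumptions for illustration; the thread does not show how the histograms are actually computed. The point is that the result is 256 contiguous floats, which is what a {1, 256} FP32 input would read.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One bin per possible 8-bit intensity value.
constexpr std::size_t kBins = 256;

// Builds an unnormalized 256-bin histogram for one colour channel.
// `channel` is a hypothetical flat buffer holding that channel's 8-bit values.
std::array<float, kBins> channel_histogram(const std::vector<std::uint8_t>& channel) {
    std::array<float, kBins> hist{};  // value-initialised: every bin starts at zero
    for (std::uint8_t v : channel) {
        hist[v] += 1.0f;
    }
    return hist;
}

// Sanity check: the bin counts must add up to the number of input values.
// Exact in float as long as the count stays below 2^24.
void check_histogram(const std::array<float, kBins>& hist, std::size_t n) {
    float total = 0.0f;
    for (float bin : hist) {
        total += bin;
    }
    assert(total == static_cast<float>(n));
}
```

A pointer to the result (`hist.data()`) could then play the role of `hist` in the code above, provided the array outlives every object that wraps it.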

I then wrote the following code to create a TensorDesc object before feeding hist_data into the model's NC-layout input layer.

InferenceEngine::TensorDesc tDesc(InferenceEngine::Precision::FP32, {1, 256}, InferenceEngine::Layout::NHWC);

However, the following error occurred.

C:\j\workspace\private-ci\ie\build-windows-vs2019\b\repos\openvino\inference-engine\src\inference_engine\ie_layouts.cpp:279 Dims and format are inconsistent.

I passed InferenceEngine::Layout::NHWC when creating the TensorDesc object, because the layout of hist_data is NHWC.

I passed {1, 256} as the dimensions, because the model receives hist_data in NC layout.
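One plausible reading of the error is that the number of dimensions must match the rank the layout name implies: NHWC names four axes, while {1, 256} supplies only two. The sketch below illustrates that rule with a hypothetical `Layout` enum and helper functions. It is a stand-in written for this thread, not the actual check in ie_layouts.cpp.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a few InferenceEngine::Layout values.
enum class Layout { C, NC, CHW, NCHW, NHWC };

// Rank (number of dimensions) that each layout name describes.
std::size_t expected_rank(Layout layout) {
    switch (layout) {
        case Layout::C:    return 1;
        case Layout::NC:   return 2;
        case Layout::CHW:  return 3;
        case Layout::NCHW: return 4;
        case Layout::NHWC: return 4;
    }
    return 0;  // not reached for the values listed above
}

// True when the number of dims equals the rank implied by the layout.
bool dims_match_layout(const std::vector<std::size_t>& dims, Layout layout) {
    return dims.size() == expected_rank(layout);
}

// Example: the dims from the question checked against both layouts.
void example() {
    const std::vector<std::size_t> hist_dims = {1, 256};
    assert(!dims_match_layout(hist_dims, Layout::NHWC));  // rank 2 vs. rank 4
    assert(dims_match_layout(hist_dims, Layout::NC));     // rank 2 vs. rank 2
}
```

Under that reading, pairing {1, 256} with InferenceEngine::Layout::NC would be the consistent combination, regardless of how the cv::Mat wrapping the same buffer is shaped.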

・How should I create the hist_data cv::Mat?

・How should I create the TensorDesc object correctly?

Please help...

Hi 2Yu,

Thanks for reaching out to us.

We are working on this and will update you soon. Could you share your OpenVINO version and the command you used to convert the model?

Meanwhile, check out the documentation for the Tensor Descriptor class, the standard layout format, and ColorFormat for more information. We also recommend using the benchmark_app tool to test inference performance.

Regards,

Aznie

Hi, @Aznie_Intel

Thank you for your reply.

I sent you an e-mail that both answers your question and shares our model.

I would appreciate it if you could check it.

Regards,

2Yu

Hi 2Yu,

We have received your email dated 13/3/2022. Thank you for sharing your models. We are investigating this and will update you soon.

Meanwhile, I've tested your ONNX model with the benchmark_app tool and converted it into Intermediate Representation (IR) format, and no issues arose. I am also able to run your test_inference.py and get the inference result using the PyTorch model. However, I am unable to get an inference result using the IR file. Could you share the workaround or command you use to get the IR's inference result, so that we can compare the results on our end and understand the difference?

Regards,

Aznie

Hi, @Aznie_Intel

Sorry for the late reply.

I will send the scripts and environment for running the model with OpenVINO soon.

I would appreciate it if you could check them.

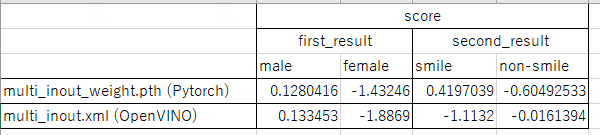

In addition, the results of running the A and B models are shown below.

(Results may vary slightly depending on the processor used)

Regards,

2Yu

Hi 2Yu,

Based on this documentation, we might need to disable the NHWC-to-NCHW conversion by passing the --disable_nhwc_to_nchw argument when optimizing the ONNX model. Here is the command used to successfully convert the model into IR files: python mo.py --input_model multi_inout.onnx --data_type FP32 --disable_nhwc_to_nchw --output_dir

Apart from that, I have received your email with the script for IR inference. We are still working on it and will get back to you soon.

Regards,

Aznie

Hi, @Aznie_Intel

I added the --disable_nhwc_to_nchw argument when optimizing the ONNX model.

However, the inference result didn't change.

Were you able to run the scripts I sent, and have you found any solution?

Regards,

2Yu

Hi 2Yu,

After a detailed investigation, it seems that the histogram values produced by test_inference.py and main.cpp do not match, and because of this, the error occurs when the NHWC layout is used.

We would advise you to check the values of the histogram prior to creating the inference blob.
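As a rough illustration of such a check, the sketch below compares two histograms bin by bin, for example one dumped from the Python script and one computed in the C++ application. The helper names `max_abs_diff` and `histograms_match` and the tolerance parameter are assumptions made for this example. They are not part of OpenVINO or of the scripts discussed here.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Largest absolute per-bin difference between two histograms of equal length.
float max_abs_diff(const std::vector<float>& a, const std::vector<float>& b) {
    assert(a.size() == b.size());
    float worst = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        worst = std::max(worst, std::fabs(a[i] - b[i]));
    }
    return worst;
}

// True when both histograms have the same number of bins and every bin
// agrees within `tolerance` (a hypothetical threshold chosen by the caller).
bool histograms_match(const std::vector<float>& a,
                      const std::vector<float>& b,
                      float tolerance) {
    return a.size() == b.size() && max_abs_diff(a, b) <= tolerance;
}
```

If the two sides disagree beyond the chosen tolerance, the mismatch is in pre-processing, before any blob or layout comes into play.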

You can also refer to the Hello Classification sample code for ideas on pre-processing the data.

If your application allows, we would also suggest using the "ANY" layout instead of a specific NC layout, or specifying NC instead of NCHW for the histogram inputs.

Hope this information helps.

Regards,

Aznie

Hi, @Aznie_Intel

I have also done some additional research.

As a result, I found that the ONNX model conversion significantly changed the inference results.

So it seems there is no problem in OpenVINO.

I am currently asking the PyTorch and ONNX teams about this.

https://github.com/pytorch/pytorch/issues/74732

I really appreciate your kind attention.

Regards,

2Yu

Hi 2Yu,

Thank you for your question.

This thread will no longer be monitored since we have provided the information. If you need any additional information from Intel, please submit a new question.

Regards,

Aznie
