Hi,

I am trying to run the OpenVINO Object Detection Python demo on my dual-socket Intel Xeon Gold 6230 server. The demo runs without errors, but the performance is very poor. I have the impression that the application runs on a single core of only one of the two Xeon sockets.

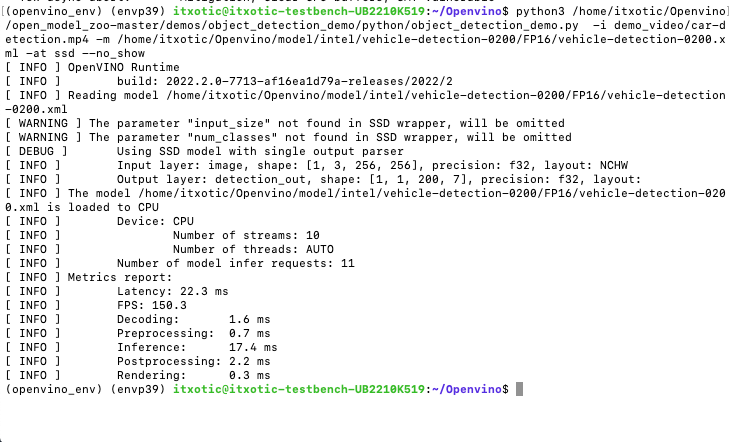

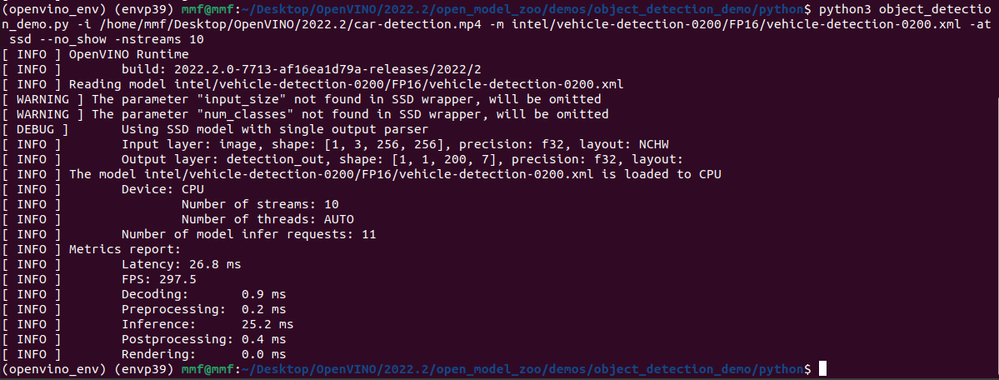

Here is the command I entered:

python3 /home/itxotic/Openvino/open_model_zoo-master/demos/object_detection_demo/python/object_detection_demo.py -i demo_video/car-detection.mp4 -m /home/itxotic/Openvino/model/intel/vehicle-detection-0200/FP16/vehicle-detection-0200.xml -at ssd --no_show

Here is the result I get after entering the command above:

My server is running Ubuntu 22.10 Kernel 5.19.

So my question is: is there a specific option I need to pass so that OpenVINO takes advantage of both CPU sockets? I am expecting my server to reach something like 1000+ FPS for 10 streams. I would appreciate any help or pointers you can offer. Thanks.

P.S.: I also tried the option "-d CPU", with the same result.

Hi Aqil-ITXOTIC,

Thank you for reaching out to us.

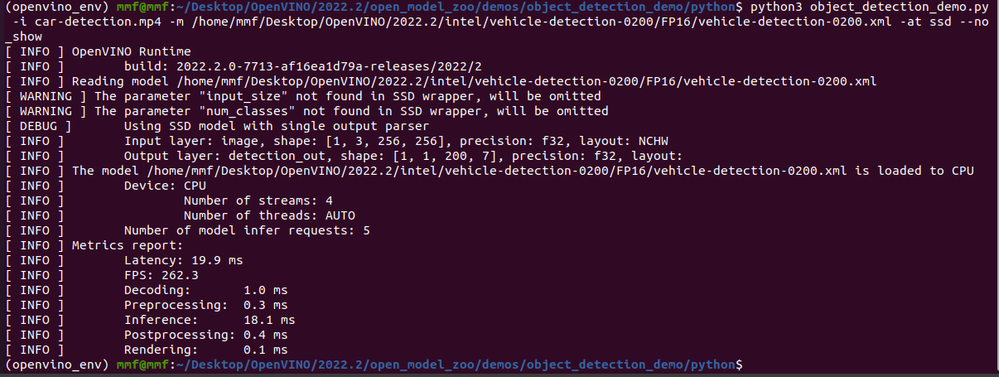

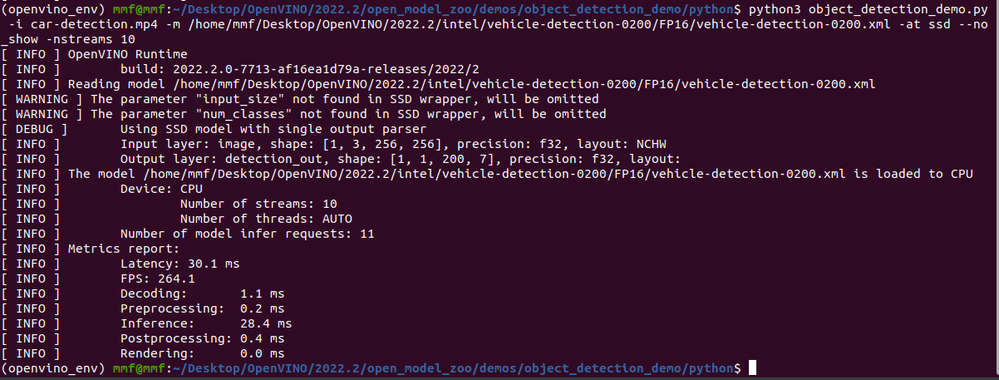

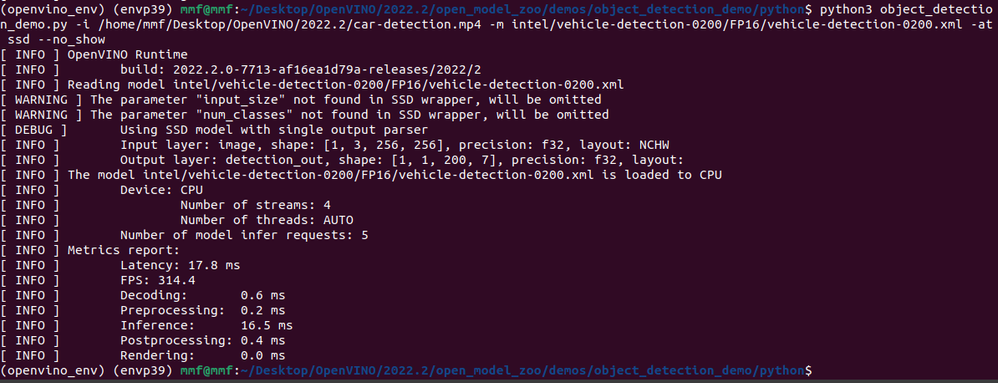

I have validated on my end Object Detection Python Demo with vehicle-detection-0200 model using the car-detection.mp4 video from the sample videos page. I ran the demo on both Ubuntu 20.04 and Ubuntu 22.10 using Intel® Core™ i7-11700K.

I also tested the number of streams ("-nstreams") both at its automatic setting, which resolved to 4, and set manually to 10. I share my results below:

Ubuntu 20:

Ubuntu 20 "-nstreams 10":

Ubuntu 22:

Ubuntu 22 "-nstreams 10":

For your information, vehicle-detection-0200 model is based on the MobileNetV2 backbone. From our Benchmark Results, the ssd_mobilenet_v2_coco_tf model was validated on Intel® Xeon® Gold 5218T CPU and got 412 FPS for FP32 precision. Do note that the CPU configuration uses Ubuntu 20.04.3 LTS and Kernel 5.4.0-42-generic. You can find more information on the configuration details from Benchmark Setup Information.

On another note, regarding the FPS performance issue on your CPU, I will contact the Engineering team for further information and will update you once I have obtained feedback from them.

Regards,

Megat.

Hi Megat,

Thank you for responding to my question.

I ran the same benchmark on an i9-12900K, an i9-10980XE and an i7-11700. With these three processors, we got the expected results. If you want, I can share the Excel sheet where I recorded the benchmark results for these three processors.

I decided to run my test-bench PCs on Ubuntu 22.10 with Kernel 5.19 because Kernel 5.19 and above improves performance on 10th-gen and newer Intel processors. Compared to Ubuntu 22.04 with Kernel 5.15, Kernel 5.19 let us push one more stream on these three processors in our in-development CCTV surveillance application. For 12th-gen and newer Intel processors, however, there is a small problem with P-core and E-core management in the kernel itself, which results in about the same performance as the i9-10980XE. I believe this issue lies in how Ubuntu and its kernel handle 12th-gen Intel processors. Still, we see an improvement for the i7-11700 and i9-10980XE on Kernel 5.19 and above.

Now we are planning to test our application on our local cloud server, which is a dual-socket Intel Xeon Gold 6230. We want to see how many streams and how much FPS throughput we can push. However, the benchmark result is very disappointing: it is far slower than the i9-10980XE, which doesn't make sense to me. We are hoping to use both CPU sockets to the fullest.

Thank you for sharing your benchmark on the i7-11700K. I can confirm that I got results similar to yours.

So here is my follow-up question. Intel has benchmarked the Intel Xeon Gold 5218T at FP32 precision. May I know the configuration that Intel used? What command did your team run? Was it a single-socket or a dual-socket configuration?

P.S.: Regarding my benchmark on the dual-socket Xeon Gold 6230, I tried the NUMA options, which I believe should force benchmark_app to use all threads on both CPU sockets. They did: I could see all the threads in use during inference, but I still got the bad result I posted. I also experimented with other option combinations and still got a very poor result.

Looking forward to your response. Thank you.

Hi Aqil-ITXOTIC,

Thank you for your patience.

For your information, based on the feedback I got, it is suggested to move to C++ implementation if performance is the priority.

The C++ implementation is faster than Python for 2 reasons:

- The language itself is faster.

- There’s GIL in Python which drags performance down for multithreaded scenarios, which is the case for object_detection_demo.

The demo needs to perform stuff like image reading from a video, running model pre/post-processing, and drawing bounding boxes on a resulting frame. That keeps 1 core busy (which is 2 threads because of hyperthreading). That core isn’t used for inference.

This can be verified by comparing different scenarios for the demos. Use -nthreads to compare between scenarios.

If you are experiencing FPS fluctuation that results in poor performance, note that with -nstreams 12 the system produces results for 12 frames at almost the same time, and the demo then pauses for a long time before the next batch of results arrives. You need to choose between throughput and latency scenarios. The latency scenario is achieved by setting -nstreams 1. That reduces FPS fluctuations, but the average FPS will drop.
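To make the throughput-versus-latency trade-off concrete, here is a small sketch that models result delivery as bursts and compares average FPS with the longest pause between results. The frame counts and timings are hypothetical numbers chosen only for illustration; they are not measurements from this thread or from OpenVINO.

```python
def delivery_times(num_frames, burst_size, burst_period_s):
    """Timestamps (seconds) at which results become available, assuming
    results arrive in bursts of `burst_size` every `burst_period_s`."""
    return [((i // burst_size) + 1) * burst_period_s for i in range(num_frames)]


def summarize(times):
    """Return (average FPS, longest gap in seconds between consecutive results)."""
    avg_fps = len(times) / times[-1]
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    return avg_fps, max(gaps)


# Hypothetical scenario A: 12 results land together every 100 ms (like -nstreams 12).
throughput = summarize(delivery_times(120, burst_size=12, burst_period_s=0.1))
# Hypothetical scenario B: 1 result every 12.5 ms (like -nstreams 1).
latency = summarize(delivery_times(120, burst_size=1, burst_period_s=0.0125))

print("bursty: avg %.0f FPS, longest pause %.1f ms" % (throughput[0], throughput[1] * 1000))
print("steady: avg %.0f FPS, longest pause %.1f ms" % (latency[0], latency[1] * 1000))
```

In this toy model the bursty case has the higher average FPS but much longer pauses between results, which is the fluctuation described above; the steady case trades average FPS for evenly spaced results.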

It is suggested to reproduce the experiments with different arguments and see if there is any improvement in the demos. You can help to share the results as well.

- Run the demo by increasing the -nstreams and -nthreads values instead of default values.

- Choose between throughput and latency scenarios: -nstreams 1 for latency scenarios.
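One way to run these experiments systematically is to generate one command line per combination of -nstreams and -nthreads. The sketch below only builds and prints the commands; the paths and the other flags are copied from the command posted earlier in the thread, while the specific values being swept are assumptions picked for illustration.

```python
import itertools
import shlex

# Paths and base flags copied from the command posted earlier in this thread.
DEMO = ("/home/itxotic/Openvino/open_model_zoo-master/demos/"
        "object_detection_demo/python/object_detection_demo.py")
MODEL = ("/home/itxotic/Openvino/model/intel/vehicle-detection-0200/"
         "FP16/vehicle-detection-0200.xml")
VIDEO = "demo_video/car-detection.mp4"


def build_commands(nstreams_values, nthreads_values):
    """Return one shell-quoted demo command per (nstreams, nthreads) pair."""
    commands = []
    for nstreams, nthreads in itertools.product(nstreams_values, nthreads_values):
        args = [
            "python3", DEMO, "-i", VIDEO, "-m", MODEL, "-at", "ssd", "--no_show",
            "-nstreams", str(nstreams), "-nthreads", str(nthreads),
        ]
        commands.append(shlex.join(args))
    return commands


# The swept values are hypothetical; adjust them to the machine under test.
commands = build_commands(nstreams_values=[1, 4, 10], nthreads_values=[20, 40, 80])
for command in commands:
    print(command)
```

Recording the FPS reported for each printed command makes it easier to see which setting, if any, changes the result.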

On the other hand, I would suggest you try out our latest OpenVINO™ Toolkit 2022.3 version as this issue might be minimized in the newer version.

Regarding the Benchmark Results for the Intel Xeon Gold 5218T at FP32 precision, you can find the listing of all platforms and configurations used for testing in the HW platforms (pdf) and Configuration Details (xlsx) documents. Additionally, the CPU Devices page mentions that on multi-socket platforms, load balancing and memory usage distribution between NUMA nodes are handled automatically.

Regards,

Megat

Hi Megat,

Thank you for your feedback.

For now, I will stick with the C++ benchmark_app to benchmark my server's performance.

The FPS fluctuation is still an issue I don't have an answer to. But let's put that aside; I want to ask a new question.

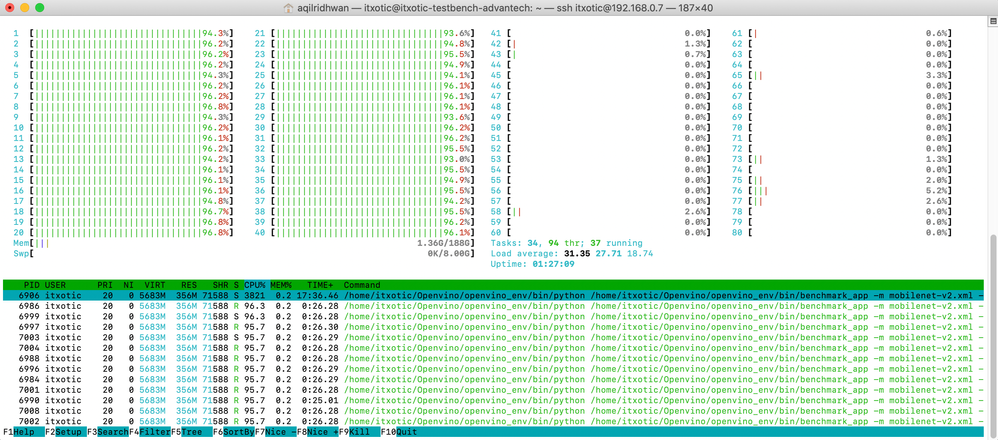

I am running benchmark_app with the mobilenet-v2 FP16 model on Ubuntu Live Server 20.04 with Kernel 5.04, and this is the result I get:

The FPS I got is reasonably good, but if we look at the CPU usage, only one socket is doing the work while the other socket is idle.

My question is: how do I get both sockets to run at full load with benchmark_app? Which option or flag should I use in the command?

Hi Aqil-ITXOTIC,

For your information, a similar issue, in which the CPU uses only half of the available cores, is reported on the GitHub page here.

To run the benchmark application on full load using all cores on your CPU, you can try setting the number of threads (-nthreads) to 80 (the number of your virtual cores).

However, please note that running your CPU at full load on all cores might not give the best performance. Using only half of the logical processors is the expected default behavior in the current design: a single-socket platform uses maximum CPU utilization, including hyper-threading, while a dual-socket platform uses 50% CPU utilization, without hyper-threading.

Each physical core is configured to support two logical processors. Either of the two logical processors can use all of the resources of the physical core. Most processes do not use all the resources of the physical core. It is possible to use both logical processors on a physical core; however, performance might go down when using both.

On the other hand, using one logical processor per physical core could be considered to be 100% CPU utilization, but is reported to be 50% CPU utilization. You can reach 50% CPU utilization either by using one logical processor on each physical core or by using two logical processors on one-half of the physical cores. You can find more details regarding CPU usage from the discussion here.
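The arithmetic behind the 50% figure can be sketched as follows. The core counts assume a Xeon Gold 6230 with 20 physical cores per socket and two hardware threads per core, which is consistent with the 80 virtual cores mentioned above; the helper name `reported_utilization` is made up for this illustration.

```python
def reported_utilization(busy_logical, sockets, cores_per_socket, threads_per_core=2):
    """Percentage of logical processors that are busy, as a system monitor
    would report it when every busy logical processor is fully loaded."""
    total_logical = sockets * cores_per_socket * threads_per_core
    return 100.0 * busy_logical / total_logical


# Assumed topology: 2 sockets x 20 cores x 2 threads per core.
SOCKETS, CORES_PER_SOCKET = 2, 20
physical_cores = SOCKETS * CORES_PER_SOCKET   # 40 physical cores
logical_processors = physical_cores * 2       # 80 logical processors

# One busy logical processor on every physical core.
one_per_core = reported_utilization(physical_cores, SOCKETS, CORES_PER_SOCKET)
# Every logical processor busy (e.g. -nthreads 80).
all_logical = reported_utilization(logical_processors, SOCKETS, CORES_PER_SOCKET)

print("one thread per physical core: %.0f%% reported" % one_per_core)
print("all logical processors:       %.0f%% reported" % all_logical)
```

Under these assumptions, keeping every physical core busy with a single thread shows up as 50% in a system monitor even though no physical core is idle. The same 50% can also come from one fully loaded socket with the other idle, so the total percentage alone does not tell the two cases apart; per-core usage does.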

Additionally, as mentioned on the CPU Devices page, load balancing and memory usage distribution on multi-socket systems are handled automatically. The CPU plugin should optimize the number of parallel and queued inferences for CPU devices based on the number of CPU cores. However, you can try to change different parameters (e.g. -nstreams, -nthreads) to find the optimum configuration.

Regards,

Megat

Hi Aqil-ITXOTIC,

Thank you for your question. This thread will no longer be monitored since we have provided a suggestion. If you need any additional information from Intel, please submit a new question.

Regards,

Megat
