Hi Guys,

Finally, I made tiny-yolov3 work on my NCS2 by following the official documentation!

Environment:

OpenVINO: 2019.R1.087

Python: v3.6.7

TensorFlow: 1.12.0

Operating System: Windows 10

Darknet: built with CUDA v9.2

The performance is quite impressive (about 20 fps).

===========================

InferenceEngine:

API version ............ 1.6

Build .................. 22443

Parsing input parameters.

API version ............ 1.6

Build .................. 22443

Description ....... myriadPlugin

Network and weights loaded in 43.36ms.

Model loaded to the plugin in 1702.50ms.

Start inference...

Inference time: 48.32 ms

Parsing Yolo results ....

Parsing Yolo results ....

Anchor 0 found object(90,185,402,613) in cell (1,8)

Anchor 1 found object(58,217,291,725) in cell (1,8)

Anchor 2 found object(0,339,98,749) in cell (1,8)

Anchor 0 found object(324,400,401,603) in cell (3,8)

Anchor 1 found object(299,426,294,711) in cell (3,8)

Anchor 2 found object(201,524,110,749) in cell (3,8)

Anchor 0 found object(506,586,400,604) in cell (5,8)

Anchor 1 found object(479,612,292,712) in cell (5,8)

Anchor 2 found object(376,715,105,749) in cell (5,8)

Anchor 0 found object(618,692,406,606) in cell (6,8)

Anchor 1 found object(594,716,300,712) in cell (6,8)

Anchor 2 found object(499,811,117,749) in cell (6,8)

Anchor 0 found object(815,897,409,601) in cell (8,8)

Anchor 1 found object(788,924,307,703) in cell (8,8)

Anchor 2 found object(682,1029,131,749) in cell (8,8)

Anchor 0 found object(909,998,408,601) in cell (9,8)

Anchor 1 found object(879,1027,306,703) in cell (9,8)

Anchor 2 found object(765,1142,129,749) in cell (9,8)

Anchor 0 found object(1118,1191,402,608) in cell (11,8)

Anchor 1 found object(1094,1215,293,718) in cell (11,8)

Anchor 2 found object(1001,1302,105,749) in cell (11,8)

Execution successful,

===========================

However, I still see a gap between the two outputs.

Here is my code (I have also attached the whole project):

// Copyright (C) 2018 Intel Corporation

// SPDX-License-Identifier: Apache-2.0

//

/**

* \brief The entry point for the Inference Engine object_detection demo application

* \file object_detection_demo_yolov3_async/main.cpp

* \example object_detection_demo_yolov3_async/main.cpp

*/

#include <gflags/gflags.h>

#include <functional>

#include <iostream>

#include <fstream>

#include <random>

#include <memory>

#include <chrono>

#include <vector>

#include <string>

#include <algorithm>

#include <iterator>

#include <math.h>

using namespace std;

#include <opencv2/opencv.hpp>

#include <inference_engine.hpp>

using namespace InferenceEngine;

#include <samples/ocv_common.hpp>

#include <samples/common.hpp>

#include <samples/slog.hpp>

using namespace gflags;

#include "tiny_yolov3_async.hpp"

#include <ext_list.hpp>

#include <ie_layers.h>

typedef std::chrono::high_resolution_clock Time;

typedef std::chrono::duration<double, std::ratio<1, 1000>> ms;

typedef std::chrono::duration<float> fsec;

bool ParseAndCheckCommandLine(int argc, char *argv[]) {

// ---------------------------Parsing and validating the input arguments--------------------------------------

ParseCommandLineNonHelpFlags(&argc, &argv, true);

if (FLAGS_h) {

showUsage();

return false;

}

cout << "Parsing input parameters.\n" ;

if (FLAGS_i.empty()) {

throw logic_error("Parameter -i is not set");

}

if (FLAGS_m.empty()) {

throw logic_error("Parameter -m is not set");

}

return true;

}

static int EntryIndex(int side, int lcoords, int lclasses, int location, int entry) {

int n = location / (side * side);

int loc = location % (side * side);

return n * side * side * (lcoords + lclasses + 1) + entry * side * side + loc;

}

struct DetectionObject {

int xmin, ymin, xmax, ymax, class_id;

float confidence;

/*DetectionObject(double x, double y, double h, double w, int class_id, float confidence, float h_scale, float w_scale) {

this->xmin = static_cast<int>((x - w * 0.5) * w_scale);

this->ymin = static_cast<int>((y - h * 0.5) * h_scale);

this->xmax = static_cast<int>(this->xmin + w * w_scale);

this->ymax = static_cast<int>(this->ymin + h * h_scale);

this->class_id = class_id;

this->confidence = confidence;

}*/

bool operator<(const DetectionObject &s2) const {

return this->confidence < s2.confidence;

}

};

double IntersectionOverUnion(const DetectionObject &box_1, const DetectionObject &box_2) {

double width_of_overlap_area = fmin(box_1.xmax, box_2.xmax) - fmax(box_1.xmin, box_2.xmin);

double height_of_overlap_area = fmin(box_1.ymax, box_2.ymax) - fmax(box_1.ymin, box_2.ymin);

double area_of_overlap;

if (width_of_overlap_area < 0 || height_of_overlap_area < 0)

area_of_overlap = 0;

else

area_of_overlap = width_of_overlap_area * height_of_overlap_area;

double box_1_area = (box_1.ymax - box_1.ymin) * (box_1.xmax - box_1.xmin);

double box_2_area = (box_2.ymax - box_2.ymin) * (box_2.xmax - box_2.xmin);

double area_of_union = box_1_area + box_2_area - area_of_overlap;

return area_of_overlap / area_of_union;

}

inline double logistic_activate(double x) { return x; }

void ParseYOLOV3Output(const CNNLayerPtr& layer, const float* output_data, const SizeVector& dims , int img_w, int img_h,

double threshold, vector<DetectionObject> &objects) {

// --------------------------- Validating output parameters -------------------------------------

const int out_blob_h = dims[2];

const int out_blob_w = dims[3];

if (layer->type != "RegionYolo")

throw runtime_error("Invalid output type: " + layer->type + ". RegionYolo expected");

if (out_blob_h != out_blob_w)

throw runtime_error("Invalid size of output " + layer->name +

" It should be in NCHW layout and H should be equal to W. Current H = " + to_string(out_blob_h) +

", current W = " + to_string(out_blob_w));

// --------------------------- Extracting layer parameters -------------------------------------

std::vector<float> anchors = { 10,14,23,27,37,58,81,82,135,169,344,319 };

try { anchors = layer->GetParamAsFloats("anchors"); }

catch (...) {}

cout << "Parsing Yolo results ....\n";

int num = 3;

try { num = layer->GetParamAsInts("mask").size(); }

catch (...) {}

int coords = 4;

coords = layer->GetParamAsInt("coords");

int classes = 1;

classes = layer->GetParamAsInt("classes");

int channels = num * (1 + classes + coords);

if (channels != dims[1]) {

throw runtime_error("Invalid size of channels " + layer->name +

" It should be " + to_string(channels) + ", Current channels = " + to_string(dims[1]) );

}

int anchor_offset = (13 == out_blob_h) ? 6 : 0;

int map_size = out_blob_h * out_blob_w;

double fw = 1.0 / out_blob_w;

double fh = 1.0 / out_blob_h;

DetectionObject obj;

int anchor_channels = coords + classes + 1;

for (int i = 0; i < map_size; i++) {

int row = i / out_blob_w;

int col = i % out_blob_w;

for (int a = 0, c_offset = 0; a < num;

a++, c_offset += anchor_channels) {

int x_index = c_offset * map_size + i; // c_offset selects this anchor's block of channels

int y_index = x_index + map_size;

int w_index = y_index + map_size;

int h_index = w_index + map_size;

int conf_index = h_index + map_size;

obj.confidence = logistic_activate(output_data[conf_index]);

if (obj.confidence < threshold) continue;

//cool. we get predictions

double x = (col + logistic_activate(output_data[x_index])) * fw;

double y = (row + logistic_activate(output_data[y_index])) * fh;

double h = exp(logistic_activate(output_data[h_index])) *

anchors[anchor_offset + 2 * a + 1] / 416.0;

double w = exp(logistic_activate(output_data[w_index]))

* anchors[anchor_offset + 2 * a] / 416.0;

int cls_index = conf_index + map_size;

obj.xmin = (x - 0.5 * w) * img_w;

if (obj.xmin < 0) obj.xmin = 0;

obj.xmax = (x + 0.5 * w) * img_w;

if (obj.xmax >= img_w)

obj.xmax = img_w - 1;

obj.ymin = (y - 0.5 * h) * img_h;

if (obj.ymin < 0) obj.ymin = 0;

obj.ymax = (y + 0.5 * h) * img_h;

if (obj.ymax >= img_h) obj.ymax = img_h - 1;

cout << "Anchor " << a << " found object(" << obj.xmin << "," << obj.xmax <<

"," << obj.ymin << "," << obj.ymax << ") in cell (" << col << "," << row << ")\n";

obj.class_id = -1;

for (int c = 0; c < classes; c++) {

double cls_probability = logistic_activate(output_data[cls_index]);

cls_index += map_size;

if (cls_probability * obj.confidence < threshold) continue;

obj.class_id = c;

}

if (obj.class_id != -1) {

objects.push_back(obj);

}

}

}

}

int main(int argc, char *argv[]) {

try {

/** This demo covers a certain topology and cannot be generalized for any object detection **/

cout << "InferenceEngine: " << GetInferenceEngineVersion() << endl;

// ------------------------------ Parsing and validating the input arguments ---------------------------------

if (!ParseAndCheckCommandLine(argc, argv)) {

return 0;

}

auto t0 = Time::now();

InferencePlugin plugin = PluginDispatcher({""}).getPluginByDevice("MYRIAD");

printPluginVersion(plugin, cout);

/** Per-layer metrics **/

if (FLAGS_pc) {

plugin.SetConfig({ { PluginConfigParams::KEY_PERF_COUNT, PluginConfigParams::YES } });

}

// -----------------------------------------------------------------------------------------------------

// --------------- 2. Reading the IR generated by the Model Optimizer (.xml and .bin files) ------------

t0 = Time::now();

CNNNetReader netReader;

netReader.ReadNetwork(FLAGS_m);

netReader.ReadWeights(fileNameNoExt(FLAGS_m) + ".bin");

auto t1 = Time::now();

fsec fs = t1 - t0;

double dur = chrono::duration_cast<ms>(fs).count();

std::cout << " Network and weights loaded in " << fixed << setprecision(2) << dur << "ms.\n";

CNNNetwork network = netReader.getNetwork();

vector<string> labels;

ifstream f("coco.names");

if (f.is_open()) {

char label[100];

while (f.getline(label, 100)) {

labels.push_back(label);

}

f.close();

}

cv::Mat orig_image = cv::imread(FLAGS_i.c_str());

if (orig_image.empty()) {

slog::warn << "Image " << FLAGS_i << " cannot be read!" << slog::endl;

return 0;

}

int img_w = orig_image.cols;

int img_h = orig_image.rows;

InputsDataMap inputs_info(network.getInputsInfo());

if (inputs_info.size() != 1) {

throw logic_error("This demo accepts networks that have only one input");

}

InputInfo::Ptr& input = inputs_info.begin()->second;

string inputName = inputs_info.begin()->first;

input->setPrecision(Precision::U8);

// --------------------------------- Preparing output blobs -------------------------------------------

OutputsDataMap outputs_info(network.getOutputsInfo());

for (auto& output : outputs_info) {

output.second->setPrecision(Precision::FP32);

}

t0 = Time::now();

// --------------------------- 4. Loading model to the plugin ------------------------------------------

ExecutableNetwork executable_network = plugin.LoadNetwork(network, {});

// --------------------------- 5. Creating infer request -----------------------------------------------

InferRequest infer_request = executable_network.CreateInferRequest();

Blob::Ptr network_input = infer_request.GetBlob(inputName);

const SizeVector& in_dims = network_input->getTensorDesc().getDims();

int net_w = in_dims[3];

int net_h = in_dims[2];

int net_c = in_dims[1];

int image_size = net_h * net_w;

cv::Mat resized = cv::Mat::zeros(net_h, net_w, CV_8UC3);

resize(orig_image, resized, resized.size());

t1 = Time::now();

unsigned char* data = static_cast<unsigned char*>(network_input->buffer());

for (int i = 0; i < image_size; i++) {

for (size_t c = 0; c < net_c; c++) {

data[c * image_size + i] = resized.data[i * net_c + c];

}

}

// --------------------------- 6. Doing inference ------------------------------------------------------

dur = chrono::duration_cast<ms>(t1 - t0).count();

std::cout << "Model loaded to the plugin in " << fixed << setprecision(2) << dur << "ms.\nStart inference...\n";

t0 = Time::now();

infer_request.Infer();

t1 = Time::now();

dur = chrono::duration_cast<ms>(t1 - t0).count();

std::cout << "Inference time: " << fixed << setprecision(2) << dur << " ms\n";

vector<DetectionObject> objects;

for (auto& output : outputs_info) {

const Blob::Ptr output_blob = infer_request.GetBlob(output.first);

const float* detection = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(output_blob->buffer());

const SizeVector& out_dims = output_blob->getTensorDesc().getDims();

ParseYOLOV3Output(network.getLayerByName(output.first.c_str()),detection,out_dims, img_w,img_h, FLAGS_t,objects);

}

std::sort(objects.begin(), objects.end());

for (int i = 0; i < objects.size(); ++i) {

if (objects[i].confidence == 0) continue;

for (int j = i + 1; j < objects.size(); ++j)

if (IntersectionOverUnion(objects[i], objects[j]) >= 0.3)

objects[j].confidence = 0;

}

char text[100];

for (DetectionObject &object : objects) {

if (object.confidence < FLAGS_t) continue;

int label = object.class_id;

float confidence = object.confidence;

if (FLAGS_r) {

std::cout << "[" << label << "] element, prob = " << confidence <<

" (" << object.xmin << "," << object.ymin << ")-(" << object.xmax << "," << object.ymax << ")"

<< ((confidence > FLAGS_t) ? " WILL BE RENDERED!" : "") << endl;

}

if (confidence > FLAGS_t) {

/** Drawing only objects when >confidence_threshold probability **/

if (object.class_id < labels.size()) {

sprintf(text, "%s : %.2f%%", labels[object.class_id].c_str(), object.confidence* 100.0);

}

else {

sprintf(text, "label #%d : %.2f%%", label, object.confidence * 100.0);

}

cv::putText(orig_image, text, cv::Point2i(object.xmin, object.ymin - 5),

cv::FONT_HERSHEY_COMPLEX_SMALL, 1, cv::Scalar(0, 0, 255));

cv::rectangle(orig_image, cv::Point2i(object.xmin, object.ymin),

cv::Point2i(object.xmax, object.ymax), cv::Scalar(0, 0, 255));

}

}

if (FLAGS_pc) {

printPerformanceCounts(infer_request, std::cout);

}

vector<int> params;

params.push_back(cv::IMWRITE_JPEG_QUALITY);

params.push_back(90);

imwrite("predictions.jpg", orig_image, params);

}

catch (const exception& error) {

cerr << "[ ERROR ] " << error.what() << endl;

return 1;

}

catch (...) {

cerr << "[ ERROR ] Unknown/internal exception happened.\n" ;

return 1;

}

cout << "Execution successful,\n" ;

return 0;

}

Is this because I did not write the code right?
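One detail in the listing above may be worth checking independently of the device. The detections are sorted with `std::sort` and `operator<`, which orders them by ascending confidence, and the suppression loop then zeroes later (higher-confidence) boxes that overlap earlier ones. Greedy non-maximum suppression usually visits boxes from highest to lowest confidence. The following is a minimal, self-contained sketch of that variant; the names `Box`, `IoU`, and `GreedyNms` are hypothetical and not part of the OpenVINO samples.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical stand-in for the DetectionObject struct in the listing.
struct Box {
    int xmin, ymin, xmax, ymax;
    float confidence;
};

// Intersection over union of two axis-aligned boxes.
double IoU(const Box& a, const Box& b) {
    const double iw = std::min(a.xmax, b.xmax) - std::max(a.xmin, b.xmin);
    const double ih = std::min(a.ymax, b.ymax) - std::max(a.ymin, b.ymin);
    const double inter = (iw > 0 && ih > 0) ? iw * ih : 0.0;
    const double area_a = static_cast<double>(a.xmax - a.xmin) * (a.ymax - a.ymin);
    const double area_b = static_cast<double>(b.xmax - b.xmin) * (b.ymax - b.ymin);
    const double uni = area_a + area_b - inter;
    return uni > 0 ? inter / uni : 0.0;
}

// Keep the most confident box of every overlapping group.
std::vector<Box> GreedyNms(std::vector<Box> boxes, double iou_threshold) {
    // Highest confidence first, so a kept box is never weaker than
    // the boxes it suppresses.
    std::sort(boxes.begin(), boxes.end(),
              [](const Box& a, const Box& b) { return a.confidence > b.confidence; });
    std::vector<Box> kept;
    for (const Box& candidate : boxes) {
        bool suppressed = false;
        for (const Box& k : kept) {
            if (IoU(candidate, k) >= iou_threshold) {
                suppressed = true;
                break;
            }
        }
        if (!suppressed) kept.push_back(candidate);
    }
    return kept;
}
```

With the 0.3 threshold used in the listing, two heavily overlapping boxes with confidences 0.9 and 0.6 would be reduced to the 0.9 box.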

Dear Tsin, Ross,

Congratulations on your success! Does this gap also exist when you run the OpenVINO sample object_detection_demo_yolov3_async.py?

Thanks,

Shubha

Dear Ross, it seems to me that there is a problem with your anchor boxes. According to darknet issue 568, darknet includes a program that calculates the anchors from the images passed in:

and for 9 anchors for YOLO-3 I used C-language darknet:

darknet3.exe detector calc_anchors obj.data -num_of_clusters 9 -width 416 -height 416 -showpause

I personally haven't used this tool, but I think such a tool may help solve your bounding box issue. Remember, these anchors are coded into your yolo_v3.json, the default version of which is found under C:\Program Files (x86)\IntelSWTools\openvino_2019.1.087\deployment_tools\model_optimizer\extensions\front\tf. In other words, these anchor box values feed into generation of IR by Model Optimizer.

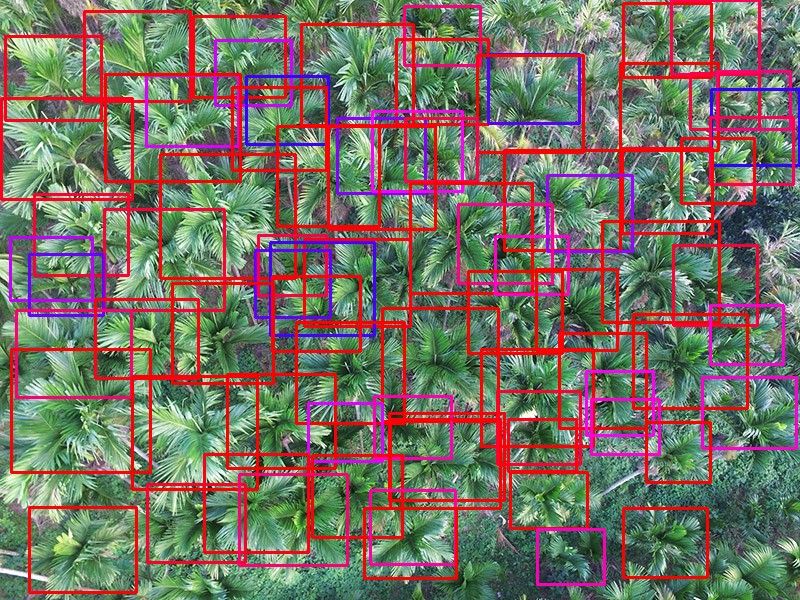

The Intersection over Union (IoU) logic is pretty standard, but it operates on boxes derived from the anchors, so the anchors in turn dictate the bounding boxes. Also, the OpenVINO image you posted above has pretty terrible probabilities. This could very well happen with a poor initial choice of anchors.

This is also an interesting excerpt:

YOLO's anchors are specific to the dataset it is trained on (the default set is based on PASCAL VOC). They ran k-means clustering on the normalized width and height of the ground-truth bounding boxes and obtained 5 anchors.

The final values are expressed in grid cells, not pixel coordinates. YOLO default set:

anchors = 1.3221, 1.73145, 3.19275, 4.00944, 5.05587, 8.09892, 9.47112, 4.84053, 11.2364, 10.0071

This means the first anchor is slightly larger than one grid cell [1.3221, 1.73145], and the last anchor almost covers the whole image [11.2364, 10.0071], considering the image is a 13x13 grid. I hope this gives you a clearer idea, if not a complete one.
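To relate the grid-unit anchors in this excerpt to the pixel-unit anchors in the listing at the top of the thread (10,14, 23,27, ..., which the parser divides by 416), a small conversion helps. The sketch below assumes a square network input and a square output grid; `AnchorGridToPixels` is a hypothetical helper name, not a darknet or OpenVINO function.

```cpp
#include <cassert>
#include <utility>

// Convert one anchor from grid-cell units to pixels.
// net_size:   side of the (square) network input in pixels, e.g. 416
// grid_cells: side of the (square) output grid, e.g. 13
std::pair<double, double> AnchorGridToPixels(double w_cells, double h_cells,
                                             int net_size, int grid_cells) {
    assert(grid_cells > 0);
    // One grid cell covers net_size / grid_cells pixels (416 / 13 = 32).
    const double stride = static_cast<double>(net_size) / grid_cells;
    return { w_cells * stride, h_cells * stride };
}
```

For a 416x416 input and a 13x13 grid, the first anchor [1.3221, 1.73145] comes out at roughly 42 x 55 pixels, and the last one [11.2364, 10.0071] at roughly 360 x 320 pixels.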

An important question for you: is your input image size 416x416 or 608x608?

Thanks and I hope this helps you,

Shubha

Hi Shubha,

Thank you for your detailed reply and explanation. However, I think you may have overlooked some information in this case.

The anchors I used for NCS2 inference and GPU inference are the same. Furthermore, in the converted model generated by my RQNet, I don't refer to the yolo_v3.json file; I use the anchors coded in my network definition file instead. The gap still exists.

In a word, with same inputs NCS2 and GPU do not produce same outputs in this case.

And I use 416x416 as the network size.

When I debug my RQCodes, I usually feed the same input and compare the output of every layer against a reference tool (like darknet.exe), and sometimes that leads me to the root cause of the issue.

With the same input image and model, I think the NCS2 should output the same data as the GPU does in every layer.

I can do this inspection when I have some time and let you know which layer does not produce the expected output. However, I am not sure how to define an output layer that simply copies its input data. It would be great if you could tell me.
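A per-layer comparison like the one described here can be reduced to one number per layer. The sketch below assumes both outputs have already been copied into `std::vector<float>` buffers with the same layout; `DiffReport` and `CompareLayerOutputs` are hypothetical names. Because the NCS2 computes in FP16, small differences against an FP32 reference are expected, so the interesting layer is the first one where the difference jumps.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct DiffReport {
    double max_abs_diff;  // largest |reference[i] - candidate[i]|
    std::size_t index;    // element index where that difference occurs
};

// Compare two flattened layer outputs element by element.
DiffReport CompareLayerOutputs(const std::vector<float>& reference,
                               const std::vector<float>& candidate) {
    assert(reference.size() == candidate.size());
    DiffReport report{0.0, 0};
    for (std::size_t i = 0; i < reference.size(); ++i) {
        const double d = std::fabs(static_cast<double>(reference[i]) - candidate[i]);
        if (d > report.max_abs_diff) {
            report.max_abs_diff = d;
            report.index = i;
        }
    }
    return report;
}
```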

Thank you again.

Ross

Dear Tsin, Ross

you are absolutely correct. I missed this key point:

In a word, with same inputs NCS2 and GPU do not produce same outputs in this case.

Ross, does the GPU produce correct outputs? If it does, then this must be an NCS2 bug. There is a way to check: you can add your own outputs to the network and print the values for those outputs. Please study the forum post below (in particular, the sample object_detection_demo).

https://software.intel.com/en-us/forums/computer-vision/topic/804937#comment-1934014

Ross, if you could pinpoint the actual problematic layer (the one that misbehaves on NCS2), that would be very helpful for filing a bug. A filed bug quickly gets development's attention: either they will provide a workaround or commit to fixing the bug in the next release.

Thanks a lot for your cooperation -

Shubha

Hi Shubha,

After training for tens of thousands of batches on the GPU and ending up with a converged model as expected, I think the GPU computation is reliable.

I'll compare the outputs of every layer inferred by NCS2 and GPU and let you know if I find something.

Cheers,

Ross

Dear Tsin, Ross

I hope you understand what I mean by GPU: not an Nvidia GPU! I mean, if FP16 has the same problem on an Intel GPU as it does on NCS2, this is an interesting data point. Or if it doesn't have the issue on an Intel GPU but it does on NCS2, that is again an interesting data point. It makes no sense to compare your results on an Nvidia GPU with NCS2 because OpenVINO and Nvidia use completely different toolchains.

I hope you understand-

Shubha

Hi Shubha, now I get your point, haha.

Well, I do have two GPUs in my laptop (Intel 630 and Nvidia 1060). By default, I use the Intel GPU as the display device and the Nvidia GPU as the computation device. I can do FP16 inference with my RQNet.exe on the Nvidia GPU, and the result is nearly the same as FP32.

As for using the Intel GPU as the computation device (with OpenVINO), should I force the OS to use the Nvidia GPU as the display device?

Cheers,

Ross

RossT,

Thanks for the interest.

Using Intel on-die graphics as a display device should not have an effect on compute performance.

*edit/rephrase: There should be no compute-related reason to choose the Intel device to drive the display versus some other device. Intel on-die graphics should be togglable through the system BIOS. If a difference in compute performance is observed for the Intel device simply because it's driving the display, could you please cite the issue in the forums and provide a reproducer?

Thanks.

-MichaelC

Hi MichaelC,

I converted my own weights into an IR model (FP32) and ran inference on the GPU, and I get a lot of false positives.

I also see some confidence values greater than 1.0.

See the demonstration here:

http://sanyafruits.com/temp/tiny-yolo-v3-demo.html

With CUDA, the output looks perfect.

I guess something goes wrong in the "RegionYolo" layer, e.g. in the logistic activation function.

I will replace that layer with my own custom layer and investigate. Please check my assumption if possible.
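The reasoning behind this suspicion can be made concrete: the logistic function maps any real input into the interval (0, 1), so a confidence above 1.0 means that value has not passed through it. The sketch below shows the function and a simple range check over a buffer of confidences; `Logistic` and `LooksActivated` are hypothetical helper names.

```cpp
#include <cmath>
#include <vector>

// Standard logistic (sigmoid) function.
inline double Logistic(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Returns true if every value lies in [0, 1], which is a necessary
// (not sufficient) sign that a logistic activation has been applied.
bool LooksActivated(const std::vector<float>& values) {
    for (float v : values) {
        if (v < 0.0f || v > 1.0f) return false;
    }
    return true;
}
```

Running `LooksActivated` on the confidence plane of the detection layer output would flag a buffer that still contains raw values such as 1.7.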

Cheers

Ross

I checked, and yes, there is a bug in the logistic activation of the YOLO layer.

I added these lines to parse the information from the outputs of the conv layers:

network.addOutput("layer16.convolution");

network.addOutput("layer21.convolution");

and

for (auto &output : outputInfo) {

auto output_name = output.first;

if(output_name.find("yoloex") != std::string::npos) continue ;

CNNLayerPtr layer = network.getLayerByName(output_name.c_str());

Blob::Ptr blob = async_infer_request_curr->GetBlob(output_name);

ParseYOLOV3Output(layer, blob, resized_im_h, resized_im_w, height, width, FLAGS_t, objects);

}
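The thread does not show how the raw convolution outputs were decoded, so the following is only a sketch of the standard YOLOv3 box decoding with the logistic activation applied explicitly in user code. It assumes an NCHW output where each anchor owns consecutive planes in the order tx, ty, tw, th, objectness; `DecodedBox` and `DecodeRawCell` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

// Box centre and size normalised to [0, 1] of the image, plus objectness.
struct DecodedBox {
    double x, y, w, h;
    double confidence;
};

inline double Logistic(double v) { return 1.0 / (1.0 + std::exp(-v)); }

// raw points at the tx plane of one anchor in a raw (not yet activated)
// convolution output. Planes are grid_w * grid_h floats apart.
// anchor_w / anchor_h are in pixels of the network input (net_w x net_h).
DecodedBox DecodeRawCell(const float* raw, int cell, int grid_w, int grid_h,
                         double anchor_w, double anchor_h, int net_w, int net_h) {
    assert(grid_w > 0 && grid_h > 0 && cell >= 0 && cell < grid_w * grid_h);
    const int map_size = grid_w * grid_h;
    const int col = cell % grid_w;
    const int row = cell / grid_w;
    DecodedBox box;
    // Centre offsets and objectness go through the logistic function;
    // width and height use exp() scaled by the anchor.
    box.x = (col + Logistic(raw[0 * map_size + cell])) / grid_w;
    box.y = (row + Logistic(raw[1 * map_size + cell])) / grid_h;
    box.w = std::exp(raw[2 * map_size + cell]) * anchor_w / net_w;
    box.h = std::exp(raw[3 * map_size + cell]) * anchor_h / net_h;
    box.confidence = Logistic(raw[4 * map_size + cell]);
    return box;
}
```

Class scores, which follow the objectness plane, would be passed through `Logistic` in the same way before being compared against the threshold.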

Hi Shubha, I checked, and it turned out that the GPU output is the same. :(

Maybe the issue comes from the logistic activation in the detection layer.

I made a workaround, and now the results are perfect. Please fix this bug.

Dearest Tsin, Ross,

I don't understand exactly. What is the bug? And what was your workaround? I am still not clear on this. Also, I have PM'd you so you can send me the bad code and the workaround (fixed) code.

Thanks for your help !

Shubha

Any update here? I also use yolov3 on OpenVINO. I find that the weights and the pb model perform well, but when I convert the model to IR, there are a lot of false positives.

Also see https://github.com/PINTO0309/OpenVINO-YoloV3/issues/34 and https://github.com/PINTO0309/OpenVINO-YoloV3/issues/32 .

I wonder if it's because there are many small objects in our dataset, so the small-object detection results are not good.
