Hi, I am having trouble running classification on my NCS1 (Myriad 2). The ONNX -> IR conversion works fine, but classification throws either:

RuntimeError: Can not init Myriad device: NC_ERROR

(model conversion: openvino/ubuntu18_dev:2020.4 Docker image; classification: openvino/ubuntu18_dev:2020.4 Docker image)

or:

RuntimeError: Unexpected CNNNetwork format: it was converted to deprecated format prior plugin's call

(model conversion: openvino/ubuntu18_dev:2020.3 Docker image; classification: openvino/ubuntu18_dev:2020.3 Docker image)

I also tested some of the latest versions (2022), with the same result. The same model does not throw any errors on my NCS2 (Myriad X).

Running benchmark_app from openvino_2020.3.341 also fails:

[Step 7/11] Loading the model to the device

[ ERROR ] Can not init Myriad device: NC_ERROR

I have attached the IR model and an input image.

Any idea?

Regards

Hi Benguigui__Michael,

Thank you for reaching out to us.

The RuntimeError: Can not init Myriad device: NC_ERROR occurs because the Intel® Movidius™ Neural Compute Stick (NCS1) is supported only up to the OpenVINO™ 2020.3 LTS release. You can refer here for more information.
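To check in advance whether a given OpenVINO™ build falls inside the NCS1 support window stated above, a simple version comparison is enough. This is a minimal sketch: `parse_release` and `supports_ncs1` are hypothetical helpers, and the input is assumed to be a version string such as the `API version` or `Build` lines that benchmark_app prints in Step 2.

```python
import re
from typing import Tuple

# Last release that still supports the first-generation NCS (Myriad 2),
# as stated in the reply above: OpenVINO 2020.3 LTS.
LAST_NCS1_RELEASE = (2020, 3)


def parse_release(version_string: str) -> Tuple[int, int]:
    """Extract (year, minor) from strings such as
    '2.1.2020.3.0-3467-15f2c61a-releases/2020/3' or '2022.1.0'."""
    match = re.search(r"(20\d\d)\.(\d+)", version_string)
    if match is None:
        raise ValueError(f"no OpenVINO release found in {version_string!r}")
    return int(match.group(1)), int(match.group(2))


def supports_ncs1(version_string: str) -> bool:
    """True if the release is 2020.3 or older (tuple comparison)."""
    return parse_release(version_string) <= LAST_NCS1_RELEASE
```

For example, `supports_ncs1("2.1.2020.3.0-3467-15f2c61a-releases/2020/3")` is true, while `supports_ncs1("2020.4.0")` is false, which matches the NC_ERROR seen with the 2020.4 image.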

For the RuntimeError: Unexpected CNNNetwork format, please make sure the IR model was generated with the same OpenVINO™ version that you use for inference. Also, could you tell us how you run the Docker image?
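One way to see which versions an IR carries is to read them from its .xml file. This is a sketch under an assumption: that the file follows the Model Optimizer layout of this era, with a root `<net version="...">` element and a `<meta_data><MO_version value="..."/>` child. `ir_versions` is a hypothetical helper, not part of the OpenVINO™ API.

```python
import xml.etree.ElementTree as ET
from typing import Optional, Tuple


def ir_versions(xml_text: str) -> Tuple[Optional[str], Optional[str]]:
    """Return (IR format version, Model Optimizer version) from an IR .xml.

    Assumes a root <net version="..."> element with an optional
    <meta_data><MO_version value="..."/> child; missing values come back as None.
    """
    root = ET.fromstring(xml_text)
    ir_version = root.get("version")
    mo_node = root.find("./meta_data/MO_version")
    mo_version = mo_node.get("value") if mo_node is not None else None
    return ir_version, mo_version
```

Usage: `ir_versions(pathlib.Path("model.xml").read_text())`, then compare the returned Model Optimizer version with the `Build` line printed by the runtime inside the container.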

To use the MYRIAD accelerator in Docker, run the image with the following command:

docker run -it --device-cgroup-rule='c 189:* rmw' -v /dev/bus/usb:/dev/bus/usb --rm openvino/ubuntu18_dev:2020.3
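When Docker is launched from a script (as in the array-style commands later in this thread), building the argument list directly avoids shell quoting, so the cgroup rule `c 189:* rmw` reaches Docker unchanged. This is a minimal sketch: `myriad_docker_cmd` is a hypothetical helper that mirrors the command above, and it assumes that 189 is the character-device major number of the `/dev/bus/usb` nodes, as is typical on Linux.

```python
from typing import List, Sequence


def myriad_docker_cmd(image: str, volumes: Sequence[str] = (),
                      interactive: bool = True) -> List[str]:
    """Build the `docker run` argv shown above as a Python list."""
    cmd = ["docker", "run"]
    if interactive:
        cmd.append("-it")  # requires a terminal; drop it when scripting
    # Allow access to USB character devices and mount the USB bus.
    cmd += ["--device-cgroup-rule=c 189:* rmw", "-v", "/dev/bus/usb:/dev/bus/usb"]
    for spec in volumes:
        cmd += ["-v", spec]  # extra bind mounts, e.g. "/ir_models:/ir_models"
    cmd += ["--rm", image]
    return cmd
```

Usage: `subprocess.run(myriad_docker_cmd("openvino/ubuntu18_dev:2020.3", ["/ir_models:/ir_models"]), check=True)`.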

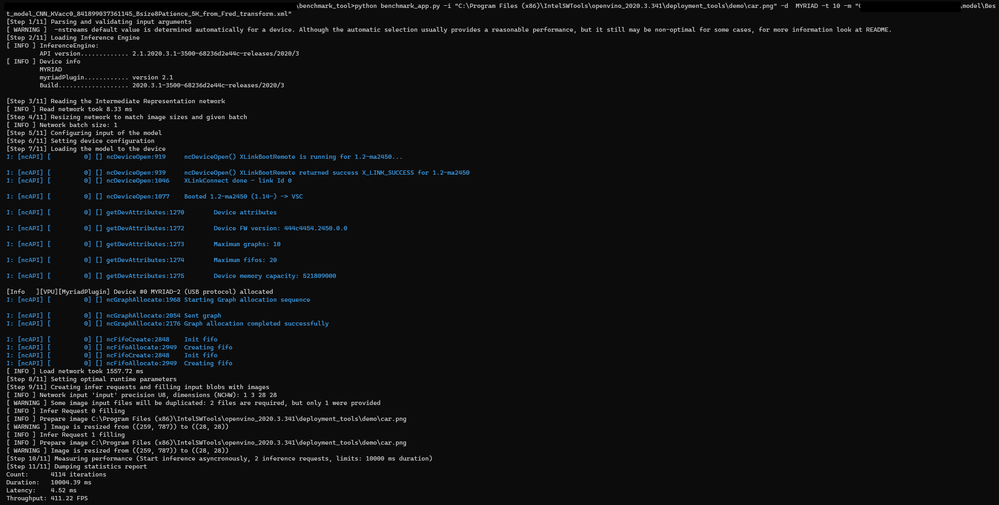

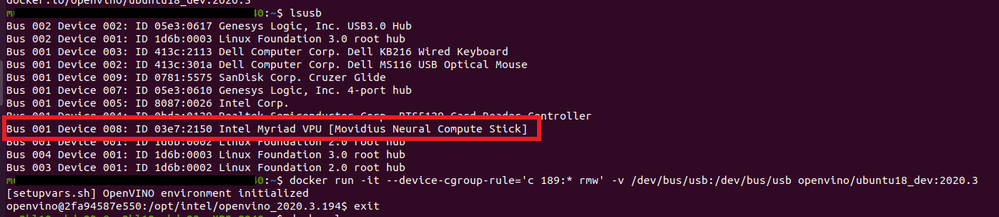

On another note, I have validated the benchmark app in openvino_2020.3.341 using your model with the Intel® Movidius™ Neural Compute Stick (NCS1) on my side, and it works fine. Please refer to this article to make sure the device is connected to your system and detected by Ubuntu* with the lsusb command. I share my results below.
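The lsusb check can also be scripted by filtering the plain `lsusb` output for the Intel Movidius USB vendor ID, `03e7`, which both NCS1 and NCS2 report. This is a sketch: `movidius_devices` is a hypothetical helper, and it assumes the usual `Bus ... Device ...: ID vvvv:pppp description` line format of `lsusb` without `-v`.

```python
from typing import List

# USB vendor ID used by Intel Movidius devices.
MOVIDIUS_VENDOR_ID = "03e7"


def movidius_devices(lsusb_output: str) -> List[str]:
    """Return the lsusb lines whose 'ID vvvv:pppp' field has the Movidius vendor ID."""
    found = []
    for line in lsusb_output.splitlines():
        fields = line.split()
        if "ID" not in fields:
            continue
        i = fields.index("ID")
        if i + 1 < len(fields) and fields[i + 1].lower().startswith(MOVIDIUS_VENDOR_ID + ":"):
            found.append(line.strip())
    return found
```

Usage: `movidius_devices(subprocess.run(["lsusb"], capture_output=True, text=True).stdout)`. An empty list means the stick is not visible to the host (or to the container).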

Regards,

Megat

Thanks a lot for your very quick reply.

I can detect my NCS1 with lsusb -v.

I am using the same OpenVINO version (openvino/ubuntu18_dev:2020.3) for all steps: mo.py, classification_sample_async.py, benchmark_app.py.

Here are my tests and results (I kept the array-style format since I am not working entirely in bash):

BENCHMARK APP

[docker, run, --rm, --env, HOME=/tmp, -u, 0, --device-cgroup-rule, c 189:* rmw, --network, host, -v, /dev/bus/usb:/dev/bus/usb, -v, /tmp:/tmp, -v, /ir_models:/ir_models, -v, /my_images:/my_images, openvino/ubuntu18_dev:2020.3]

python3 /opt/intel/openvino/deployment_tools/tools/benchmark_tool/benchmark_app.py --path_to_model /ir_models/Best_model_CNN_KVacc0.841899037361145_Bsize8Patience_5K.h5with1batch__28x28__v10.xml --paths_to_input /my_images --target_device MYRIAD --report_type detailed_counters --report_folder /tmp --api_type=async --batch_size=1

->

[Step 1/11] Parsing and validating input arguments

[ WARNING ] -nstreams default value is determined automatically for a device. Although the automatic selection usually provides a reasonable performance, but it still may be non-optimal for some cases, for more information look at README.

[Step 2/11] Loading Inference Engine

[ INFO ] InferenceEngine:

API version............. 2.1.2020.3.0-3467-15f2c61a-releases/2020/3

[ INFO ] Device info

MYRIAD

myriadPlugin............ version 2.1

Build................... 2020.3.0-3467-15f2c61a-releases/2020/3

[Step 3/11] Reading the Intermediate Representation network

[ ERROR ] Cannot create ShapeOf layer model/softmax/Softmax/ShapeOf_ id:31

CLASSIFICATION

[docker, run, --rm, --env, HOME=/tmp, -u, 0, --device-cgroup-rule, c 189:* rmw, --network=host, -v, /dev/bus/usb:/dev/bus/usb, -v, /tmp:/tmp, -v, /ir_models:/ir_models, -v, /my_images:/my_images, openvino/ubuntu18_dev:2020.3]

find /my_images -name '1615412977270_2_*_img_msec.png' | xargs python3 /opt/intel/openvino/deployment_tools/inference_engine/samples/python/classification_sample_async/classification_sample_async.py -d MYRIAD --labels /tmp/cloud_no_cloud_labels.txt -nt 2 -m /tmp/Best_model_CNN_KVacc0_841899037361145_Bsize8Patience_5K_from_Fred_transform.xml -i

->

Traceback (most recent call last):

File "/opt/intel/openvino/deployment_tools/inference_engine/samples/python/classification_sample_async/classification_sample_async.py", line 183, in <module>

sys.exit(main() or 0)

File "/opt/intel/openvino/deployment_tools/inference_engine/samples/python/classification_sample_async/classification_sample_async.py", line 142, in main

exec_net = ie.load_network(network=net, device_name=args.device)

File "ie_api.pyx", line 178, in openvino.inference_engine.ie_api.IECore.load_network

File "ie_api.pyx", line 187, in openvino.inference_engine.ie_api.IECore.load_network

RuntimeError: Unexpected CNNNetwork format: it was converted to deprecated format prior plugin's call

Hi Benguigui__Michael,

On my end, using the same commands as yours, I validated your model and input image with the Benchmark Python Tool and the Image Classification Python Sample Async, and neither produced an error.

I suggest you try installing the Docker image again. For your information, I installed the openvino/ubuntu18_dev:2020.3 Docker image using the command docker pull openvino/ubuntu18_dev:2020.3. You can refer here for more information.

On the other hand, since the IR model that you gave works on my end, could you please try running the Benchmark Python Tool and Image Classification Python Sample Async again with that model?

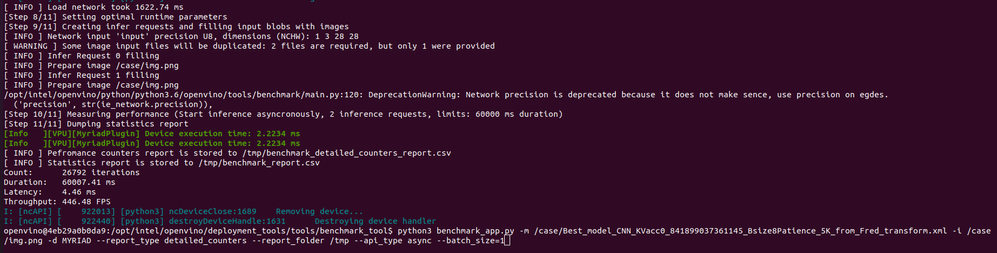

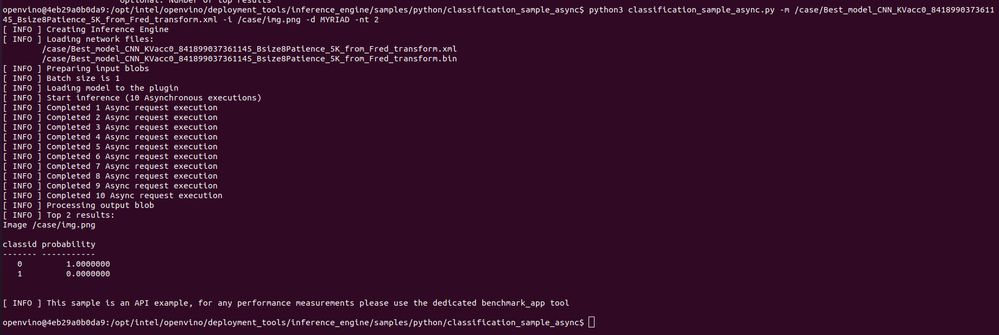

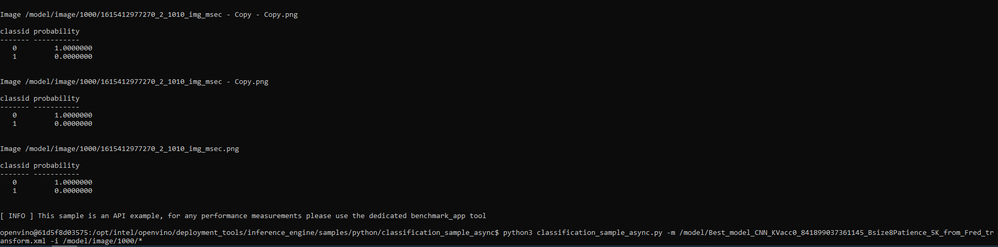

I attach my results below:

Docker Command:

Benchmark App:

Classification Sample Async:

Regards,

Megat

As usual, thanks again for your quick reply. Here is what I did after pulling a fresh openvino/ubuntu18_dev:2020.3 Docker image:

docker run -it --device-cgroup-rule='c 189:* rmw' -v /dev/bus/usb:/dev/bus/usb -v /hdd-raid0/home_server/ciar/onnx_infer_multiple_contexts/ir_models/:/ir_models -v /external_hdd/images/CLOUDS/OPSAT/OPSAT_DB_NO_BLACK_TEST_10_28x28:/my_images openvino/ubuntu18_dev:2020.3

openvino@f9266f577de6:/opt/intel/openvino/deployment_tools/tools/benchmark_tool$ python3 benchmark_app.py -m /ir_models/Best_model_CNN_KVacc0.841899037361145_Bsize8Patience_5K.h5with1batch__28x28__v10.xml -i /my_images/1617140346629_2_3289_img_msec.png -d MYRIAD --report_type detailed_counters --report_folder /tmp --api_type async --batch_size=1

[Step 1/11] Parsing and validating input arguments

[ WARNING ] -nstreams default value is determined automatically for a device. Although the automatic selection usually provides a reasonable performance, but it still may be non-optimal for some cases, for more information look at README.

[Step 2/11] Loading Inference Engine

[ INFO ] InferenceEngine:

API version............. 2.1.2020.3.0-3467-15f2c61a-releases/2020/3

[ INFO ] Device info

MYRIAD

myriadPlugin............ version 2.1

Build................... 2020.3.0-3467-15f2c61a-releases/2020/3

[Step 3/11] Reading the Intermediate Representation network

[ ERROR ] Cannot create ShapeOf layer model/softmax/Softmax/ShapeOf_ id:31

Traceback (most recent call last):

File "/opt/intel/openvino/python/python3.6/openvino/tools/benchmark/main.py", line 53, in run

ie_network = benchmark.read_network(args.path_to_model)

File "/opt/intel/openvino/python/python3.6/openvino/tools/benchmark/benchmark.py", line 123, in read_network

ie_network = self.ie.read_network(xml_filename, bin_filename)

File "ie_api.pyx", line 136, in openvino.inference_engine.ie_api.IECore.read_network

File "ie_api.pyx", line 157, in openvino.inference_engine.ie_api.IECore.read_network

RuntimeError: Cannot create ShapeOf layer model/softmax/Softmax/ShapeOf_ id:31

[ INFO ] Statistics report is stored to /tmp/benchmark_report.csv

Could you send me your input PNG file?

Regards

Rather than testing with your PNG file, could you please test with mine (attached)?

Regards++

Hi Benguigui__Michael,

For your information, I used the input image that you provided with the model and only changed the file name as shown below.

The error occurs because the benchmark app failed to read the Intermediate Representation network model. This issue seems to be related to the model layers. I noticed that the model name you provided (Best_model_CNN_KVacc0_841899037361145_Bsize8Patience_5K_from_Fred_transform.xml) is different from the model you used (Best_model_CNN_KVacc0.841899037361145_Bsize8Patience_5K.h5with1batch__28x28__v10.xml).

Is there any difference between these two models and can you run the benchmark app again using the Best_model_CNN_KVacc0_841899037361145_Bsize8Patience_5K_from_Fred_transform.xml model?
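A quick way to compare the two .xml files is to list the layers of the type named in the error (`ShapeOf`, id 31) in each one. This is a sketch that assumes the IR v10 layout `<net><layers><layer id=".." name=".." type=".."/>`; `layers_of_type` is a hypothetical helper, not an OpenVINO™ API.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple


def layers_of_type(xml_text: str, layer_type: str) -> List[Tuple[str, str]]:
    """Return (id, name) for every <layer> whose type attribute equals layer_type."""
    root = ET.fromstring(xml_text)
    return [
        (layer.get("id"), layer.get("name"))
        for layer in root.iterfind("./layers/layer")
        if layer.get("type") == layer_type
    ]
```

Running it on both files, for example `layers_of_type(Path(xml_path).read_text(), "ShapeOf")`, shows whether only one of them contains the layer that the 2020.3 reader rejects.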

On the other hand, I highly recommend using the Intel® Neural Compute Stick 2 (Intel® NCS2) with the latest OpenVINO™ version (2022.1.0), because it supports more framework layers, which might help resolve your issue.

Regards,

Megat

(Thanks again.) You're definitely right: I selected the wrong model at the benchmarking step. With the right model, the benchmark app works fine. However, classification still throws:

RuntimeError: Unexpected CNNNetwork format: it was converted to deprecated format prior plugin's call

Yet you managed to classify using the same OpenVINO version for conversion and classification, the same model, and the same input.

Damned!

(and the right model is Best_model_CNN_KVacc0_841899037361145_Bsize8Patience_5K_from_Fred_transform).

I can't explain it, but when I use the model I provided (instead of regenerating one from the ONNX version for each test) and test again on a single image, classification works fine. I'm trying to understand why.

OK, after a few more tests, it turns out the problem depends on how the inputs are specified.

python3 classification_sample_async.py -d MYRIAD --labels cloud_no_cloud_labels.txt -nt 2 -m Best_model_CNN_KVacc0_841899037361145_Bsize8Patience_5K_from_Fred_transform.xml -i /my_images/1615412977270_2_999_img_msec.png

->

| classid | probability |
|---------|-------------|
| `<cloud>` | 1.0000000 |
| `<no_cloud>` | 0.0000000 |

(OK)

find /my_images -name '1615412977270_2_999_img_msec.png' | xargs python3 classification_sample_async.py -d MYRIAD --labels cloud_no_cloud_labels.txt -nt 2 -m Best_model_CNN_KVacc0_841899037361145_Bsize8Patience_5K_from_Fred_transform.xml -i

->

| classid | probability |
|---------|-------------|
| `<cloud>` | 1.0000000 |
| `<no_cloud>` | 0.0000000 |

(OK)

python3 classification_sample_async.py -d MYRIAD --labels cloud_no_cloud_labels.txt -nt 2 -m Best_model_CNN_KVacc0_841899037361145_Bsize8Patience_5K_from_Fred_transform.xml -i /my_images

->

AttributeError: 'NoneType' object has no attribute 'shape'

(KO)

python3 classification_sample_async.py -d MYRIAD --labels cloud_no_cloud_labels.txt -nt 2 -m Best_model_CNN_KVacc0_841899037361145_Bsize8Patience_5K_from_Fred_transform.xml -i /my_images/*

->

bash: /usr/bin/python3: Argument list too long

(the well-known bash limitation)

find /my_images -name '1615412977270_2_*_img_msec.png' | xargs python3 classification_sample_async.py -d MYRIAD --labels cloud_no_cloud_labels.txt -nt 2 -m Best_model_CNN_KVacc0_841899037361145_Bsize8Patience_5K_from_Fred_transform.xml -i

->

RuntimeError: Unexpected CNNNetwork format: it was converted to deprecated format prior plugin's call

(KO)

I think I will need to perform a classification_sample_async.py call per image.
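The per-image approach can be scripted without relying on shell globbing, which also sidesteps the "Argument list too long" limit. Expanding the folder explicitly matters too: passing `/my_images` directly presumably makes the sample try to load the folder itself as an image, which would be consistent with the `NoneType` error above. This is a sketch: `image_files` and `per_image_commands` are hypothetical helpers, and the sample path, flags, and label file are the ones used in this thread.

```python
from pathlib import Path
from typing import Iterable, List

SAMPLE = "classification_sample_async.py"


def image_files(folder: str, pattern: str = "*.png") -> List[Path]:
    """Expand a folder into a sorted list of image files (no shell globbing)."""
    return sorted(p for p in Path(folder).glob(pattern) if p.is_file())


def per_image_commands(images: Iterable[Path], model: str, labels: str,
                       device: str = "MYRIAD") -> List[List[str]]:
    """One sample invocation per image, mirroring the flags used in this thread."""
    return [
        ["python3", SAMPLE, "-d", device, "--labels", labels,
         "-nt", "2", "-m", model, "-i", str(image)]
        for image in images
    ]
```

Usage: `for cmd in per_image_commands(image_files("/my_images"), model_xml, "cloud_no_cloud_labels.txt"): subprocess.run(cmd, check=True)`. Each call reloads the network onto the stick, so this trades speed for a single-image input.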

Michael

Hi Michael,

I'm glad that you can successfully run the Benchmark Python Tool and Image Classification Python Sample Async.

The bash: /usr/bin/python3: Argument list too long error occurs because the arguments passed to the command exceed the system's maximum argument-list length. This can happen when working with a large number of files. You can refer here for more information. (These are external links and are not maintained by Intel.)

On another note, try to run the Image Classification Python Sample Async using batches of images instead of running per image.
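Running in batches keeps every command line well under the limit. In the shell, `find /my_images -name '*.png' -print0 | xargs -0 -n 100 python3 classification_sample_async.py <flags as above> -i` does this, because xargs appends each chunk of at most 100 file names after `-i`. The same chunking in Python is sketched below. `batches` is a hypothetical helper, and the batch size of 100 is an arbitrary choice.

```python
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def batches(items: Sequence[T], size: int) -> Iterator[List[T]]:
    """Yield consecutive chunks of at most `size` items (like `xargs -n size`)."""
    if size < 1:
        raise ValueError("size must be >= 1")
    for start in range(0, len(items), size):
        yield list(items[start:start + size])
```

Each chunk then becomes the `-i` argument list of one invocation.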

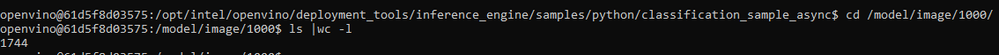

For your information, I created a folder with 1744 copies of the input image and successfully ran the Image Classification Python Sample Async as shown below.

Regards,

Megat

Hi, I finally managed to isolate the issue with the classification app. For both conversion and classification, I use openvino/ubuntu18_dev:2020.3. Classification on a single image works fine on both CPU and MYRIAD (replace TARGET with either CPU or MYRIAD):

find /my_images -name '1615412977270_2_999_img_msec.png' | xargs python3 classification_sample_async.py -d TARGET --labels /tmp/cloud_no_cloud_labels.txt -nt 2 -m /my_ir_models/Best_model_CNN_KVacc0_841899037361145_Bsize8Patience_5K_from_Fred_transform.xml -i

But with more than one image, it fails, and only on MYRIAD:

find /my_images -name '1615412977270_2_99*_img_msec.png' | xargs python3 classification_sample_async.py -d MYRIAD --labels /tmp/cloud_no_cloud_labels.txt -nt 2 -m /my_ir_models/Best_model_CNN_KVacc0_841899037361145_Bsize8Patience_5K_from_Fred_transform.xml -i

->

File "/opt/intel/openvino/deployment_tools/inference_engine/samples/python/classification_sample_async/classification_sample_async.py", line 142, in main

exec_net = ie.load_network(network=net, device_name=args.device)

File "ie_api.pyx", line 178, in openvino.inference_engine.ie_api.IECore.load_network

File "ie_api.pyx", line 187, in openvino.inference_engine.ie_api.IECore.load_network

RuntimeError: Unexpected CNNNetwork format: it was converted to deprecated format prior plugin's call

Michael

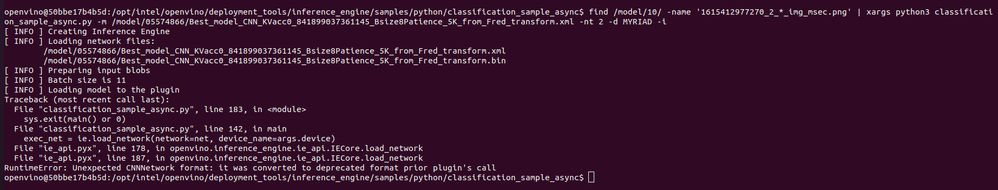

Hi Michael,

I was able to reproduce the same error on my end: classification with multiple input images runs on the CPU but fails with the MYRIAD plugin, as shown below.

This seems to be a known issue, as seen in the GitHub discussion here. Note that this issue has been fixed by the Development team in the later 2020.4 release. However, Intel® Movidius™ Neural Compute Stick (NCS1) is not supported on the 2020.4 release. Therefore, we suggest you use a single input image to run classification on NCS1.

Regards,

Megat

Hi Michael,

This thread will no longer be monitored since we have provided a suggestion. If you need any additional information from Intel, please submit a new question.

Regards,

Megat
