The detection results differ between the two cases.

Myriad Results:

results [[[-5.87500000e+00 -9.79687500e+00 3.38281250e+00 3.91796875e+00 1.38998032e-04 3.34960938e-01]
[-4.57812500e+00 -1.27421875e+01 3.24609375e+00 3.92968750e+00 0.00000000e+00 3.88427734e-01]
[ 3.67431641e-01 -1.29296875e+01 2.89648438e+00 3.69726562e+00 0.00000000e+00 4.71435547e-01]
...
[-4.85595703e-01 4.73437500e+00 -3.82812500e-01 6.41601562e-01 0.00000000e+00 9.86328125e-02]
[ 2.15039062e+00 6.93750000e+00 6.95312500e-01 1.01757812e+00 0.00000000e+00 1.17431641e-01]
[ 1.97167969e+00 8.57031250e+00 2.93164062e+00 3.54296875e+00 3.71932983e-04 2.06420898e-01]]]

CPU Results:

results [[[-8.4628897e+00 -1.3867578e+01 3.7331104e+00 4.3173060e+00 2.2710463e-04 4.3838483e-01]
[-5.8339972e+00 -1.9294828e+01 3.4993901e+00 4.2565918e+00 4.6186513e-05 5.2212775e-01]
[ 1.0963238e+00 -2.0203087e+01 3.1744423e+00 4.0058889e+00 2.3131161e-05 5.8755422e-01]
...
[-1.2445596e+00 7.6259720e-01 -4.1536269e+00 -2.3483934e+00 3.5924142e-05 8.0652714e-02]
[ 1.3845503e-01 2.4664059e+00 -2.0437424e+00 -1.5161291e+00 2.2524493e-05 1.0168492e-01]
[ 1.3065454e+00 6.8799105e+00 2.3910873e+00 2.9384508e+00 7.6559943e-04 1.8868887e-01]]]

The results produced by CPU inference are correct, while the Movidius (Myriad X NCS2 stick) results are wrong.

Kindly advise how to handle this scenario, as the results obtained on the Movidius stick show a higher standard deviation.
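
To put a number on the gap between the two devices, the outputs can be compared element-wise. The following is only a minimal sketch, assuming both outputs are available as NumPy arrays of the same shape. The helper name `summarize_difference` is hypothetical, and the sample data is just the first three rows of each printout above, not the full tensors.

```python
import numpy as np


def summarize_difference(reference, candidate):
    """Return max/mean absolute difference and the index of the worst element.

    Hypothetical helper for illustration; not part of the demo script.
    """
    reference = np.asarray(reference, dtype=np.float64)
    candidate = np.asarray(candidate, dtype=np.float64)
    if reference.shape != candidate.shape:
        raise ValueError("outputs must have the same shape to be compared")
    abs_diff = np.abs(reference - candidate)
    worst = np.unravel_index(abs_diff.argmax(), abs_diff.shape)
    return {
        "max_abs_diff": float(abs_diff.max()),
        "mean_abs_diff": float(abs_diff.mean()),
        "argmax": tuple(int(i) for i in worst),
    }


# First three rows of the CPU printout above (treated as the reference).
cpu = np.array([
    [-8.4628897e+00, -1.3867578e+01, 3.7331104e+00, 4.3173060e+00, 2.2710463e-04, 4.3838483e-01],
    [-5.8339972e+00, -1.9294828e+01, 3.4993901e+00, 4.2565918e+00, 4.6186513e-05, 5.2212775e-01],
    [1.0963238e+00, -2.0203087e+01, 3.1744423e+00, 4.0058889e+00, 2.3131161e-05, 5.8755422e-01],
])

# First three rows of the Myriad printout above.
myriad = np.array([
    [-5.87500000e+00, -9.79687500e+00, 3.38281250e+00, 3.91796875e+00, 1.38998032e-04, 3.34960938e-01],
    [-4.57812500e+00, -1.27421875e+01, 3.24609375e+00, 3.92968750e+00, 0.00000000e+00, 3.88427734e-01],
    [3.67431641e-01, -1.29296875e+01, 2.89648438e+00, 3.69726562e+00, 0.00000000e+00, 4.71435547e-01],
])

stats = summarize_difference(cpu, myriad)
print(stats)
```

On these rows the largest gaps fall in the first two columns, which is far more than FP16 rounding alone would normally explain.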

Download Path:

https://drive.google.com/drive/folders/1Wyw423tf7WE1YU_w7T0h3hdbusDdodAJ?usp=sharing

Command:

python demo\OpenVINO\python\demo.py

NOTE:

I have attached the .pth, ONNX and IR models in the folder. You can try with both the IR and ONNX models.

Hi MohnishJain,

Thank you for reaching out to us.

I've replicated this issue and received similar results (I've tested with CPU and MYRIAD plugins on Windows 10).

We're investigating this issue and shall get back to you soon.

Regards,

Hairul

@Hairul_Intel Do you have any updates on this issue? We need the resolution, or at least the reason behind the issue, as a priority, since we have to move this into production in a couple of weeks.

So we would appreciate it if you could help here.

Hi MohnishJain,

Apologies for the delayed response.

We are currently escalating this issue to the development team for further troubleshooting. This may take a while; thank you for your patience so far.

Regards,

Hairul

@Hairul_Intel Is there any update on the resolution of this issue? It has been 2 months since it was raised. I have been waiting a long time for this to be solved, and the temporary fixes are now causing issues.

We are now moving to production; if the issue still exists, we will need to drop the Movidius stick option for inferencing.

Hi MohnishJain,

Apologies for the delay on this.

Our development team is still working to rectify this and to provide a robust solution. I will update you once it is available.

Sorry for the inconvenience caused.

Regards,

Hairul

Hi MohnishJain,

We sincerely apologize for the delay in providing updates for this issue.

Our development team is still working on resolving this issue. Fixes are usually included in the next upcoming release. We will keep you posted once we obtain the official timeline from the development team.

We appreciate your understanding in this matter and thank you for your continuous support of OpenVINO.

Regards,

Hairul

Hi MohnishJain,

Glad to inform you that our development team has rectified the reported issue on our latest releases (OpenVINO 2022.1 onwards). We would like to invite you to test from your side.

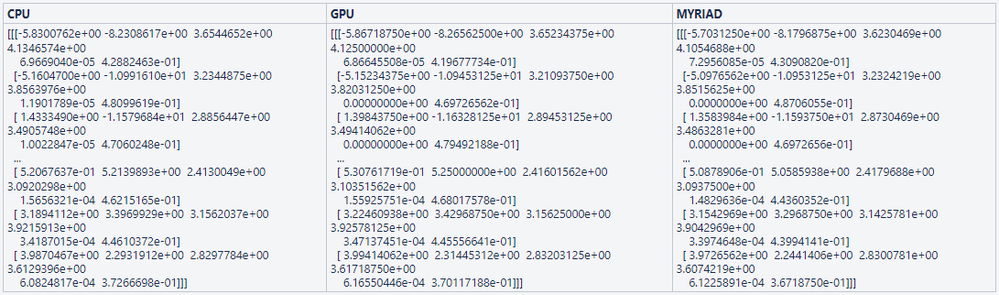

Sharing our replication results using OpenVINO 2022.1 (with API 2.0) to compare inference results across CPU, GPU and Myriad as shown in the screenshot below.

MO command:

mo --input_model public_model.onnx --data_type FP16 --layout NCHW --use_new_frontend --input_shape [1,3,1024,1024] --mean_values [0.485,0.456,0.406] --scale 255 --model_name demo_m_public --output_dir ir_models/2022.1/
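
A cross-device check along these lines can be scripted with the OpenVINO 2022.1 Python API (API 2.0). This is only a rough sketch, not the script used for the replication above. It assumes the IR produced by the MO command is at `ir_models/2022.1/demo_m_public.xml` and that the model has a single input and a single output of interest. The helper names `run_on_device`, `max_abs_difference` and `compare_devices` are hypothetical.

```python
import numpy as np


def run_on_device(model_xml, device, input_tensor):
    """Run one synchronous inference on `device` and return the first output."""
    # Imported lazily so the comparison helpers work without OpenVINO installed.
    from openvino.runtime import Core

    core = Core()
    model = core.read_model(model_xml)
    compiled = core.compile_model(model, device)
    results = compiled.infer_new_request({0: input_tensor})
    return results[compiled.output(0)]


def max_abs_difference(a, b):
    """Largest element-wise absolute difference between two outputs."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    return float(np.abs(a - b).max())


def compare_devices(model_xml, input_tensor, devices=("CPU", "MYRIAD"), run=run_on_device):
    """Compare every device against the first one, which is taken as the reference."""
    outputs = {device: run(model_xml, device, input_tensor) for device in devices}
    reference = outputs[devices[0]]
    return {device: max_abs_difference(reference, outputs[device]) for device in devices[1:]}


# Example usage (requires OpenVINO 2022.1+ and the listed devices to be present):
# dummy = np.random.rand(1, 3, 1024, 1024).astype(np.float32)  # placeholder input, not a real image
# print(compare_devices("ir_models/2022.1/demo_m_public.xml", dummy, ("CPU", "GPU", "MYRIAD")))
```

Because the IR is converted with `--data_type FP16`, small differences between devices are still expected. The point of the check is to confirm that they stay small.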

Regards,

Hairul

Hi MohnishJain,

This thread will no longer be monitored since we have provided a solution. If you need any additional information from Intel, please submit a new question.

Regards,

Hairul
