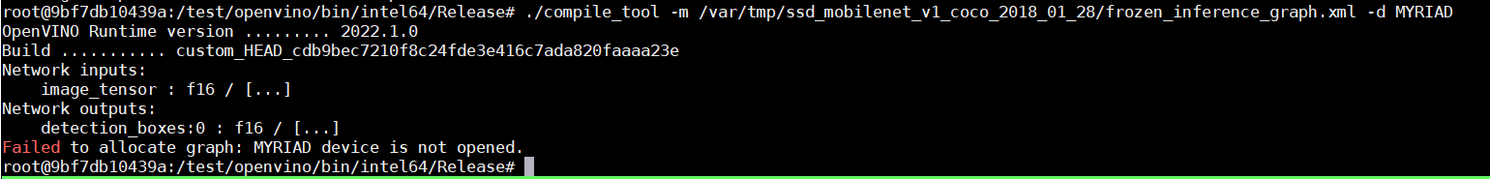

The OpenVINO 2022.1.0 compile tool (https://github.com/openvinotoolkit/openvino/tree/2022.1.0/tools/compile_tool) is expected to work on a machine that does not have the physical device connected. However, it throws the error below when I try to compile and export a blob for the MYRIAD device.

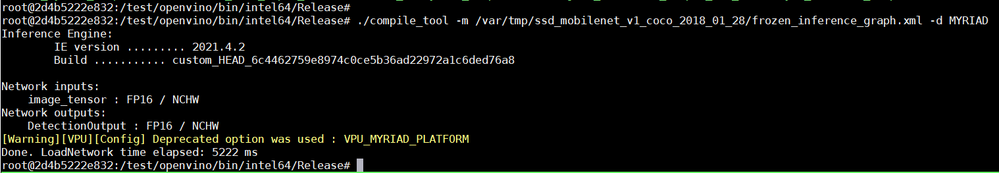

The same use case works with the OpenVINO 2021.4.2 compile tool.

This looks like a bug in version 2022.1.0.

Note: The system does not have a MYRIAD device connected, and the use cases were run inside Docker containers with an Ubuntu 20.04 base OS. The host system also runs Ubuntu 20.04.

Also, is the compile tool supported for CPU and GPU devices?

Hi SandeshKum_S_Intel,

Thanks for reaching out to us.

Thank you for reporting this bug. As noted in this GitHub thread, our developer has reported the issue to the development team and will link the pull request once a fix is available.

Regards,

Wan

Thanks for the response, Wan.

In OpenVINO 2022.1.0, is the compile tool supported for CPU and GPU devices? Can we generate a compiled blob with CPU/GPU as the target device? If yes, can we run inference on the CPU/GPU device using the compiled blob instead of the IR files (i.e., the .bin and .xml files)?

Hi SandeshKum_S_Intel,

According to the documentation, the compile tool compiles networks only for Intel® Neural Compute Stick 2 (MYRIAD plugin).

Run compile_tool.exe with MYRIAD as the target device:

compile_tool.exe -m model.xml -o model.blob -d MYRIAD

Separately, a compiled blob can be generated using the export() function of the ExecutableNetwork class. However, this model caching is available only for the CPU plugin.

1. Export the current executable network.

from openvino.inference_engine import IECore

ie = IECore()

net = ie.read_network(model=path_to_xml_file, weights=path_to_bin_file)

exec_net = ie.load_network(network=net, device_name="CPU", num_requests=4)

exec_net.export("<path>/model.blob")

2. The blob file is generated at the specified path.

3. Create an executable network from a previously exported network.

ie = IECore()

exec_net_imported = ie.import_network(model_file="<path>/model.blob", device_name="CPU")
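Steps 1 to 3 can be combined into a compile-once, import-afterwards pattern. The sketch below is an illustration rather than an official recipe: `load_or_compile` is a hypothetical helper, and it relies only on the `IECore` and `ExecutableNetwork` calls shown above. The `ie` object is passed in so the helper itself does not depend on how OpenVINO is installed.

```python
import os


def load_or_compile(ie, xml_path, bin_path, blob_path, device="CPU"):
    """Return an executable network, reusing an exported blob when one exists.

    `ie` is expected to be an IECore instance (legacy Inference Engine API).
    `load_or_compile` itself is a hypothetical helper, not an OpenVINO function.
    """
    if os.path.exists(blob_path):
        # Step 3: create the executable network from the exported blob.
        return ie.import_network(model_file=blob_path, device_name=device)

    # Step 1: compile from the IR files, then export the result for later runs.
    net = ie.read_network(model=xml_path, weights=bin_path)
    exec_net = ie.load_network(network=net, device_name=device)
    exec_net.export(blob_path)
    return exec_net
```

A call might look like `exec_net = load_or_compile(IECore(), "model.xml", "model.bin", "model.blob")`, with `IECore` imported from `openvino.inference_engine`. Either branch returns an ExecutableNetwork, so the code that runs inference does not need to know whether the network came from the IR files or from the blob.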

Regards,

Wan

Hi SandeshKum_S_Intel,

We have received a reply from our developer: a permanent fix is in progress. In the meantime, you can use the following workaround, provided by our developer, to compile a network for inference and export it to a binary file:

Step 1: Create "myriad.conf" file with the following content:

MYRIAD_ENABLE_MX_BOOT NO

Step 2: Execute compile_tool using the following command:

compile_tool.exe -m model.xml -d MYRIAD -c myriad.conf
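The two workaround steps can also be scripted. The following is a minimal sketch and not part of the developer's reply: the helper names `write_myriad_conf`, `build_compile_command`, and `compile_for_myriad` are hypothetical, and it assumes `compile_tool` can be found on the PATH (on Windows, pass `tool="compile_tool.exe"`). It uses only the config key and the `-m`, `-d`, `-o`, and `-c` flags that appear in this thread.

```python
import subprocess
from pathlib import Path


def write_myriad_conf(conf_path="myriad.conf"):
    """Step 1: write the config file with the MYRIAD_ENABLE_MX_BOOT setting."""
    path = Path(conf_path)
    path.write_text("MYRIAD_ENABLE_MX_BOOT NO\n")
    return path


def build_compile_command(model_xml, conf_path, output_blob=None, tool="compile_tool"):
    """Step 2: build the compile_tool argument list (flags as used in this thread)."""
    cmd = [tool, "-m", str(model_xml), "-d", "MYRIAD", "-c", str(conf_path)]
    if output_blob is not None:
        # Optional: name the output blob explicitly.
        cmd += ["-o", str(output_blob)]
    return cmd


def compile_for_myriad(model_xml, output_blob=None, tool="compile_tool"):
    """Run both steps; raises CalledProcessError if compile_tool exits with an error."""
    conf = write_myriad_conf()
    cmd = build_compile_command(model_xml, conf, output_blob, tool)
    subprocess.run(cmd, check=True)
```

For example, `compile_for_myriad("model.xml", "model.blob")` would write `myriad.conf` in the current directory and then invoke the tool with that config file.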

Hope it helps!

Regards,

Wan

Hi SandeshKum_S_Intel,

Thanks for your question.

This thread will no longer be monitored since we have provided a workaround.

If you need any additional information from Intel, please submit a new question.

Best regards,

Wan
