Hello,

We're working with a client's TensorFlow model that was exported using freeze_graph. The model loads with their script and runs inference successfully. However, when we run it through the Model Optimizer, this error occurs:

/glob/intel-python/python3/lib/python3.6/site-packages/h5py/__init__.py:34: FutureWarning: Conversion of the second argument of issubdtype from `float` to `np.floating` is deprecated. In future, it will be treated as `np.float64 == np.dtype(float).type`.

from ._conv import register_converters as _register_converters

[ ERROR ] Cannot infer shapes or values for node "WRRS_Model/LSTM/Dropout/cond/sub/Switch".

[ ERROR ] Retval[0] does not have value

[ ERROR ]

[ ERROR ] It can happen due to bug in custom shape infer function <function tf_native_tf_node_infer at 0x2afc36886d08>.

[ ERROR ] Or because the node inputs have incorrect values/shapes.

[ ERROR ] Or because input shapes are incorrect (embedded to the model or passed via --input_shape).

[ ERROR ] Run Model Optimizer with --log_level=DEBUG for more information.

[ ERROR ] Stopped shape/value propagation at "WRRS_Model/LSTM/Dropout/cond/sub/Switch" node.

For more information please refer to Model Optimizer FAQ (<INSTALL_DIR>/deployment_tools/documentation/docs/MO_FAQ.html), question #38.

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: /home/u14379/witronix/bell_detection.pb

- Path for generated IR: /home/u14379/openvino/deployment_tools/model_optimizer/.

- IR output name: bell_detection

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: Not specified, inherited from the model

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP32

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: False

- Reverse input channels: False

TensorFlow specific parameters:

- Input model in text protobuf format: False

- Offload unsupported operations: False

- Path to model dump for TensorBoard: None

- Update the configuration file with input/output node names: None

- Operations to offload: None

- Patterns to offload: None

- Use the config file: None

Model Optimizer version: 1.2.110.59f62983

How can I resolve this error? Thanks!

Mark

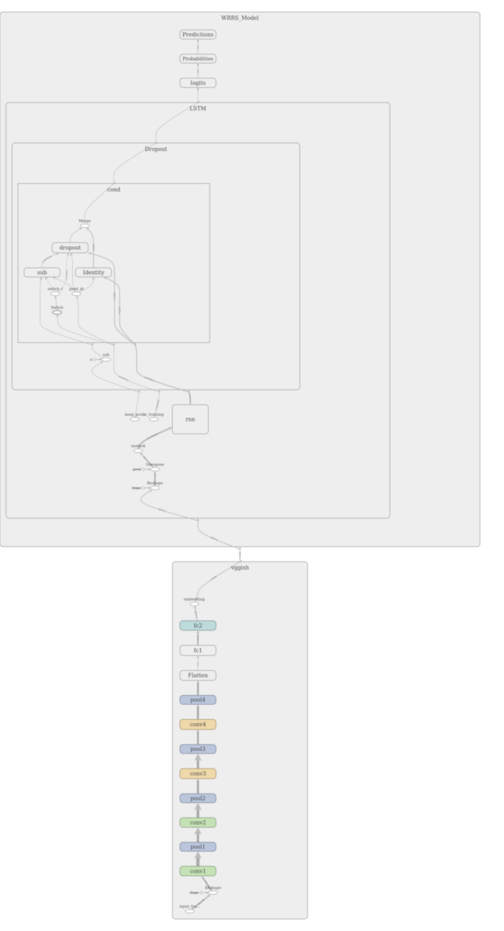

I loaded the model in TensorBoard, and the node's information is:

WRRS_Model/LSTM/Dropout/cond/Switch

Operation: Switch

Attributes (1)

T

{"type":"DT_BOOL"}

Inputs (1)

WRRS_Model/LSTM/is_training

Outputs (1)

WRRS_Model/LSTM/Dropout/cond/switch_t

The input node is:

WRRS_Model/LSTM/is_training

Operation: Placeholder

Attributes (2)

dtype

{"type":"DT_BOOL"}

shape

{"shape":{"unknown_rank":true}}

Inputs (0)

Outputs (2)

WRRS_Model/LSTM/Dropout/cond/Switch

WRRS_Model/LSTM/Dropout/cond/pred_id
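
For readers unfamiliar with the Switch operation, here is a minimal pure-Python sketch of its routing behaviour. It is only an illustration: the `switch` function is a hypothetical stand-in, not TensorFlow or Model Optimizer code. The assumption is that the "Retval[0] does not have value" message is related to one Switch output carrying no value when the predicate selects the other branch, or when the predicate (here fed by the `is_training` placeholder) is unknown.

```python
def switch(data, pred):
    """Illustrative stand-in for a Switch op (not the real TensorFlow kernel).

    Returns (output_false, output_true). Only the branch selected by `pred`
    carries `data`; the other branch is "dead", modelled here as None.
    """
    if pred is None:
        # An unfed predicate means neither output can be determined.
        raise ValueError("predicate has no value; outputs cannot be inferred")
    return (None, data) if pred else (data, None)


# With a known predicate, exactly one output has a value.
inference_outputs = switch(1.0, False)  # e.g. is_training frozen to False
training_outputs = switch(1.0, True)    # e.g. is_training frozen to True

# With an unknown predicate, shape/value inference has nothing to work with.
try:
    switch(1.0, None)
    unknown_pred_failed = False
except ValueError:
    unknown_pred_failed = True
```

Under that assumption, giving the predicate a fixed value is what lets an inference tool decide which branch of the `cond` block is live.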

Hi Mark,

Our FaceNet documentation shows how to handle a placeholder that feeds a Switch node. Please take a look at it: /Intel/computer_vision_sdk_2018.3.343/deployment_tools/documentation/docs/FaceNetTF.html

In your case, I think you should try it on WRRS_Model/LSTM/is_training.
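
As a rough sketch of what that could look like, the snippet below assembles a Model Optimizer command line that freezes the placeholder to a constant. It assumes the mechanism meant here is the `--freeze_placeholder_with_value` option with `name->value` pairs; `build_mo_command` is a hypothetical helper written for illustration, and the model path is the one from the original log.

```python
import shlex


def build_mo_command(input_model, frozen_placeholders):
    """Build an argument list for mo.py (hypothetical helper, not part of OpenVINO).

    frozen_placeholders maps placeholder node names to the constant values
    they should be frozen to, e.g. {"some/node": False}.
    """
    # Assumed format: comma-separated "node_name->value" pairs.
    freeze_arg = ",".join(
        "{}->{}".format(name, value) for name, value in frozen_placeholders.items()
    )
    return [
        "python",
        "mo.py",
        "--input_model={}".format(input_model),
        "--freeze_placeholder_with_value",
        freeze_arg,
    ]


cmd = build_mo_command(
    "/home/u14379/witronix/bell_detection.pb",
    {"WRRS_Model/LSTM/is_training": False},
)

# Quote each argument for the shell: the ">" in "->" would otherwise be
# interpreted as an output redirection.
printable = " ".join(shlex.quote(arg) for arg in cmd)
print(printable)
```

Passing `cmd` as a list to `subprocess.run` avoids the quoting question entirely; the quoted string is only needed when pasting the command into a shell.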

Best,

Severine

Hi Severine,

Thanks for the reply. I've re-run the command with a few additional settings. It is now:

python mo.py --input_model=/home/mark/work/[removed]/bell_detection.pb --freeze_placeholder_with_value "WRRS_Model/LSTM/is_training->False","WRRS_Model/LSTM/keep_prob->1.0" --input=vggish/input_features --input_shape=[10,96,64]

This gets further, but there is now an error at the output layer: the one_hot node expects an int64 input but is being passed a float.

[ ERROR ] Input 0 of node WRRS_Model/Predictions/one_hot was passed float from WRRS_Model/Predictions/ArgMax_port_0_ie_placeholder:0 incompatible with expected int64.

What would be the next step here? Been looking through the FAQ and Google but can't find anything related to this. Thanks.

Mark

Hi Mark,

For further answers, I need to redirect you to our inside blue forum, as you are an Intel employee. Could you repost your issue there and specify which topology you are using?

PM me if you don't know the inside blue page address.

Best,

Severine
