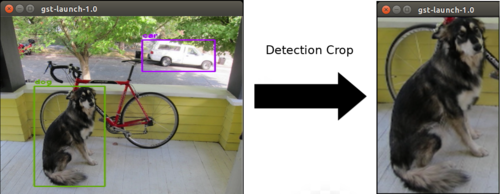

GstInference is a GStreamer plugin that integrates deep learning models into GStreamer pipelines for inference out of the box. The project is open source and multi-platform, and it now supports OpenVINO through the ONNX Runtime inference engine. Support is available for Intel® CPUs, Intel® Integrated Graphics and Intel® Movidius™ USB sticks.

Check out code samples, documentation and benchmarks for GstInference here.

Hi @jchaves

Thanks for sharing your project with the OpenVINO community.

We have also informed the development team about it.

Hi @jchaves

The OpenVINO Toolkit includes DL Streamer, which provides a GStreamer Video Analytics plugin with elements for deep learning inference using the OpenVINO Inference Engine on Intel CPU, GPU and VPU. For more details on DL Streamer, please refer to the open-source repository here.

Currently, DL Streamer inference elements require models converted to IR format. We plan to support ONNX models directly, without IR conversion, in a future version.

DL Streamer is highly optimized for Intel platforms. We have listed some of the optimizations below:

- Optimized interop between media decode, preprocessing and inference

- Optimal color format conversions

- Zero-copy buffer sharing between decode, pre-processing and inference on CPU or GPU

- Asynchronous pipeline execution

- Optimized multi-stream processing

- Sharing of IE instances

- Offloading decode and preprocessing to the GPU

- Ability to reduce inference frequency by leveraging object tracking in between inference operations

- Ability to skip classification on the same object by leveraging object tracking
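As a rough illustration of the last two points, here is a minimal sketch that assembles a `gst-launch`-style pipeline description in which detection runs only every Nth frame, a tracker carries objects across the frames in between, and classification is repeated on a tracked object only occasionally. The element and property names (`gvadetect` with `inference-interval`, `gvatrack` with `tracking-type`, `gvaclassify` with `reclassify-interval`) are assumed from the DL Streamer documentation and may differ between releases, so please verify them with `gst-inspect-1.0`. The file names and the `build_pipeline` helper are hypothetical.

```python
def build_pipeline(video, detect_model, classify_model, device="CPU",
                   detect_every=10, reclassify_every=30,
                   tracking_type="short-term-imageless"):
    """Return a gst-launch style description string (nothing is executed here).

    Assumption: property names follow the DL Streamer docs; older releases
    may name the tracking type "short-term" instead.
    """
    elements = [
        f"filesrc location={video}",
        "decodebin",
        # Run the detector only on every `detect_every`-th frame.
        f"gvadetect model={detect_model} device={device} "
        f"inference-interval={detect_every}",
        # The tracker propagates detections across the skipped frames.
        f"gvatrack tracking-type={tracking_type}",
        # Re-classify an already tracked object only every N frames.
        f"gvaclassify model={classify_model} device={device} "
        f"reclassify-interval={reclassify_every}",
        "gvafpscounter",
        "fakesink sync=false",
    ]
    return " ! ".join(elements)


if __name__ == "__main__":
    # Hypothetical IR model files (.xml) and input video.
    print(build_pipeline("input.mp4", "detector.xml", "classifier.xml"))
```

The printed string can be passed to `gst-launch-1.0`, or to `Gst.parse_launch` from the GStreamer Python bindings, on a machine where DL Streamer is installed.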

We will continue to optimize it further and to support all Intel hardware. Thank you for your contribution in supporting OpenVINO inference via ONNX Runtime in GstInference.
