Hi All,

I successfully converted my Keras model using only two options: `--use_new_frontend` and `--saved_model_dir`.

Loading also succeeds with `read_model` (using the `model` and `weights` parameters to load the .xml and .bin files).

I get an error with `compile_model(model=model, device_name="CPU")`: `RuntimeError: Can not constant fold eltwise node`.

I can't find any documentation or web pages about that error.

==> Any idea how this can be solved?

Versions:

- Python 3.8.10

- tensorflow 2.11.0 (running within docker tensorflow/tensorflow:2.11.0-gpu)

- openvino-dev 2022.3.0

The model uses all of the following: BatchNormalization, Conv1D, Activation (relu and sigmoid), Add, Multiply, Concatenate, squeeze, stack, split, CosineSimilarity, and tf.math (divide, multiply, add, reduce_sum, log, exp).

Hi Steff,

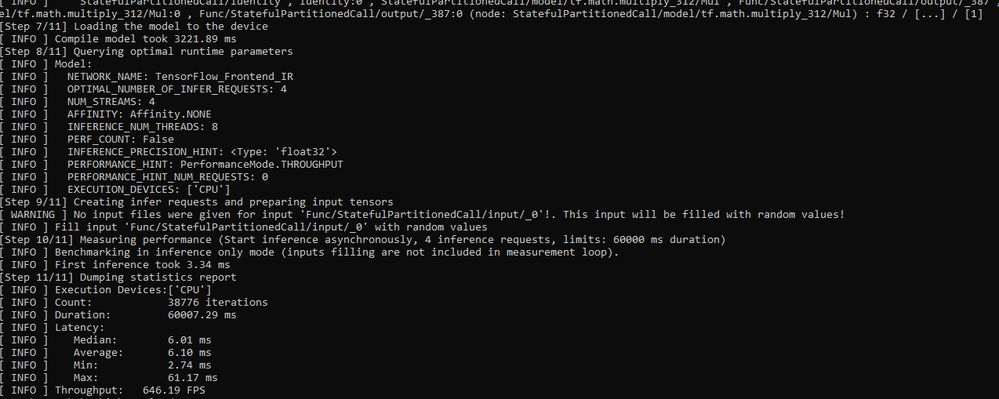

The issue is related to the missing proper support for f64 type of transformation. Is it need f64 really needed in the model or can be replaced with f32? This can be done by changing the following in the model.py:

# set precision to 64 bit

- tf.keras.backend.set_floatx('float64')

+ tf.keras.backend.set_floatx('float32')

The Intermediate Representation (IR) file where f64 is replaced with f32 can be successfully inferred. See below:

Regards,

Aznie

Link Copied

Hi Steff,

Thanks for reaching out.

The Eltwise layer is supported on CPU if the output layout is in the NCDHW data format. You may refer to Supported Layers.

For further testing, can you share the Model Optimizer command you used for the conversion and your model file? Please share the Intermediate Representation (IR), the original model and all necessary files.

You can share it here or privately to my email: noor.aznie.syaarriehaahx.binti.baharuddin@intel.com

Regards,

Aznie

Hi Steff,

The error message appears when constant folding fails. You can omit `--use_new_frontend` from the Model Optimizer command. The TensorFlow Frontend is available as a preview feature starting from 2022.3, which means you can experiment with the `--use_new_frontend` option to get improved conversion time for a limited scope of models.

Can you add `--framework tf` and the input shape when converting? For now, we can only offer suggestions based on the information shared so far. We could investigate properly if we had the original model.
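The suggested conversion command can be assembled programmatically. This is only a minimal sketch: the helper name `build_mo_command`, the paths and the shape are hypothetical placeholders, and only flags already mentioned in this thread (`--framework`, `--input_shape`, `--use_new_frontend`, `--saved_model_dir`, `--output_dir`) are used.

```python
import shlex
from typing import List, Optional, Sequence


def build_mo_command(
    saved_model_dir: str,
    output_dir: str,
    input_shape: Optional[Sequence[int]] = None,
    use_new_frontend: bool = False,
) -> List[str]:
    """Assemble a Model Optimizer command line as a list of arguments."""
    cmd = ["mo", "--framework", "tf"]
    if use_new_frontend:
        cmd.append("--use_new_frontend")
    if input_shape is not None:
        # The shape is passed as a bracketed, comma-separated list, e.g. [1,20,2]
        shape = "[" + ",".join(str(dim) for dim in input_shape) + "]"
        cmd += ["--input_shape", shape]
    cmd += ["--saved_model_dir", saved_model_dir, "--output_dir", output_dir]
    return cmd


# Hypothetical paths and shape; replace them with your own values.
example = build_mo_command("path/to/saved_model", "path/to/ir", input_shape=[1, 20, 2])
print(shlex.join(example))
```

The resulting list can be passed to `subprocess.run` on a machine where `mo` is installed.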

Regards,

Aznie

mo --framework tf --saved_model_dir /usr/src/Python/random_test/model --output_dir /usr/src/Python/random_test/model_openvino

[ ERROR ] Cannot infer shapes or values for node "StatefulPartitionedCall/model/conv1d/Conv1D".

[ ERROR ] index 3 is out of bounds for axis 0 with size 3

[ ERROR ]

[ ERROR ] It can happen due to bug in custom shape infer function <function Convolution.infer at 0x7fd1a7e31550>.

[ ERROR ] Or because the node inputs have incorrect values/shapes.

[ ERROR ] Or because input shapes are incorrect (embedded to the model or passed via --input_shape).

[ ERROR ] Run Model Optimizer with --log_level=DEBUG for more information.

[ ERROR ] Exception occurred during running replacer "REPLACEMENT_ID" (<class 'openvino.tools.mo.middle.PartialInfer.PartialInfer'>): Stopped shape/value propagation at "StatefulPartitionedCall/model/conv1d/Conv1D" node.

For more information please refer to Model Optimizer FAQ, question #38. (https://docs.openvino.ai/latest/openvino_docs_MO_DG_prepare_model_Model_Optimizer_FAQ.html?question=38#question-38)

[ INFO ] You can also try to use new TensorFlow Frontend (preview feature as of 2022.3) by adding `--use_new_frontend` option into Model Optimizer command-line.

Find more information about new TensorFlow Frontend at https://docs.openvino.ai/latest/openvino_docs_MO_DG_TensorFlow_Frontend.html

mo --framework tf --input_shape [1,20,2] --saved_model_dir /usr/src/Python/random_test/model --output_dir /usr/src/Python/random_test/model_openvino

[ ERROR ] Cannot infer shapes or values for node "StatefulPartitionedCall/model/conv1d/Conv1D".

[ ERROR ] index 3 is out of bounds for axis 0 with size 3

[ ERROR ]

[ ERROR ] It can happen due to bug in custom shape infer function <function Convolution.infer at 0x7feafcd33550>.

[ ERROR ] Or because the node inputs have incorrect values/shapes.

[ ERROR ] Or because input shapes are incorrect (embedded to the model or passed via --input_shape).

[ ERROR ] Run Model Optimizer with --log_level=DEBUG for more information.

[ ERROR ] Exception occurred during running replacer "REPLACEMENT_ID" (<class 'openvino.tools.mo.middle.PartialInfer.PartialInfer'>): Stopped shape/value propagation at "StatefulPartitionedCall/model/conv1d/Conv1D" node.

For more information please refer to Model Optimizer FAQ, question #38. (https://docs.openvino.ai/latest/openvino_docs_MO_DG_prepare_model_Model_Optimizer_FAQ.html?question=38#question-38)

[ INFO ] You can also try to use new TensorFlow Frontend (preview feature as of 2022.3) by adding `--use_new_frontend` option into Model Optimizer command-line.

Find more information about new TensorFlow Frontend at https://docs.openvino.ai/latest/openvino_docs_MO_DG_TensorFlow_Frontend.html

mo --use_new_frontend --framework tf --input_shape [1,20,2] --saved_model_dir /usr/src/Python/random_test/model --output_dir /usr/src/Python/random_test/model_openvino

[ INFO ] The model was converted to IR v11, the latest model format that corresponds to the source DL framework input/output format. While IR v11 is backwards compatible with OpenVINO Inference Engine API v1.0, please use API v2.0 (as of 2022.1) to take advantage of the latest improvements in IR v11.

Find more information about API v2.0 and IR v11 at https://docs.openvino.ai/latest/openvino_2_0_transition_guide.html

[ INFO ] IR generated by new TensorFlow Frontend is compatible only with API v2.0. Please make sure to use API v2.0.

Find more information about API v2.0 at https://docs.openvino.ai/latest/openvino_2_0_transition_guide.html

[ SUCCESS ] Generated IR version 11 model.

[ SUCCESS ] XML file: /usr/src/Python/random_test/model_openvino/saved_model.xml

[ SUCCESS ] BIN file: /usr/src/Python/random_test/model_openvino/saved_model.bin

ie = Core()

model = ie.read_model(model=ir_path, weights=ir_path.with_suffix(".bin"))

compiled_model = ie.compile_model(model=model, device_name="CPU")

RuntimeError Traceback (most recent call last)

File /usr/local/lib/python3.8/dist-packages/openvino/runtime/ie_api.py:399, in Core.compile_model(self, model, device_name, config)

393 if device_name is None:

394 return CompiledModel(

395 super().compile_model(model, {} if config is None else config),

396 )

398 return CompiledModel(

399 super().compile_model(model, device_name, {} if config is None else config),

400 )

RuntimeError: Can not constant fold eltwise node

If I can share the model, what do you need? The model as code snippet? Or the .pb files and variables files?
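The load-and-compile steps above can be wrapped into a standalone script that reports the runtime error instead of stopping on it. This is a sketch under assumptions: the helper names `ir_files` and `load_and_compile` are hypothetical, and the .bin file is assumed to sit next to the .xml file with the same base name, as in the Model Optimizer output shown here.

```python
from pathlib import Path

try:
    from openvino.runtime import Core  # OpenVINO API v2.0 (2022.x)
except ImportError:
    # Keeps the file importable on machines without OpenVINO.
    Core = None


def ir_files(xml_path):
    """Return the (.xml, .bin) pair, assuming both share the same base name."""
    xml_path = Path(xml_path)
    return xml_path, xml_path.with_suffix(".bin")


def load_and_compile(xml_path, device_name="CPU"):
    """Read and compile an IR; return (compiled_model, error_message)."""
    if Core is None:
        return None, "openvino is not installed"
    xml_file, bin_file = ir_files(xml_path)
    core = Core()
    try:
        model = core.read_model(model=str(xml_file), weights=str(bin_file))
        compiled = core.compile_model(model=model, device_name=device_name)
        return compiled, None
    except RuntimeError as err:
        # For example: "Can not constant fold eltwise node"
        return None, str(err)
```

Calling `load_and_compile` with the path to `saved_model.xml` returns either the compiled model or the error text, which is convenient when attaching logs to a support request.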

Hi Steff,

Thanks for sharing the testing output.

You can share your original model and any related files for us to duplicate and validate.

Regards,

Aznie

Hi Steff,

The issue is caused by missing support for the f64 type in this transformation. Is f64 really needed in the model, or can it be replaced with f32? The replacement can be done by changing the following in model.py:

# set precision to 64 bit

- tf.keras.backend.set_floatx('float64')

+ tf.keras.backend.set_floatx('float32')

The Intermediate Representation (IR) file in which f64 is replaced with f32 can be inferred successfully.
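To confirm whether a generated IR still contains 64-bit float layers, the .xml file can be scanned with the standard library. This is a rough sketch, not an official tool: it assumes that the IR declares types through an `element_type` attribute on `<data>` and a `precision` attribute on `<port>`, and the sample XML below is hand-written for illustration.

```python
import xml.etree.ElementTree as ET


def find_f64_layers(ir_xml_text):
    """Return names of layers whose data or ports are declared as 64-bit float."""
    root = ET.fromstring(ir_xml_text.strip())
    hits = []
    for layer in root.iter("layer"):
        data = layer.find("data")
        has_f64_data = data is not None and data.get("element_type") == "f64"
        has_f64_port = any(p.get("precision") == "FP64" for p in layer.iter("port"))
        if has_f64_data or has_f64_port:
            hits.append(layer.get("name"))
    return hits


# Hand-written sample, not taken from a real model.
SAMPLE_IR = """
<net name="demo" version="11">
  <layers>
    <layer id="0" name="input" type="Parameter" version="opset1">
      <data shape="1,20,2" element_type="f64"/>
      <output><port id="0" precision="FP64"/></output>
    </layer>
    <layer id="1" name="relu" type="ReLU" version="opset1">
      <input><port id="0" precision="FP32"/></input>
      <output><port id="1" precision="FP32"/></output>
    </layer>
  </layers>
</net>
"""

print(find_f64_layers(SAMPLE_IR))  # ['input']
```

For a real model, read the contents of `saved_model.xml` and pass the text to `find_f64_layers`; an empty list suggests the f32 switch took effect.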

Regards,

Aznie

Thank you, Aznie, for your help.

For the moment the model only works in 64-bit. I will check whether there is a way to fix that.

Hi Steff,

This thread will no longer be monitored since this issue has been resolved. If you need any additional information from Intel, please submit a new question.

Regards,

Aznie
